Computer CPU-GPU shared cache control method and system

A CPU-GPU shared cache technology, applied in memory systems, computing, program control design, etc., which addresses the problems of a complex dynamic adjustment process, wasted system resources, and the inability to adjust in time.


Examples

Embodiment 1

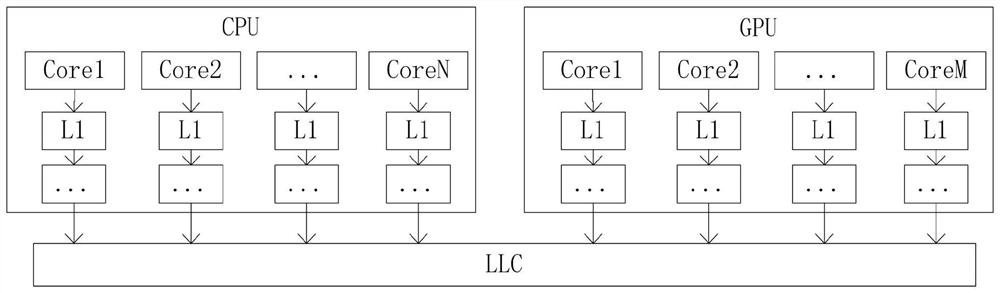

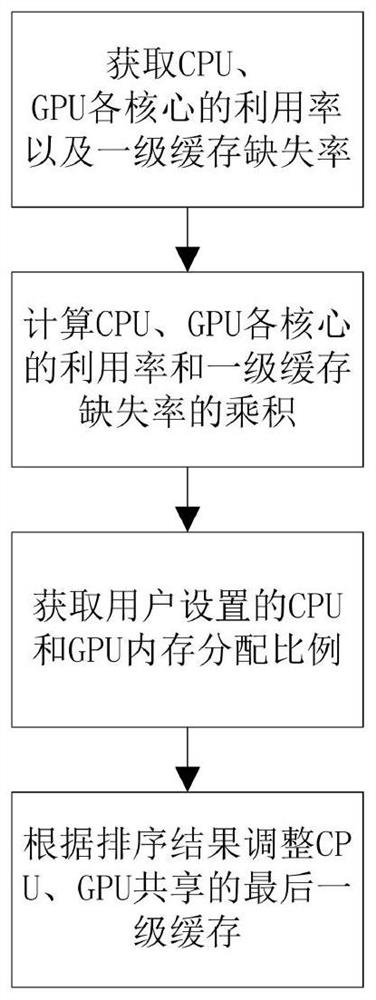

[0036] In one embodiment, the present invention provides a computer CPU-GPU shared cache control method, applied to a CPU-GPU fusion architecture, comprising the following steps:

[0037] Obtain the utilization rate and first-level cache miss rate of each CPU core, and the utilization rate and first-level cache miss rate of each GPU core;

[0038] For each CPU core, calculate the product of its utilization rate and its first-level cache miss rate to obtain $C_n$, $n = 1, \dots, N$; for each GPU core, calculate the product of its utilization rate and its first-level cache miss rate to obtain $G_m$, $m = 1, \dots, M$, where $N$ is the number of CPU cores and $M$ is the number of GPU cores;
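A minimal Python sketch of the product step in [0038], assuming the per-core utilization rates and first-level cache miss rates have already been sampled as fractions in [0, 1]. The function name `core_scores` and the sample readings are hypothetical and do not come from the patent.

```python
from typing import List, Sequence

def core_scores(utilization: Sequence[float], l1_miss_rate: Sequence[float]) -> List[float]:
    """Return the per-core product of utilization rate and first-level cache miss rate."""
    if len(utilization) != len(l1_miss_rate):
        raise ValueError("one utilization rate and one miss rate are required per core")
    return [u * r for u, r in zip(utilization, l1_miss_rate)]

# Hypothetical sample readings for N = 2 CPU cores and M = 3 GPU cores.
cpu_util = [0.5, 0.8]
cpu_miss = [0.1, 0.2]
gpu_util = [0.9, 0.4, 1.0]
gpu_miss = [0.3, 0.5, 0.1]

C = core_scores(cpu_util, cpu_miss)  # C_n, n = 1..N
G = core_scores(gpu_util, gpu_miss)  # G_m, m = 1..M
```

A core scores high only when it is both busy and missing often in its first-level cache, which is presumably why the method multiplies the two measurements rather than using either alone.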

[0039] Obtain the CPU-to-GPU memory allocation ratio set by the user; from this ratio and $C_n$, $G_m$, obtain $C'_n$ and $G'_m$, and sort the $C'_n$ and $G'_m$ values;
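This excerpt does not state how the user ratio is combined with $C_n$ and $G_m$, nor which sort order is used. The sketch below assumes, purely for illustration, that each score is scaled by its side's normalised share of the ratio and that all CPU and GPU cores are ranked together in descending order. `weight_and_sort`, `WeightedCore`, `cpu_part` and `gpu_part` are hypothetical names.

```python
from typing import List, NamedTuple, Sequence

class WeightedCore(NamedTuple):
    kind: str     # "cpu" or "gpu"
    index: int    # 1-based core number (n for CPU, m for GPU)
    score: float  # weighted score C'_n or G'_m

def weight_and_sort(C: Sequence[float], G: Sequence[float],
                    cpu_part: float, gpu_part: float) -> List[WeightedCore]:
    """Weight C_n and G_m by a user ratio cpu_part:gpu_part and rank all cores.

    ASSUMPTION: C'_n = C_n * cpu_share and G'_m = G_m * gpu_share, where the
    shares are the normalised ratio parts; the source does not give the formula.
    """
    total = cpu_part + gpu_part
    if cpu_part < 0 or gpu_part < 0 or total <= 0:
        raise ValueError("ratio parts must be non-negative with a positive sum")
    cpu_share, gpu_share = cpu_part / total, gpu_part / total
    cores = [WeightedCore("cpu", n, c * cpu_share) for n, c in enumerate(C, start=1)]
    cores += [WeightedCore("gpu", m, g * gpu_share) for m, g in enumerate(G, start=1)]
    # ASSUMPTION: one combined ranking, highest weighted score first.
    return sorted(cores, key=lambda core: core.score, reverse=True)
```

With a 1:1 ratio, C = [0.05, 0.16] and G = [0.27, 0.2, 0.1] rank as GPU core 1, GPU core 2, CPU core 2, GPU core 3, CPU core 1; raising the ratio to 3:1 in favour of the CPU moves CPU core 2 to the top.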

[0040] Adjust the last-level cache shared by the CPU and GPU according to the sorting result.
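How the shared last-level cache is adjusted is not specified in this excerpt. One hypothetical policy, shown only as an illustration, partitions the cache's ways among the ranked cores in proportion to their scores, guarantees each core one way, and rounds with the largest-remainder method. `allocate_llc_ways`, `total_ways` and the string core identifiers are assumptions, not part of the patent.

```python
from typing import Dict, List, Sequence, Tuple

def allocate_llc_ways(ranked: Sequence[Tuple[str, float]], total_ways: int) -> Dict[str, int]:
    """Split `total_ways` shared-LLC ways among cores in proportion to their scores.

    `ranked` holds (core_id, score) pairs sorted highest score first.
    HYPOTHETICAL policy: one guaranteed way per core, the rest shared by score.
    """
    if not ranked or total_ways < len(ranked):
        raise ValueError("need at least one core and at least one way per core")
    spare = total_ways - len(ranked)  # ways left after the guaranteed minimum
    total_score = sum(score for _, score in ranked)
    ways: Dict[str, int] = {}
    fractions: List[float] = []
    for core_id, score in ranked:
        exact = spare * score / total_score if total_score > 0 else 0.0
        ways[core_id] = 1 + int(exact)  # guaranteed way plus whole-number share
        fractions.append(exact - int(exact))
    # Largest-remainder rounding: leftover ways go to the biggest fractional
    # parts; the stable sort breaks ties in favour of the higher-ranked core.
    order = sorted(range(len(ranked)), key=lambda i: fractions[i], reverse=True)
    leftover = total_ways - sum(ways.values())
    for k in range(leftover):
        ways[ranked[order[k % len(order)]][0]] += 1
    return ways
```

For example, ranking [("gpu1", 0.135), ("gpu2", 0.1), ("cpu2", 0.08), ("gpu3", 0.05), ("cpu1", 0.025)] over 16 ways gives gpu1 5, gpu2 4, cpu2 3, gpu3 2 and cpu1 2. The guaranteed minimum keeps a low-ranked core from being starved entirely, at the cost of slightly diluting the share of the highest-ranked cores.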

...

Embodiment 2

[0065] In another embodiment, the present invention also provides a computer CPU-GPU shared cache control system, applied to a CPU-GPU fusion architecture, wherein the system comprises the following modules:

[0066] The first obtaining module is used to obtain the utilization rate and first-level cache miss rate of each CPU core, and the utilization rate and first-level cache miss rate of each GPU core;

[0067] The calculation module is used to calculate, for each CPU core, the product of its utilization rate and first-level cache miss rate to obtain $C_n$, $n = 1, \dots, N$, and, for each GPU core, the product of its utilization rate and first-level cache miss rate to obtain $G_m$, $m = 1, \dots, M$, where $N$ is the number of CPU cores and $M$ is the number of GPU cores;

[0068] The second obtaining module is used to obtain the CPU-to-GPU memory allocation ratio set by the user and, according to the ratio and $C_n$, $G_m$, obtain $C'_n$,...

Embodiment 3

[0075] In addition, in another embodiment, the present invention also provides a computer-readable storage medium for storing computer program instructions, characterized in that, when the computer program instructions are executed by a processor, the method described in Embodiment 1 is implemented.
