Federated learning data processing system based on gradient compression

A federated learning and gradient compression technique, applied in the computer field, that can solve problems such as high time consumption, long total model training time, and low model training efficiency.

Embodiment Construction

[0017] To further explain the technical means that the present invention adopts to achieve its intended purpose, and the effects of those means, a specific implementation of the gradient compression-based federated learning data processing system proposed by the present invention, together with its effects, is described in detail below with reference to the accompanying drawings and preferred embodiments.

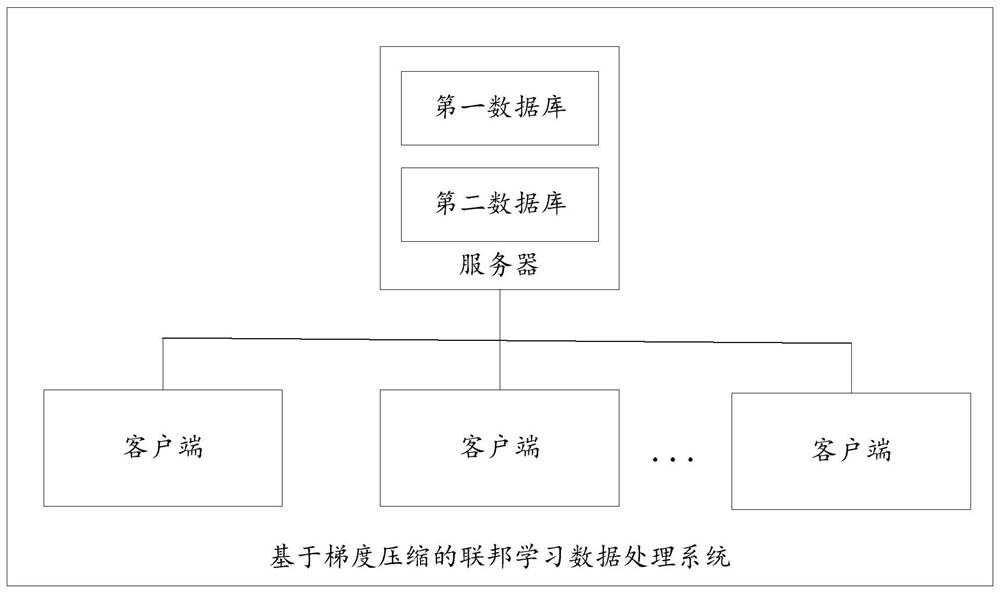

[0018] An embodiment of the present invention provides a federated learning data processing system based on gradient compression. As shown in Figure 1, the system includes a server, M clients, a processor, and a memory storing a computer program. A first database and a second database are stored in the server. The fields of the first database include the client id and the latest round of federated aggregation in which the client participated; the fields of the second database include the round of federated aggregation and the global mo...
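The two server-side databases described in [0018] can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not the patented implementation: the class names `FirstDatabase` and `SecondDatabase`, the method names, and the in-memory dictionaries are all hypothetical. The second field of the second database is truncated in the text above, so it is kept as an opaque `payload` rather than guessed at. Keeping only the highest round number per client is likewise an assumption about what "latest round" means.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class FirstDatabase:
    """Hypothetical stand-in: client id -> latest aggregation round joined."""
    latest_round: Dict[str, int] = field(default_factory=dict)

    def record_participation(self, client_id: str, round_no: int) -> None:
        # Assumption: "latest" means the highest round number seen so far.
        previous = self.latest_round.get(client_id, -1)
        self.latest_round[client_id] = max(previous, round_no)


@dataclass
class SecondDatabase:
    """Hypothetical stand-in: aggregation round -> per-round record.

    The source text is cut off after the round field, so the stored value
    is treated as an opaque payload here.
    """
    by_round: Dict[int, Any] = field(default_factory=dict)

    def store(self, round_no: int, payload: Any) -> None:
        self.by_round[round_no] = payload


if __name__ == "__main__":
    first = FirstDatabase()
    first.record_participation("client-1", 3)
    first.record_participation("client-1", 2)  # older round, ignored
    print(first.latest_round)

    second = SecondDatabase()
    second.store(3, "opaque per-round record")
    print(second.by_round)
```

Keying the first structure by client id and the second by round number mirrors the field lists in the paragraph. A real deployment would presumably use persistent storage rather than dictionaries.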
