Deep learning-based lane line detection and vehicle transverse positioning method

A technology for lane line detection and lateral positioning, applied in the fields of neural network learning methods, character and pattern recognition, and instruments. It addresses the poor robustness, high computational demand, and difficulty of meeting the real-time requirements of automatic driving found in existing methods, and reduces the number of computational parameters while improving speed.


Embodiment Construction

[0038] The technical scheme of the present invention is described in further detail below with reference to the accompanying drawings.

[0039] This invention may be embodied in many different forms and should not be construed as limited to the embodiments set forth herein. Rather, these embodiments are provided so that this disclosure will be thorough and complete, and will fully convey the scope of the invention to those skilled in the art. In the drawings, components are exaggerated for clarity.

[0040] The data used in the experiments of the present invention come from the TuSimple dataset, which contains 6408 labeled images. The dataset labels each lane line as a series of points: the image height is divided at equal intervals to generate a shared set of ordinate (y) values, and the abscissa (x) of each lane line is then recorded at each of these ordinate values.
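To make the point-based labeling concrete, the following minimal sketch parses one annotation line and converts it into (x, y) points per lane. It assumes the public TuSimple release format, in which each line of the label file is a JSON object with `raw_file`, `h_samples` (the shared, equally spaced row coordinates) and `lanes` (one list of x values per lane, with -2 marking rows where that lane is absent). The sample values and the helper name `parse_tusimple_line` are hypothetical and chosen only for illustration; this is not the patent's own preprocessing code.

```python
import json

# Hypothetical annotation line in the TuSimple format (shortened; real labels
# contain many more h_samples per image).
SAMPLE_LINE = json.dumps({
    "raw_file": "clips/example/20.jpg",  # hypothetical path
    "h_samples": [240, 250, 260, 270],   # equally spaced y (row) coordinates
    "lanes": [
        [-2, 632, 625, 617],             # -2: lane not visible at y = 240
        [719, 734, 748, 762],
    ],
})


def parse_tusimple_line(line):
    """Return a list of lanes, each a list of (x, y) points.

    Every lane shares the same y values (h_samples); each lane stores one x
    per y. Negative x values mark rows where the lane is absent and are dropped.
    """
    record = json.loads(line)
    ys = record["h_samples"]
    lanes = []
    for xs in record["lanes"]:
        # Pair each abscissa with its ordinate and keep only valid points.
        points = [(x, y) for x, y in zip(xs, ys) if x >= 0]
        lanes.append(points)
    return lanes


if __name__ == "__main__":
    for i, pts in enumerate(parse_tusimple_line(SAMPLE_LINE)):
        print(i, pts)
```

Because all lanes share the same row coordinates, a lane can be stored compactly as a vector of x values, which is why this format suits methods that predict one horizontal position per sampled row.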

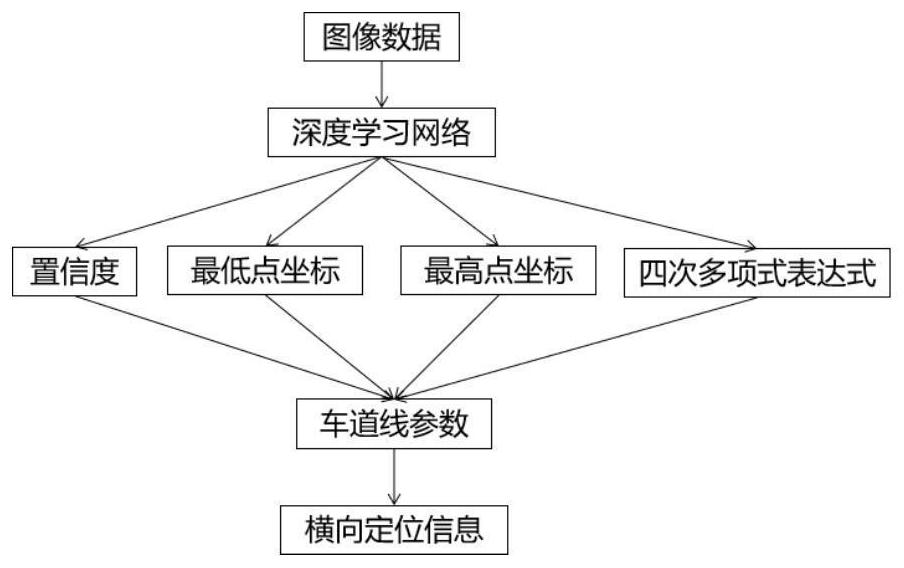

[0041] As shown in Figure 1, the present invention discloses a method ...
