Pedestrian flow monitoring method based on pedestrian detection and tracking

A pedestrian detection and crowd flow monitoring technology, applied in character and pattern recognition, image data processing, instruments, and related fields. It addresses the problems of low detection accuracy and slow detection speed, and achieves high detection accuracy, fast detection speed, and fast pedestrian tracking.

Embodiment Construction

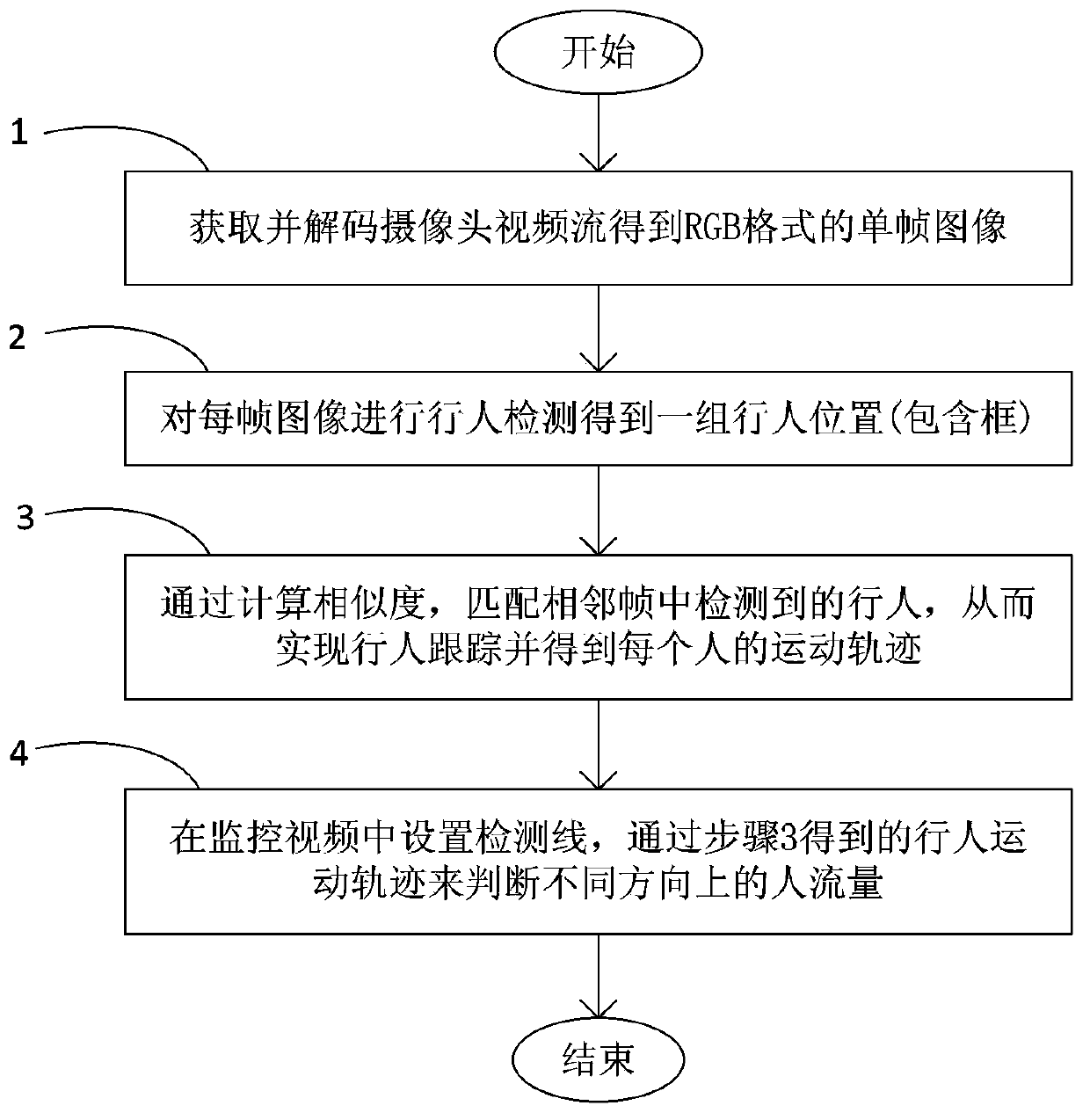

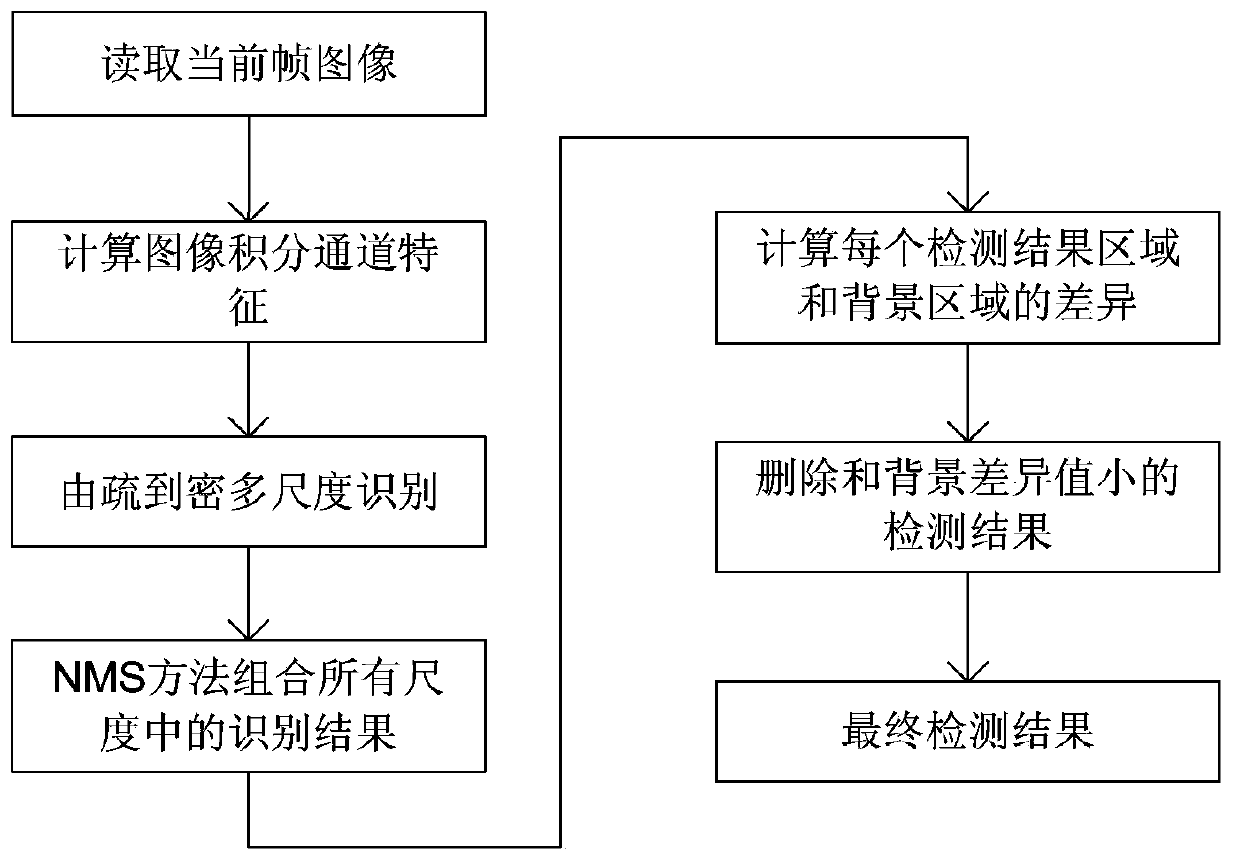

[0035] The purpose and effects of the present invention will become more apparent by describing the present invention in detail below in conjunction with the accompanying drawings.

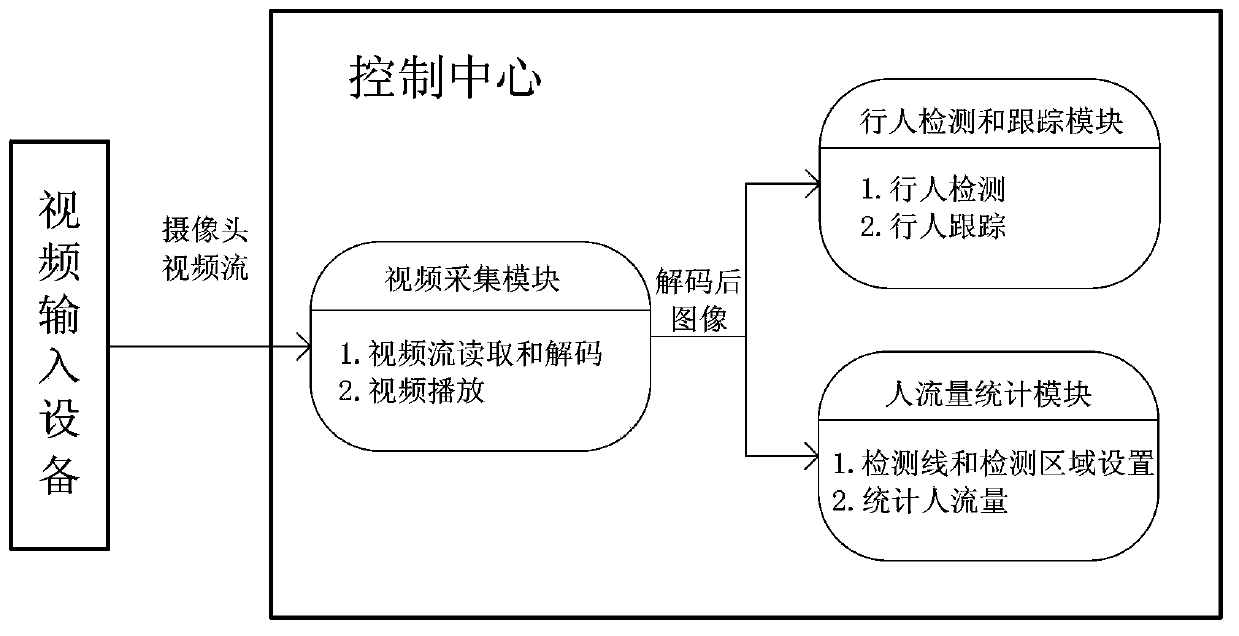

[0036] The pedestrian flow monitoring method of the present invention, based on pedestrian detection and tracking, is implemented on the flow monitoring system shown in Figure 2. The flow monitoring system includes a video input device and a control center, which are connected through a LAN port.

[0037] Video input device: The system requires one or more video input devices. Each video input device can be a surveillance camera or a conventional camera. Lower than RGB888. The camera is mounted three to five meters above the ground, with a shooting angle of 30 to 60 degrees obliquely downward. The placement position and shooting angle of the camera must ensure that most people's whole bodies appear in the shooting area, and there is ...
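The placement constraints stated above (mounting height of three to five meters, downward shooting angle of 30 to 60 degrees) could be checked at installation time with a small helper. The following is only an illustrative sketch: the class name `CameraPlacement`, its fields, and the choice to treat both ranges as inclusive are assumptions made for this example and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import List

# Ranges taken from paragraph [0037]; treating them as inclusive is an assumption.
MIN_HEIGHT_M, MAX_HEIGHT_M = 3.0, 5.0
MIN_TILT_DEG, MAX_TILT_DEG = 30.0, 60.0


@dataclass(frozen=True)
class CameraPlacement:
    """Hypothetical record of how one video input device is mounted."""
    height_m: float   # mounting height above the ground, in meters
    tilt_deg: float   # shooting angle, in degrees obliquely downward

    def violations(self) -> List[str]:
        """Return a list of human-readable problems; empty if placement is within range."""
        problems = []
        if not MIN_HEIGHT_M <= self.height_m <= MAX_HEIGHT_M:
            problems.append(
                f"height {self.height_m} m is outside {MIN_HEIGHT_M}-{MAX_HEIGHT_M} m"
            )
        if not MIN_TILT_DEG <= self.tilt_deg <= MAX_TILT_DEG:
            problems.append(
                f"tilt {self.tilt_deg} deg is outside {MIN_TILT_DEG}-{MAX_TILT_DEG} deg"
            )
        return problems


if __name__ == "__main__":
    for cam in (CameraPlacement(4.0, 45.0), CameraPlacement(2.0, 70.0)):
        issues = cam.violations()
        print(cam, "OK" if not issues else issues)
```

A check like this covers only the numeric ranges; whether most pedestrians' whole bodies are visible in the shooting area still has to be verified on the actual camera image.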
