Visual inertial navigation method, device, apparatus and computer-readable storage medium

A visual inertial navigation technology based on residual (difference) calculation, applied in the field of inertial navigation, which addresses problems such as low computational efficiency and poor calculation accuracy and stability.

Examples

Embodiment 1

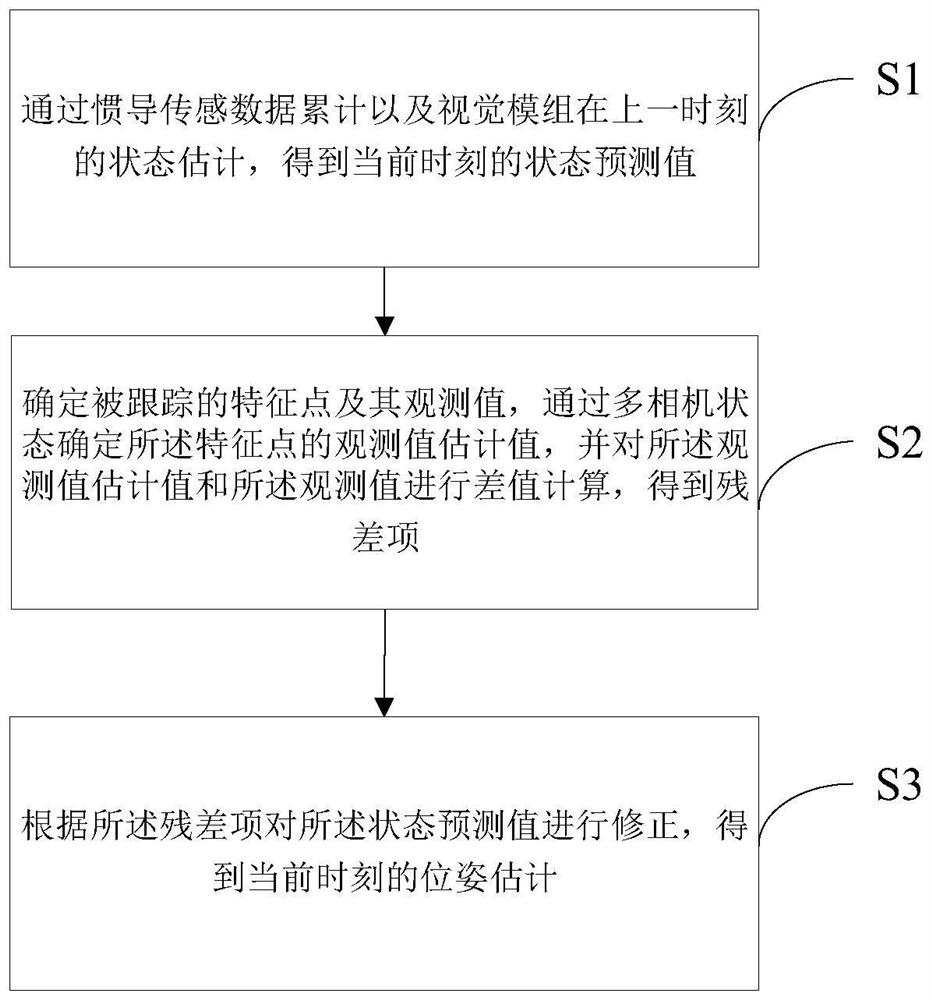

[0031] Figure 1 is the first flowchart of the visual inertial navigation method provided by an embodiment of the present invention. This embodiment proposes a visual inertial navigation method, which includes:

[0032] S1. Obtain the predicted state at the current moment by accumulating the inertial navigation sensor data and combining it with the vision module's state estimate at the previous moment;

[0033] S2. Determine the tracked feature points and their observed values, compute the estimated observed values of these feature points from the multi-camera state, and calculate the difference between the estimated and the actual observed values to obtain the residual term;

[0034] S3. Correct the predicted state according to the residual term to obtain the pose estimate at the current moment.
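Step S2 does not spell out the observation model. A common choice, assumed here for illustration only, is a pinhole camera: the feature's 3D world position is transformed into each camera frame using that camera's pose and projected to pixel coordinates, and the residual is the observed pixel minus the projected (estimated) one. In this sketch the "multi-camera state" is modelled simply as a list of camera poses that all observed the same feature; the names `project` and `reprojection_residuals` and the intrinsics `fx, fy, cx, cy` are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pose = Tuple[Sequence[Sequence[float]], Vec3]  # (R_cw as 3x3 rows, t_cw)


def project(R_cw, t_cw, p_w, fx, fy, cx, cy) -> Tuple[float, float]:
    """Pinhole projection of world point p_w into a camera whose pose maps
    world coordinates to camera coordinates as p_c = R_cw * p_w + t_cw."""
    p_c = [sum(R_cw[i][j] * p_w[j] for j in range(3)) + t_cw[i] for i in range(3)]
    if p_c[2] <= 0.0:
        raise ValueError("point lies behind the camera")
    return fx * p_c[0] / p_c[2] + cx, fy * p_c[1] / p_c[2] + cy


def reprojection_residuals(cameras: Sequence[Pose], p_w: Vec3,
                           observations: Sequence[Tuple[float, float]],
                           intrinsics: Tuple[float, float, float, float]
                           ) -> List[Tuple[float, float]]:
    """Residual r_k = z_k - h(pose_k, p_w) for each camera k that observed the feature."""
    residuals = []
    for (R_cw, t_cw), (u, v) in zip(cameras, observations):
        u_hat, v_hat = project(R_cw, t_cw, p_w, *intrinsics)  # estimated observation
        residuals.append((u - u_hat, v - v_hat))               # observed minus estimated
    return residuals
```

For example, with identity rotations, intrinsics (500, 500, 320, 240), a feature at (0.2, 0.1, 2.0), one camera at the origin and a second with t_cw = (-0.1, 0, 0), observations (371, 264) and (345.5, 265) give residuals of (1, -1) and (0.5, 0) pixels.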

[0035] In this embodiment, the pose of the vision module is first predicted from the previous moment to the current state b...
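The text does not name the filter behind the predict-then-correct cycle of S1 to S3. Purely as an illustration, the following assumes a linear Kalman filter on a single scalar state (position along one axis): the prediction propagates the previous estimate with an IMU-derived velocity, and the correction applies a residual from a vision measurement. Practical visual inertial systems usually run an extended Kalman filter over the full pose; `predict`, `correct`, `q` and `r` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ScalarState:
    x: float  # estimated position (m)
    p: float  # estimate variance (m^2)


def predict(s: ScalarState, velocity: float, dt: float, q: float) -> ScalarState:
    """S1 analogue: propagate the previous estimate with IMU-derived motion;
    the variance grows by the process noise q."""
    return ScalarState(x=s.x + velocity * dt, p=s.p + q)


def correct(s: ScalarState, z: float, r: float) -> ScalarState:
    """S2/S3 analogue: residual = measurement - predicted measurement,
    then a Kalman update weighted by measurement noise r."""
    residual = z - s.x          # measurement model h(x) = x
    k = s.p / (s.p + r)         # Kalman gain
    return ScalarState(x=s.x + k * residual, p=(1.0 - k) * s.p)
```

Starting from x = 0 with variance 1, predicting one second at 1 m/s with q = 1 gives x = 1 and p = 2; correcting with a vision measurement z = 1.5 of variance r = 2 gives a gain of 0.5, so x = 1.25 and p = 1.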

Embodiment 2

[0040] Figure 2 is the second flowchart of the visual inertial navigation method provided by an embodiment of the present invention. Based on the above embodiment, obtaining the predicted state at the current moment by accumulating inertial navigation sensor data and combining it with the vision module's state estimate at the previous moment includes:

[0041] S11. Over the period from the previous moment to the current moment, accumulate the acceleration and angular velocity measurements in the inertial navigation sensor data and, combining them with the vision module's state estimate at the previous moment, obtain the predicted state.
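As a simplified illustration of S11, assuming planar (2D) motion, a single yaw-rate gyroscope axis, body-frame accelerometer readings and plain Euler integration (none of which the text specifies), accumulating the IMU samples between two frames onto the previous state estimate might look like this. `PlanarState` and `propagate` are hypothetical names, and gravity compensation and sensor biases are deliberately ignored.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class PlanarState:
    x: float
    y: float
    vx: float
    vy: float
    yaw: float  # heading in radians


def propagate(state: PlanarState,
              samples: Iterable[Tuple[float, float, float, float]]) -> PlanarState:
    """Euler-integrate IMU samples (dt, ax_body, ay_body, yaw_rate) starting
    from the previous estimate; returns the predicted state at the current frame."""
    x, y, vx, vy, yaw = state.x, state.y, state.vx, state.vy, state.yaw
    for dt, ax_b, ay_b, w in samples:
        # rotate body-frame acceleration into the world frame using the current yaw
        c, s = math.cos(yaw), math.sin(yaw)
        ax_w = c * ax_b - s * ay_b
        ay_w = s * ax_b + c * ay_b
        # explicit Euler: position uses the velocity at the start of the step
        x += vx * dt
        y += vy * dt
        vx += ax_w * dt
        vy += ay_w * dt
        yaw += w * dt
    return PlanarState(x, y, vx, vy, yaw)
```

Ten samples of 1 m/s² forward acceleration at dt = 0.1 s, starting at rest, yield vx ≈ 1 m/s and x ≈ 0.45 m; a higher-order integrator (midpoint or IMU preintegration) would reduce the Euler discretisation error.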

[0042] In this embodiment, when the required RGB or grayscale image, together with the depth image, is acquired at the preset image acquisition frequency, since the inertial navigation component acquires sensor data at a high frequency...
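Because the IMU samples faster than the camera, several IMU readings fall between two consecutive images. One way to pick them out of a time-sorted buffer, assumed here for illustration, is a binary search on timestamps; the function name `imu_between` and the half-open interval (t_prev, t_curr] are choices of this sketch, not details from the patent.

```python
from bisect import bisect_right
from typing import List, Sequence


def imu_between(timestamps: Sequence[float], readings: Sequence,
                t_prev: float, t_curr: float) -> List:
    """Return the readings whose timestamp t satisfies t_prev < t <= t_curr.
    `timestamps` must be sorted ascending and aligned index-for-index with `readings`."""
    lo = bisect_right(timestamps, t_prev)  # first index with t > t_prev
    hi = bisect_right(timestamps, t_curr)  # first index with t > t_curr
    return list(readings[lo:hi])
```

With illustrative rates of a 200 Hz IMU (5 ms spacing) and a 20 Hz camera, each inter-frame window of 50 ms returns ten samples, which would then be accumulated as in S11. Using integer timestamps (for example milliseconds or nanoseconds) avoids floating-point ambiguity at the window boundaries.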

Embodiment 3

[0053] Figure 3 is the third flowchart of the visual inertial navigation method provided by an embodiment of the present invention. Based on the above embodiments, determining the tracked feature points and their observed values, computing the estimated observed values of the feature points from the multi-camera state, and calculating the difference between the estimated and actual observed values to obtain the residual includes:

[0054] S21. Perform feature extraction and matching on the RGB or grayscale image acquired by the vision module to obtain the tracked feature points and their observed values.

[0055] In this embodiment, after initialization is completed, the corresponding RGB or grayscale image is first obtained through the vision module; then, according to the current positioning requirements and the corresponding parameter configuration, the relevant feature extraction and matching operations are perfo...
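The text does not state which feature type or matcher S21 uses. Purely as an example, if binary descriptors were used (ORB, for instance, produces 256-bit binary descriptors), brute-force matching by Hamming distance combined with Lowe's ratio test could look like the following. Descriptors are represented as Python integers, and `match_descriptors` and `ratio` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(prev: Sequence[int], curr: Sequence[int],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match each descriptor in `prev` to its nearest neighbour in `curr`,
    keeping the pair only if it is clearly better than the second-nearest."""
    matches: List[Tuple[int, int]] = []
    if len(curr) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(prev):
        dists = sorted((hamming(d, c), j) for j, c in enumerate(curr))
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches
```

The ratio test discards ambiguous matches, such as repetitive texture where two candidates are almost equally close, which would otherwise feed outlier observations into the residual computation.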
