3D hand-eye calibration method and device for mobile robot

A hand-eye calibration technology for mobile robots, applied in fields such as instruments, non-electric variable control, and two-dimensional position/course control. It addresses cases where existing techniques cannot be applied, suppresses amplification, and improves calibration accuracy and stability.

Examples

Embodiment approach

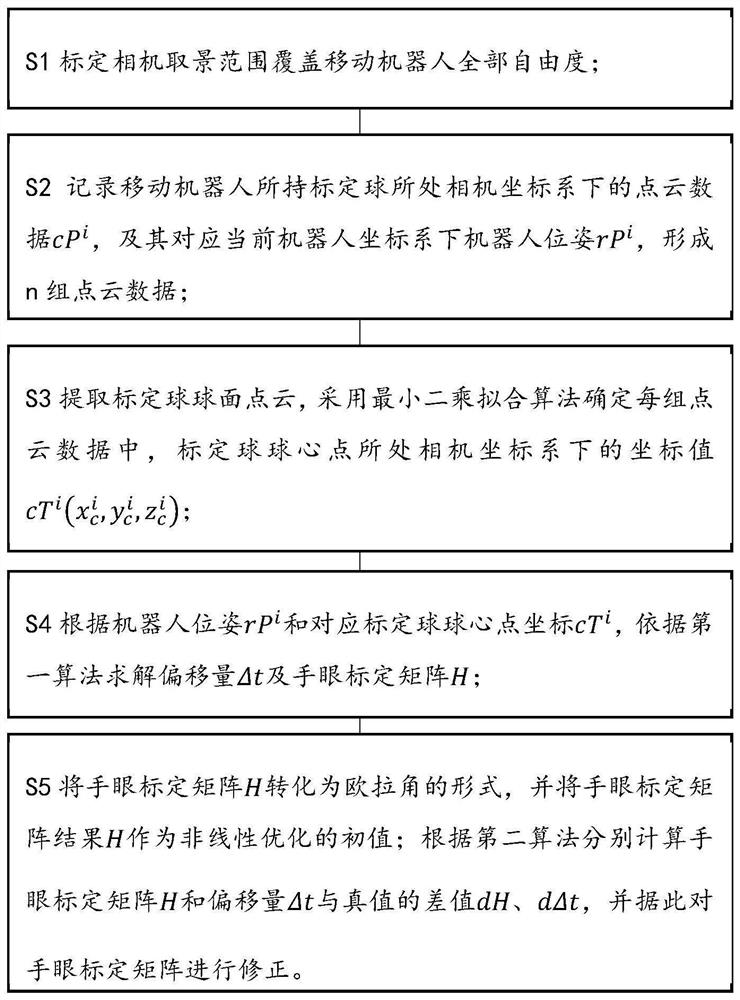

[0079] As shown in Figures 1 and 2, another aspect of the present invention provides a 3D hand-eye calibration method for a mobile robot. In a preferred embodiment, the steps include:

[0080] S1: Perform calibration with the camera's field of view covering all degrees of freedom of the mobile robot. In this embodiment, the calibration step includes:

[0081] 1) Fix the calibration ball to the robot actuator so that the ball cannot shift relative to the robot during calibration.

[0082] 2) Move the robot into the field of view of the 3D camera so that the 3D camera can capture the calibration ball.

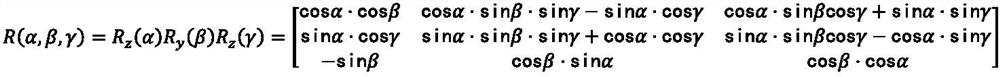

[0083] 3) Move the robot within the field of view of the 3D camera and, at each movement, record the robot's pose information rP in the robot coordinate system, including the position and the attitude rR_i (R_x, R_y, R_z). At the same time, use the 3D camera to collect the point cloud data cP of the calibration...
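The source text is cut off at this point, so the following is only an illustrative sketch under stated assumptions: that the point cloud cP samples the surface of the calibration ball, and that the quantity needed per robot pose is the ball center in the camera frame. The function name `fit_sphere_center` is hypothetical, and the algebraic least-squares sphere fit shown here is a common generic technique, not necessarily the one used in the patent.

```python
import numpy as np

def fit_sphere_center(points):
    """Algebraic least-squares sphere fit (an assumed, generic technique).

    Uses |p - c|^2 = r^2  <=>  2 p.c + (r^2 - |c|^2) = |p|^2,
    which is linear in the unknowns c (center) and d = r^2 - |c|^2.
    Returns (center, radius).
    """
    pts = np.asarray(points, dtype=float)           # shape (n, 3)
    A = np.hstack([2.0 * pts, np.ones((len(pts), 1))])
    b = np.sum(pts ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = np.sqrt(sol[3] + center @ center)
    return center, radius
```

A 3D camera typically sees only the part of the ball facing it; the algebraic fit still works on such a partial cap, although with noisy data a robust or geometric refinement may be preferable.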

Experiment example

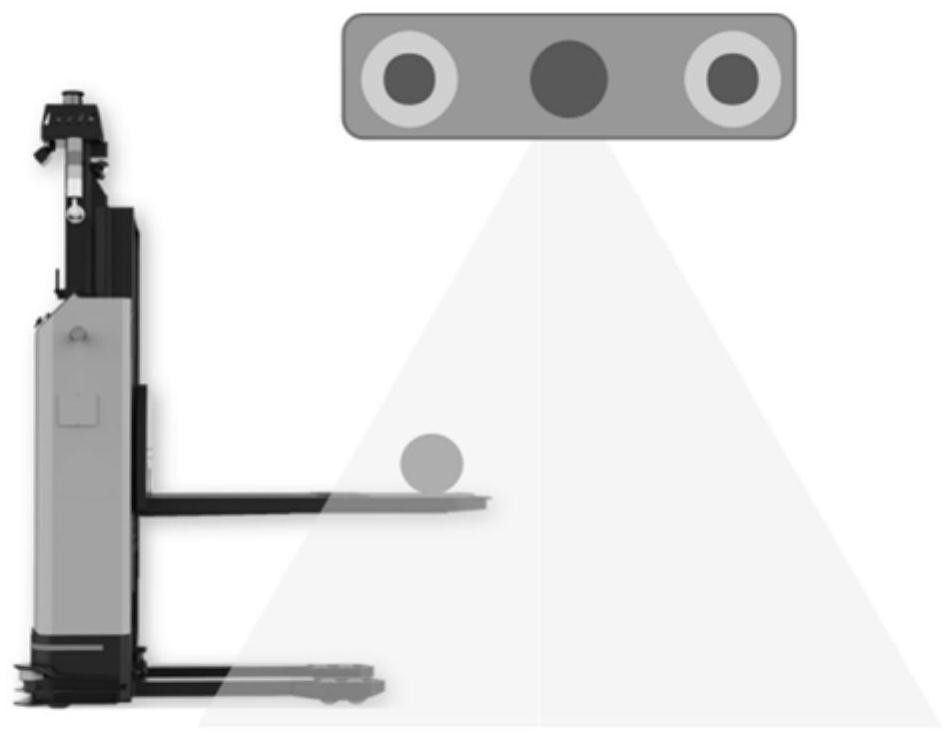

[0125] To describe the solution of the present invention more concretely, the implementation of the 3D hand-eye calibration of the mobile robot is described in detail below with reference to the accompanying drawings, taking an AGV forklift and a 3D camera as an example.

[0126] Without loss of generality, compared with a robotic arm, the AGV forklift can only translate along the X, Y, and Z directions and rotate about the Z axis. Walking through the application of this method on the AGV forklift therefore better illustrates that the method has strong versatility.
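As a minimal sketch of what this four-degree-of-freedom pose looks like numerically, the snippet below builds a 4x4 homogeneous transform from an X, Y, Z translation and a rotation about the Z axis. The function name `agv_pose_to_matrix`, the parameter name `yaw`, and the use of radians are assumptions made for illustration; the patent does not specify them.

```python
import numpy as np

def agv_pose_to_matrix(x, y, z, yaw):
    """Homogeneous transform for a pose with translation (x, y, z)
    and a rotation of `yaw` radians about the Z axis (assumed convention)."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0],
                 [s,  c, 0.0],
                 [0.0, 0.0, 1.0]]
    T[:3, 3] = [x, y, z]
    return T

# Example: a point 1 m ahead of the vehicle origin, with the vehicle
# at (1, 2, 0.5) and turned 90 degrees about Z.
point_in_base = agv_pose_to_matrix(1.0, 2.0, 0.5, np.pi / 2) @ np.array([1.0, 0.0, 0.0, 1.0])
```

Because the rotation block always has the form above, the Z axis of the vehicle frame never tilts, which is the restriction that distinguishes this case from a six-degree-of-freedom arm.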

[0127] It is also worth mentioning that the calibration method in this case abandons the traditional two-step algorithm, which first solves the offset between the calibration ball and the robot and then solves the hand-eye calibration matrix, and uses a one-step solution instead. Solve the calibration ball together with the robot's offse...
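The paragraph above is truncated, so the patent's actual one-step formulation is not available here. The sketch below only illustrates the general idea of estimating the hand-eye transform and the ball offset jointly, under assumptions that are not from the source: the camera is fixed outside the robot, each measurement satisfies R_i p + t_i = R_x c_i + t_x (with R_i, t_i the robot pose, c_i the ball center in the camera frame, p the ball offset on the robot, and R_x, t_x the camera-to-robot-base transform), and the system is solved linearly and then projected onto a rotation. The function name `solve_hand_eye_one_step` is hypothetical.

```python
import numpy as np

def solve_hand_eye_one_step(R_robot, t_robot, c_cam):
    """Jointly estimate (R_x, t_x, p) from R_i p + t_i = R_x c_i + t_x.

    Assumed model and solver, for illustration only.
    R_robot: (n, 3, 3), t_robot: (n, 3), c_cam: (n, 3).
    """
    R_robot = np.asarray(R_robot, dtype=float)
    t_robot = np.asarray(t_robot, dtype=float)
    c_cam = np.asarray(c_cam, dtype=float)
    n = len(c_cam)
    I3 = np.eye(3)

    # Linear system in u = [vec(R_x) row-major, t_x, p]:
    #   kron(I3, c_i^T) vec(R_x) + t_x - R_i p = t_i
    A = np.zeros((3 * n, 15))
    for i in range(n):
        rows = slice(3 * i, 3 * i + 3)
        A[rows, 0:9] = np.kron(I3, c_cam[i][None, :])
        A[rows, 9:12] = I3
        A[rows, 12:15] = -R_robot[i]
    u, *_ = np.linalg.lstsq(A, t_robot.reshape(-1), rcond=None)

    # Project the unconstrained 3x3 block onto the nearest rotation matrix.
    U, _, Vt = np.linalg.svd(u[:9].reshape(3, 3))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    R_x = U @ D @ Vt

    # Re-solve t_x and p with R_x fixed: t_x - R_i p = t_i - R_x c_i
    B = np.zeros((3 * n, 6))
    for i in range(n):
        rows = slice(3 * i, 3 * i + 3)
        B[rows, 0:3] = I3
        B[rows, 3:6] = -R_robot[i]
    d = (t_robot - c_cam @ R_x.T).reshape(-1)
    v, *_ = np.linalg.lstsq(B, d, rcond=None)
    return R_x, v[:3], v[3:]
```

Under this assumed model, when every R_i is a pure rotation about Z (as for the AGV forklift), the Z components of t_x and p enter the equations only through their difference, so the data determine t_x[2] - p[2] but not each value separately; `lstsq` then returns the minimum-norm split. Recovering R_x also requires the robot positions to vary along all three axes and at least two distinct rotation angles.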
