Driver intention recognition method considering human-vehicle-road characteristics

A driver intention recognition technology that takes driver input parameters, vehicle parameters and external-condition (road and scene) input parameters into account, addressing the poor applicability and low reliability of existing methods.



Embodiment Construction

[0038] Detailed description of the embodiments

[0039] The technical solution of the present invention is described in detail below with reference to Figures 1-6.

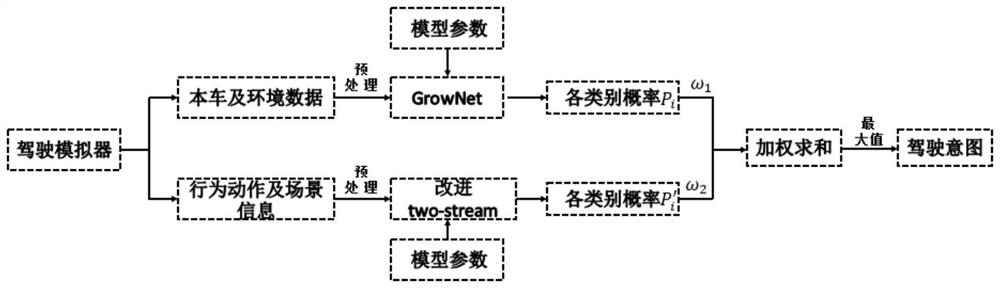

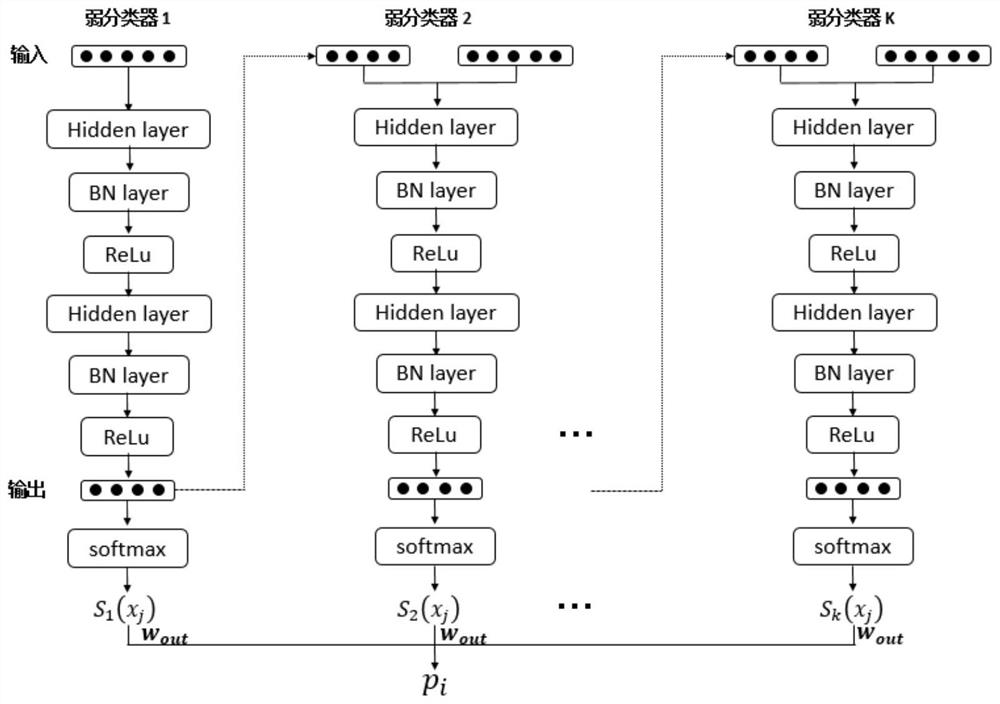

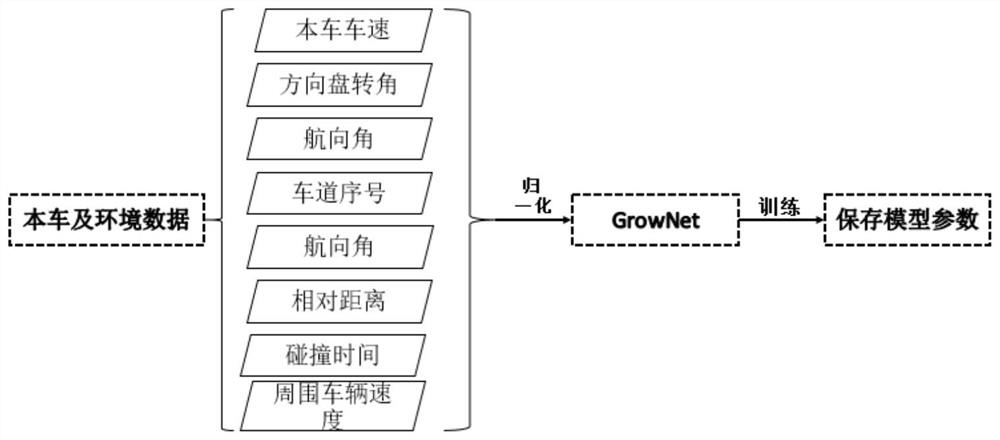

[0040] As shown in Figure 1, this embodiment provides a driver intention recognition method that considers human-vehicle-road characteristics, comprising the following steps:

[0041] Step 1. Obtain, from the driving simulator recordings, data on the vehicle and its surrounding vehicles, the driver's behavior, and the scene information outside the cab.
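Because Step 1 combines simulator data (vehicle, surrounding vehicles, scene) with camera recordings of the driver, the two streams have to be brought onto a common time base before they can be used together. The sketch below is only an illustration under stated assumptions: the `Frame` fields, the log tuple layouts, the gaze labels and the 0.05 s tolerance are all hypothetical, and nearest-timestamp matching is one common alignment choice, not necessarily the one used in this method.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical frame layout grouping human, vehicle and road data.
# All field names are illustrative assumptions, not from the patent.
@dataclass
class Frame:
    t: float                        # simulator timestamp (s)
    ego_speed: float                # vehicle: own-vehicle speed (m/s)
    surrounding_gaps: List[float]   # vehicle: gaps to surrounding vehicles (m)
    driver_gaze: str                # human: gaze label derived from camera footage
    lane_id: int                    # road: current lane index in the scene


def nearest_index(times: Sequence[float], t: float) -> int:
    """Return the index of the value in sorted, non-empty `times` closest to t."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    # Pick whichever neighbour (i - 1 or i) is closer to t.
    return i if times[i] - t < t - times[i - 1] else i - 1


def align(sim_log: Sequence[Tuple[float, float, List[float], int]],
          gaze_log: Sequence[Tuple[float, str]],
          tol: float = 0.05) -> List[Frame]:
    """Attach the nearest-in-time gaze label to each simulator sample.

    Samples with no gaze record within `tol` seconds are dropped.
    Both logs are assumed to be sorted by timestamp.
    """
    if not gaze_log:
        return []
    gaze_times = [g[0] for g in gaze_log]
    frames: List[Frame] = []
    for t, speed, gaps, lane in sim_log:
        j = nearest_index(gaze_times, t)
        if abs(gaze_times[j] - t) <= tol:
            frames.append(Frame(t, speed, list(gaps), gaze_log[j][1], lane))
    return frames


sim = [(0.00, 20.0, [35.0], 2), (0.10, 20.5, [34.2], 2), (0.20, 21.0, [33.1], 2)]
gaze = [(0.01, "left_mirror"), (0.12, "road_ahead"), (0.31, "road_ahead")]
frames = align(sim, gaze)
print([(f.t, f.driver_gaze) for f in frames])  # [(0.0, 'left_mirror'), (0.1, 'road_ahead')]
```

Here the sample at 0.20 s is dropped because its nearest gaze record (0.12 s) is 0.08 s away, outside the assumed tolerance.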

[0042] Step 1 is implemented as follows:

[0043] Step 1.1. Arrange two cameras and the display screens: place camera No. 1 directly in front of the driver, and point camera No. 2 at the driver's screens. At least three display screens are required … vision.
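The hardware requirements of Step 1.1 can be written as a small configuration check. This is a minimal sketch under assumptions: the `Camera`/`Rig` classes and the `"driver"`/`"screens"` labels are hypothetical, and only the constraints stated in Step 1.1 (two cameras, camera No. 1 in front of the driver, camera No. 2 facing the screens, at least three screens) are checked.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical description of the simulator camera/screen rig.
# The "driver"/"screens" labels are illustrative assumptions.
@dataclass
class Camera:
    number: int
    faces: str  # "driver" or "screens"

@dataclass
class Rig:
    cameras: List[Camera]
    num_screens: int

    def problems(self) -> List[str]:
        """Return the Step 1.1 requirements this rig violates (empty if none)."""
        issues: List[str] = []
        facing = {c.number: c.faces for c in self.cameras}
        if len(self.cameras) != 2:
            issues.append("two cameras are required")
        if facing.get(1) != "driver":
            issues.append("camera No. 1 must be directly in front of the driver")
        if facing.get(2) != "screens":
            issues.append("camera No. 2 must face the driver's screens")
        if self.num_screens < 3:
            issues.append("at least three display screens are required")
        return issues

rig = Rig([Camera(1, "driver"), Camera(2, "screens")], num_screens=2)
print(rig.problems())  # ['at least three display screens are required']
```

Returning a list of violations rather than raising on the first one lets a rig setup be checked in a single pass.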

[0044] Step 1.2. After the hardware is arranged, use the driving simulator's supporting software to build the driving scenes required by each type of intention to be recognized...
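Step 1.2 ties each driving scene to the type of intention it is meant to elicit. The excerpt is truncated before the intention types are listed, so the sketch below uses purely hypothetical intention labels and scene parameters (`SceneSpec`, `num_lanes`, `traffic_vehicles`) to show one way of checking that every intention type to be recognized has at least one scene built for it.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical scene description; field names and labels are illustrative only.
@dataclass(frozen=True)
class SceneSpec:
    name: str
    intention: str         # intention type the scene is designed to elicit
    num_lanes: int
    traffic_vehicles: int  # number of surrounding (traffic) vehicles

def group_by_intention(scenes: List[SceneSpec]) -> Dict[str, List[SceneSpec]]:
    """Index scenes by the intention type they target."""
    groups: Dict[str, List[SceneSpec]] = {}
    for s in scenes:
        groups.setdefault(s.intention, []).append(s)
    return groups

def missing_intentions(required: List[str], scenes: List[SceneSpec]) -> List[str]:
    """List required intention types that no scene covers yet."""
    covered = group_by_intention(scenes)
    return [i for i in required if i not in covered]

required = ["lane_keeping", "lane_change_left", "lane_change_right"]
scenes = [
    SceneSpec("straight_3_lane", "lane_keeping", 3, 4),
    SceneSpec("slow_leader", "lane_change_left", 3, 6),
]
print(missing_intentions(required, scenes))  # ['lane_change_right']
```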

