An eye movement interaction method and device based on head timing signal correction

The technology, which combines head movement timing signals with eye tracking and is applied in the field of computer vision, addresses problems such as low accuracy, poor robustness, and sensitivity to ambient brightness and to the opening and closing of the human eye.

Examples

Embodiment 1

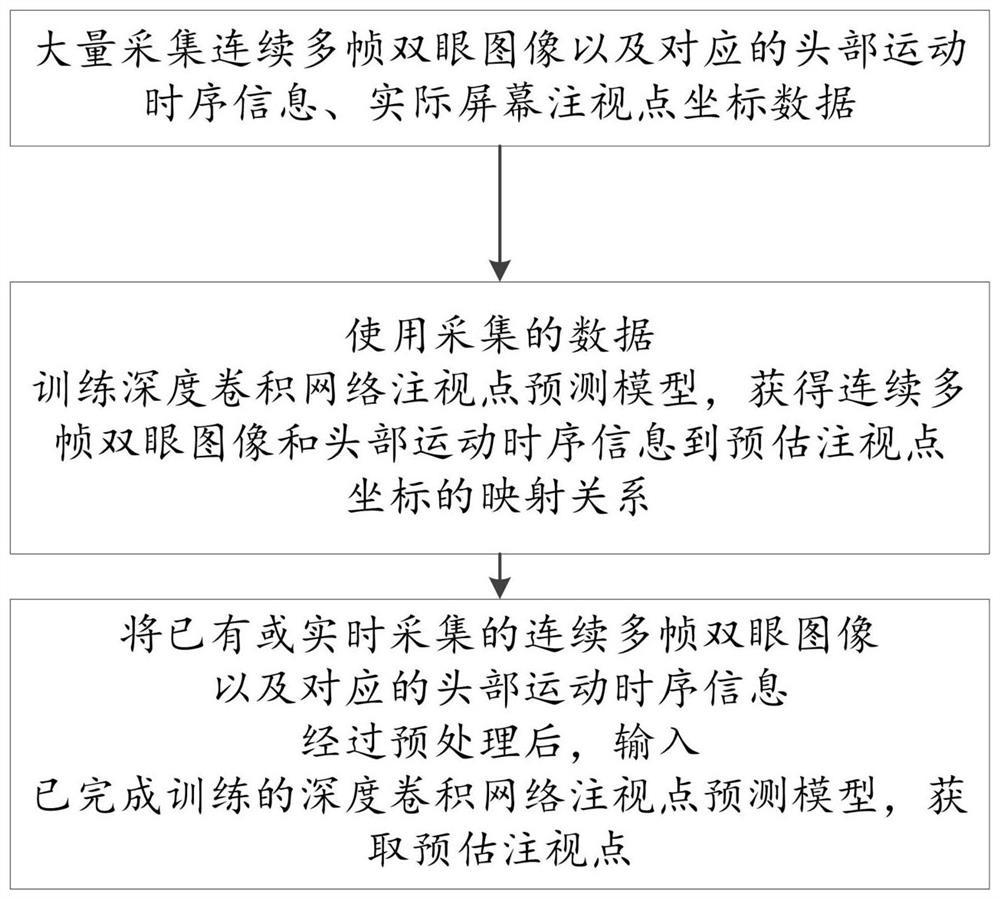

[0036] Please refer to Figure 1, which shows the overall flow chart of an eye movement interaction method based on head timing signal correction provided by an embodiment of the present invention.

[0037] As shown in Figure 1, the method of this embodiment of the present invention mainly includes the following steps:

[0038] S1: Collect consecutive multi-frame binocular images, the corresponding head movement timing information, and the actual on-screen gaze point coordinates as the first collected data; collect the first collected data from a large number of different people in different scenarios as the first collected data group; and preprocess the data in the first collected data group.
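One way to picture a single item of the first collected data is as a record that pairs a window of consecutive binocular frames with the head movement signal at those frames and the actual on-screen gaze point. The sketch below assumes that the head movement timing information arrives as timestamped orientation samples (for example yaw, pitch, and roll from a headset IMU) at a rate different from the camera's, and aligns them to frame timestamps by linear interpolation. The names `HeadSample`, `CollectedSample`, `interp_head`, and `build_samples`, the window length, and the rule of labelling each window with the gaze point of its last frame are all hypothetical; the patent text shown here does not specify them.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # (yaw, pitch, roll), hypothetical units: degrees


@dataclass
class HeadSample:
    """Hypothetical head movement sample, e.g. from an IMU in the headset."""
    t: float       # timestamp in seconds
    yaw: float
    pitch: float
    roll: float


@dataclass
class CollectedSample:
    """Hypothetical record for one item of the first collected data."""
    subject_id: str
    scene_id: str
    frames: List[object]          # consecutive binocular images
    head: List[Pose]              # head pose aligned to each frame
    gaze_xy: Tuple[float, float]  # actual on-screen gaze point


def interp_head(samples: Sequence[HeadSample], t: float) -> Pose:
    """Linearly interpolate the head pose at time t; clamp outside the range.

    Assumes `samples` is sorted by timestamp.
    """
    times = [s.t for s in samples]
    i = bisect_left(times, t)  # times[i-1] < t <= times[i]
    if i == 0:
        s = samples[0]
        return (s.yaw, s.pitch, s.roll)
    if i == len(samples):
        s = samples[-1]
        return (s.yaw, s.pitch, s.roll)
    a, b = samples[i - 1], samples[i]
    w = (t - a.t) / (b.t - a.t)  # b.t > a.t is guaranteed by bisect_left
    return (a.yaw + w * (b.yaw - a.yaw),
            a.pitch + w * (b.pitch - a.pitch),
            a.roll + w * (b.roll - a.roll))


def build_samples(subject_id: str, scene_id: str, frames: List[object],
                  frame_times: List[float], head_samples: Sequence[HeadSample],
                  gaze_points: List[Tuple[float, float]],
                  window: int = 5) -> List[CollectedSample]:
    """Slide a window of `window` consecutive frames over one recording.

    Each window is labelled with the gaze point recorded at its last frame
    (an assumption; the source does not state the labelling rule).
    """
    if not (len(frames) == len(frame_times) == len(gaze_points)):
        raise ValueError("frames, frame_times and gaze_points must align")
    out: List[CollectedSample] = []
    for end in range(window - 1, len(frames)):
        start = end - window + 1
        head = [interp_head(head_samples, t) for t in frame_times[start:end + 1]]
        out.append(CollectedSample(subject_id, scene_id, frames[start:end + 1],
                                   head, gaze_points[end]))
    return out
```

Under this reading, the first collected data group would simply be the concatenation of `build_samples` outputs over many subjects and scenes.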

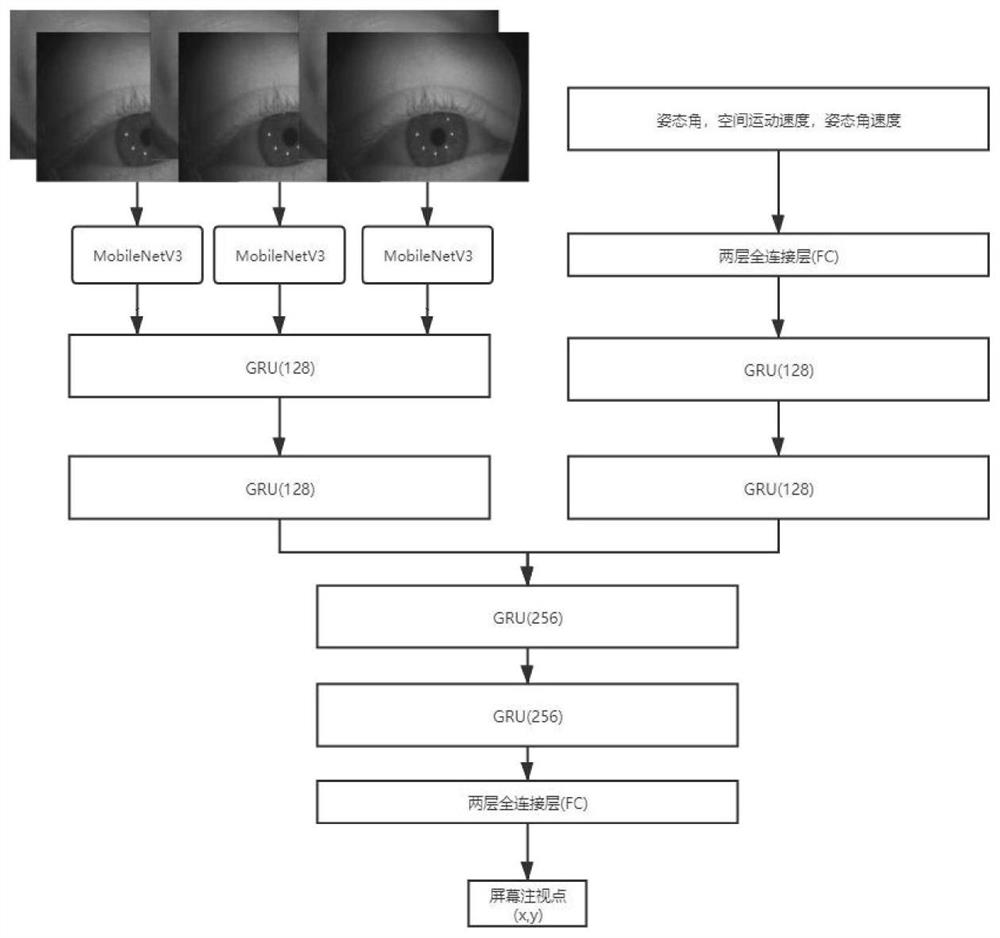

[0039] The wearer's binocular image data is collected by the near-eye camera of the head-mounted device, and each binocular image is preprocessed into a 128×128 image. The corresponding binocular images in each frame are compressed successively and the mean and standard deviation...
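The paragraph is cut off before it says exactly how the mean and standard deviation are used. A common choice, assumed here, is to standardize each resized eye image by subtracting its mean and dividing by its standard deviation. The sketch below keeps to the Python standard library: it stores a grayscale image as a list of rows and uses nearest-neighbour resizing to reach 128×128. The function names, the per-image (rather than dataset-wide) statistics, and the small `eps` guard against a zero standard deviation are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel values


def resize_nearest(img: Image, size: int = 128) -> Image:
    """Nearest-neighbour resize of an H x W image to size x size."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def standardize(img: Image, eps: float = 1e-8) -> Image:
    """Z-score normalise: subtract the image mean, divide by its std."""
    flat = [p for row in img for p in row]
    mu = fmean(flat)
    sigma = pstdev(flat)  # population standard deviation
    return [[(p - mu) / (sigma + eps) for p in row] for row in img]


def preprocess_pair(left: Image, right: Image,
                    size: int = 128) -> Tuple[Image, Image]:
    """Resize and standardise the left and right eye images of one frame."""
    return (standardize(resize_nearest(left, size)),
            standardize(resize_nearest(right, size)))
```

A real pipeline would more likely use an array library with area or bilinear interpolation; the pure-Python loops only keep the example dependency-free.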

Embodiment 2

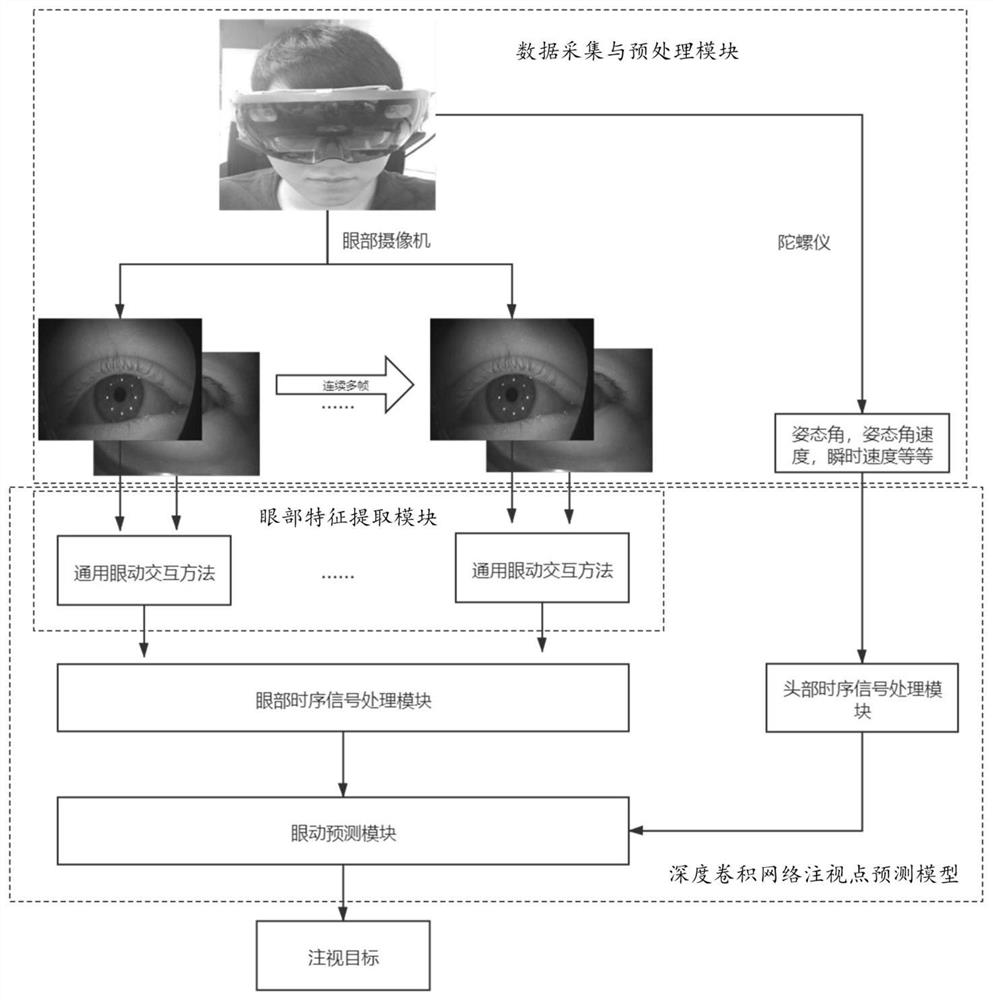

[0068] Furthermore, as an implementation of the methods shown in the above embodiments, another embodiment of the present invention provides an eye movement interaction device based on head timing signal correction. This device embodiment corresponds to the foregoing method embodiment. For ease of reading, it does not repeat every detail of the method embodiment, but the device in this embodiment can correspondingly implement everything in the foregoing method embodiment. Figure 3 shows a block diagram of an eye movement interaction device based on head timing signal correction provided by an embodiment of the present invention. As shown in Figure 3, the device of this embodiment includes the following modules:

[0069] 1. Data collection and preprocessing module: collect continuous multi-frame binocular images and corresponding head movement timing information, and actual screen ga...
