Multi-view target recognition and retrieval method and device based on incremental learning

A technology for target recognition and incremental learning, applied to a multi-view target recognition and retrieval method and device based on incremental learning. It addresses problems such as overly simple, coarse network structures and the inability to adapt to new target categories online, with the effects of improving accuracy and reducing catastrophic forgetting and distraction.


Examples

Embodiment 1

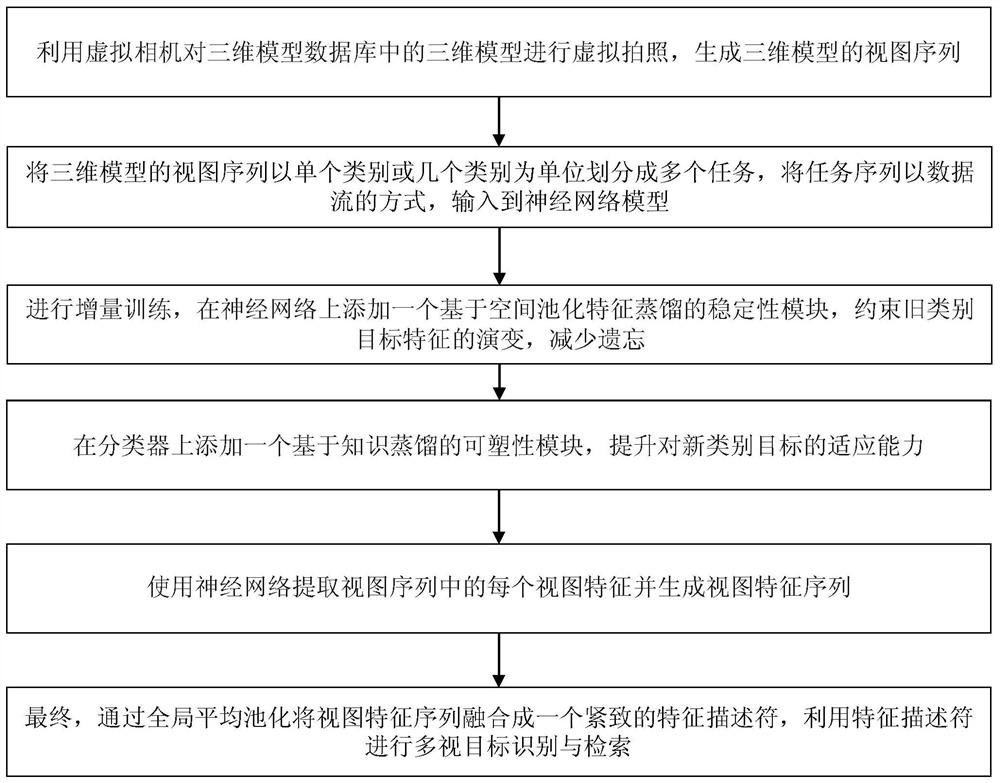

[0034] A multi-view target recognition and retrieval method based on incremental learning (see Figure 1) includes the following steps:

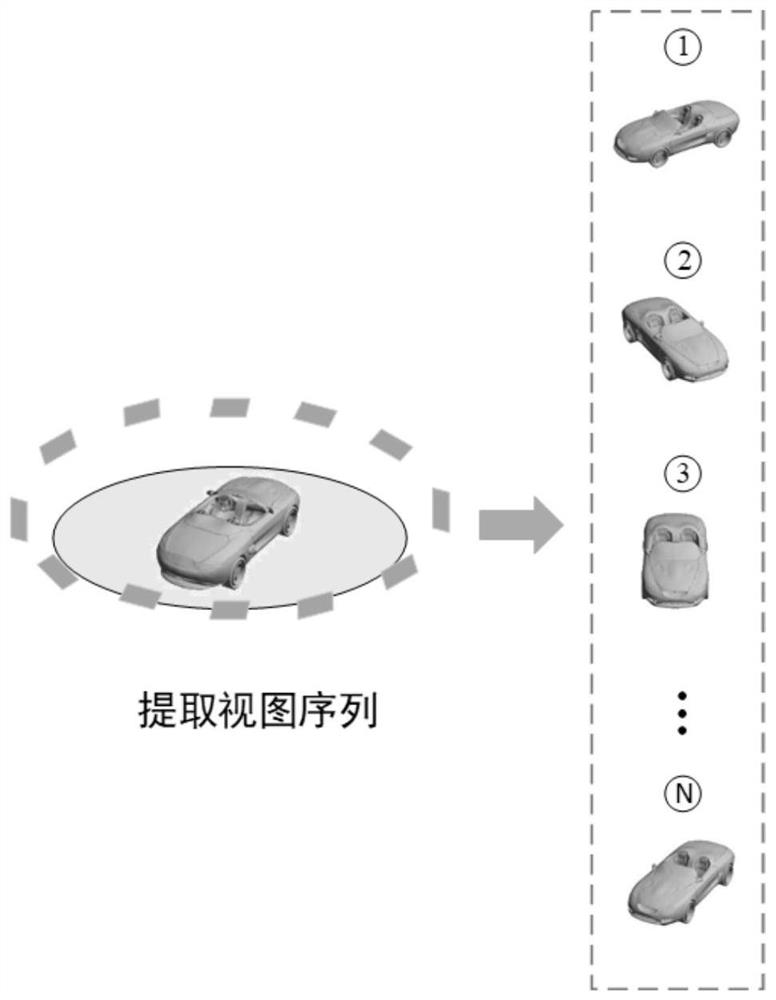

[0035] 101: Use virtual cameras to capture views of each three-dimensional model in the three-dimensional model database and generate a view sequence for each model;

[0036] 102: Divide the view sequences of the three-dimensional models into multiple task sequences, each covering a single category or several categories, and input the task sequences into the neural network; categories that have already been trained are treated as old categories, and categories contained in tasks not yet trained are treated as new categories;
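The task split in step 102 can be illustrated with a small sketch. It assumes, for illustration only, that categories are grouped into consecutive tasks of equal size (the source does not say how categories are assigned to tasks); `split_into_tasks`, `old_and_new`, and the category names are hypothetical.

```python
from typing import List, Sequence, Tuple


def split_into_tasks(categories: Sequence[str], classes_per_task: int) -> List[List[str]]:
    """Group categories into consecutive tasks of `classes_per_task` categories.

    Assumption: equal-size, order-preserving tasks; the last task may be smaller.
    """
    if classes_per_task < 1:
        raise ValueError("classes_per_task must be >= 1")
    return [list(categories[i:i + classes_per_task])
            for i in range(0, len(categories), classes_per_task)]


def old_and_new(tasks: List[List[str]], step: int) -> Tuple[List[str], List[str]]:
    """At incremental step `step` (0-based), categories from already-trained tasks
    are old; categories in the current, not-yet-trained task are new."""
    old = [c for task in tasks[:step] for c in task]
    new = list(tasks[step])
    return old, new


# Hypothetical usage with illustrative category names
tasks = split_into_tasks(["airplane", "chair", "table", "car", "lamp"], 2)
print(tasks)                  # [['airplane', 'chair'], ['table', 'car'], ['lamp']]
print(old_and_new(tasks, 2))  # (['airplane', 'chair', 'table', 'car'], ['lamp'])
```

Setting `classes_per_task=1` corresponds to the "single category" case in step 102; larger values give the "several categories" case.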

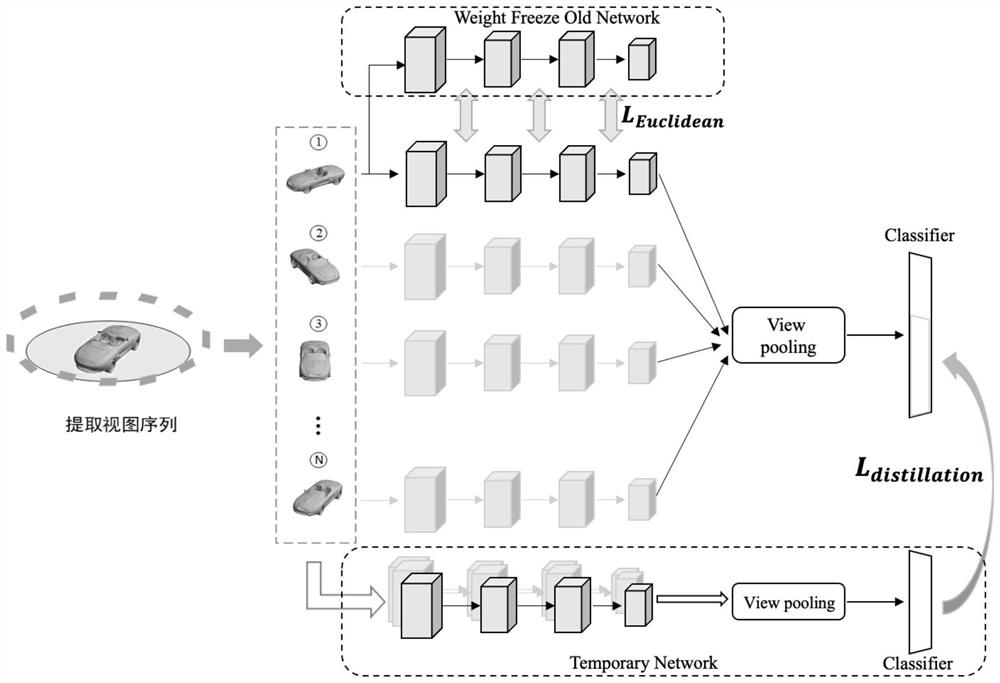

[0037] 103: During incremental training [5], add a feature-distillation-based stability module to the neural network to constrain the evolution of old-category target features;

[0038] The stability module includes the old network $\Omega_{t-1}$ and the new network $\Omega_t$, connected through a spatial pooling distillatio...
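The description of the stability module is truncated in the source, so the following is only a sketch of one common form of spatial pooling distillation, modeled on the pooled-output distillation of PODNet (Douillard et al., 2020) rather than taken from this patent. It assumes one feature map of shape (C, H, W) from each of the frozen old network $\Omega_{t-1}$ and the new network $\Omega_t$. The names `spatial_pool` and `spatial_distill_loss` are hypothetical, and the source does not state whether pooled features are normalized or whether the loss is summed over several layers.

```python
import numpy as np


def spatial_pool(feat: np.ndarray) -> np.ndarray:
    """Pool a (C, H, W) feature map along width and along height, then flatten and concatenate."""
    if feat.ndim != 3:
        raise ValueError("expected a (C, H, W) feature map")
    width_pooled = feat.sum(axis=2)   # shape (C, H): summed over the width axis
    height_pooled = feat.sum(axis=1)  # shape (C, W): summed over the height axis
    return np.concatenate([width_pooled.ravel(), height_pooled.ravel()])


def spatial_distill_loss(old_feat: np.ndarray, new_feat: np.ndarray) -> float:
    """Euclidean distance between the pooled features of the old and new networks.

    old_feat comes from the frozen old network, new_feat from the network being trained;
    in a training loop this term would be added to the classification loss.
    """
    if old_feat.shape != new_feat.shape:
        raise ValueError("old and new feature maps must have the same shape")
    diff = spatial_pool(old_feat) - spatial_pool(new_feat)
    return float(np.linalg.norm(diff))


# Hypothetical usage with random feature maps (not real network outputs)
rng = np.random.default_rng(0)
old = rng.standard_normal((8, 4, 4))
print(spatial_distill_loss(old, old))        # identical features give 0.0
print(spatial_distill_loss(old, old + 0.1))  # drifted features give a positive loss
```

Pooling along each spatial axis separately keeps a coarse record of where features occur while not forcing every activation to stay fixed, which is the usual motivation for this kind of constraint on old-category features.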

Embodiment 2

[0047] The scheme of Embodiment 1 is described further below with reference to a specific example and the calculation formulas:

[0048] 201: First, use virtual cameras to capture views of the models in the three-dimensional model database and generate view sequences;

[0049] The above step 201 mainly includes:

[0050] A set of viewpoints is predefined, where a viewpoint is a position from which the target object is observed. In this embodiment of the present invention, 12 viewpoints are set: virtual cameras are placed around the three-dimensional model, one every 30 degrees, so that the viewpoints are uniformly distributed around the target object. Selecting different intervals yields views of the three-dimensional model from different angles, taken clockwise, which form the view sequence.
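The 12-viewpoint layout can be sketched as camera positions on a circle around the model. The 30-degree azimuth step follows the text; the camera distance (`radius`) and the 30-degree elevation are illustrative assumptions not given in the source, and `camera_positions` is a hypothetical helper. Rendering the views themselves would require a renderer the source does not specify.

```python
import math
from typing import List, Tuple


def camera_positions(num_views: int = 12, radius: float = 2.0,
                     elevation_deg: float = 30.0) -> List[Tuple[float, float, float]]:
    """Place num_views cameras evenly on a circle around an object centred at the origin (z up).

    With num_views=12 the azimuth step is 360/12 = 30 degrees. Azimuth decreases from one
    camera to the next, so the sequence runs clockwise when seen from above (+z).
    radius and elevation_deg are illustrative assumptions, not values from the source.
    """
    step = 360.0 / num_views
    elev = math.radians(elevation_deg)
    positions = []
    for k in range(num_views):
        az = -math.radians(k * step)  # negative angle -> clockwise order
        x = radius * math.cos(elev) * math.cos(az)
        y = radius * math.cos(elev) * math.sin(az)
        z = radius * math.sin(elev)
        positions.append((x, y, z))
    return positions


for k, (x, y, z) in enumerate(camera_positions()):
    print(f"view {k:2d}: azimuth {-30 * k:4d} deg -> ({x:+.3f}, {y:+.3f}, {z:+.3f})")
```

Each camera would be pointed at the origin; reading the rendered images in index order gives a clockwise view sequence.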

[0051] 202: The view sequences of the three-dimensional models are divided into multiple task sequences, each covering a single category or several categories, and the task se...

Embodiment 3

[0072] The feasibility of the scheme is verified by the following specific experiments, as described below:

[0073] Because multi-view target datasets containing rich categories are lacking, the embodiments of the present invention build two new multi-view target datasets, inor1 and inor2, on the basis of ShapeNetCore [4] and SHREC2014 [7]. inor1 includes 50 categories and 41,063 three-dimensional models, each consisting of 12 views; inor2 contains 100 categories and 8,559 three-dimensional models, each consisting of 12 views.
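The dataset sizes above imply the following totals. This is plain arithmetic on the stated counts; the per-category figure is only a mean, since the source does not give how models are distributed across categories. `dataset_scale` is a hypothetical helper.

```python
VIEWS_PER_MODEL = 12  # each 3D model is represented by 12 views


def dataset_scale(num_categories: int, num_models: int) -> tuple:
    """Return (total number of views, mean number of models per category)."""
    total_views = num_models * VIEWS_PER_MODEL
    mean_models_per_category = num_models / num_categories
    return total_views, mean_models_per_category


for name, (cats, models) in {"inor1": (50, 41063), "inor2": (100, 8559)}.items():
    views, mean_models = dataset_scale(cats, models)
    print(f"{name}: {views} views, about {mean_models:.2f} models per category on average")
# inor1: 492756 views, about 821.26 models per category on average
# inor2: 102708 views, about 85.59 models per category on average
```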

[0074] To ensure a fair comparison, the other incremental learning methods used for comparison were given the same modification (multi-view feature fusion [8]) so that they can handle the new multi-view target datasets, and all embodiments are evaluated on the two datasets inor1 and inor2. For multi-view target recognition, the evaluation metric is the average incremental classification accuracy [5]; the retrieval evaluation...
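A minimal sketch of the average incremental classification accuracy, assuming the common definition: the mean, over incremental steps, of the accuracy measured on all categories seen so far after each step. Conventions differ on whether the first (non-incremental) step is included, so it is exposed as a flag; the source's exact definition is truncated. `average_incremental_accuracy` and the example accuracies are hypothetical, not reported results.

```python
from typing import Sequence


def average_incremental_accuracy(step_accuracies: Sequence[float],
                                 include_first: bool = True) -> float:
    """Mean of per-step accuracies.

    step_accuracies[i] is the accuracy on all categories seen so far,
    measured after training on task i.
    """
    accs = list(step_accuracies) if include_first else list(step_accuracies[1:])
    if not accs:
        raise ValueError("need at least one accuracy value")
    return sum(accs) / len(accs)


# Placeholder accuracies (in percent) for three incremental steps, for illustration only
print(average_incremental_accuracy([90.0, 80.0, 70.0]))                       # 80.0
print(average_incremental_accuracy([90.0, 80.0, 70.0], include_first=False))  # 75.0
```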


© 2025 PatSnap. All rights reserved.