3D visual identification pick-and-place system and method

A 3D visual recognition technology, applied in image data processing, instrumentation, computing, and related fields, addresses problems such as large matching errors and a lack of visual features, which hinder high-speed, high-stability pick-and-place operations, and achieves the effect of improving precision.

Examples

Embodiment 1

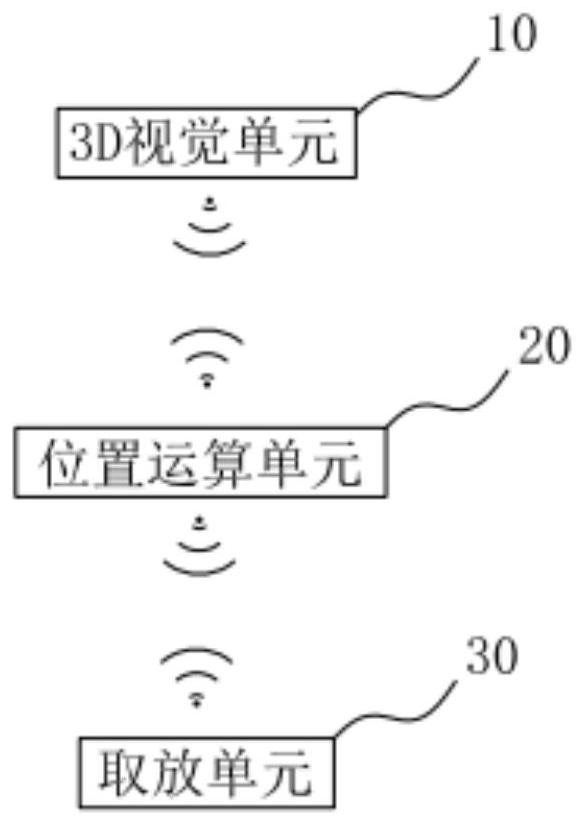

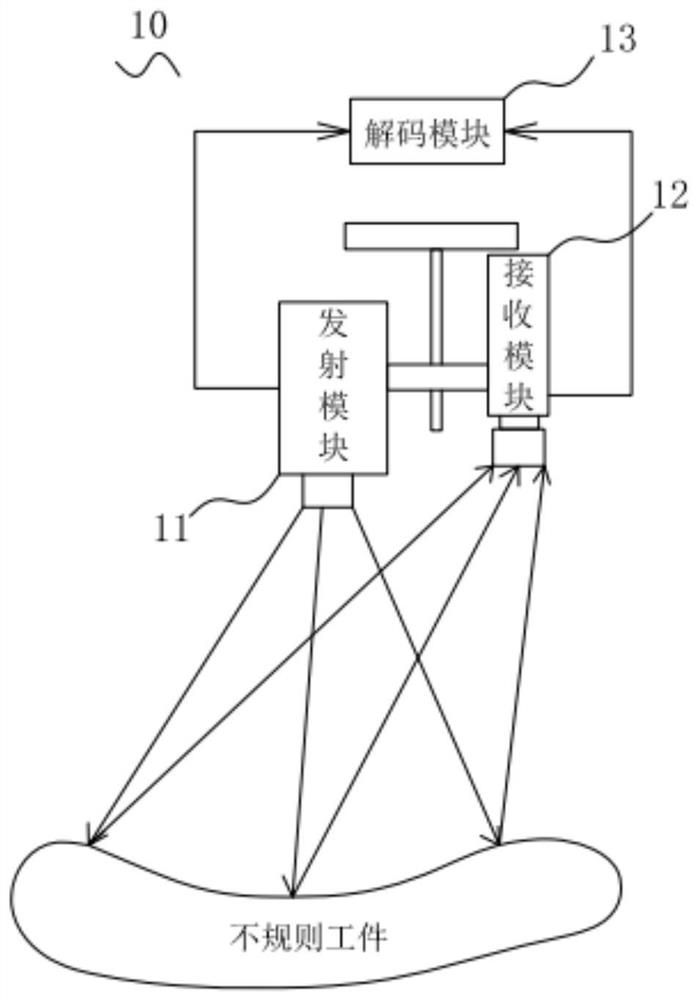

[0044] In Embodiment 1 of the present invention, as shown in Figure 1, a 3D visual recognition pick-and-place system is provided, which is applied to control the grasping and placement of irregular workpieces. The irregular workpieces involved in the present invention can be 3C electronic products, metal processing parts, etc. Specifically, the system includes a 3D vision unit 10, a pose calculation unit 20, and a pick-and-place unit 30. The 3D vision unit 10 generates the scene point cloud of the identified irregular workpiece, the pose calculation unit 20 acquires matching parameters and calculates the pose point cloud of the irregular workpiece, and the pick-and-place unit 30 grasps and places the irregular workpiece. In this embodiment, the stereo vision system composed of the 3D vision unit 10 can effectively solve the problem that the current 3D vision system adopts a binocular sensor and uses two cameras to obtain a 3D poi...
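The data flow between the three units can be sketched as follows. This is a minimal illustration and not the patented implementation: the class name `PickAndPlaceSystem`, the callable signatures, and the stand-in functions (`fake_vision_unit`, `centroid_pose`) are all hypothetical. In particular, the stand-in pose step only places the workpiece centroid in a 4x4 transform, whereas the pose calculation unit 20 described above performs model matching.

```python
from dataclasses import dataclass
from typing import Callable, List

import numpy as np


@dataclass
class PickAndPlaceSystem:
    """Hypothetical wiring of units 10, 20 and 30 as plain callables."""
    vision_unit: Callable[[], np.ndarray]            # unit 10: returns an (N, 3) scene point cloud
    pose_unit: Callable[[np.ndarray], np.ndarray]    # unit 20: returns a 4x4 workpiece pose
    pick_place_unit: Callable[[np.ndarray], None]    # unit 30: acts on the pose

    def run_once(self) -> np.ndarray:
        cloud = self.vision_unit()
        pose = self.pose_unit(cloud)
        self.pick_place_unit(pose)
        return pose


def fake_vision_unit() -> np.ndarray:
    # Stand-in for a real sensor: four points of a flat workpiece.
    return np.array([[0.0, 0.0, 1.0],
                     [2.0, 0.0, 1.0],
                     [2.0, 2.0, 1.0],
                     [0.0, 2.0, 1.0]])


def centroid_pose(cloud: np.ndarray) -> np.ndarray:
    # Stand-in for matching: identity rotation, translation at the centroid.
    pose = np.eye(4)
    pose[:3, 3] = cloud.mean(axis=0)
    return pose


received: List[np.ndarray] = []   # records what the pick-and-place unit was asked to do
system = PickAndPlaceSystem(fake_vision_unit, centroid_pose, received.append)
pose = system.run_once()
```

Keeping each unit behind a callable boundary mirrors the separation described in the embodiment: the sensor, the pose estimator, and the robot controller can each be replaced without touching the other two.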

Embodiment 2

[0056] In the second embodiment of the present invention, as shown in Figure 6, a 3D visual recognition pick-and-place method is provided, which is applied to control the grasping and placement of irregular workpieces and uses the above-mentioned 3D visual recognition pick-and-place system. The method includes the following steps:

[0057] S101: The 3D vision unit receives the observed scene image of the irregular workpiece, and reconstructs the scene point cloud of the irregular workpiece based on the scene image;

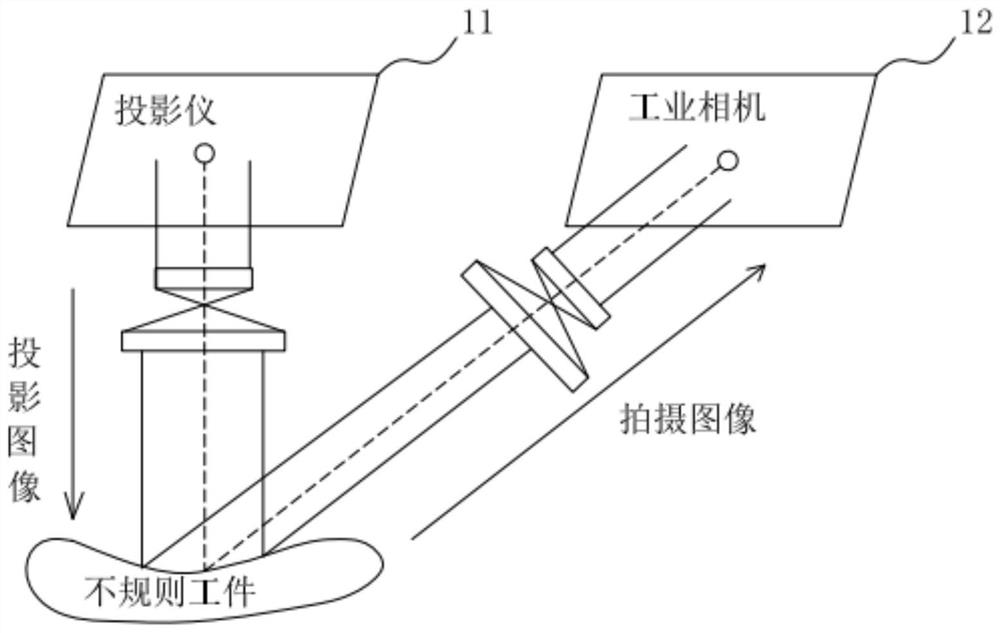

[0058] Here, the scene point cloud is the detailed point cloud data of the irregular workpiece, including the position and posture of the irregular workpiece. Specifically, in the stereo vision system of this embodiment, the projector projects a coded pattern, the industrial camera photographs the irregular workpiece, and the captured image is sent to the host computer. The host computer processes the image an...
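The text here does not say which coding scheme the projected pattern uses. As a hedged illustration only, the sketch below assumes a binary Gray-code stripe sequence, a common choice in structured-light systems, and shows how a stack of thresholded camera images could be decoded into a per-pixel projector column index, which is the correspondence later used for triangulation. The function names `gray_to_binary` and `decode_gray_stack` are hypothetical.

```python
import numpy as np


def gray_to_binary(gray: np.ndarray) -> np.ndarray:
    """Convert Gray-coded integers to plain binary integers, element-wise."""
    binary = gray.copy()
    shift = gray >> 1
    while shift.any():
        binary ^= shift      # binary = g ^ (g >> 1) ^ (g >> 2) ^ ...
        shift = shift >> 1
    return binary


def decode_gray_stack(bits: np.ndarray) -> np.ndarray:
    """Decode thresholded pattern images into projector column indices.

    bits: array of shape (n_bits, H, W) holding 0/1 values, most
          significant bit first (assumed ordering).
    Returns an (H, W) integer array of projector columns.
    """
    n_bits = bits.shape[0]
    weights = 1 << np.arange(n_bits - 1, -1, -1)
    gray = np.tensordot(weights, bits.astype(np.int64), axes=1)
    return gray_to_binary(gray)


# Synthetic check: encode columns 0..7 as 3-bit Gray code, then decode.
columns = np.arange(8)
gray_codes = columns ^ (columns >> 1)
bit_stack = np.array([(gray_codes >> k) & 1 for k in (2, 1, 0)])[:, None, :]
decoded = decode_gray_stack(bit_stack)
```

Gray code is assumed here because adjacent stripes differ in exactly one bit, so a thresholding error at a stripe boundary shifts the decoded column by at most one.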

Embodiment 3

[0069] In the third embodiment of the present invention, as shown in Figure 7, a 3D visual recognition pick-and-place method is provided, which is applied to control the grasping and placement of irregular workpieces. The method of this embodiment differs from the method of Embodiment 2 in that, after the 3D vision unit receives the observed scene image of the irregular workpiece and reconstructs the scene point cloud of the irregular workpiece based on the scene image, the method further includes:

[0070] Filtering is performed on the scene point cloud.
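The type of filter is not specified at this point. One plausible choice, shown here purely as an assumption, is statistical outlier removal: points whose mean distance to their nearest neighbours is unusually large are discarded as noise. The function name `statistical_outlier_filter` and the parameters `k` and `std_ratio` are hypothetical, and the brute-force distance matrix is only suitable for small clouds.

```python
import numpy as np


def statistical_outlier_filter(points, k=8, std_ratio=2.0):
    """Remove points whose mean k-nearest-neighbour distance is an outlier.

    points:    (N, 3) array-like of scene points, with N > k.
    k:         number of neighbours used per point (assumed value).
    std_ratio: a point is kept if its mean neighbour distance is at most
               global_mean + std_ratio * global_std (assumed value).
    """
    points = np.asarray(points, dtype=float)
    # Pairwise Euclidean distances, O(N^2) memory: fine for a sketch only.
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    # After sorting each row, column 0 is the zero distance to the point itself.
    knn = np.sort(dists, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


# Synthetic scene: a dense cluster plus one far-away spurious point.
rng = np.random.default_rng(0)
cluster = rng.random((50, 3))
scene = np.vstack([cluster, [[100.0, 100.0, 100.0]]])
filtered = statistical_outlier_filter(scene)
```

For production-sized clouds, a spatial index such as a k-d tree would replace the dense distance matrix; the thresholding logic stays the same.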
