Human-machine collaboration method and system with reverse active fusion of multi-modal intentions

A human-machine collaboration and multi-modal technology, applied to reasoning methods, computer components, character and pattern recognition, etc. It addresses problems such as increased interaction load and achieves the effect of improving accuracy.

Examples

Embodiment 1

[0050] Embodiment 1 of the present invention proposes a human-machine collaboration method for reverse active fusion of multimodal intentions. The goal of the present invention is to correctly understand the intentions expressed by users and to assign collaborative interaction tasks. Human-machine collaborative interaction tasks must be completed by humans and robots together.

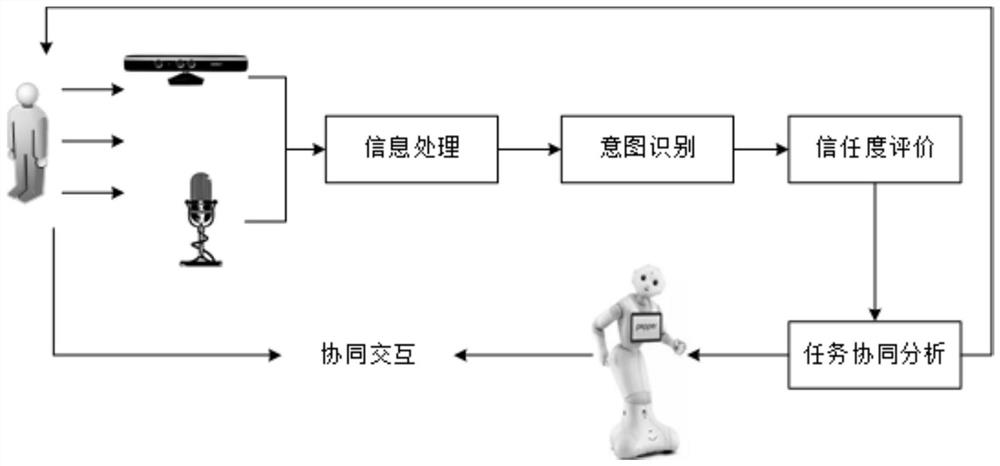

[0051] Figure 1 is an overall block diagram of the human-machine collaboration method for reverse active fusion of multimodal intentions in Embodiment 1 of the present invention. The complete interaction process is divided into six stages: user input, information processing, intention recognition, trust evaluation, task collaborative analysis, and human-robot collaborative interaction.
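The six stages listed above can be sketched as an ordered enumeration. This is only an illustrative sketch: the names `Stage` and `next_stage` are hypothetical, and the strictly sequential ordering is an assumption based on the order in which the stages are listed, not something the text confirms.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """The six interaction stages named in paragraph [0051].

    The numeric order follows the order of the list in the text;
    treating it as a strict sequence is an assumption.
    """
    USER_INPUT = 1
    INFORMATION_PROCESSING = 2
    INTENTION_RECOGNITION = 3
    TRUST_EVALUATION = 4
    TASK_COLLABORATIVE_ANALYSIS = 5
    HUMAN_ROBOT_COLLABORATIVE_INTERACTION = 6


def next_stage(stage: Stage) -> Optional[Stage]:
    """Return the stage after `stage`, or None once the last stage is reached."""
    if stage is Stage.HUMAN_ROBOT_COLLABORATIVE_INTERACTION:
        return None
    return Stage(stage + 1)


if __name__ == "__main__":
    # Walk through the stages in their listed order.
    current: Optional[Stage] = Stage.USER_INPUT
    while current is not None:
        print(current.value, current.name)
        current = next_stage(current)
```

An enumeration keeps the stage names in one place, so later components can refer to a stage by name rather than by a bare number.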

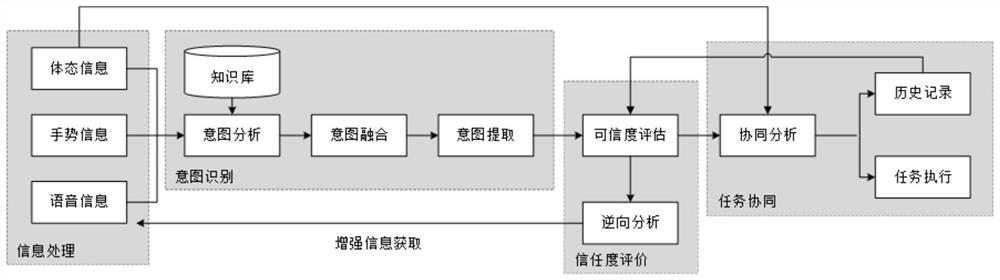

[0052] Figure 2 is a detailed block diagram of the human-machine collaboration method for reverse active fusion of multimodal intentions in Embodiment 1 of the present invention; the system framework consists...

Embodiment 2

[0086] Based on the human-machine collaboration method for reverse active fusion of multimodal intentions proposed in Embodiment 1 of the present invention, Embodiment 2 of the present invention proposes a human-machine collaboration system for reverse active fusion of multimodal intentions. Figure 5 is a schematic diagram of this system in Embodiment 2 of the present invention. The system includes an acquisition module, an analysis module, an evaluation module and an assignment module.

[0087] The acquisition module is used to obtain the user's modality information, which includes voice information, gesture information and body posture information.
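As a rough illustration of what the acquisition module hands on, the three kinds of modality information could be grouped in one container. The class name `ModalityInfo`, its field names, and the use of plain strings for each modality are all assumptions made for this sketch; the patent text does not specify a data representation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModalityInfo:
    """Hypothetical container for the modality information in [0087].

    Each field is None when that modality was not captured. Plain
    strings stand in for whatever representation a real system uses.
    """
    voice: Optional[str] = None
    gesture: Optional[str] = None
    body_posture: Optional[str] = None

    def available(self) -> List[str]:
        """List the names of the modalities that were actually captured."""
        fields = (
            ("voice", self.voice),
            ("gesture", self.gesture),
            ("body_posture", self.body_posture),
        )
        return [name for name, value in fields if value is not None]


if __name__ == "__main__":
    info = ModalityInfo(voice="hand me the cup", gesture="pointing")
    print(info.available())
```

Making every field optional reflects that a user may not supply all three modalities at once; whether the described system allows missing modalities is not stated in the text.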

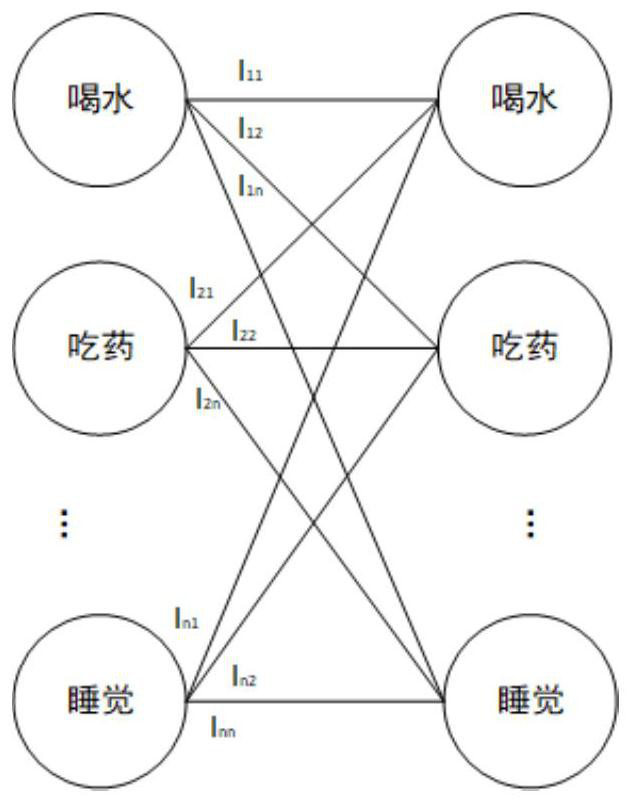

[0088] The analysis module is used to perform intention analysis based on the modality information and infer the user's direct intention, and the direct intention obtains the indirect intention of the user throug...
