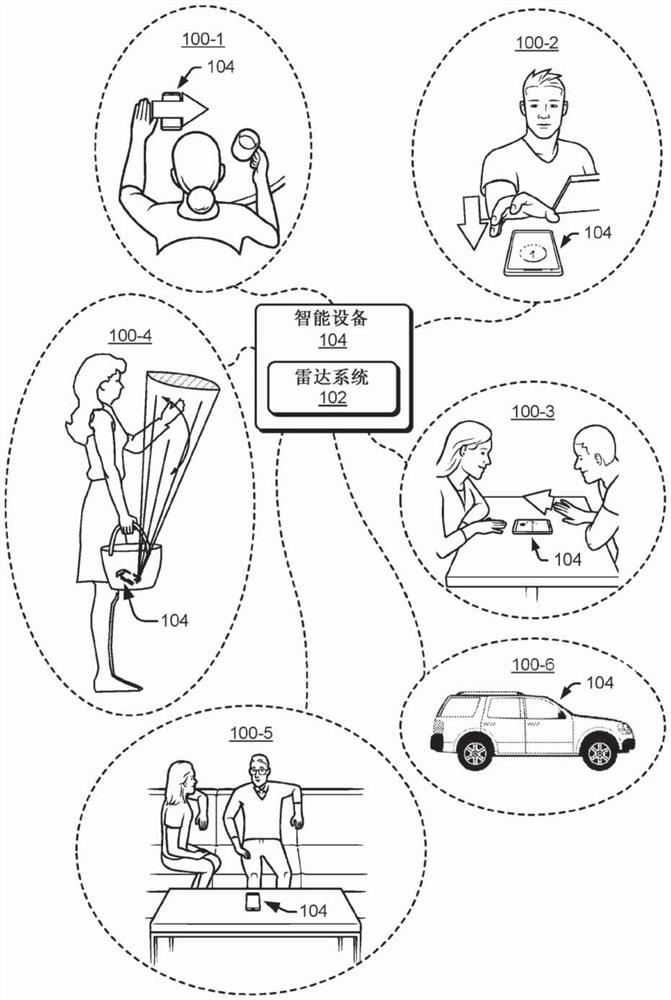

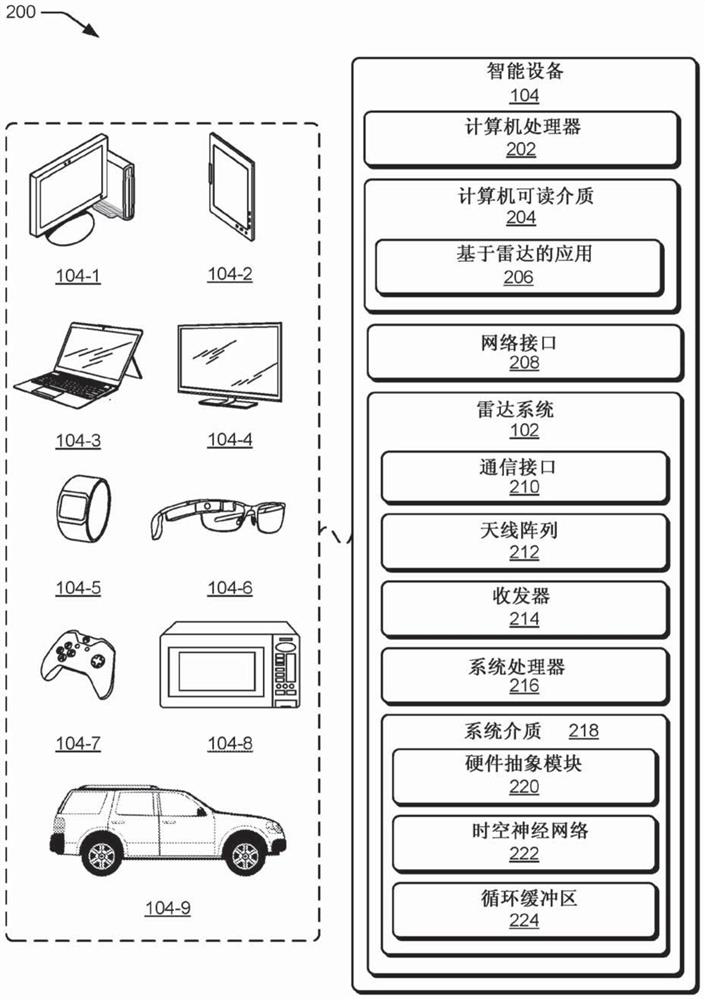

Smart device-based radar system for performing gesture recognition using spatio-temporal neural network

A radar-system and neural-network technology, applied to biological neural network models, neural architectures, neural learning methods, and related areas, with the effects of saving power and memory and increasing size.

Examples

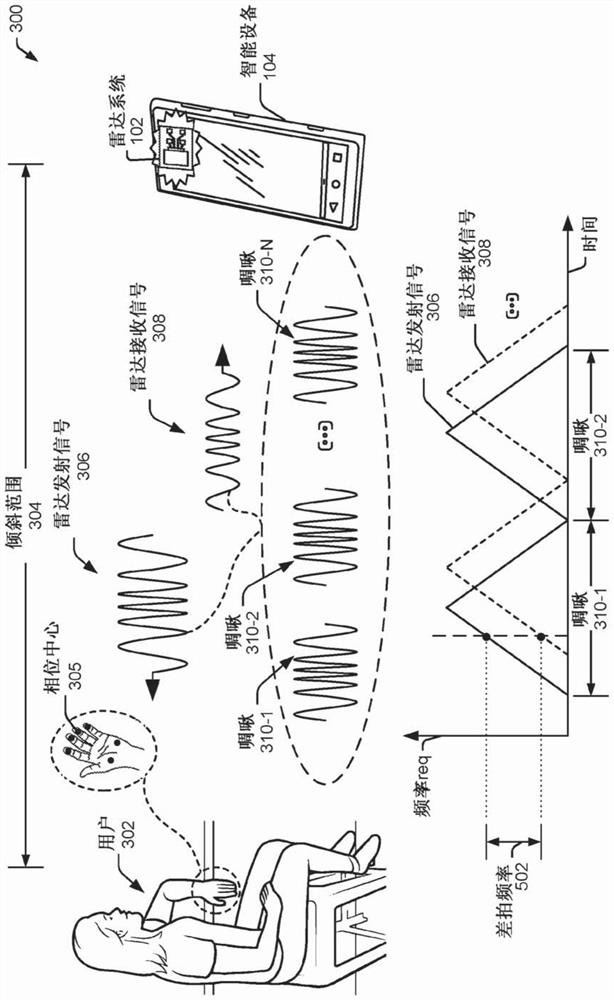

[0132] Example 1: A method performed by a radar system, the method comprising:

[0133] transmitting a radar transmit signal using an antenna array of the radar system;

[0134] receiving, using the antenna array, a radar receive signal comprising a version of the radar transmit signal that is reflected by at least one user;

[0135] generating composite radar data based on the radar receive signal;

[0136] providing the composite radar data to a spatio-temporal neural network of the radar system, the spatio-temporal neural network comprising a multi-stage machine learning architecture; and

[0137] analyzing the composite radar data using the spatio-temporal neural network to identify a gesture performed by the at least one user.
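
Example 1 does not say how the composite radar data is formed from the receive signal. One common approach for frequency-modulated continuous-wave (FMCW) radar, assumed here rather than taken from the source, is to take complex (I/Q) baseband samples and apply a fast-time FFT (range) followed by a slow-time FFT (Doppler), producing a complex-valued range-Doppler map per receive channel. The function name `generate_composite_radar_data`, the array layout, and the synthetic reflector are hypothetical and only illustrate the idea.

```python
import numpy as np


def generate_composite_radar_data(beat_samples: np.ndarray) -> np.ndarray:
    """Convert complex beat-signal samples into complex range-Doppler data.

    Assumed input shape: (num_channels, num_chirps, num_samples), i.e. one row
    of fast-time samples per chirp for each receive channel.
    """
    # Fast-time FFT along the sample axis resolves range.
    range_data = np.fft.fft(beat_samples, axis=-1)
    # Slow-time FFT along the chirp axis resolves Doppler (radial velocity);
    # fftshift moves the zero-velocity bin to the centre of that axis.
    doppler_data = np.fft.fftshift(np.fft.fft(range_data, axis=-2), axes=-2)
    # The result stays complex-valued, so both magnitude and phase are kept.
    return doppler_data


# Synthetic check: one receive channel and one point reflector that falls
# exactly in range bin 5 and Doppler bin 3 (hypothetical values).
num_chirps, num_samples = 16, 32
m = np.arange(num_chirps)[:, None]   # slow-time (chirp) index
n = np.arange(num_samples)[None, :]  # fast-time (sample) index
beat = np.exp(2j * np.pi * (5 * n / num_samples + 3 * m / num_chirps))[None, ...]

data = generate_composite_radar_data(beat)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(np.abs(data[0])), data[0].shape))
print(data.dtype, peak)
```

After the shift, Doppler bin 3 appears at row 3 + 16 // 2 = 11, so the peak sits at (11, 5).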

[0138] Example 2: The method of Example 1, wherein:

[0139] the analyzing of the composite radar data comprises analyzing both magnitude and phase information of the composite radar data using machine learning techniques to identify the gesture.
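
Example 2 states only that magnitude and phase are both analyzed. A simple way to expose both to a real-valued network, assumed here for illustration, is to split each complex sample into a magnitude channel and a phase channel. The helper name `magnitude_phase_features` and the sample values are hypothetical.

```python
import numpy as np


def magnitude_phase_features(composite: np.ndarray) -> np.ndarray:
    """Split complex radar data into a real-valued array of shape (2, *composite.shape).

    Channel 0 holds the magnitude; channel 1 holds the phase in radians,
    wrapped to (-pi, pi], so a real-valued network can learn from both.
    """
    magnitude = np.abs(composite)
    phase = np.angle(composite)
    return np.stack([magnitude, phase], axis=0)


sample = np.array([[3 + 4j, -1 + 0j], [0 + 2j, 1 - 1j]])
features = magnitude_phase_features(sample)
print(features.shape)
```

Stacking real and imaginary parts instead of magnitude and phase is an equally common choice; the magnitude/phase split simply mirrors the wording of Example 2.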

[0140] Example 3: The method of Example 1 or 2, wherein:

[0141] the multi-stage machine learning architecture comprises a spatial recurrent network and a temporal recurrent network; and

[0142] the analyzing of the composite radar data comprises:

[0143] analyzing the composite radar data in the spatial domain using the spatial recurrent network to generate feature data associated with the gesture; and

[0144] analyzing the feature data in the temporal domain using the temporal recurrent network to identify the gesture.
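
Example 3 splits the analysis into a spatial recurrent stage followed by a temporal recurrent stage but gives no layer details. The NumPy sketch below is one possible reading under stated assumptions: it uses plain Elman RNN cells (the source does not specify the recurrent cell type), treats the range bins of each radar frame as the spatial sequence, and treats consecutive frames as the temporal sequence. The class and function names, tensor shapes, and the three gesture classes are hypothetical, and the weights are random and untrained, so only the data flow is meaningful.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the random weights are reproducible


class SimpleRNN:
    """Minimal Elman RNN cell: h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b)."""

    def __init__(self, input_size: int, hidden_size: int):
        scale = 1.0 / np.sqrt(hidden_size)
        self.w_x = rng.normal(0.0, scale, (hidden_size, input_size))
        self.w_h = rng.normal(0.0, scale, (hidden_size, hidden_size))
        self.b = np.zeros(hidden_size)

    def __call__(self, sequence: np.ndarray) -> np.ndarray:
        """Run over a (steps, input_size) sequence and return the last hidden state."""
        h = np.zeros_like(self.b)
        for x_t in sequence:
            h = np.tanh(self.w_x @ x_t + self.w_h @ h + self.b)
        return h


def classify_gesture(frames, spatial_rnn, temporal_rnn, w_out):
    """frames: real-valued array of shape (num_frames, num_range_bins, features_per_bin)."""
    # Spatial stage: within each frame, step over range bins to get one
    # feature vector per frame (the "feature data" of Example 3).
    feature_data = np.stack([spatial_rnn(frame) for frame in frames])
    # Temporal stage: step over the per-frame feature vectors in time order.
    summary = temporal_rnn(feature_data)
    # Linear read-out to one score per (hypothetical) gesture class.
    logits = w_out @ summary
    return int(np.argmax(logits)), logits


# Hypothetical sizes: 10 frames, 16 range bins, 4 features per bin
# (e.g. magnitude and phase for two receive channels), 3 gesture classes.
frames = rng.normal(size=(10, 16, 4))
spatial_rnn = SimpleRNN(input_size=4, hidden_size=8)
temporal_rnn = SimpleRNN(input_size=8, hidden_size=8)
w_out = rng.normal(size=(3, 8))
gesture, logits = classify_gesture(frames, spatial_rnn, temporal_rnn, w_out)
print(gesture, logits.shape)
```

Because the weights are untrained, the printed class index is arbitrary; in a working system both recurrent stages and the read-out would typically be trained together on labelled gesture recordings.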
