Human body action recognition method and system

A technology for human action recognition in a human coordinate system, applied in the field of human action recognition methods and systems. It addresses problems such as the inability to meet fall-prevention needs, and improves recognition accuracy.

Examples

Embodiment 1

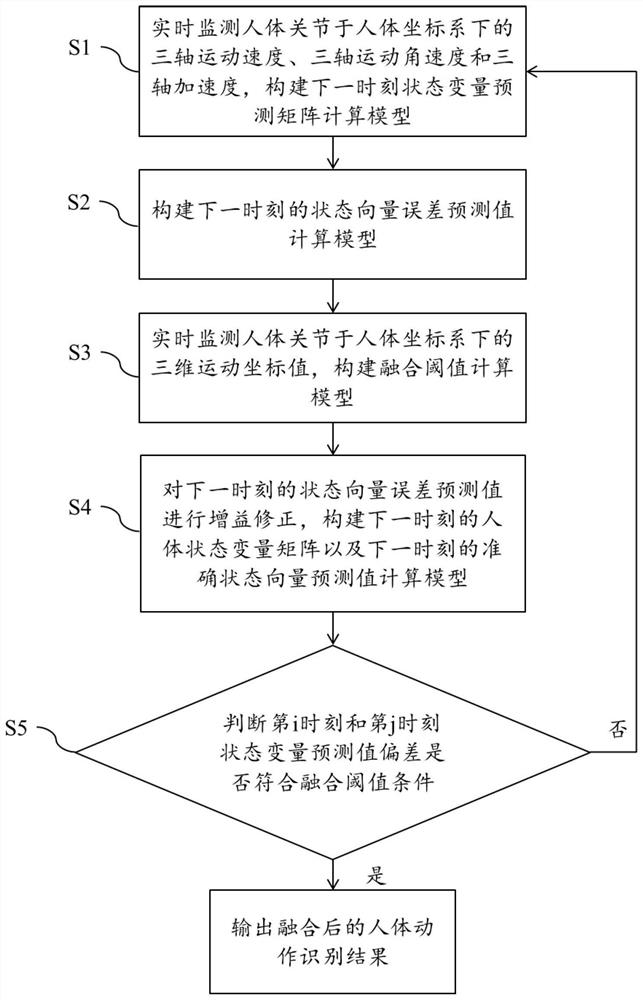

[0072] As shown in Figure 1, the human body motion recognition method provided by this embodiment converts the human body motion recognition result into feature information, namely 3D key point information, and includes the following steps:

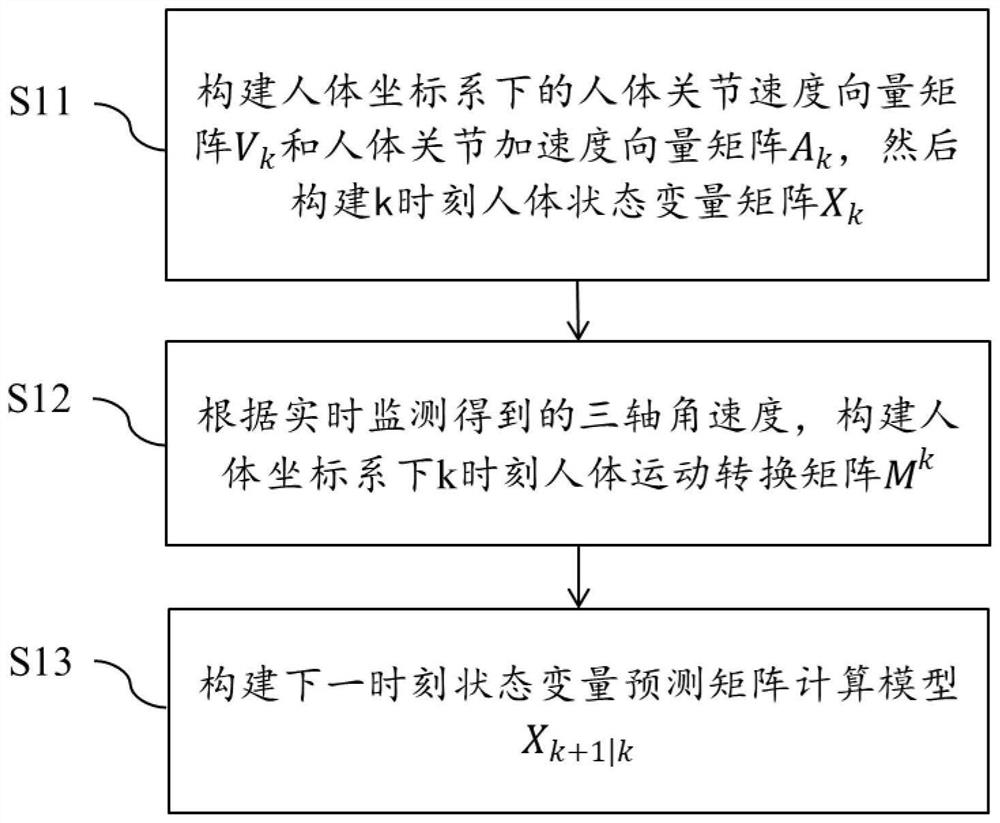

[0073] S1: Monitor, in real time, the three-axis motion velocity, three-axis angular velocity and three-axis acceleration of the human joints in the human coordinate system, and construct a calculation model for the next-moment state variable prediction matrix $X_{k+1|k}$;
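The notation $X_{k+1|k}$ matches the prediction step of a Kalman filter, although the patent's own transition model is not visible on this page. The sketch below assumes a hypothetical constant-acceleration model with one column per joint, each column holding velocity, angular velocity and acceleration (9 rows), so that $X_{k+1|k} = F X_{k|k}$. The state layout, the sampling interval `dt`, the units, and the function names are illustrative assumptions, not the patent's definitions.

```python
import numpy as np

# Hypothetical layout (assumption): one column per joint, 9 rows per column:
# rows 0-2 = velocity (vx, vy, vz), rows 3-5 = angular velocity (wx, wy, wz),
# rows 6-8 = acceleration (ax, ay, az), all in the human coordinate system.
STATE_DIM = 9


def transition_matrix(dt: float) -> np.ndarray:
    """Constant-acceleration transition F: v <- v + a*dt; omega and a are held constant."""
    F = np.eye(STATE_DIM)
    F[0:3, 6:9] = dt * np.eye(3)  # velocity integrates acceleration over dt
    return F


def predict_state(X_k: np.ndarray, dt: float) -> np.ndarray:
    """Return X_{k+1|k} = F @ X_{k|k} for every joint column at once."""
    return transition_matrix(dt) @ X_k


# Usage: two joints, 10 ms sampling interval (hypothetical values).
X_k = np.zeros((STATE_DIM, 2))
X_k[0, 0] = 1.0  # joint 0 moving at 1 m/s along x
X_k[6, 0] = 2.0  # joint 0 accelerating at 2 m/s^2 along x
X_pred = predict_state(X_k, dt=0.01)
# joint 0 x-velocity becomes 1.0 + 2.0 * 0.01 = 1.02 m/s; joint 1 stays at rest
```

Stacking joints as columns lets a single matrix product predict every joint at once.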

[0074] S2: Construct a calculation model for the next-moment state vector error prediction value $P_{k+1|k}$;
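Likewise, $P_{k+1|k}$ reads like the Kalman filter covariance prediction $P_{k+1|k} = F P_{k|k} F^{\top} + Q$. The sketch below reuses the same hypothetical constant-acceleration $F$ (9 state rows: velocity, angular velocity, acceleration) and assumes an isotropic process noise $Q = qI$; neither choice comes from the patent, and `predict_covariance`, `dt` and `q` are illustrative names.

```python
import numpy as np

STATE_DIM = 9  # assumed per-joint state: velocity, angular velocity, acceleration (3 axes each)


def transition_matrix(dt: float) -> np.ndarray:
    """Hypothetical constant-acceleration model: velocity integrates acceleration."""
    F = np.eye(STATE_DIM)
    F[0:3, 6:9] = dt * np.eye(3)
    return F


def predict_covariance(P_k: np.ndarray, dt: float, q: float) -> np.ndarray:
    """Return P_{k+1|k} = F P_{k|k} F^T + Q, with Q = q * I as an assumed process noise."""
    F = transition_matrix(dt)
    Q = q * np.eye(STATE_DIM)
    return F @ P_k @ F.T + Q


P_k = 0.1 * np.eye(STATE_DIM)  # hypothetical initial error covariance
P_pred = predict_covariance(P_k, dt=0.01, q=1e-4)
```

With a diagonal $P_{k|k}$, the predicted velocity variance grows by $\Delta t^2$ times the acceleration variance plus $q$, and a velocity-acceleration cross term of $\Delta t$ times the acceleration variance appears.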

[0075] S3: Monitor the three-dimensional motion coordinate values of human joints in the human coordinate system in real time, and construct a fusion threshold calculation model;

[0076] S4: According to the next-moment state variable prediction matrix $X_{k+1|k}$ constructed in step S1 and the state vector error predictio...

Embodiment 2

[0079] As shown in Figure 1, the human body motion recognition method provided by this embodiment converts the human body motion recognition result into feature information, namely 3D key point information, and includes the following steps:

[0080] S1: Monitor, in real time, the three-axis motion velocity, three-axis angular velocity and three-axis acceleration of the human joints in the human coordinate system, and construct a calculation model for the next-moment state variable prediction matrix $X_{k+1|k}$;

[0081] S2: Construct a calculation model for the next-moment state vector error prediction value $P_{k+1|k}$;

[0082] S3: Monitor the three-dimensional motion coordinate values of human joints in the human coordinate system in real time, and construct a fusion threshold calculation model;

[0083] S4: According to the next-moment state variable prediction matrix $X_{k+1|k}$ constructed in step S1 and the state vector error predictio...

Embodiment 3

[0115] On the basis of Embodiment 2, as a preferred embodiment of the present invention, the fusion threshold condition in step S4 is $0.01 < D(X_i, X_j) < 0.3$; if $D(X_i, X_j) \geq 0.3$, steps S1-S4 are repeated to ensure smooth fusion of the human body motion parameters at each moment, so that the output human body motion state parameters at the i-th moment and at the j-th moment are fused smoothly and without interruption, forming accurate human motion state data from the i-th moment to the j-th moment;
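The decision rule in [0115] can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes the fusion window is the open interval $0.01 < D(X_i, X_j) < 0.3$ and that $D \geq 0.3$ triggers a rerun of steps S1-S4. How a value at or below 0.01 is handled is not stated in the visible text, so the sketch reports it as unspecified. The names `FusionDecision` and `fusion_decision` are hypothetical.

```python
from enum import Enum

LOWER, UPPER = 0.01, 0.3  # bounds taken from [0115]; strictness is an assumption


class FusionDecision(Enum):
    FUSE = "fuse"                # D inside the fusion threshold window
    REPEAT_S1_S4 = "repeat"      # D >= upper bound: rerun steps S1-S4
    UNSPECIFIED = "unspecified"  # D <= lower bound: not covered by the visible text


def fusion_decision(d: float) -> FusionDecision:
    """Classify a fusion threshold value D(X_i, X_j) against the 0.01 / 0.3 bounds."""
    if LOWER < d < UPPER:
        return FusionDecision.FUSE
    if d >= UPPER:
        return FusionDecision.REPEAT_S1_S4
    return FusionDecision.UNSPECIFIED


print(fusion_decision(0.05))  # FusionDecision.FUSE
```

Returning an explicit "unspecified" value, rather than silently folding the low case into one of the other branches, keeps the gap in the source visible to the caller.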

[0116] The fusion threshold $D(X_i, X_j)$ is calculated as follows:

[0117]

[0118] where $\tau_q$ is the weight coefficient of the q-th joint across all state variable matrices from the i-th state variable matrix $X_i$ to the j-th state variable matrix $X_j$; $|X_i|$ is the rank of the state variable matrix $X_i$ at the i-th moment; and $|X_j|$ is the rank of the state variable matrix $X_j$ at the j-th moment.
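Note that $|X|$ here denotes the matrix rank, not the determinant; rank is also defined for non-square state matrices such as a joints-by-state layout. The formula in [0117] that combines $\tau_q$ with the ranks is not recoverable from this page, so the sketch below only computes the rank term with NumPy's `numpy.linalg.matrix_rank`. The 9-by-3 matrix layout and the helper name `state_matrix_rank` are hypothetical.

```python
import numpy as np


def state_matrix_rank(X: np.ndarray) -> int:
    """|X| in [0118]: the rank of a state variable matrix (not its determinant)."""
    return int(np.linalg.matrix_rank(X))


# Hypothetical 9x3 state matrix (9 state rows, 3 joint columns) in which
# joint 2 has exactly the same state as joint 0, so only two columns are independent.
X_i = np.zeros((9, 3))
X_i[0, 0] = X_i[0, 2] = 1.0
X_i[1, 1] = 0.5
print(state_matrix_rank(X_i))  # 2
```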

[0119] As a preferred embodiment of the present invention...

© 2025 PatSnap. All rights reserved.