Method for detecting false information based on a co-attention multi-modal fusion mechanism

A false information detection method using multi-modal technology, applied in computer parts, character and pattern recognition, biological neural network models, etc. It addresses problems such as loss and the failure to consider the impact of images on false information classification, and it achieves the effect of enhancing robustness and overcoming those limitations.

Embodiment Construction

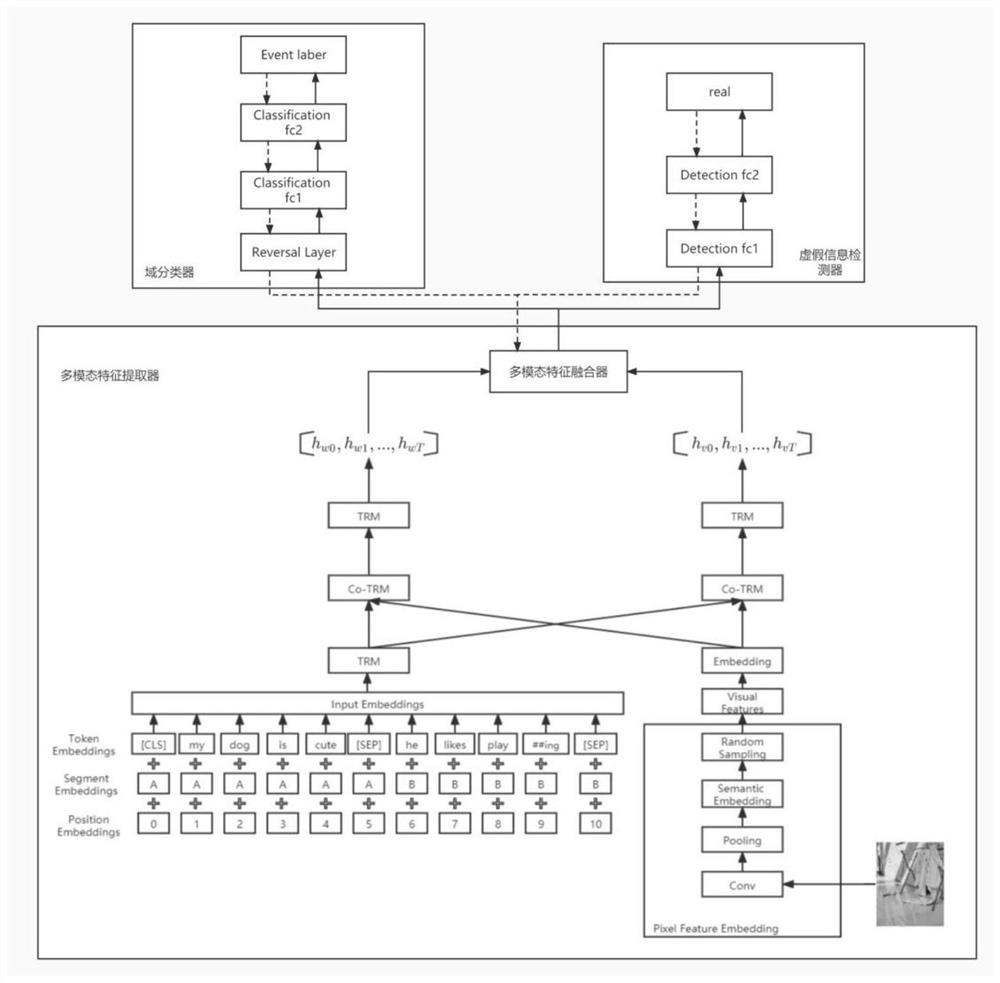

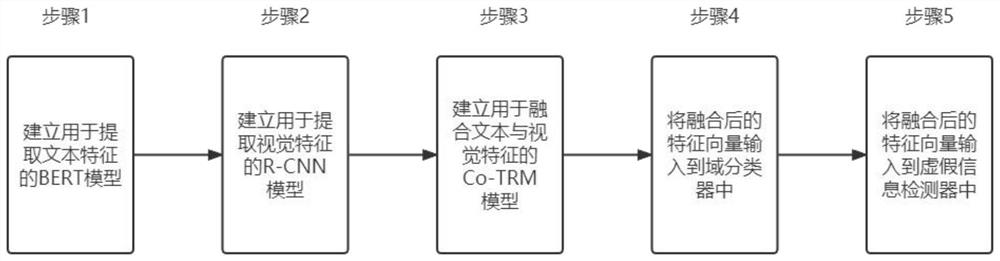

[0032] Referring to Figure 1 and Figure 2, this example provides a method of the present invention for detecting false information based on a co-attention multi-modal fusion mechanism, comprising the following steps:

[0033] Step S1. Build a BERT model for extracting text features;

[0034] Step S2. Build an R-CNN model for extracting visual features;

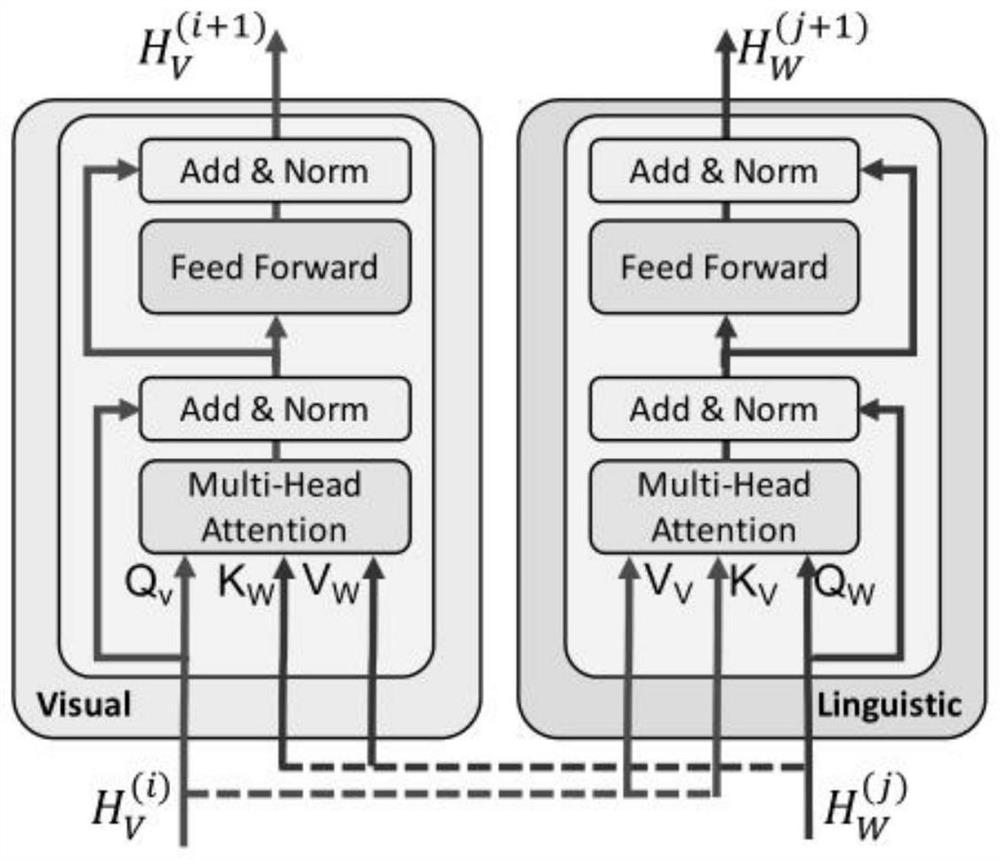

[0035] Step S3. Build a co-attention transformer layer (Co-TRM) model that fuses the text and visual features;

[0036] Step S4. Input the fused feature vector into the domain classifier, which maps the multimodal features of different information to the same feature space, classifies the text into different events, and removes event-specific features;

[0037] Step S5. Input the fused feature vector into the false information detector, which uses the latent multimodal features to judge the authenticity of the information.
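The fusion in Step S3 can be illustrated with a minimal sketch of co-attention, in which text tokens attend to image regions and image regions attend to text tokens. This is not the patent's Co-TRM layer: it assumes plain scaled dot-product attention with no learned projections, no multiple heads, and no residual or feed-forward sublayers, and it assumes the two modalities already share one feature dimension. The random vectors stand in for BERT and R-CNN outputs, and all function names (`cross_attention`, `co_attention`, `fuse`) and the mean-pool-and-concatenate fusion are hypothetical choices made for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*a)]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys_values):
    """Scaled dot-product attention: queries come from one modality,
    keys and values from the other (assumption: no learned projections)."""
    dim = len(queries[0])
    scores = matmul(queries, transpose(keys_values))
    weights = [softmax([s / math.sqrt(dim) for s in row]) for row in scores]
    return matmul(weights, keys_values), weights


def co_attention(text, image):
    """Run attention in both directions between the two modalities."""
    text_out, text_w = cross_attention(text, image)    # text attends to image
    image_out, image_w = cross_attention(image, text)  # image attends to text
    return text_out, image_out, text_w, image_w


def mean_pool(rows):
    """Average a list of vectors into a single vector."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def fuse(text, image):
    """Hypothetical fusion: pool each attended sequence, then concatenate."""
    text_out, image_out, _, _ = co_attention(text, image)
    return mean_pool(text_out) + mean_pool(image_out)


random.seed(0)
DIM = 4  # assumed shared feature size for both modalities
# Stand-ins for Step S1 (5 token vectors) and Step S2 (3 region vectors).
text_feats = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(5)]
image_feats = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(3)]

fused = fuse(text_feats, image_feats)
print(len(fused))  # length of the fused vector, 2 * DIM
```

In this sketch, `fused` plays the role of the fused feature vector that Steps S4 and S5 pass to the domain classifier and the false information detector. A real implementation would likely add learned query, key, and value projections and stack several such layers.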

[0038] Step S1 specifically includes: in order to better extract text features and effect...
