Human hand trajectory prediction and intention recognition method based on multi-feature fusion

A multi-feature fusion and trajectory prediction technology, applied in the field of visual recognition, that addresses problems such as complex working environments, long hand travel distances, and unstable efficiency.



Embodiment Construction

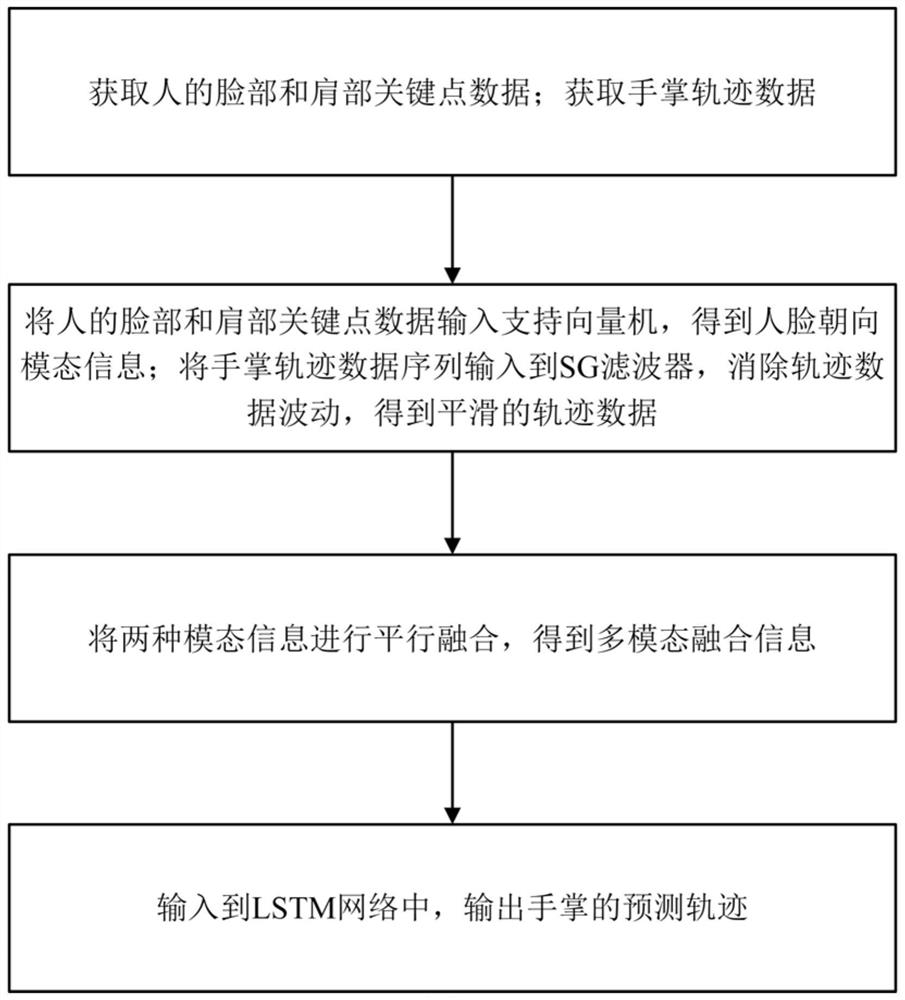

[0028] Figure 1 is a schematic flowchart of the method of the present invention. The multi-feature fusion-based method for human hand trajectory prediction and intention recognition provided by the present invention includes the following steps:

[0029] S1. Obtain key point data of the person's face and shoulders, and obtain palm trajectory data;

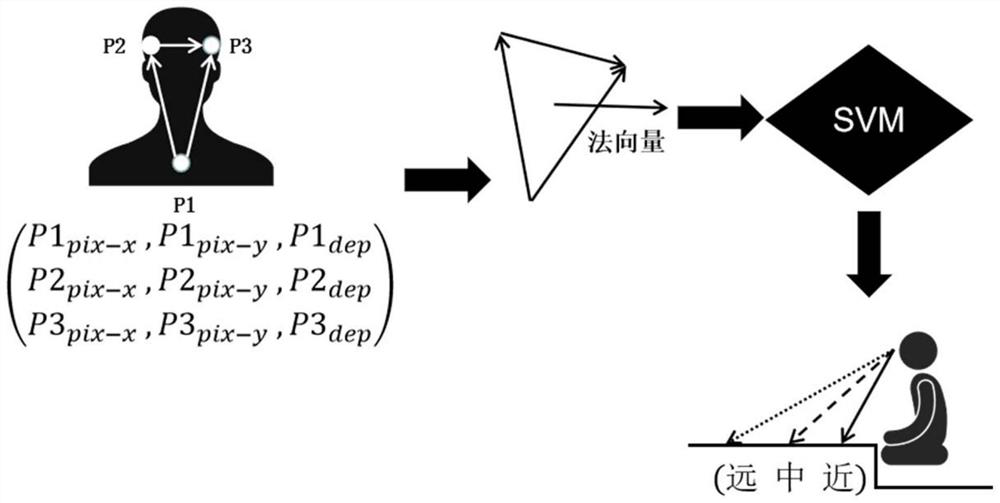

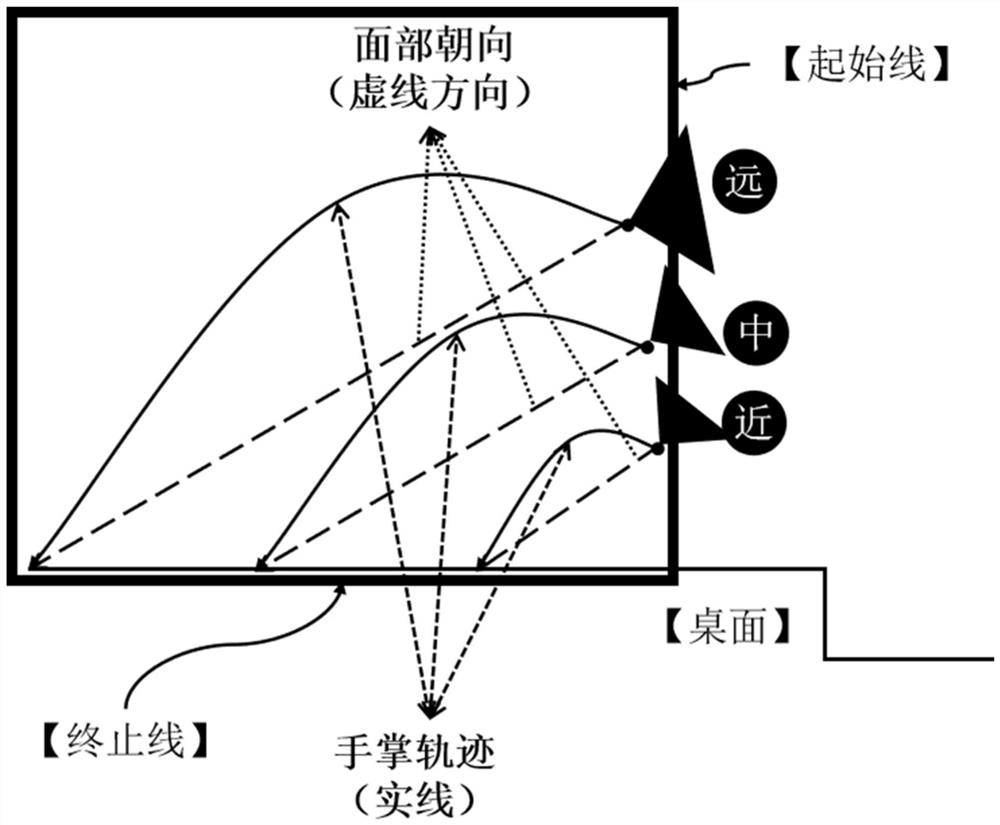

[0030] S2. Input the face and shoulder key point data into a support vector machine (SVM) to obtain the face orientation modal information; input the palm trajectory data sequence into a Savitzky-Golay filter (SG filter) to eliminate fluctuations in the trajectory data and obtain smooth trajectory data;

[0031] S3. Perform parallel fusion of the information from the two modalities to obtain multi-modal fusion information;

[0032] S4. Input the multi-modal fusion information into the LSTM (Long Short-Term Memory) network, and output the predicted trajectory of the palm.
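The data flow of steps S2 to S4 can be illustrated with a minimal sketch. It is not the implementation of the invention, and it rests on several assumptions that do not come from the text: the palm trajectory is taken as 2-D coordinates, the face orientation from the SVM is taken as one integer label per frame (the SVM itself is not shown), the SG filter uses a 5-point quadratic window with repeated edge samples, "parallel fusion" is read as per-frame concatenation of the two modalities, and the LSTM uses a hidden size of 4 with random, untrained weights. All function names (`sg_smooth`, `fuse`, `lstm_step`, `predict_next`) are hypothetical.

```python
import math
import random

# Standard 5-point quadratic Savitzky-Golay smoothing coefficients.
SG_COEFFS = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def sg_smooth(values):
    """Smooth a 1-D sequence; edges are padded by repeating end samples (assumption)."""
    half = len(SG_COEFFS) // 2
    padded = [values[0]] * half + list(values) + [values[-1]] * half
    return [
        sum(c * padded[i + k] for k, c in enumerate(SG_COEFFS))
        for i in range(len(values))
    ]


def fuse(orientation, trajectory):
    """Concatenate the per-frame orientation label with the palm position (assumption)."""
    return [[float(o), *p] for o, p in zip(orientation, trajectory)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h, c, W, b):
    """One LSTM step; W maps the concatenation [x; h] to stacked gates (i, f, g, o)."""
    n = len(h)
    z = x + h
    pre = [sum(w * v for w, v in zip(row, z)) + bias for row, bias in zip(W, b)]
    i = [sigmoid(v) for v in pre[0:n]]             # input gate
    f = [sigmoid(v) for v in pre[n:2 * n]]         # forget gate
    g = [math.tanh(v) for v in pre[2 * n:3 * n]]   # candidate cell state
    o = [sigmoid(v) for v in pre[3 * n:4 * n]]     # output gate
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def predict_next(fused, hidden=4, seed=0):
    """Run the fused sequence through an untrained LSTM and read out a 2-D point."""
    rng = random.Random(seed)
    d = len(fused[0])
    W = [[rng.uniform(-0.1, 0.1) for _ in range(d + hidden)]
         for _ in range(4 * hidden)]
    b = [0.0] * (4 * hidden)
    W_out = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(2)]
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in fused:
        h, c = lstm_step(x, h, c, W, b)
    return [sum(w * v for w, v in zip(row, h)) for row in W_out]


# Made-up sample data: noisy palm coordinates and per-frame orientation labels.
xs = [0.0, 1.1, 1.9, 3.2, 3.9, 5.1]
ys = [0.0, 0.4, 1.1, 1.4, 2.1, 2.4]
orientation = [1, 1, 1, 0, 0, 0]

smooth = list(zip(sg_smooth(xs), sg_smooth(ys)))   # S2: smoothed trajectory
fused = fuse(orientation, smooth)                  # S3: multi-modal fusion
prediction = predict_next(fused)                   # S4: LSTM forward pass
```

Because the weights are random, `prediction` only demonstrates the shape of the data flow. A usable predictor would require training the LSTM and the readout layer on recorded trajectories.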

[0033] Figure 2 is a schematic diagram of the position of key points in the metho...
