Motion video key frame extraction method with feature fusion based on human body posture recognition

A technology in the field of motion video key frame extraction using fused features. It addresses the large amount of image information in motion video and the resulting burden on information transmission and computation, reducing the amount of computation while avoiding missed detections and false detections.

Specific Embodiment

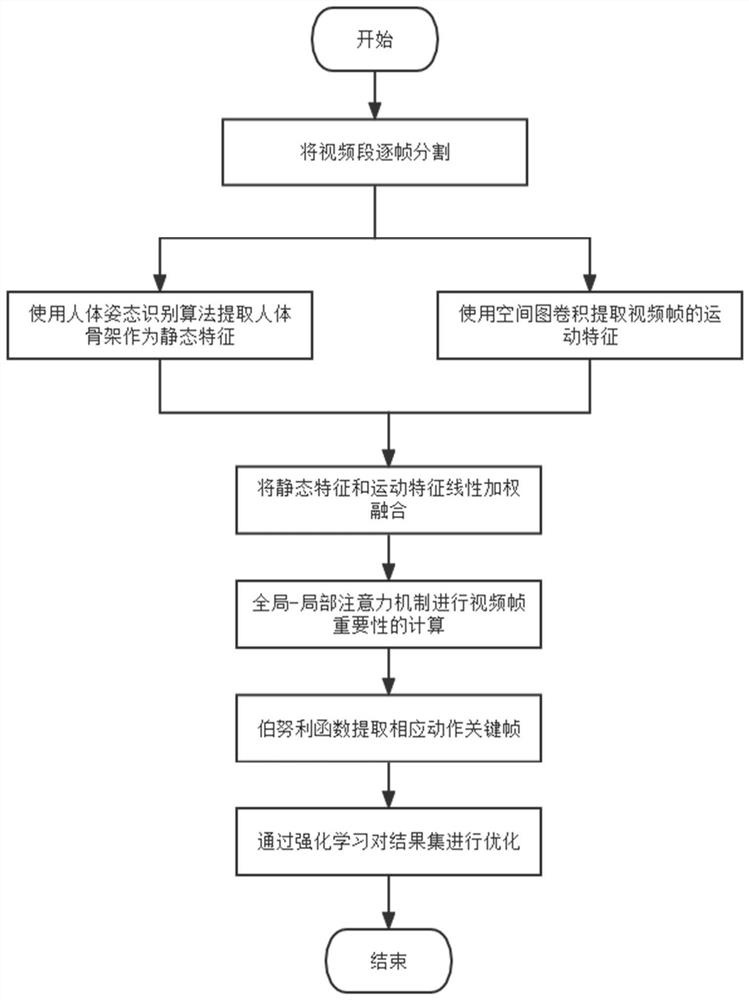

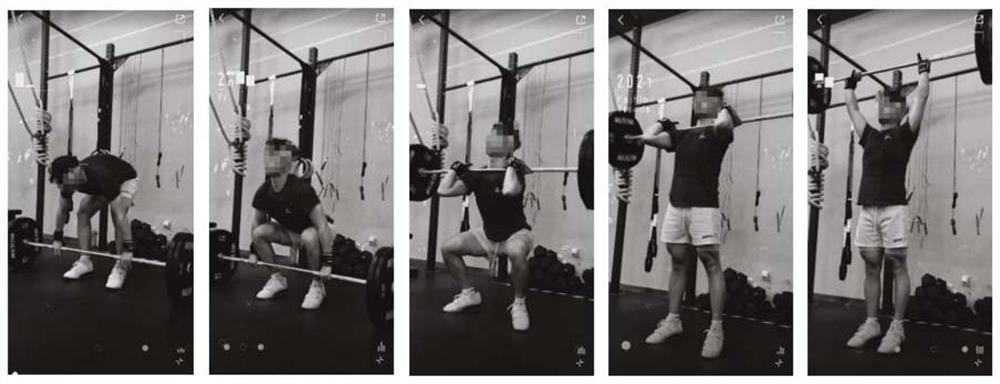

[0040] The present invention provides a method for extracting key frames from motion video using fused features. As shown in Figure 1, the method fuses the static features extracted by a lightweight human posture recognition algorithm with the motion features extracted by spatial graph convolution, improving the accuracy and completeness of key frame detection. The specific steps are as follows:

[0041] (1) The target video segment is split frame by frame into a sequence of video frames.

[0042] (2) To better preserve the original information in the input image and reduce information loss, the residual network ResNet50 is used for static feature extraction, with the feature dimension reduced to 256. The static features of the resulting video frames are represented as $S_s = [S_{s1}, S_{s2}, \ldots, S_{sT}]$, where $T$ is the number of frames.

[0043] (3) The human body is abstracted as skeleton data in three-dimensional space, and the lightweight HRNe...
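The excerpt gives no formulas for steps (2) and (3) or for the fusion described in [0040], so what follows is only a minimal pure-Python sketch under stated assumptions, not the patented implementation. The ResNet50 backbone and the HRNet pose estimator are not implemented; their outputs are stubbed with random numbers. The sketch assumes that the 2048-dimensional pooled ResNet50 output is reduced to 256 dimensions by a linear projection, that the spatial graph convolution takes the common form $\mathrm{ReLU}(\hat{A} X W)$ with $\hat{A} = D^{-1/2}(A + I)D^{-1/2}$ over a graph of 3D joints, that joint outputs are averaged into one motion vector per frame, and that fusion is plain per-frame concatenation. The five-joint skeleton, the weight matrices, and all function names are hypothetical.

```python
import math
import random

# ResNet50's global-average-pooled output is 2048-d; the text reduces it to 256-d.
# ASSUMPTION: the reduction is a linear projection (the excerpt does not say how).
RESNET50_POOLED_DIM = 2048
STATIC_DIM = 256


def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def reduce_static_features(pooled, weight):
    """Project T x 2048 pooled features to T x 256, i.e. S_s = [S_s1, ..., S_sT].

    weight: 2048 x 256 matrix (ASSUMED learned linear layer).
    """
    return matmul(pooled, weight)


def normalized_adjacency(num_joints, bones):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected skeleton graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in bones:  # each bone links two joint indices
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
            for i in range(num_joints)]


def spatial_graph_conv(joints, a_hat, weight):
    """One spatial graph-convolution layer ReLU(A_hat X W) on one frame.

    joints: J x 3 matrix of 3D joint coordinates; weight: 3 x C.
    """
    h = matmul(matmul(a_hat, joints), weight)
    return [[max(0.0, v) for v in row] for row in h]


def motion_feature(joints, a_hat, weight):
    """Average graph-conv outputs over joints: one C-d vector per frame (ASSUMED pooling)."""
    h = spatial_graph_conv(joints, a_hat, weight)
    return [sum(col) / len(h) for col in zip(*h)]


def fuse(static_seq, motion_seq):
    """Fuse per-frame static and motion features by concatenation (ASSUMED)."""
    return [s + m for s, m in zip(static_seq, motion_seq)]


# Toy demo with stubbed backbone/pose outputs (all values random, hypothetical).
rng = random.Random(0)
T, J, C = 2, 5, 16
bones = [(0, 1), (0, 2), (0, 3), (0, 4)]  # hypothetical star skeleton: torso + 4 limbs
pooled = [[rng.random() for _ in range(RESNET50_POOLED_DIM)] for _ in range(T)]
w_static = [[rng.gauss(0, 0.02) for _ in range(STATIC_DIM)]
            for _ in range(RESNET50_POOLED_DIM)]
skeletons = [[[rng.random() for _ in range(3)] for _ in range(J)] for _ in range(T)]
w_graph = [[rng.gauss(0, 1) for _ in range(C)] for _ in range(3)]

s_s = reduce_static_features(pooled, w_static)
a_hat = normalized_adjacency(J, bones)
s_m = [motion_feature(x, a_hat, w_graph) for x in skeletons]
fused = fuse(s_s, s_m)
print(len(fused), len(fused[0]))  # 2 272
```

Each fused vector has 256 static plus 16 motion values. How the fused sequence is then turned into key frame decisions is not covered by this excerpt, so the sketch stops at fusion.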
