Apparatus and methods for haptic rendering using a haptic camera view

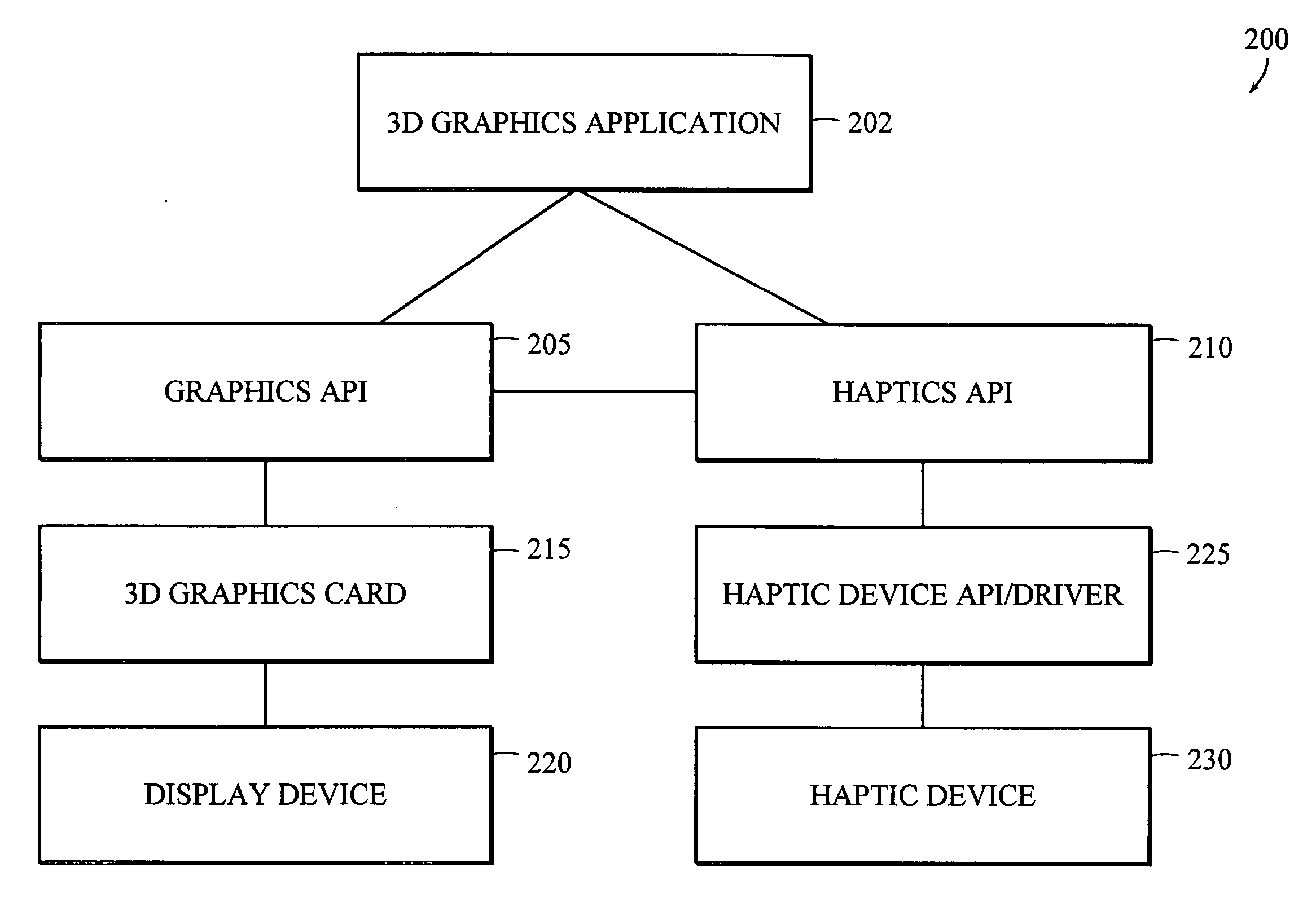

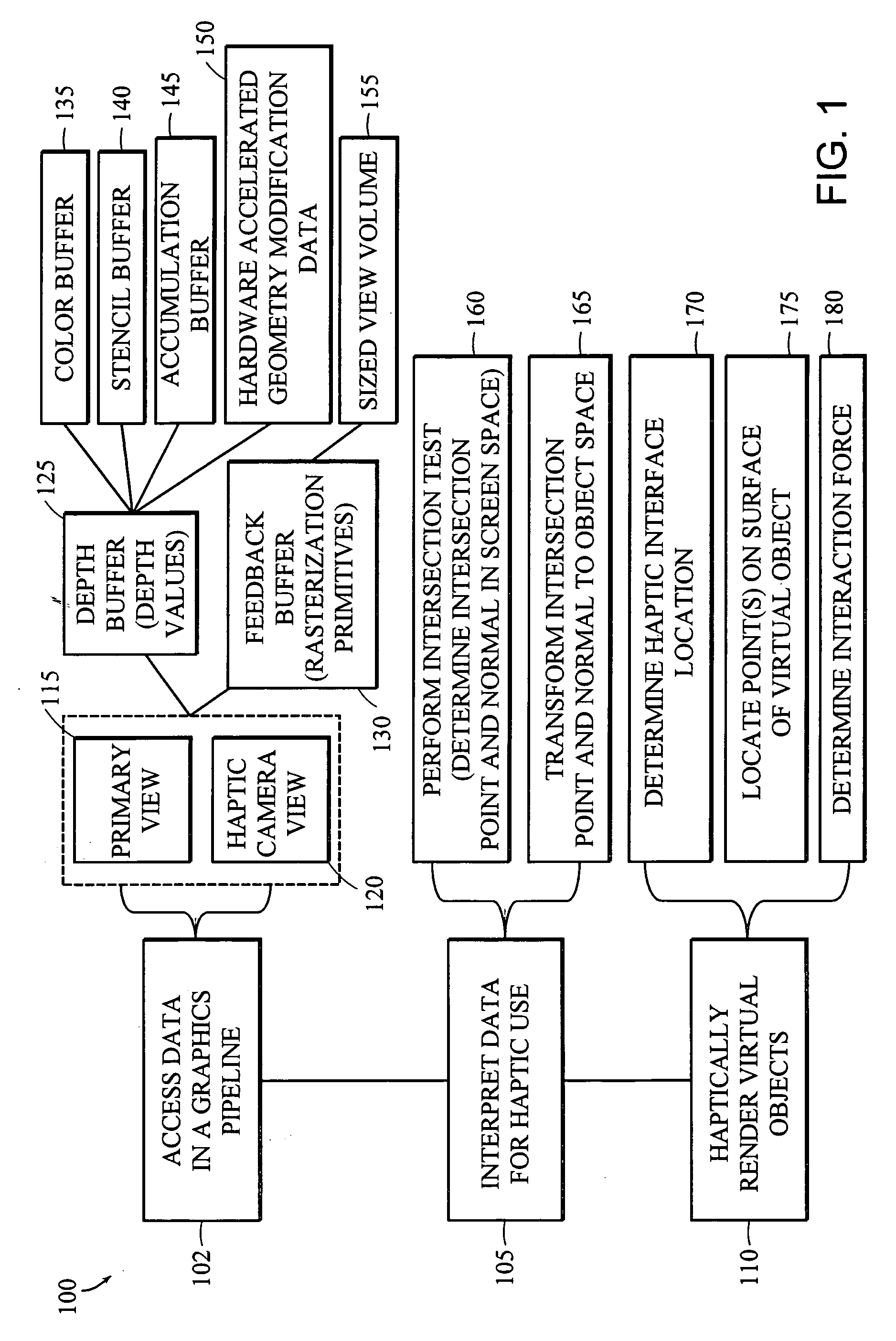

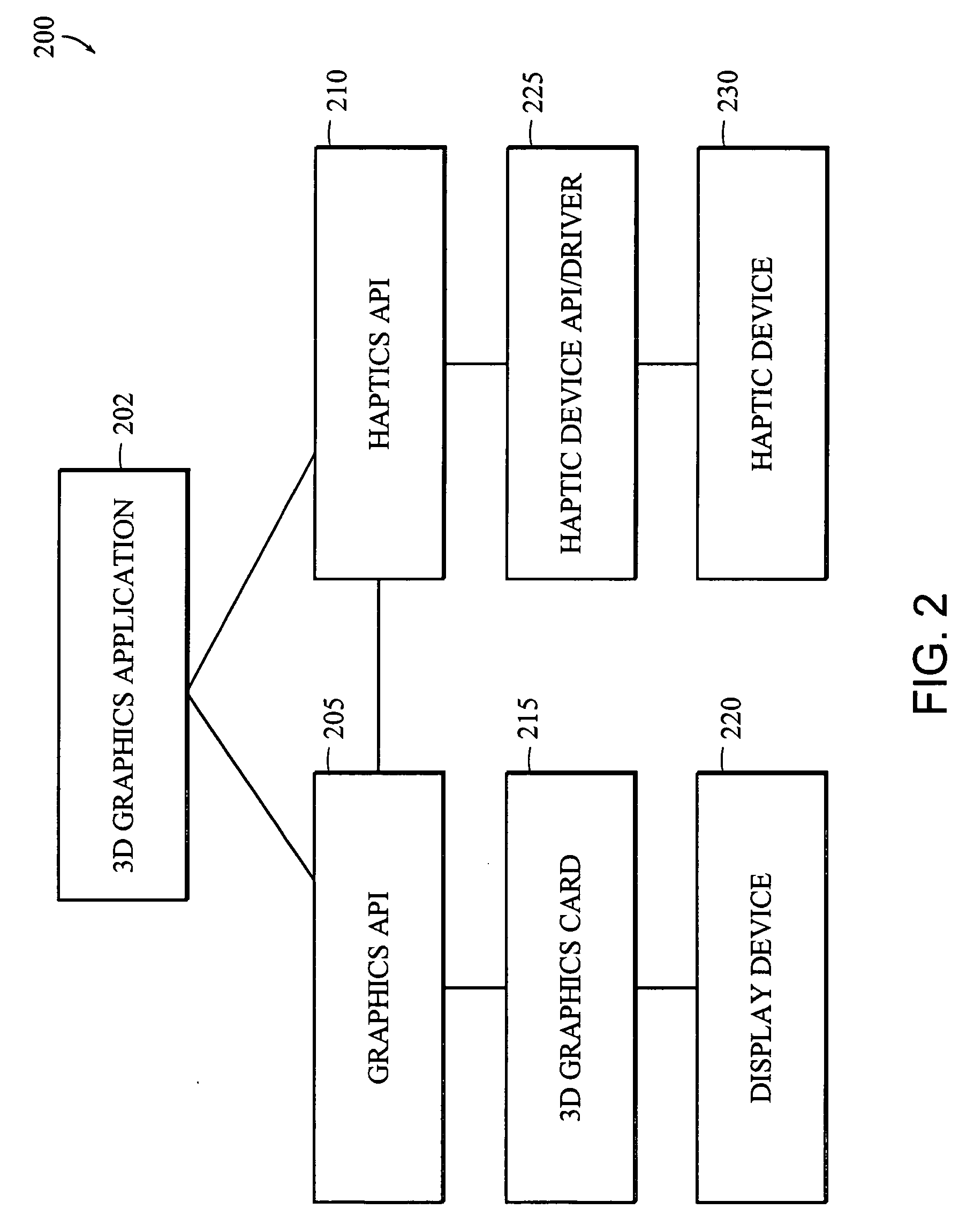

Camera-view and haptic-rendering technology, applied in the field of haptic rendering of virtual environments, can address several problems: the haptic rendering process is generally computation-intensive, 3D graphics applications are incompatible with haptic systems, and haptic rendering of 3D objects in virtual environments is relatively inefficient.

Embodiment Construction

[0043] Throughout the description, where an apparatus is described as having, including, or comprising specific components, or where systems, processes, and methods are described as having, including, or comprising specific steps, it is contemplated that, additionally, there are apparatuses of the present invention that consist essentially of, or consist of, the recited components, and that there are systems, processes, and methods of the present invention that consist essentially of, or consist of, the recited steps.

[0044] It should be understood that the order of steps or order for performing certain actions is immaterial so long as the invention remains operable. Moreover, two or more steps or actions may be conducted simultaneously.

[0045] A computer hardware apparatus may be used in carrying out any of the methods described herein. The apparatus may include, for example, a general purpose computer, an embedded computer, a laptop or desktop computer, or any other type of computer ...
