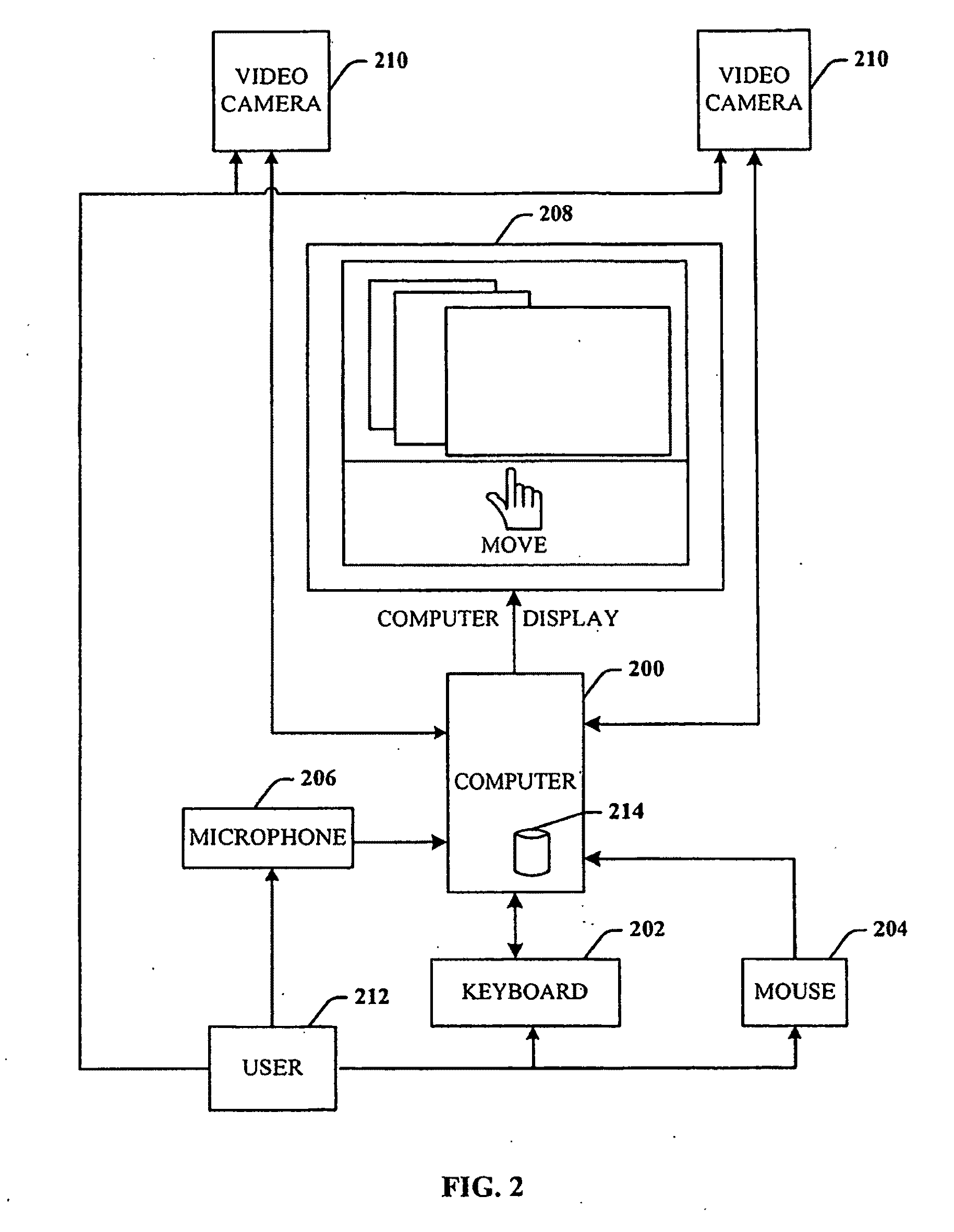

There are, however, many applications where the traditional user interface is less practical or efficient.

The traditional computer interface is not ideal for a number of applications.

Manipulation of the presentation by the presenter is generally controlled through use of awkward remote controls, which frequently suffer from inconsistent and less precise operation, or require the cooperation of another individual.

Switching between sources, fast-forwarding, rewinding, changing chapters, changing volume, etc., can be very cumbersome in a professional studio as well as in the home.

Similarly, traditional interfaces are not well suited for smaller, specialized electronic gadgets.

Additionally, people with motion impairment conditions find it very challenging to cope with traditional user interfaces and computer access systems.

These conditions and disorders are often accompanied by tremors, spasms, loss of coordination, restricted range of movement, reduced muscle strength, and other motion impairing symptoms.

As people age, their motor skills decline, which impacts their ability to perform many tasks.

It is known that as people age, their cognitive, perceptual and motor skills decline, with negative effects on their ability to perform many tasks.

The requirement to position a cursor, particularly with smaller graphical presentations, can often be a significant barrier for elderly or afflicted computer users.

However, at the same time, this shift in the user interaction from a primarily text-oriented experience to a point-and-click experience has erected new barriers between people with disabilities and the computer.

For example, for older adults, there is evidence that using the mouse can be quite challenging.

It has been shown that even experienced older computer users move a cursor much more slowly and less accurately than their younger counterparts.

In addition, older adults seem to have increased difficulty (as compared to younger users) when targets become smaller.

For older computer users, positioning a cursor can be a severe limitation.

One solution to the problem of decreased ability to position the cursor with a mouse is simply to increase the size of the targets in computer displays. This can often be counter-productive, however, since less information is displayed at once and more navigation is required.

Previous studies indicate that users find gesture-based systems highly desirable, but that users are also dissatisfied with the recognition accuracy of gesture recognizers.

Furthermore, experimental results indicate that a user's difficulty with gestures is in part due to a lack of understanding of how gesture recognition works.

However, examples of perceptual user interfaces to date are dependent on significant limiting assumptions.

Proper operation of the system depends on proper lighting conditions and can be negatively impacted when the system is moved from one location to another, or simply when the lighting in the room changes.

The use of such devices is generally found to be distracting and intrusive for the user.

Thus perceptual user interfaces have been slow to emerge.

The reasons include heavy computational burdens, unreasonable calibration demands, required use of intrusive and distracting devices, and a general lack of robustness outside of specific laboratory conditions.

For these and similar reasons, there has been little advancement in systems and methods for exploiting perceptual user interfaces.

However, as the trend towards smaller, specialized electronic gadgets continues to grow, so does the need for alternate methods for interaction between the user and the electronic device.

Many of these specialized devices are too small, and their applications too unsophisticated, to utilize traditional keyboard and mouse input devices.
