Four-dimensional polynomial model for depth estimation based on two-picture matching

A two-picture matching and depth estimation technology, applied in the field of camera depth estimation. It addresses the problems that passive systems make poor focusing decisions in low contrast or low …, and that many focusing systems perform poorly when the subject is present, and it achieves accurate estimation of subject distance …

Examples

embodiment 10

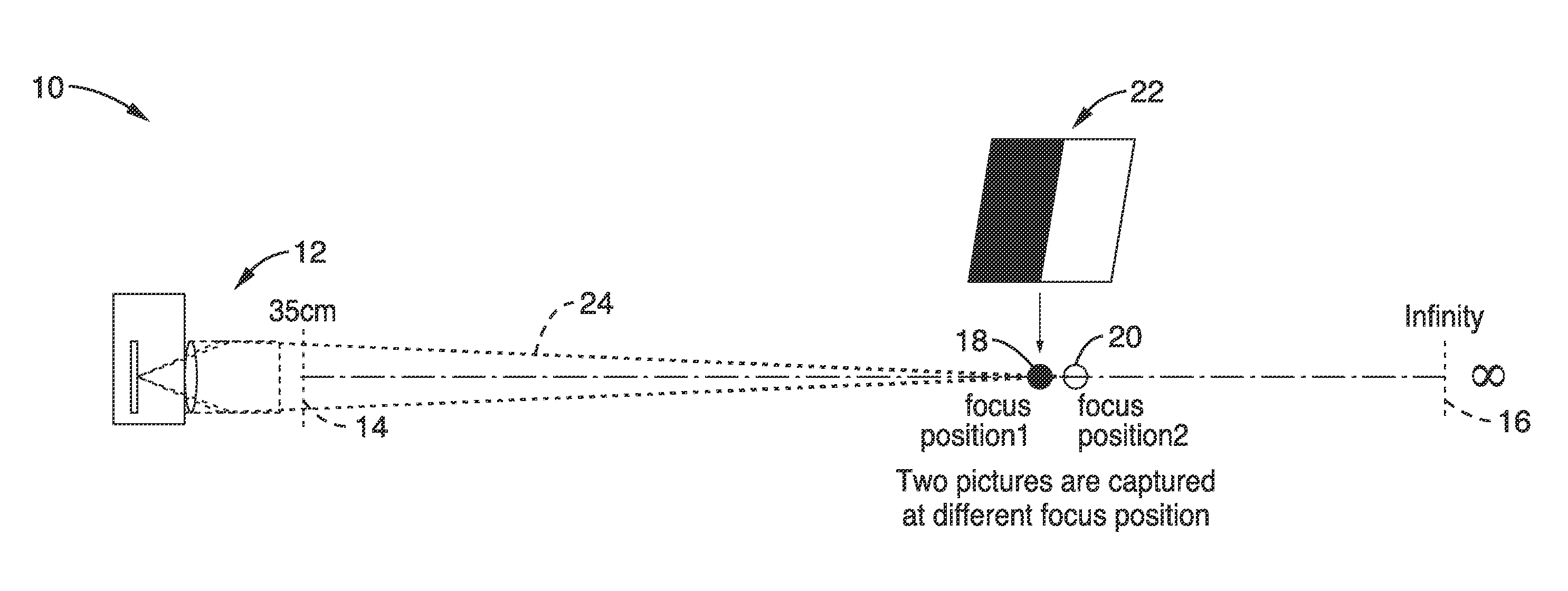

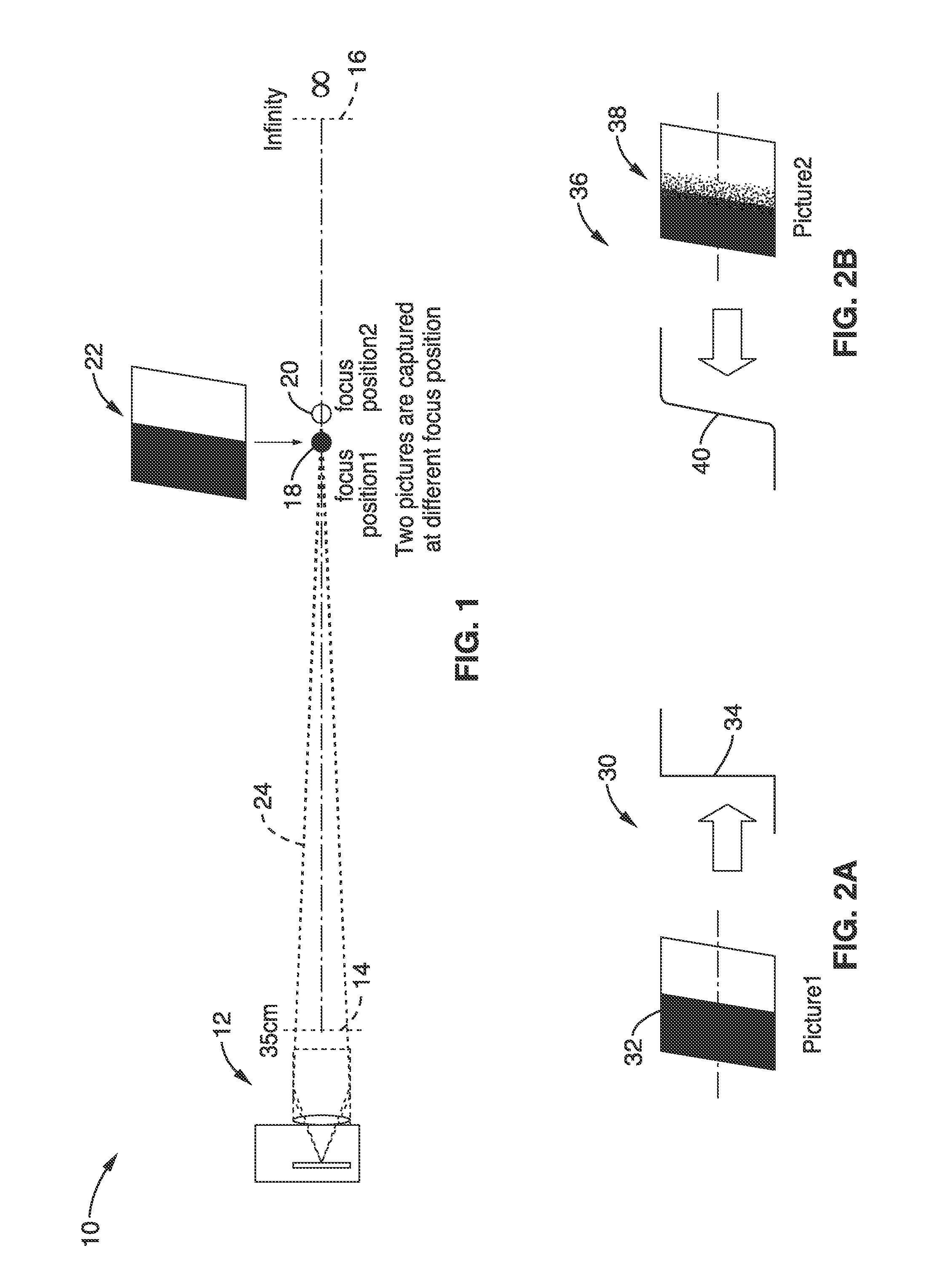

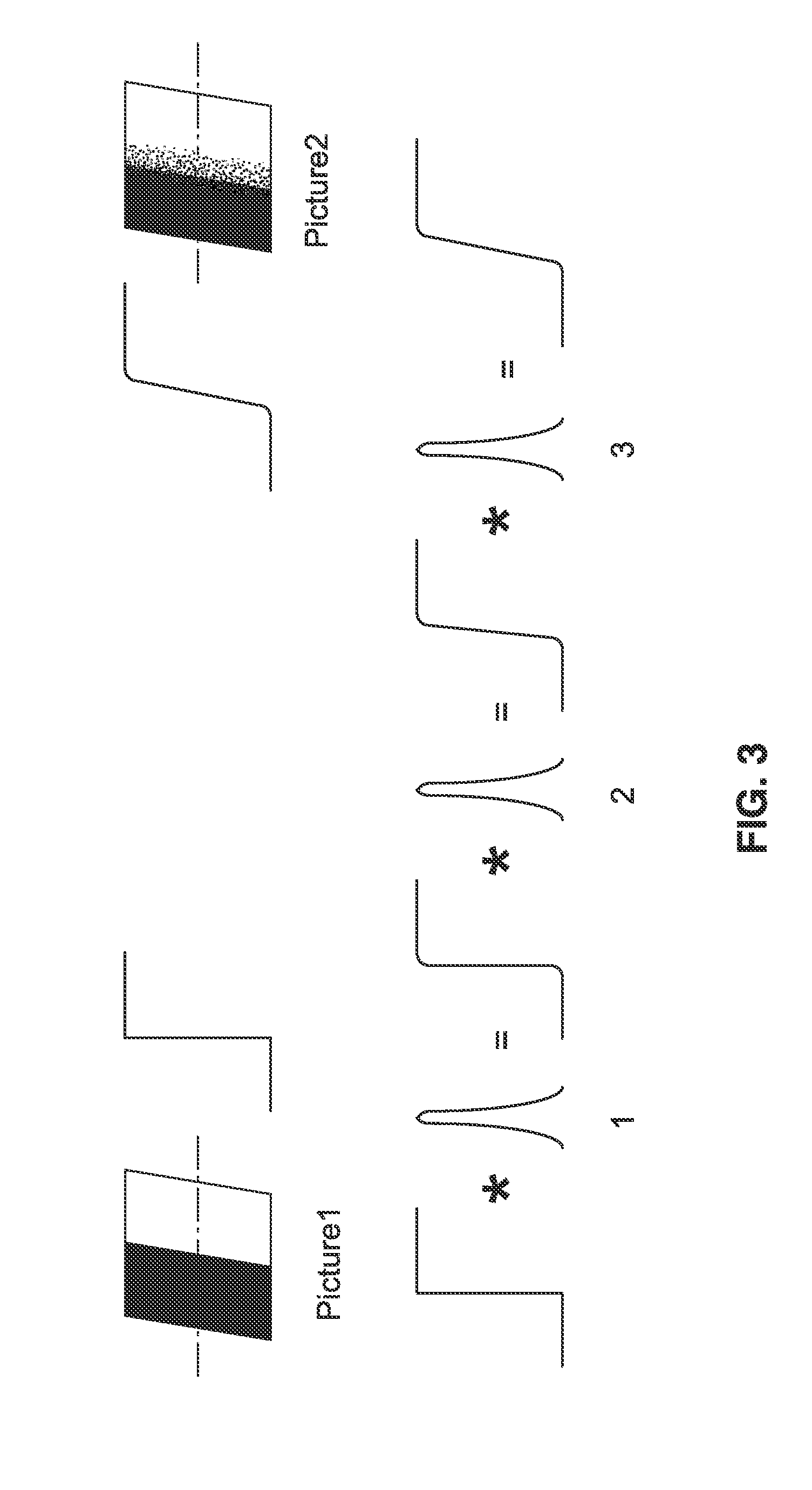

[0065]FIG. 1 illustrates an embodiment 10 of capturing images in the process of creating a set of matching curves to characterize a given camera-lens system, hereafter referred to simply as a camera. When collecting a data set for a given imaging apparatus (e.g., a specific embodiment, make or model of camera, or a family of cameras using the same or similar optical imaging elements), multiple images of a calibration target (or calibration subject) are captured at different focus positions (subject distances). Collecting the data set comprises a characterization process for the camera-lens system at a given magnification setting (e.g., the lens at a fixed focal length or “zoom” setting). An imaging device (camera) 12 is shown which can focus from a minimum focal distance 14 (in this case 35 cm) out to infinity 16. According to the invention, the focus converges to a first focus position 18 and then to a second focus po...
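To make the idea of a matching curve concrete, the characterization sweep can be imitated with a toy simulation. This is a hypothetical sketch, not the patent's calibration procedure: it assumes a 3x3 binomial blur kernel `K`, a made-up defocus model in which a capture at focus position `p` is the target blurred `(p - in_focus)**2` times by `K`, a squared-error match criterion, and the sign convention from claim 7 (positive when the first image of a pair is sharper). The names `simulate_capture`, `signed_blur_difference`, and `matching_curve` are illustrative only.

```python
import numpy as np

# Hypothetical blur kernel K: normalized 3x3 binomial, roughly Gaussian.
K = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0


def convolve_k(img):
    """One convolution with K, replicating edge pixels at the borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += K[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def simulate_capture(target, focus_pos, in_focus):
    """Toy defocus model (assumption): blur the target (focus_pos - in_focus)**2 times."""
    img = target.astype(np.float64)
    for _ in range((focus_pos - in_focus) ** 2):
        img = convolve_k(img)
    return img


def iterations_to_match(src, dst, max_iter=30):
    """Number of K-convolutions of src that best matches dst (squared error)."""
    cur, best_i, best_err = src, 0, np.sum((src - dst) ** 2)
    for i in range(1, max_iter + 1):
        cur = convolve_k(cur)
        err = np.sum((cur - dst) ** 2)
        if err < best_err:
            best_i, best_err = i, err
    return best_i


def signed_blur_difference(f_a, f_b):
    """Positive if f_a is sharper, negative if f_b is sharper."""
    i1 = iterations_to_match(f_a, f_b)
    i2 = iterations_to_match(f_b, f_a)
    return i1 if i1 > i2 else -i2


def matching_curve(target, positions, in_focus):
    """Blur difference between captures at each pair of consecutive focus positions."""
    imgs = [simulate_capture(target, p, in_focus) for p in positions]
    return [signed_blur_difference(a, b) for a, b in zip(imgs, imgs[1:])]


target = np.random.default_rng(0).random((24, 24))
print(matching_curve(target, list(range(7)), in_focus=3))  # [-5, -3, -1, 1, 3, 5]
```

Under this toy model the curve is exactly linear, 2(p - in_focus) + 1, because repeated blurs with `K` compose additively and consecutive squared distances differ by (d + 1)^2 - d^2 = 2d + 1. Curves measured on a real camera-lens system would be expected to be noisier and not exactly linear.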

embodiment 1

[0132]2. An apparatus as recited in embodiment 1, wherein said multiple object images comprise at least two images captured at different focus positions using an identical aperture setting and focal length.

[0133]3. An apparatus as recited in embodiment 1, further comprising programming executable on said computer processor for automatically adjusting focus of said apparatus in response to said estimation of subject distance.

[0134]4. An apparatus as recited in embodiment 1, wherein during said compensating for motion at least one block from the first image is located as a fit within the second image.

[0135]5. An apparatus as recited in embodiment 1, wherein said compensating for motion is configured for being performed in response to one or more convolutions by a blur kernel to determine blur difference.

[0136]6. An apparatus as recited in embodiment 1, wherein said compensating for motion is performed according to,

$$(\hat{x}_v, \hat{y}_v) = \underset{(x_v, y_v)}{\arg\min} \left\| f_i(x, y) - f_j(x - x_v, y - y_v) \right\|$$

in which two images $f_i$ and ...
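The motion-compensation search of claim 6 (and the block fit of claim 4) can be sketched as an exhaustive block-matching loop. This is a minimal sketch under stated assumptions, not the patent's implementation: the norm is taken as the sum of absolute differences, the search window is a hypothetical square of ±`max_shift` pixels, and only the central region that stays inside $f_j$ for every candidate shift is compared. The function name `estimate_motion` is illustrative.

```python
import numpy as np


def estimate_motion(f_i, f_j, max_shift=4):
    """Exhaustive search for (x_v, y_v) = argmin || f_i(x, y) - f_j(x - x_v, y - y_v) ||.

    Hypothetical choices: sum of absolute differences as the norm, a square
    search window of +/- max_shift pixels, and comparison restricted to the
    central region that stays inside f_j for every candidate shift.
    """
    h, w = f_i.shape
    m = max_shift
    if h <= 2 * m or w <= 2 * m:
        raise ValueError("image too small for the search window")
    core_i = f_i[m:h - m, m:w - m].astype(np.float64)
    best, best_cost = (0, 0), np.inf
    for y_v in range(-m, m + 1):
        for x_v in range(-m, m + 1):
            # Rows are indexed by y and columns by x, so this slice is f_j(x - x_v, y - y_v).
            shifted = f_j[m - y_v:h - m - y_v, m - x_v:w - m - x_v].astype(np.float64)
            cost = np.abs(core_i - shifted).sum()
            if cost < best_cost:
                best, best_cost = (x_v, y_v), cost
    return best


rng = np.random.default_rng(0)
f_j = rng.random((32, 32))
# f_i(x, y) = f_j(x - 2, y + 1), i.e. a true motion of (x_v, y_v) = (2, -1).
f_i = np.roll(f_j, shift=(-1, 2), axis=(0, 1))
print(estimate_motion(f_i, f_j))  # (2, -1)
```

An exhaustive search is the simplest correct choice for a small window; for larger motions a coarse-to-fine or correlation-based search would reduce cost, at the price of more complexity.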

embodiment 6

[0137]7. An apparatus as recited in embodiment 6, wherein the blur difference is determined in response to whether image $f_i$ or $f_j$ is sharper, and is determined in response to

$$I_1 = \underset{I}{\arg\min} \left\| f_i \underbrace{* K * K * \cdots * K}_{I \text{ convolutions}} - f_j^V \right\|, \quad \text{and} \quad I_2 = \underset{I}{\arg\min} \left\| f_j^V \underbrace{* K * K * \cdots * K}_{I \text{ convolutions}} - f_i \right\|,$$

in which $I_1$ and $I_2$ are the first and second blur difference values, $f_i$ and $f_j$ are the two captured images, $f_j^V$ is image $f_j$ after motion compensation, and $K$ is the blur kernel; wherein if $I_1$ is larger than $I_2$, then $f_i$ is sharper than $f_j$ and the blur difference is given by $I_1$; otherwise, if $I_2$ is larger than $I_1$, then $f_j$ is sharper and the blur difference is given by $-I_2$; and wherein the sign of the blur difference value indicates which image is sharper.
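The signed blur difference of claim 7 can be sketched by counting how many convolutions with $K$ bring one image closest to the other, in each direction. This is a hypothetical sketch: the claims do not specify $K$, so a normalized 3x3 binomial kernel stands in; the norm is assumed to be the squared error; and `max_iter` caps the search. The names `convolve_k`, `iterations_to_match`, and `blur_difference` are illustrative, and `f_j_v` is assumed to be already motion-compensated.

```python
import numpy as np

# Hypothetical blur kernel K: a normalized 3x3 binomial (roughly Gaussian).
K = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0


def convolve_k(img):
    """Apply one convolution with K, replicating edge pixels at the borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += K[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def iterations_to_match(src, dst, max_iter=50):
    """argmin over I in [0, max_iter] of || src * K * ... * K (I times) - dst ||^2."""
    cur = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    best_i, best_err = 0, np.sum((cur - dst) ** 2)
    for i in range(1, max_iter + 1):
        cur = convolve_k(cur)
        err = np.sum((cur - dst) ** 2)
        if err < best_err:
            best_i, best_err = i, err
    return best_i


def blur_difference(f_i, f_j_v, max_iter=50):
    """Signed blur difference: positive if f_i is sharper, negative if f_j is sharper."""
    i1 = iterations_to_match(f_i, f_j_v, max_iter)  # blur f_i toward f_j^V
    i2 = iterations_to_match(f_j_v, f_i, max_iter)  # blur f_j^V toward f_i
    return i1 if i1 > i2 else -i2


rng = np.random.default_rng(0)
sharp = rng.random((24, 24))
blurred = sharp
for _ in range(5):
    blurred = convolve_k(blurred)
print(blur_difference(sharp, blurred), blur_difference(blurred, sharp))  # 5 -5
```

When $I_1$ equals $I_2$ (for example, two identical images), the claim leaves the case open; this sketch returns $-I_2$, which is 0 for identical inputs.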

[0138]8. An apparatus as recited in embodiment 1, wherein said blur difference $I_{A\_B}$ is computed as

$$I_{A\_B} = \min_{(x_v, y_v)} \left[ \underset{I}{\arg\min} \left\| f_A(x, y) \underbrace{* K(x, y) * K(x, y) * \cdots * K(x, y)}_{I \text{ convolutions}} - f_B(x - x_v, y - y_v) \right\| \right]$$

in which $K$ are convolution operations, $(x, y)$ is amount of pixel location shift, $(x_v, y_v)$...
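Claim 8 folds the motion search and the blur matching into a single expression. Read literally as excerpted, the inner $\arg\min$ finds the best iteration count for each candidate shift and the outer $\min$ takes the smallest of those counts. A minimal sketch under hypothetical assumptions: a normalized 3x3 binomial kernel for $K$, squared error as the norm, shifts of ±`max_shift` pixels, at most `max_iter` convolutions, and comparison over the central crop only. The name `blur_difference_ab` is illustrative.

```python
import numpy as np

# Hypothetical blur kernel K (normalized 3x3 binomial); the excerpt does not specify K.
K = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0


def convolve_k(img):
    """Apply one convolution with K, replicating edge pixels at the borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += K[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def blur_difference_ab(f_a, f_b, max_shift=2, max_iter=20):
    """Literal reading: min over (x_v, y_v) of argmin_I || f_A * K^I - f_B(x - x_v, y - y_v) ||."""
    h, w = f_a.shape
    m = max_shift
    # Blur f_A once per iteration count and reuse the stack for every shift.
    stack = [f_a.astype(np.float64)]
    for _ in range(max_iter):
        stack.append(convolve_k(stack[-1]))
    cores = [s[m:h - m, m:w - m] for s in stack]
    best = max_iter
    for y_v in range(-m, m + 1):
        for x_v in range(-m, m + 1):
            # Central crop of f_B(x - x_v, y - y_v); rows are y, columns are x.
            target = f_b[m - y_v:h - m - y_v, m - x_v:w - m - x_v].astype(np.float64)
            errs = [np.sum((c - target) ** 2) for c in cores]
            best = min(best, int(np.argmin(errs)))  # inner argmin over I, outer min over shifts
    return best


rng = np.random.default_rng(1)
sharp = rng.random((32, 32))
blurred = sharp
for _ in range(3):
    blurred = convolve_k(blurred)
print(blur_difference_ab(sharp, sharp), blur_difference_ab(sharp, blurred))
```

Precomputing the blur stack once means each candidate shift costs only error evaluations rather than fresh convolutions. Because only $f_A$ is blurred, this expression yields a non-negative count.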
