Interactive time-space accordant video matting method in digital video processing

The invention relates to digital video and interactive technology and applies to digital video signal modification, image data processing, television and related fields. It can solve the problems of discontinuous video results and long processing time that arise in frame-by-frame processing.

Embodiment Construction

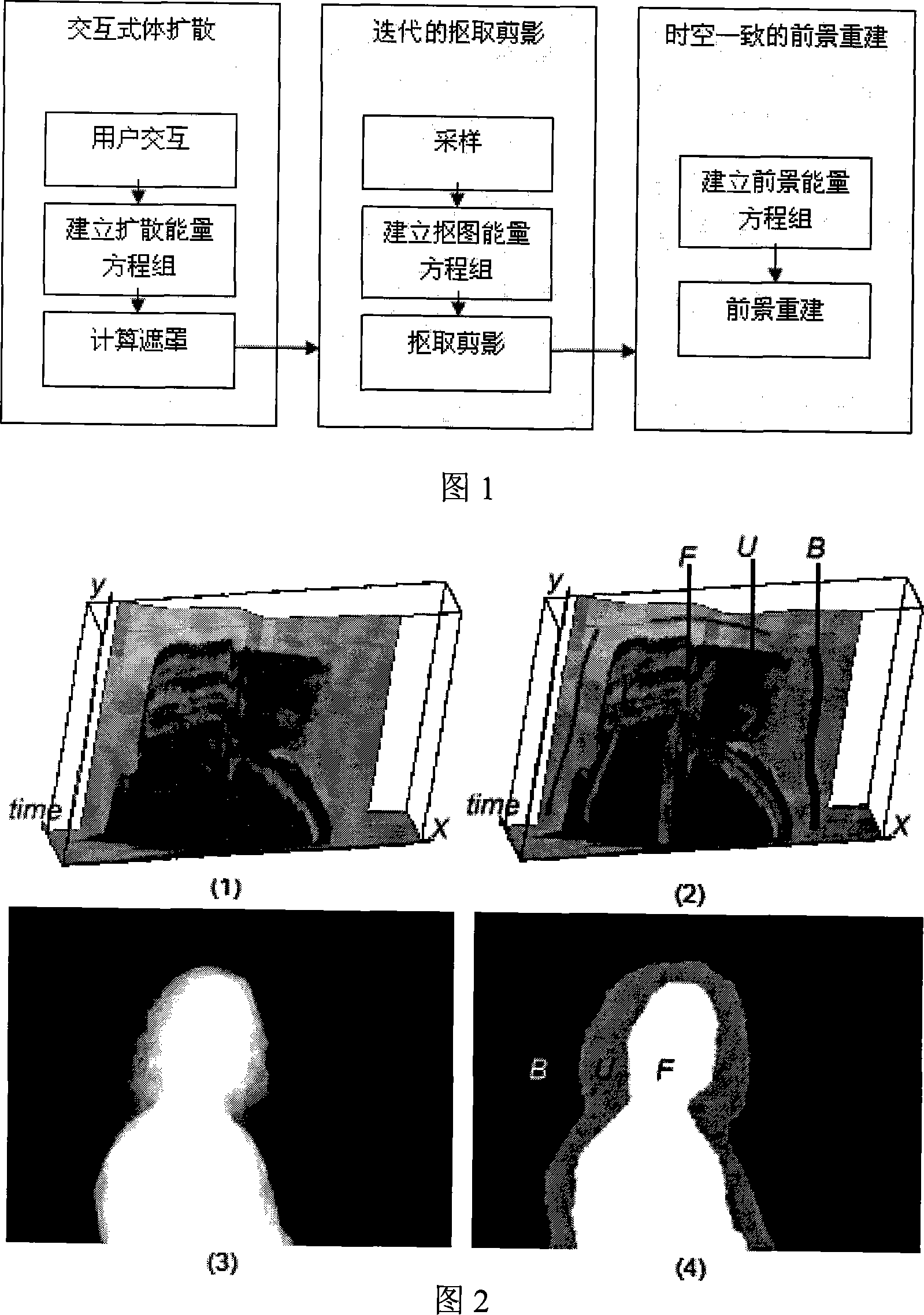

[0043] Fig. 1 shows the flow chart of the fast, interactive, spatiotemporally consistent video matting method of the present invention for digital video processing. The method comprises the following steps:

[0044] The first step is interactive volume diffusion, in which the interaction is carried out on the 3D video volume, as shown in Figure 2. The 3D video volume is formed by stacking the frames of the video in temporal order, so it has an x-axis, a y-axis and a time axis (Time).
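As an illustration of the video volume described in [0044], the following is a minimal sketch, assuming NumPy and frames of identical size. The function name `build_video_volume`, the axis order (time, y, x, channel) and the synthetic frames are assumptions made for this example; the patent does not specify a data layout.

```python
import numpy as np


def build_video_volume(frames):
    """Stack equally sized frames, in temporal order, into one array.

    The result is indexed as (time, y, x, channel). This layout is an
    assumption for illustration, not something the patent prescribes.
    """
    frames = [np.asarray(f) for f in frames]
    if not frames:
        raise ValueError("need at least one frame")
    shape = frames[0].shape
    if any(f.shape != shape for f in frames):
        raise ValueError("all frames must share the same shape")
    return np.stack(frames, axis=0)


# Synthetic stand-in for a decoded video: 5 RGB frames of 4 x 6 pixels,
# where every pixel of frame t holds the value t.
frames = [np.full((4, 6, 3), t, dtype=np.uint8) for t in range(5)]
volume = build_video_volume(frames)

# Axis-aligned slices through the volume.
xy_slice = volume[2]        # a single frame: (y, x, channel)
xt_slice = volume[:, 1]     # fixed row y=1: (time, x, channel)
yt_slice = volume[:, :, 3]  # fixed column x=3: (time, y, channel)
```

Slices with a fixed y or x cut across time, which is what lets a single stroke on such a slice touch many frames at once.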

[0045] 1) The user interacts on the 3D video volume. The user can rotate, slice and segment the video volume arbitrarily, and uses strokes of different colors to mark the foreground area, background area and unknown area on the video volume. Figure 2(1) shows a slice of the video volume, and Figure 2(2) shows the markings on that slice. In the figure, F is the foreground area, B is the background area, and U is the unknown area.

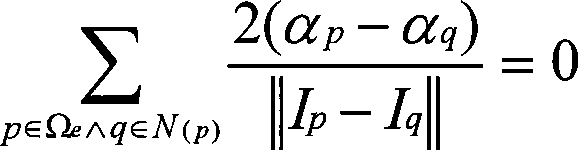

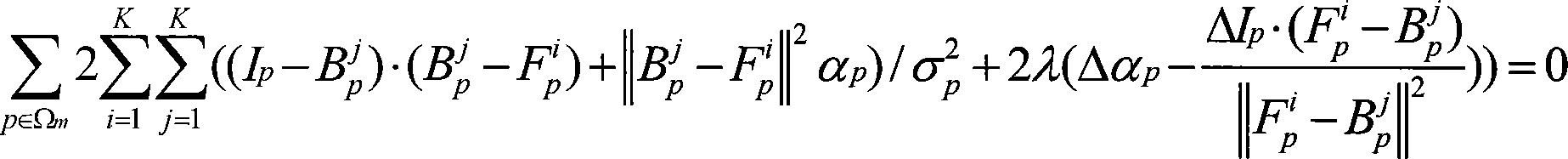
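The marking in [0045] can be pictured as writing labels into an array with the same time, y and x extent as the video volume. The sketch below is only an illustration under stated assumptions: the label codes, the helper `mark_stroke`, and the cubic brush of a given radius are all hypothetical and are not taken from the patent.

```python
import numpy as np

# Hypothetical label codes for this example only.
UNMARKED, FOREGROUND, BACKGROUND, UNKNOWN = 0, 1, 2, 3


def mark_stroke(labels, points, label, radius=1):
    """Write `label` into a small cube around each (t, y, x) stroke point.

    The cube is clipped at the volume borders. `labels` is modified in
    place and also returned for convenience.
    """
    n_t, n_y, n_x = labels.shape
    for t, y, x in points:
        t0, t1 = max(t - radius, 0), min(t + radius + 1, n_t)
        y0, y1 = max(y - radius, 0), min(y + radius + 1, n_y)
        x0, x1 = max(x - radius, 0), min(x + radius + 1, n_x)
        labels[t0:t1, y0:y1, x0:x1] = label
    return labels


# Label volume for a video of 5 frames, 8 x 8 pixels each.
labels = np.full((5, 8, 8), UNMARKED, dtype=np.uint8)

# Three strokes, one per region type (F, B, U in the figure).
mark_stroke(labels, [(2, 1, 1)], BACKGROUND, radius=1)
mark_stroke(labels, [(2, 6, 6)], FOREGROUND, radius=0)
mark_stroke(labels, [(2, 4, 4)], UNKNOWN, radius=0)

counts = {
    name: int(np.count_nonzero(labels == code))
    for name, code in [("F", FOREGROUND), ("B", BACKGROUND), ("U", UNKNOWN)]
}
```

Because the brush extends along the time axis as well as in y and x, one stroke labels voxels in neighbouring frames too, which is the practical benefit of interacting with the volume rather than with individual frames.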

[0046] 2) Record the set of points in the undetermined ...
