Multimedia human-computer interaction method based on camera and microphone

A camera-based technology, applied in the field of human-computer interaction, that addresses problems such as the high cost of synchronous action display and the cumbersome control of the actions of a computer-displayed image.


Embodiment Construction

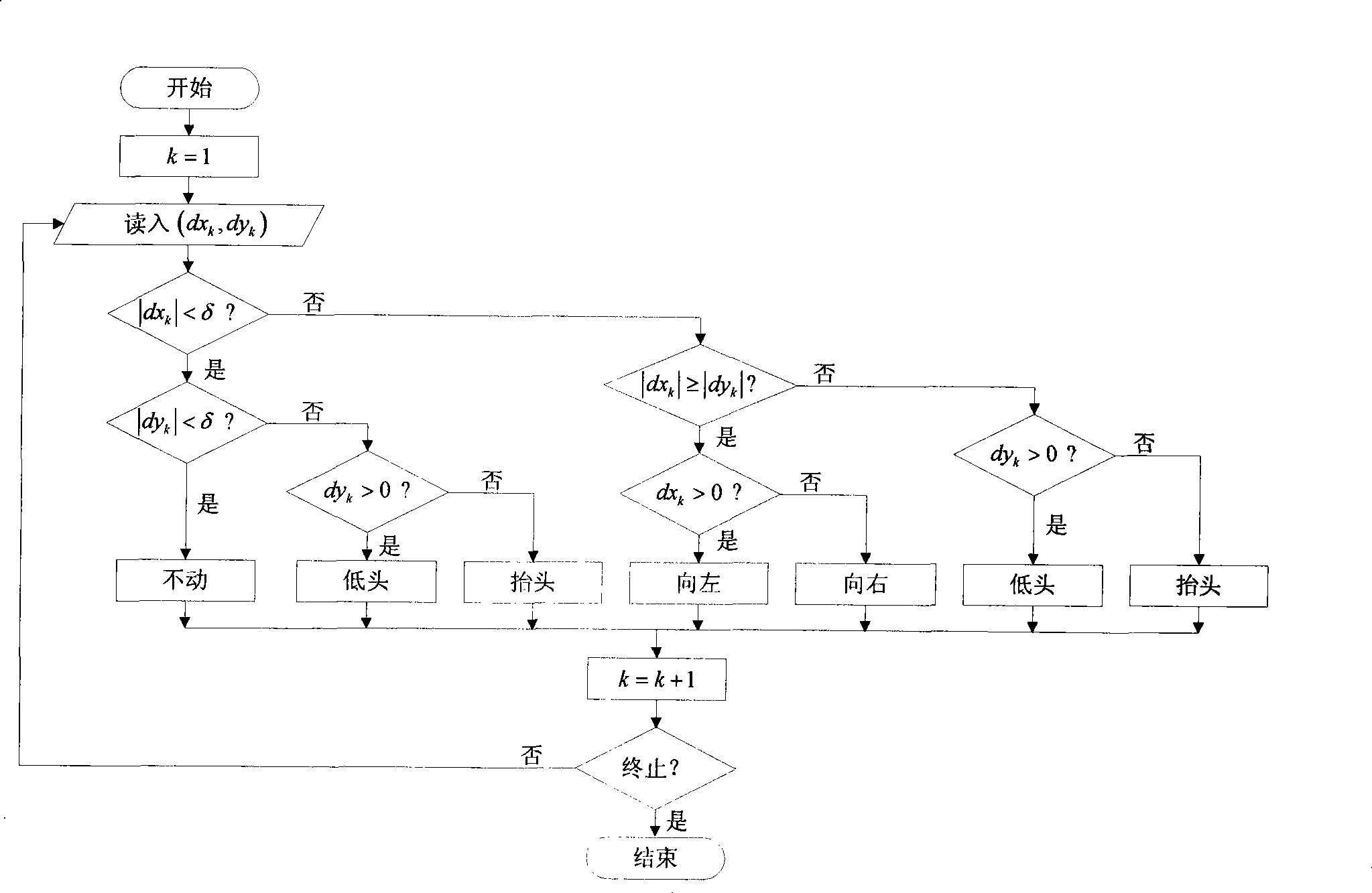

[0038] The specific implementation of the method of the present invention is described below.

[0039] Assume that the computer display image is an anthropomorphic cartoon character. Taking the Logitech QuickCam Messenger camera as an example, three video sequence formats are available: ① 640×480, 10 frames/second; ② 320×240, 15 frames/second; ③ 160×120, 15 frames/second.

[0040] First, the color image acquired by the camera is converted using the luminance formula

[0041] Y=0.299R+0.587G+0.114B

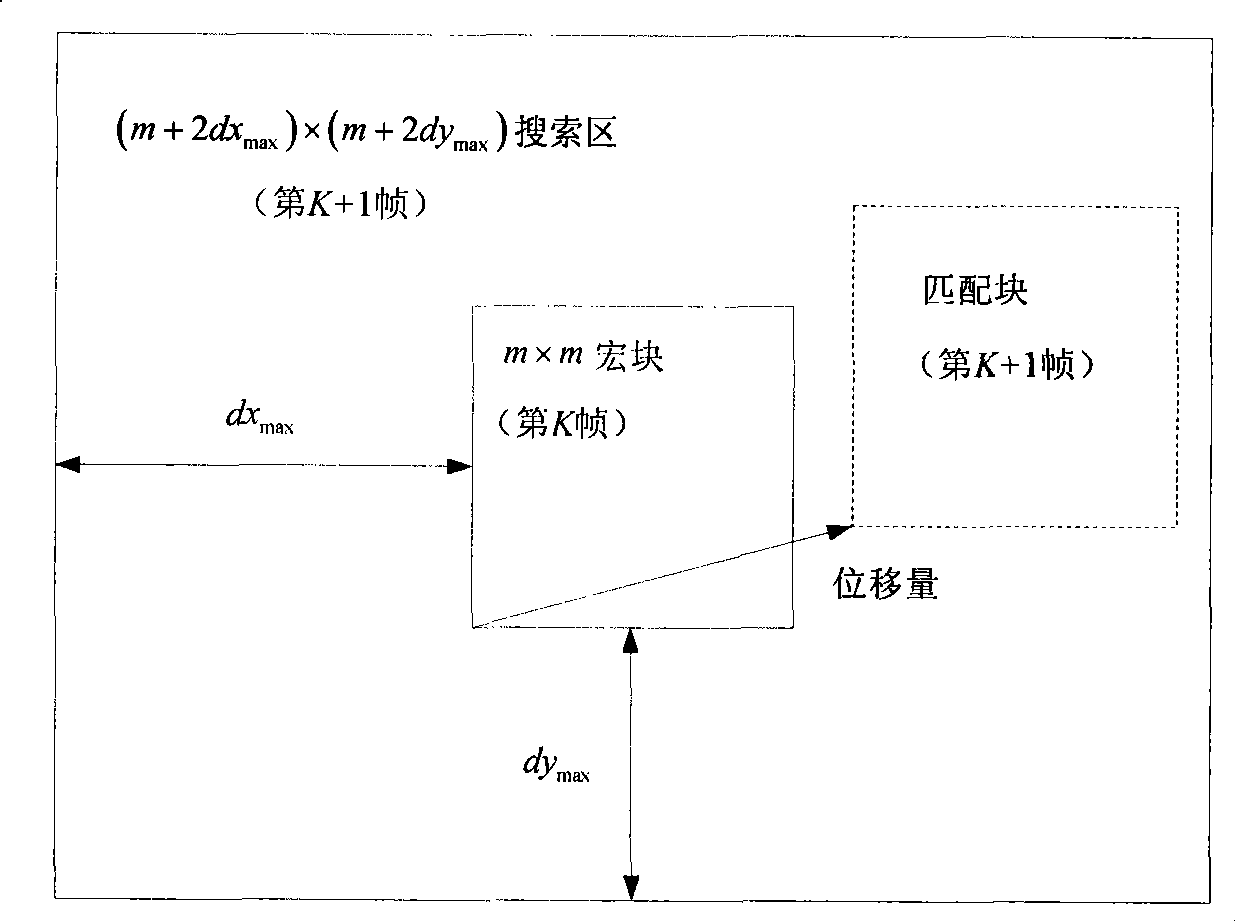

[0042] This yields a grayscale image, and each frame is then divided into macroblocks of m×m pixels. Taking the 320×240 sequence as an example, m=16 is a suitable choice, so each frame contains 20×15 macroblocks, as shown in Figure 1. For a macroblock in the kth frame, a search region of (m+2dx_max)×(m+2dy_max) pixels is searched for the block that best matches it, where dx_max and dy_max are the preset maximum displacements of a macroblock in the horizontal and vertical directions, such as fig...
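The grayscale conversion, macroblock division, and search-window matching described above can be illustrated with a minimal Python sketch. Several points are assumptions rather than part of the text: the function names (`to_gray`, `macroblock_grid`, `sad`, `best_match`) are hypothetical, the reference frame is taken to be a neighboring frame passed in as `ref`, and the matching criterion is the sum of absolute differences (SAD), since the excerpt does not state which criterion is used. Frames are plain nested lists so the sketch needs no external libraries.

```python
def to_gray(rgb):
    """Convert rows of (R, G, B) tuples to luminance Y = 0.299R + 0.587G + 0.114B."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def macroblock_grid(width, height, m):
    """Number of whole m x m macroblocks per row and per column."""
    return width // m, height // m


def sad(cur, ref, cx, cy, rx, ry, m):
    """Sum of absolute differences between two m x m blocks (assumed criterion)."""
    total = 0
    for j in range(m):
        cur_row = cur[cy + j]
        ref_row = ref[ry + j]
        for i in range(m):
            total += abs(cur_row[cx + i] - ref_row[rx + i])
    return total


def best_match(cur, ref, bx, by, m, dx_max, dy_max):
    """Exhaustively search the (m + 2*dx_max) x (m + 2*dy_max) window in `ref`.

    (bx, by) is the macroblock index in `cur`. Returns ((dx, dy), cost), where
    (dx, dy) is the offset of the best-matching block in `ref` relative to the
    macroblock's position in `cur`.
    """
    height, width = len(ref), len(ref[0])
    cx, cy = bx * m, by * m
    best, best_cost = (0, 0), None
    for dy in range(-dy_max, dy_max + 1):
        for dx in range(-dx_max, dx_max + 1):
            rx, ry = cx + dx, cy + dy
            # Skip candidate blocks that fall partly outside the frame.
            if rx < 0 or ry < 0 or rx + m > width or ry + m > height:
                continue
            cost = sad(cur, ref, cx, cy, rx, ry, m)
            if best_cost is None or cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost
```

For the 320×240 sequence with m=16, `macroblock_grid(320, 240, 16)` gives the 20×15 grid mentioned above. An exhaustive search is the simplest option and is used here only for clarity; its cost grows with (2dx_max+1)×(2dy_max+1) candidates per macroblock.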
