Method for demarcating camera and device thereof

A camera calibration technology applied in the fields of image processing and computer vision. It addresses problems of existing approaches such as numerous restrictions, complex procedures, and cases where a calibration reference object cannot be used, and it achieves a fast solution process and stable solution results.



Examples

Embodiment 1

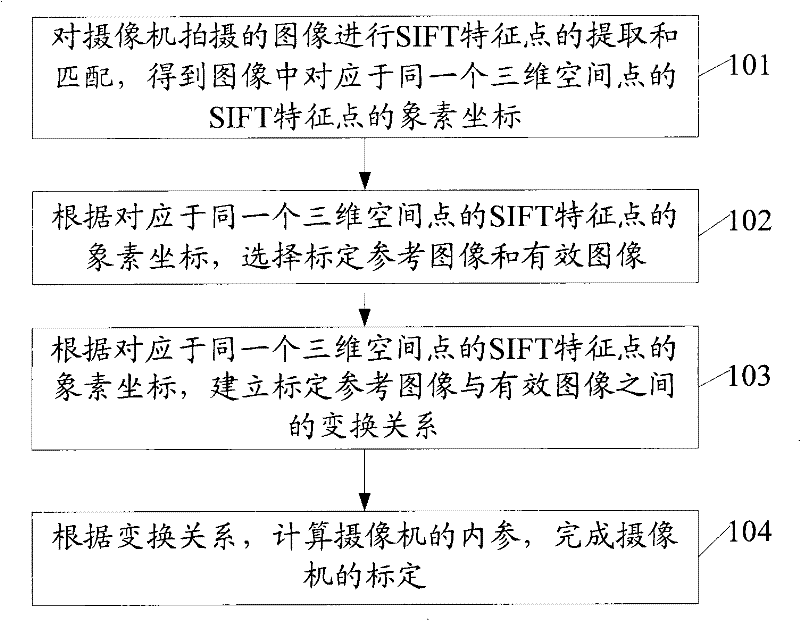

[0044] Referring to Figure 1, an embodiment of the present invention provides a camera calibration method, including:

[0045] 101: Extract and match the scale-invariant feature transform (SIFT) feature points of the images taken by the camera, and obtain the pixel coordinates of the SIFT feature points that correspond to the same three-dimensional space point in the images. The images are at least two images of the same scene, captured by rotating the camera about its optical center;

[0046] Specifically, step 101 includes: extracting the SIFT feature points of each image; and matching SIFT feature points between the images, where the Euclidean distance between feature vectors is used as the basis for judging whether SIFT feature points match, the nearest neighbor ratio method is used as the decision rule, and the sequential loop matching method is used to carry out the matching; according to the o...
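The matching rule described in [0046] (Euclidean distance between feature vectors, accepted by the nearest neighbor ratio method) can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the patent's implementation: the function names `euclidean` and `ratio_match`, the toy 3-element descriptors, and the 0.8 threshold are all hypothetical. Real SIFT descriptors have 128 elements, and the excerpt does not state a threshold value.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two descriptor vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def ratio_match(desc_a, desc_b, ratio=0.8):
    """Match descriptors in desc_a to desc_b with the nearest neighbor ratio test.

    Feature i in desc_a is matched to its nearest neighbor j in desc_b only if
    the nearest distance is smaller than `ratio` times the second-nearest one.
    Returns a list of (i, j) index pairs. `ratio=0.8` is an assumed value.
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_a):
        dists = sorted((euclidean(d, e), j) for j, e in enumerate(desc_b))
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches

# Toy descriptors (assumed data, for illustration only).
desc_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.0]]
desc_b = [[0.0, 0.9, 0.1], [1.0, 0.1, 0.0], [0.0, 0.0, 1.0]]
matches = ratio_match(desc_a, desc_b)
```

In this toy data the third descriptor in `desc_a` is almost equally close to two candidates, so the ratio test rejects it as ambiguous; the other two are accepted.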

Embodiment 2

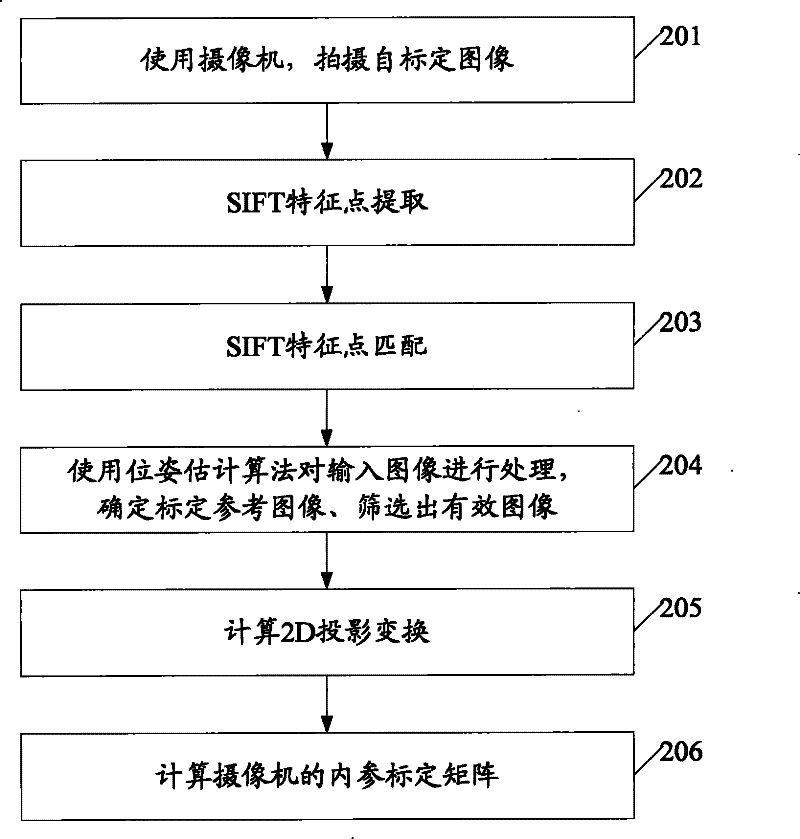

[0055] Referring to Figure 2, the second embodiment of the present invention provides a camera calibration method, including:

[0056] 201: Use a camera to shoot calibration images;

[0057] Here, the camera is fixed at one position in 3D world space and is rotated about its optical center to different orientations to capture an image sequence of the same scene; at least two images of the same scene are taken. While the image sequence is being shot, the internal parameter K of the camera is kept unchanged, that is, the focal length of the camera remains unchanged. Suppose the final image sequence consists of N images I0, I1, ..., IN-1 (N≥3). Figures 3a to 3e show five images of one scene taken at different angles for calibration; Figures 4a to 4c show three images of another scene taken at different angles for calibration.
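The shooting constraints in [0057] (rotation about the optical center only, with K unchanged) matter because, under the standard pinhole model, pixels of the same scene point in two such images are related by a 3×3 homography H = K R K⁻¹, where R is the relative rotation. The excerpt does not state this formula; it is the standard textbook result, shown here as a numerical check. All names and values below (focal length 800, principal point (320, 240), a 10° rotation about the y-axis, the test point `X`) are assumptions for illustration.

```python
import math

def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(A, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def project(K, R, X):
    """Pixel coordinates of 3D point X for a camera at the origin with rotation R."""
    x = matvec(K, matvec(R, X))
    return [x[0] / x[2], x[1] / x[2]]

# Assumed intrinsics: focal length f, principal point (cx, cy), no skew.
f, cx, cy = 800.0, 320.0, 240.0
K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
K_inv = [[1.0 / f, 0.0, -cx / f], [0.0, 1.0 / f, -cy / f], [0.0, 0.0, 1.0]]

# Assumed relative rotation: 10 degrees about the y-axis.
t = math.radians(10.0)
R = [[math.cos(t), 0.0, math.sin(t)],
     [0.0, 1.0, 0.0],
     [-math.sin(t), 0.0, math.cos(t)]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

H = matmul(matmul(K, R), K_inv)  # homography induced by pure rotation

X = [0.2, -0.1, 5.0]             # an arbitrary scene point in front of the camera
p0 = project(K, I3, X)           # pixel in the first image
p1 = project(K, R, X)            # pixel in the rotated image
q = matvec(H, [p0[0], p0[1], 1.0])
p1_from_H = [q[0] / q[2], q[1] / q[2]]  # p0 mapped through H
```

Here `p1_from_H` agrees with `p1` up to floating-point error, independent of the depth of `X`. If the camera also translated, or K changed between shots, this depth-independent relation would no longer hold.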

[0058] 202: SIFT feature point extraction;

[0059] The feature points are extracted for each calibration image. The ex...

Embodiment 3

[0181] Referring to Figure 5, an embodiment of the present invention provides a camera calibration device, including:

[0182] The feature point processing module 501 is used to extract and match the scale-invariant feature transform (SIFT) feature points of the images taken by the camera, to obtain the pixel coordinates of the SIFT feature points that correspond to the same three-dimensional space point in the images. The images are at least two images of the same scene, captured by rotating the camera about its optical center;

[0183] The selection module 502 is configured to select a calibration reference image and a valid image according to the pixel coordinates of the SIFT feature points corresponding to the same three-dimensional space point;

[0184] The establishment module 503 is used to establish the transformation relationship between the reference image and the valid image according to the pixel coordinates of the SIFT feature points corresponding to the same three-dimensional space point;

[01...
