Motion perception model extraction method based on the time-space domain

A motion perception and motion model technology, applied in color TV components, TV system components, image data processing, and related fields. It addresses three problems: video object content is complex, no suitable segmentation method exists, and computers lack the ability to observe, recognize, and understand images. The technique improves the motion perception model.


Examples

Embodiment 1

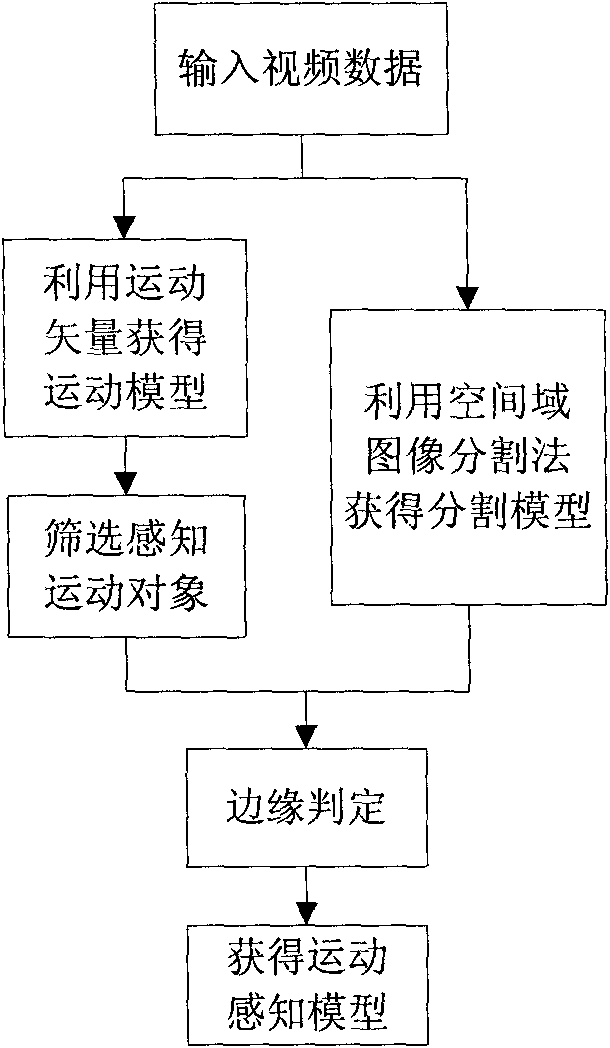

[0078] Embodiment 1: The motion perception model extraction method based on the time-space domain of the present invention was programmed according to the program block diagram shown in Figure 1. It was run on a PC test platform with an Athlon X2 2.0 GHz CPU and 1024 MB of memory. Figure 6 shows the motion perception model of one frame, obtained by feeding the mother-daughter sequence into the JM10.2 verification model.

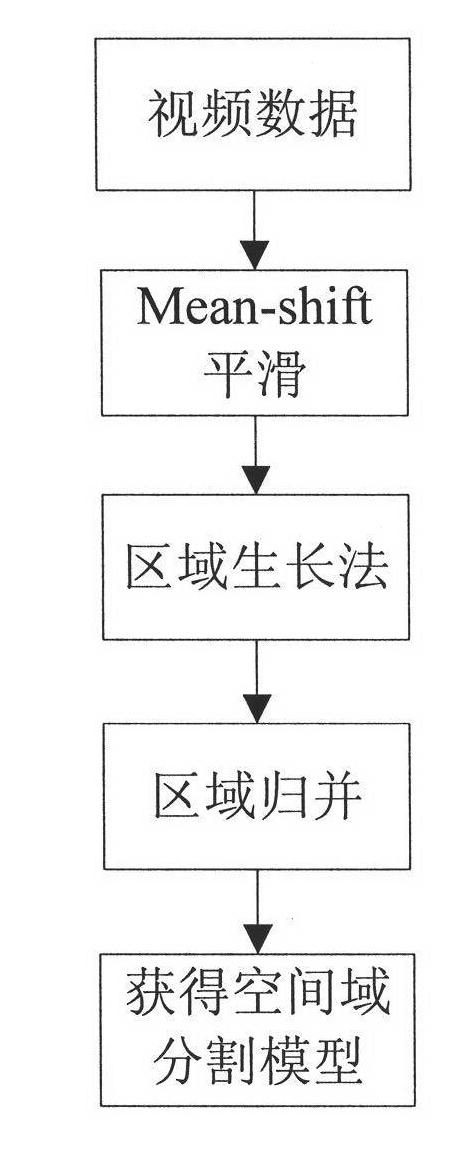

[0079] Referring to Figure 1, the motion perception model extraction method based on the time-space domain works in three stages. First, it extracts an initial motion model by analyzing the motion vectors generated during encoding. In parallel, it obtains a video image segmentation model from the luminance information in the spatial domain. Finally, it combines these two models using an edge-judgment principle to produce the final motion perception model. The motion perception model obtained by this method combines the characteristics of the space domain and the time doma...
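The fusion step can be illustrated with a short sketch. This excerpt does not specify the edge-judgment principle, so the code below substitutes a plainly different, hypothetical rule called region voting. A spatial segment is marked as moving when at least a fraction `ratio` of its pixels are moving in the temporal (motion-vector) mask. The function name `fuse_motion_and_segmentation` and the `ratio` parameter are illustrative assumptions, not taken from the patent.

```python
from collections import defaultdict
from typing import List

Grid = List[List[int]]


def fuse_motion_and_segmentation(motion_mask: Grid, labels: Grid, ratio: float = 0.5) -> Grid:
    """Combine a temporal motion mask with spatial segmentation labels.

    HYPOTHETICAL: region voting is a stand-in for the patent's
    edge-judgment principle, which is not described in this excerpt.
    A region is kept as moving when >= `ratio` of its pixels move.
    """
    moving = defaultdict(int)  # region label -> number of moving pixels
    total = defaultdict(int)   # region label -> total number of pixels
    for mask_row, label_row in zip(motion_mask, labels):
        for is_moving, lab in zip(mask_row, label_row):
            total[lab] += 1
            if is_moving:
                moving[lab] += 1
    keep = {lab for lab in total if moving[lab] >= ratio * total[lab]}
    # Every pixel of a kept region is marked as moving (1), others as 0.
    return [[1 if lab in keep else 0 for lab in label_row] for label_row in labels]


mask = [[1, 1, 0, 0],
        [1, 0, 0, 0]]
labels = [[1, 1, 2, 2],
          [1, 1, 2, 2]]
print(fuse_motion_and_segmentation(mask, labels))
```

In this example, region 1 has 3 of 4 pixels moving, so the whole region is marked as moving and the missed pixel is filled in. Region 2 has no moving pixels and is dropped.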

Embodiment 2

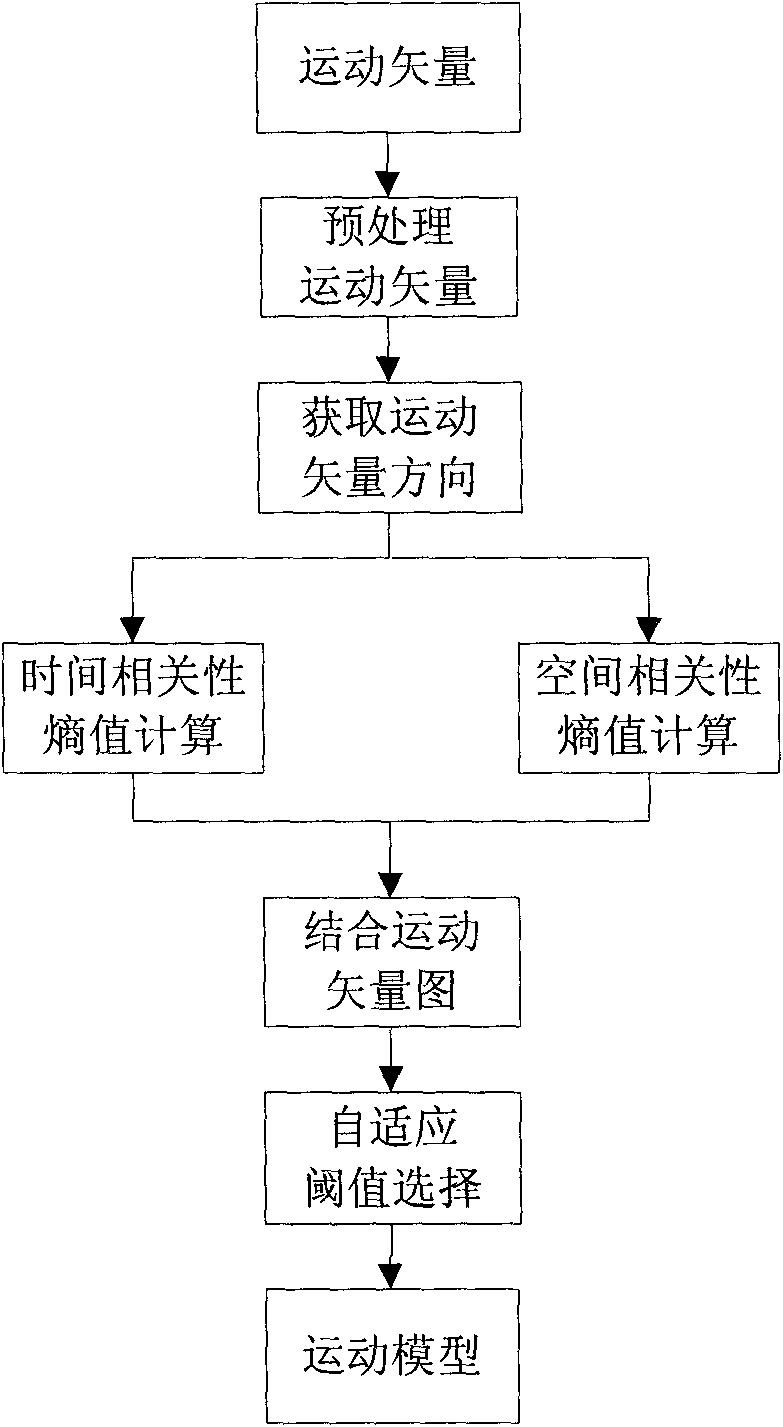

[0086] Embodiment 2: This embodiment is essentially the same as Embodiment 1, with the following distinguishing features. The motion model in step (2) above is built as follows:

[0087] ① Apply a 3×3 mean filter to the motion vectors generated during encoding;
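Step ① can be sketched as follows, assuming the motion vectors form a 2-D grid with one `(x, y)` vector per macroblock. The 3×3 mean filter averages the x and y components over each vector's 3×3 neighbourhood. The patent does not state how borders are handled. As an assumption, this sketch averages only over neighbours that fall inside the grid. The function name `mean_filter_mv` is hypothetical.

```python
from typing import List, Tuple

MV = Tuple[float, float]  # (x, y) motion vector of one macroblock


def mean_filter_mv(field: List[List[MV]]) -> List[List[MV]]:
    """3x3 mean filter over a motion-vector field.

    ASSUMPTION: at the borders, only in-bounds neighbours are averaged.
    """
    rows, cols = len(field), len(field[0])
    out: List[List[MV]] = []
    for i in range(rows):
        out_row: List[MV] = []
        for j in range(cols):
            sx = sy = 0.0
            count = 0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    r, c = i + di, j + dj
                    if 0 <= r < rows and 0 <= c < cols:
                        sx += field[r][c][0]
                        sy += field[r][c][1]
                        count += 1
            out_row.append((sx / count, sy / count))
        out.append(out_row)
    return out


# A single outlier vector is spread over and damped by its neighbours.
field = [[(0.0, 0.0)] * 3 for _ in range(3)]
field[1][1] = (9.0, -9.0)
smoothed = mean_filter_mv(field)
print(smoothed[1][1], smoothed[0][0])  # (1.0, -1.0) (2.25, -2.25)
```

Averaging the two components separately is equivalent to averaging the vectors. This suppresses isolated, noisy vectors that the encoder's block matching can produce.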

[0088] ② Let the motion vector of macroblock $(i, j)$ in frame $n$ be $PV(i, j) = (x_{n,i,j}, y_{n,i,j})$. Every pixel of that macroblock is assigned the motion vector $PV(i, j)$, and the direction of the vector is $\theta_{n,i,j} = \arctan(y_{n,i,j} / x_{n,i,j})$.
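Step ② can be sketched as follows. Each macroblock's vector is copied to all of its pixels, and a direction angle is computed per pixel. Two assumptions apply. First, the sketch uses fixed 16×16 macroblocks, which is the H.264 macroblock size, and ignores H.264's variable sub-block partitions. Second, it uses `math.atan2(y, x)` rather than a literal `arctan(y / x)`. The two agree when x > 0, but `atan2` also handles x = 0 and distinguishes opposite directions. As a result, θ lies in [-π, π] rather than (-π/2, π/2). The function name `pixel_directions` is hypothetical.

```python
import math
from typing import List, Tuple


def pixel_directions(mb_field: List[List[Tuple[float, float]]], mb_size: int = 16) -> List[List[float]]:
    """Expand per-macroblock motion vectors to pixels and return theta per pixel.

    ASSUMPTIONS: fixed square macroblocks of `mb_size` pixels; atan2 is
    used in place of arctan(y / x), so a zero vector yields 0.0.
    """
    rows = len(mb_field) * mb_size
    cols = len(mb_field[0]) * mb_size
    theta = [[0.0] * cols for _ in range(rows)]
    for bi, mb_row in enumerate(mb_field):
        for bj, (x, y) in enumerate(mb_row):
            angle = math.atan2(y, x)  # direction of PV(i, j)
            # Every pixel of macroblock (bi, bj) shares the same vector.
            for r in range(bi * mb_size, (bi + 1) * mb_size):
                for c in range(bj * mb_size, (bj + 1) * mb_size):
                    theta[r][c] = angle
    return theta


theta = pixel_directions([[(1.0, 1.0), (0.0, 2.0)]], mb_size=2)
print(len(theta), len(theta[0]))  # 2 4
```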

[0089] ③ Calculate the probability distribution function of the histogram of motion vector directions over the current pixel and its eight surrounding points. Here $SH(\cdot)$ is the histogram formed by the direction values $\theta_{n,i,j}$ of the motion vectors of the current pixel and its eight surrounding points, $m$ is the size of the histogram space, and $w$ denotes the $N \times N$ search window...
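The formula for step ③ is missing from this excerpt, so the following is only a plausible reading of the text. Each direction θ in the N×N window is assigned to one of `m` equal-width bins over [-π, π]. The bin counts SH(k) are then divided by the number of pixels counted, which yields a probability for each bin. Several choices here are assumptions: the binning scheme, the in-bounds-only border handling, the default N = 3 (the current pixel plus its eight neighbours), and the function name `direction_histogram`.

```python
import math
from typing import List


def direction_histogram(theta: List[List[float]], i: int, j: int, m: int = 8, n: int = 3) -> List[float]:
    """Normalized histogram of directions in the n x n window centred on (i, j).

    ASSUMPTIONS: m equal-width bins over [-pi, pi]; P(k) = SH(k) / count,
    where count is the number of in-bounds pixels in the window.
    """
    half = n // 2
    counts = [0] * m  # SH(k): number of directions falling in bin k
    total = 0
    for r in range(i - half, i + half + 1):
        for c in range(j - half, j + half + 1):
            if 0 <= r < len(theta) and 0 <= c < len(theta[0]):
                k = int((theta[r][c] + math.pi) / (2 * math.pi) * m)
                counts[min(k, m - 1)] += 1  # theta == pi goes in the last bin
                total += 1
    return [cnt / total for cnt in counts]


grid = [[0.1, 0.1, 0.1],
        [0.1, 2.0, 0.1],
        [0.1, 0.1, 0.1]]
print(direction_histogram(grid, 1, 1, m=4))
```

A window where most vectors share one direction produces a peaked distribution, which suggests coherent object motion. A flat distribution suggests noisy vectors.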
