Layered resource reservation system in a cloud computing environment

A cloud computing and resource reservation technology, applied in transmission systems, electrical components, etc. It addresses problems such as inadequate support for reservation, failure to provide QoS guarantees, and failure to consider the dynamics of grid applications. It ensures normal, stable operation and removes the effects of applications interfering with one another.


Embodiment Construction

[0015] The multi-level resource reservation system in a cloud computing environment of the present invention is based on the Linux 2.6 cpuset technology. It dynamically sets the scheduling domain of user processes on each resource node, thereby reserving CPU computing resources. The present invention is described in further detail below with reference to the accompanying drawings.
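The cpuset mechanism mentioned above confines a process to a chosen set of CPUs and memory nodes. The following is a minimal sketch, not the patented implementation, of how a resource node might reserve CPUs for one user process. It assumes the legacy cpuset filesystem (`mount -t cpuset`), whose control files (`cpus`, `mems`, `cpu_exclusive`, `tasks`) carry no `cpuset.` prefix. The helper names `format_id_list` and `reserve_cpus` and the child-cpuset naming scheme are hypothetical. Writing to a real mount requires root, and an exclusive cpuset must not overlap the CPUs of an exclusive sibling.

```python
import os


def format_id_list(ids):
    """Render CPU or memory-node ids in the kernel list format, e.g. [0,1,2,3,6] -> "0-3,6"."""
    ordered = sorted(set(ids))
    parts = []
    i = 0
    while i < len(ordered):
        start = end = ordered[i]
        # Extend the current run while the ids stay consecutive.
        while i + 1 < len(ordered) and ordered[i + 1] == end + 1:
            i += 1
            end = ordered[i]
        parts.append(str(start) if start == end else f"{start}-{end}")
        i += 1
    return ",".join(parts)


def reserve_cpus(cpuset_root, name, cpus, mems, pid, exclusive=True):
    """Create a child cpuset (hypothetical naming) and move one process into it.

    Assumes the legacy cpuset filesystem layout (no "cpuset." file prefix).
    """
    path = os.path.join(cpuset_root, name)
    os.makedirs(path, exist_ok=True)
    # cpus and mems must be non-empty before any task can be attached,
    # so they are written first; the pid is written to "tasks" last.
    settings = [
        ("cpus", format_id_list(cpus)),
        ("mems", format_id_list(mems)),
        ("cpu_exclusive", "1" if exclusive else "0"),
        ("tasks", str(pid)),
    ]
    for filename, value in settings:
        with open(os.path.join(path, filename), "w") as f:
            f.write(value)
    return path
```

On a node where the cpuset filesystem is mounted at, say, `/dev/cpuset`, a call such as `reserve_cpus("/dev/cpuset", "user42", [2, 3], [0], pid)` would pin that process to CPUs 2 and 3. Pointing `cpuset_root` at an ordinary temporary directory lets the file layout be inspected without root.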

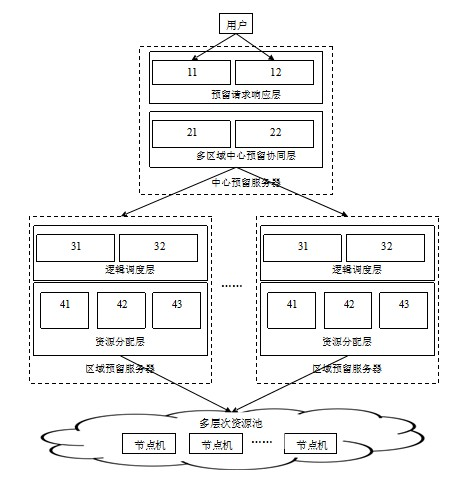

[0016] As shown in Figure 1, the system of the present invention consists of a central reservation server, area reservation servers, and multi-level resource pools. The system is divided into a plurality of areas, each with its own independent area reservation server. Each area reservation server is managed from above by the central reservation server and distributes resources downward to the multi-level resource pools. The central reservation server is divided into two layers: the reservation request response layer and the...
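The three-tier structure described above (central server, area servers, multi-level pools) can be modelled in a few classes. This is a minimal sketch under stated assumptions, not the method claimed in the source. The class names, the routing policy (preferred area first, then areas with the most free CPUs), the rule that an area tries its pools from the lowest level upward, and the rule that a single pool must satisfy a whole request are all hypothetical choices made for illustration. Only the reservation request response layer of the central server is modelled, because the description of the second layer is cut off.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# A grant is (area name, pool level, reserved CPU ids).
Grant = Tuple[str, int, List[int]]


@dataclass
class ResourcePool:
    """One level of an area's multi-level resource pool (hypothetical model)."""
    level: int
    free_cpus: List[int]

    def take(self, count: int) -> Optional[List[int]]:
        # Assumption: a request is served by a single pool, never split.
        if count > len(self.free_cpus):
            return None
        taken, self.free_cpus = self.free_cpus[:count], self.free_cpus[count:]
        return taken


@dataclass
class AreaReservationServer:
    name: str
    pools: List[ResourcePool] = field(default_factory=list)

    def free_cpus(self) -> int:
        return sum(len(p.free_cpus) for p in self.pools)

    def reserve(self, count: int) -> Optional[Grant]:
        # Hypothetical policy: try pools from the lowest level upward.
        for pool in sorted(self.pools, key=lambda p: p.level):
            cpus = pool.take(count)
            if cpus is not None:
                return (self.name, pool.level, cpus)
        return None


@dataclass
class CentralReservationServer:
    areas: Dict[str, AreaReservationServer] = field(default_factory=dict)

    def handle_request(self, count: int,
                       preferred_area: Optional[str] = None) -> Optional[Grant]:
        """Request response layer: route a reservation to an area server."""
        # Preferred area first (False sorts before True), then most free CPUs.
        order = sorted(
            self.areas.values(),
            key=lambda a: (a.name != preferred_area, -a.free_cpus()),
        )
        for area in order:
            grant = area.reserve(count)
            if grant is not None:
                return grant
        return None  # no area can satisfy the request
```

For example, with an area `east` holding pools `[0, 1]` (level 0) and `[2, 3, 4, 5]` (level 1), a request for four CPUs preferring `east` skips the too-small level-0 pool and is granted `("east", 1, [2, 3, 4, 5])`. A real deployment would also need release, timeouts, and the admission rules that the truncated description may specify.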
