Wink action-based man-machine interaction method and system

A blink-based human-computer interaction technology, applied in the field of human-computer interaction. It addresses problems such as eye injury, user burden, and increased cost, and achieves simple and convenient operation, low cost, and easy implementation.


Examples

Embodiment 1

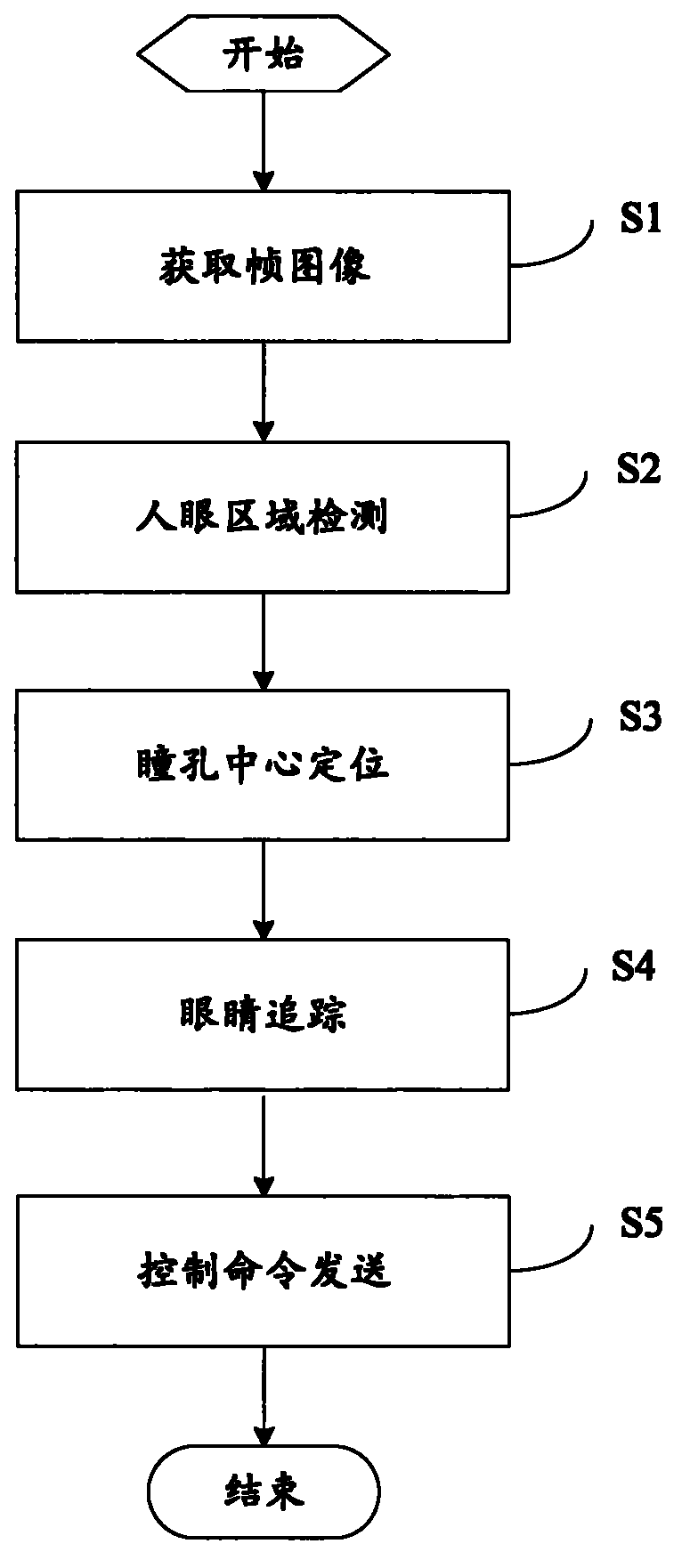

[0072] Figure 1 is a flow chart of the blink-based human-computer interaction method of the present invention. The method comprises the following steps:

[0073] Step S1: Acquire frame images.

[0074] In this step, a face image (with resolution width×height) can be acquired in real time through the front camera of a mobile phone.

[0075] Step S2: Human eye region detection.

[0076] When a mobile phone is in use, the distance between the eyes and the camera generally stays between 10 and 30 centimeters, and within this range the face occupies the entire image area. The method therefore needs no face detection step and can detect the eye region directly. The initial localization of the eye region does not need to be very accurate, so many methods can be used, such as the histogram projection method, the Haar detection method, the frame difference method, the template matching method ...
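Of the methods listed above, the histogram projection method is the simplest to illustrate. The following is a minimal sketch, not the patent's implementation: it assumes the eyes are darker than the surrounding skin, sums the grayscale intensity of each row, and returns the band of rows with the lowest total. The function name `darkest_row_band` and the `band_height` parameter are hypothetical.

```python
from typing import List, Tuple


def darkest_row_band(gray: List[List[int]], band_height: int) -> Tuple[int, int]:
    """Return (top, bottom) of the row band with the lowest summed intensity.

    `gray` is a row-major grayscale image with values 0..255. Assumption:
    the eye region is darker than the skin around it, so the horizontal
    projection dips at eye level. `bottom` is exclusive.
    """
    if not gray or band_height <= 0 or band_height > len(gray):
        raise ValueError("band_height must be between 1 and the image height")

    # Horizontal projection: one summed intensity value per image row.
    row_sums = [sum(row) for row in gray]

    # Slide a window of band_height rows over the projection and keep
    # the position where the windowed sum is smallest.
    window = sum(row_sums[:band_height])
    best_top, best_sum = 0, window
    for top in range(1, len(row_sums) - band_height + 1):
        window += row_sums[top + band_height - 1] - row_sums[top - 1]
        if window < best_sum:
            best_top, best_sum = top, window
    return best_top, best_top + band_height
```

Because the initial localization does not need to be accurate, a coarse band like this would only seed the later steps; a vertical projection inside the band could narrow it to the left and right eye in the same way.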

Embodiment 2

[0131] Embodiment 2 differs from Embodiment 1 in the specific implementation of step S4. Only the differences are described below; the parts that are the same are not repeated.

[0132] Figure 6 is a flow chart of eye tracking in the blink-based human-computer interaction method according to Embodiment 2 of the present invention. After the search window is initialized and the rectangular search box is defined, the box may extend beyond the image in the next frame. The excess part then needs to be filtered out so that the search range does not exceed the image size. In this embodiment, after the next frame is acquired in step S402, step S403 is executed: determine whether the rectangular search box exceeds the range of the next frame of the face image. If it does, step S404 is performed: filter out the part ...
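The bounds check of step S403 and the filtering of step S404 can be sketched as a rectangle clip. This is an illustrative sketch under assumptions: the search box is represented as `(x, y, w, h)` with a top-left origin, and the names `exceeds_image` and `clip_to_image` are hypothetical rather than taken from the patent.

```python
from typing import Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, w, h), origin at the top-left corner


def exceeds_image(rect: Rect, width: int, height: int) -> bool:
    """Step S403 (sketch): does the search box extend past the image?"""
    x, y, w, h = rect
    return x < 0 or y < 0 or x + w > width or y + h > height


def clip_to_image(rect: Rect, width: int, height: int) -> Optional[Rect]:
    """Step S404 (sketch): keep only the part of the box inside the image.

    Returns None when the box lies entirely outside the image, since
    there is then nothing left to search.
    """
    x, y, w, h = rect
    left, top = max(x, 0), max(y, 0)
    right, bottom = min(x + w, width), min(y + h, height)
    if right <= left or bottom <= top:
        return None
    return (left, top, right - left, bottom - top)
```

For a 100×50 image, a box at `(-10, 20, 50, 40)` overlaps the left and bottom edges and is clipped to `(0, 20, 40, 30)`, while a box already inside the image is returned unchanged.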

Embodiment 3

[0134] Embodiment 3 applies the blink-based human-computer interaction method of the present invention to reading e-books. The specific way control commands are sent in the method is described below; steps not described can be implemented as in Embodiment 1 or Embodiment 2.

[0135] Figure 7 is a flow chart of sending control commands in the method of Embodiment 3 of the present invention. The control command sending method comprises the following steps:

[0136] Step S501: Detect blinking action.

[0137] When the number of pupil centers changes, it can be judged that a blink has occurred.

[0138] Step S502: Determine whether a single eye blinks.

[0139] When the number of detected pupil centers is 1, a single eye is blinking, and the user can be considered to be preparing to issue a control command. If only one eye blinks, execute step S503; if not, end this process,...
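The decision in steps S501 and S502 can be sketched as a comparison of pupil-center counts between consecutive frames. This is a hedged sketch, not the patent's code: it assumes an earlier step supplies the number of pupil centers found in the previous and current frames, and the function names and the `"END"` return value are hypothetical. What step S503 does is not shown in the text above, so the sketch only reports which branch is taken.

```python
def blink_detected(prev_count: int, curr_count: int) -> bool:
    """Step S501 (sketch): a change in the number of pupil centers is a blink."""
    return prev_count != curr_count


def next_step(prev_count: int, curr_count: int) -> str:
    """Step S502 (sketch): choose the branch after blink detection.

    Returns "S503" when exactly one pupil center remains visible, meaning
    a single eye is blinking. Returns "END" in every other case.
    """
    if blink_detected(prev_count, curr_count) and curr_count == 1:
        return "S503"
    return "END"
```

With both eyes open in the previous frame, `next_step(2, 1)` selects step S503, whereas `next_step(2, 0)` (both eyes closed) and `next_step(2, 2)` (no change) end the process.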

