Distributed cache automatic management system and distributed cache automatic management method

A distributed cache automatic management technology, applied in transmission systems, electrical components, etc. It addresses problems such as complex cache management methods, program defects, and complex cache management protocols, with the effect of simplifying cache management protocols, reducing difficulty, and reducing the possibility of errors.


Embodiment Construction

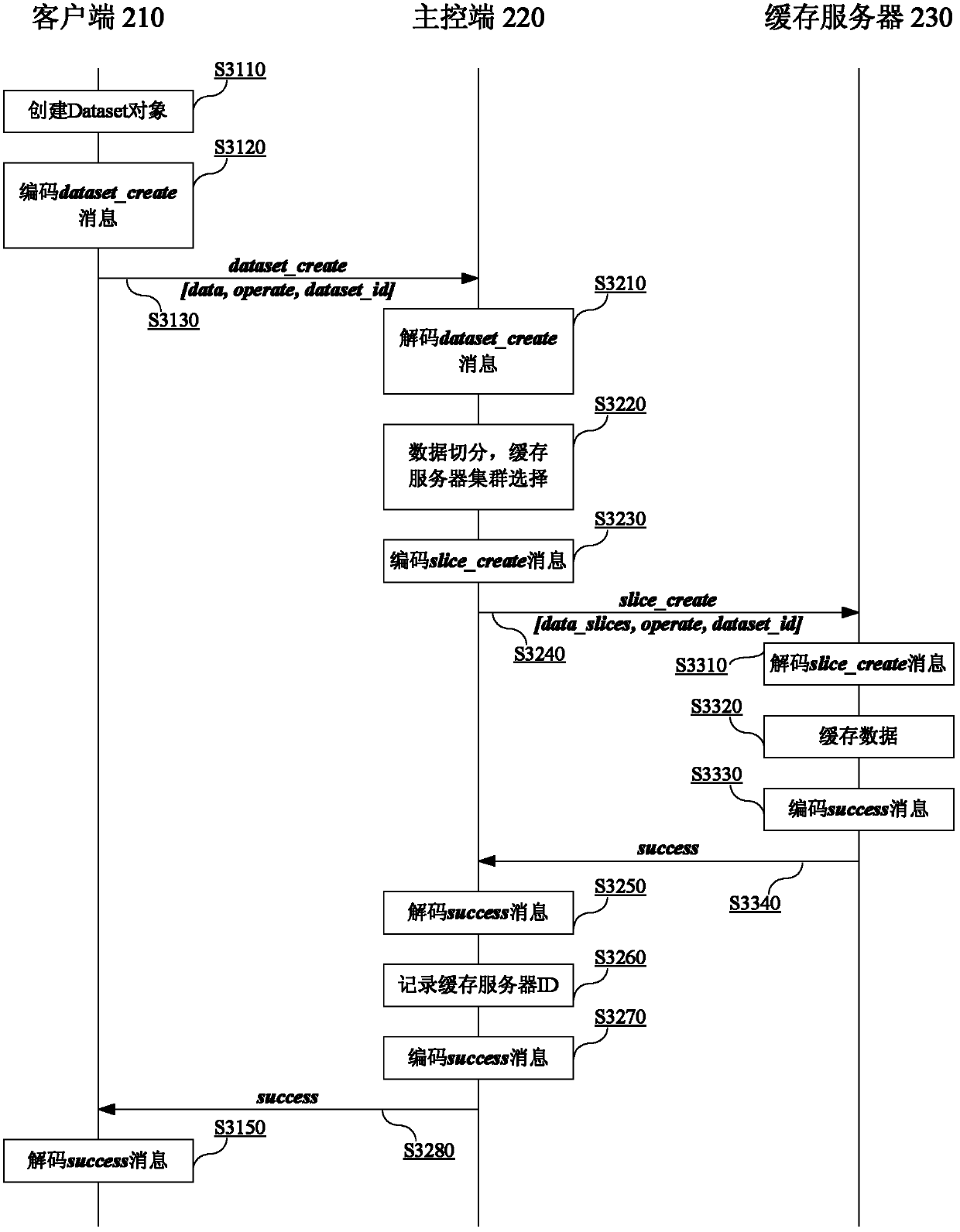

[0025] The preferred embodiments of the present invention are described in detail below with reference to the accompanying drawings. Details and functions that are unnecessary for the present invention are omitted from the description to avoid confusing the understanding of the present invention.

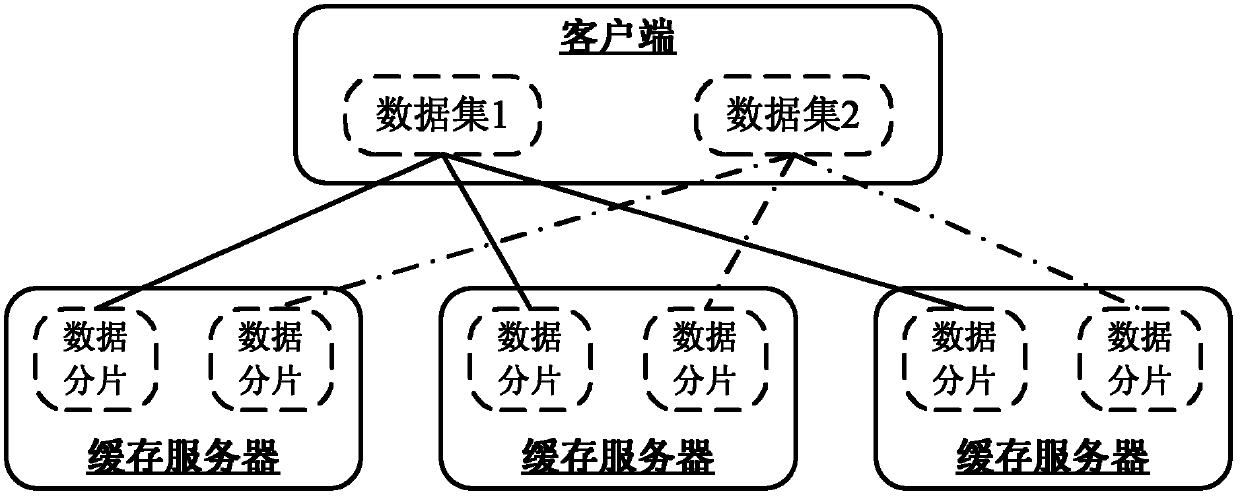

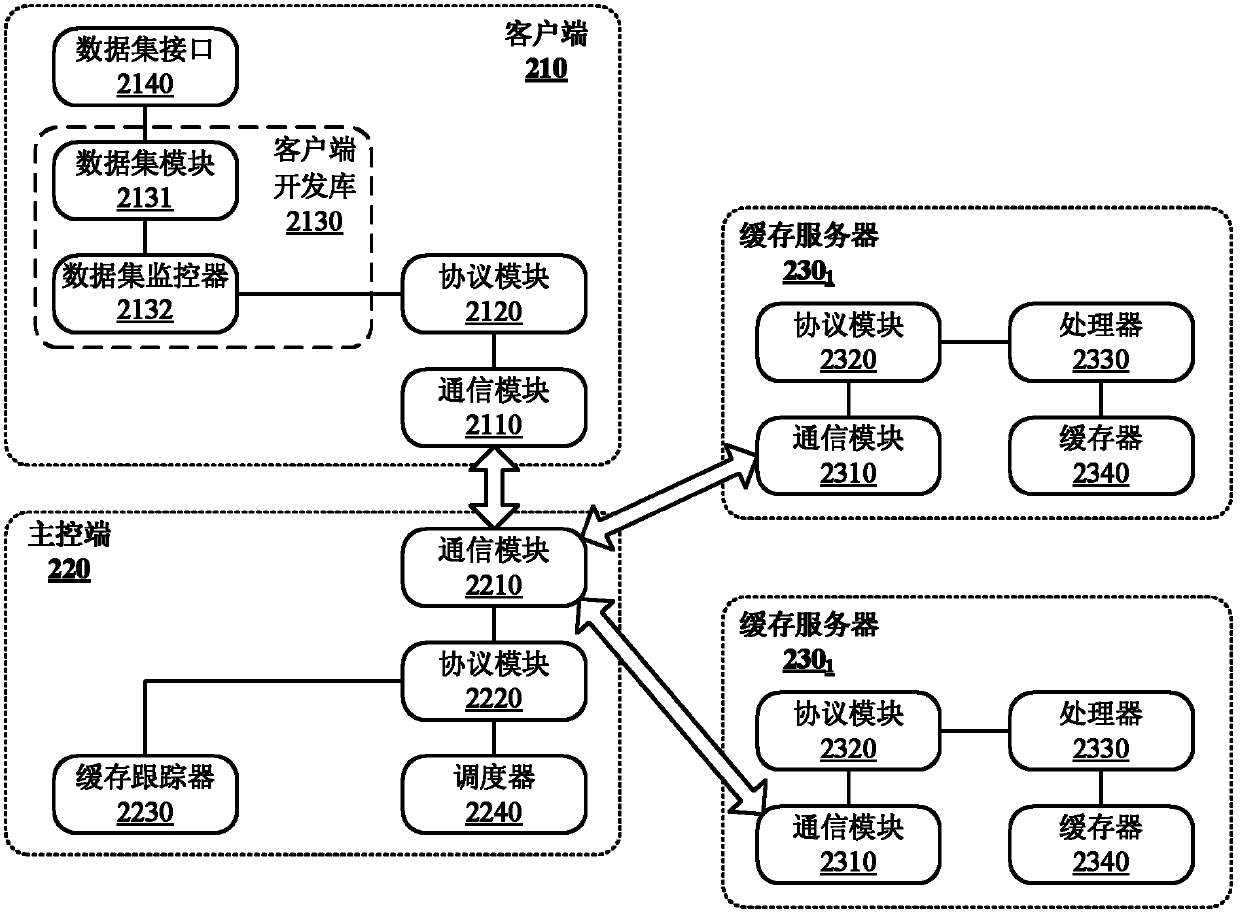

[0026] Figure 2 is a schematic diagram illustrating the distributed cache automatic management system 200 according to the present invention.

[0027] As shown in Figure 2, the distributed cache automatic management system 200 includes three parts: a client (Client) 210, a master control terminal (Master) 220, and a cache server cluster (Cache Servers) 230. For simplicity of description, Figure 2 shows only two cache servers, 230_1 and 230_2, but the present invention is not limited to a specific number of cache servers; any number of cache servers 230_1 to 230_N can be arranged as required. The programs running on the client 210 are written by ap...
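The excerpt names the three parts of system 200 but is cut off before describing how they interact. As a rough illustration only, the Python sketch below models the client 210 sending each request through the master 220, which picks one of the cache servers 230_1 to 230_N. The class names, the `locate` method, and the hash-modulo placement rule are all hypothetical assumptions, not the patented protocol.

```python
import hashlib


class CacheServer:
    """One node of the cache server cluster (230_1 ... 230_N)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._store: dict[str, object] = {}

    def get(self, key: str):
        # Returns None when the key is not cached on this node
        return self._store.get(key)

    def set(self, key: str, value: object) -> None:
        self._store[key] = value


class Master:
    """Master control terminal (220): knows the cluster and decides placement.

    HYPOTHETICAL: keys are placed by a stable hash modulo the number of
    servers; the source excerpt does not say how the Master assigns keys.
    """

    def __init__(self, servers: list[CacheServer]) -> None:
        if not servers:
            raise ValueError("at least one cache server is required")
        self._servers = list(servers)

    def locate(self, key: str) -> CacheServer:
        # md5 yields the same digest in every process, unlike built-in hash()
        digest = hashlib.md5(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._servers)
        return self._servers[index]


class Client:
    """Client (210): application code only sees get/set."""

    def __init__(self, master: Master) -> None:
        self._master = master

    def get(self, key: str):
        return self._master.locate(key).get(key)

    def set(self, key: str, value: object) -> None:
        self._master.locate(key).set(key, value)


if __name__ == "__main__":
    servers = [CacheServer("230_1"), CacheServer("230_2")]
    master = Master(servers)
    client = Client(master)
    client.set("user:42", {"name": "Alice"})
    print(client.get("user:42"))  # {'name': 'Alice'}
    print("stored on", master.locate("user:42").name)
```

Keeping placement decisions in the Master means application code on the client only calls `get` and `set`, which is in the spirit of the simplified cache management the summary describes; the patent's actual mechanism may differ. Note that simple modulo placement remaps most keys whenever N changes, so a real cluster would more likely use consistent hashing or an explicit key-to-server table.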
