Indoor robot vision hand-eye relation calibration method based on 3D image sensor

The invention concerns image sensor and indoor robot technology, applied in the field of robot vision. It addresses problems such as complex calculations, and aims to achieve high measurement accuracy, simplify the calibration process, and meet the needs of hand-eye calibration.

AI Technical Summary

Problems solved by technology

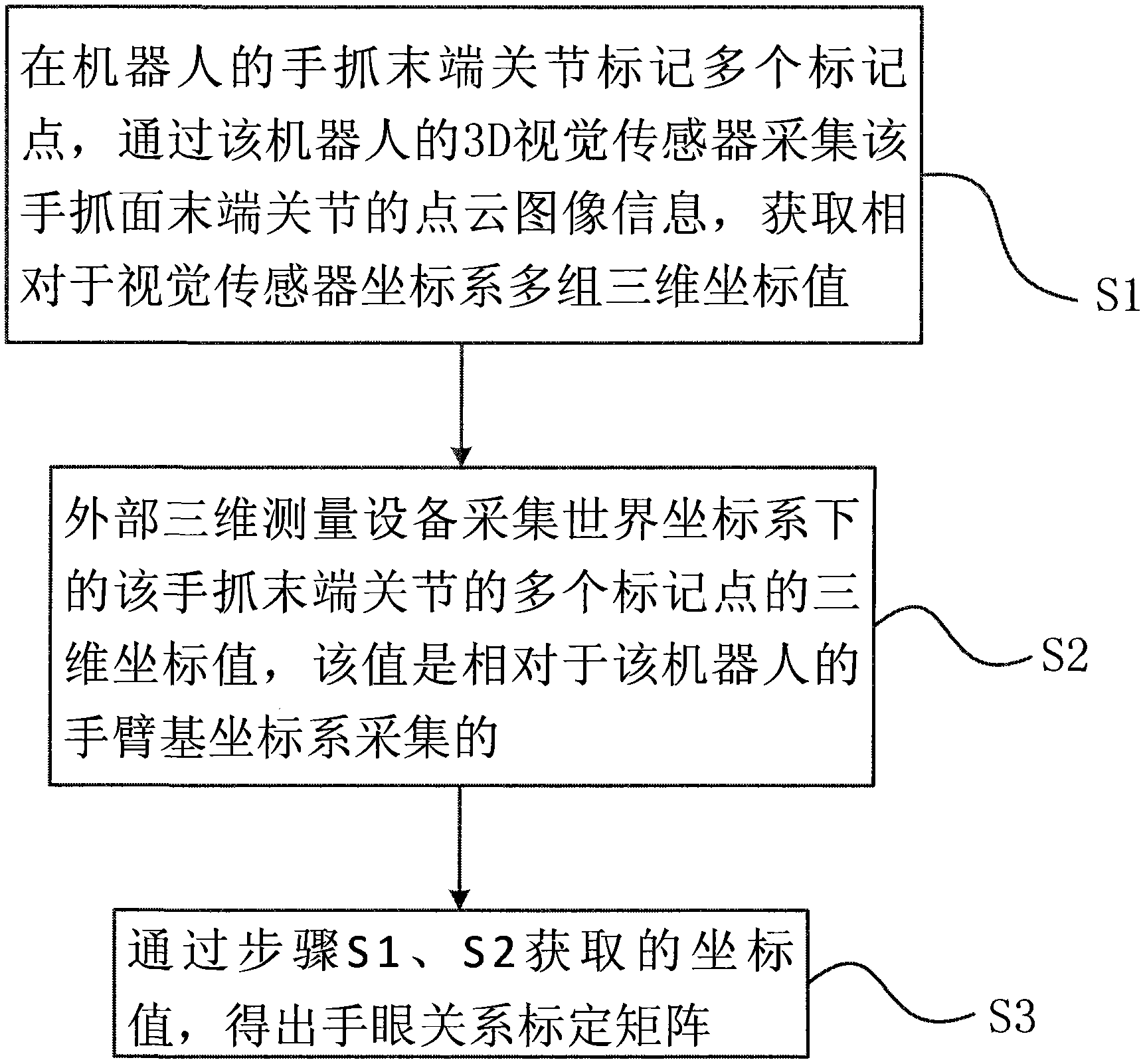

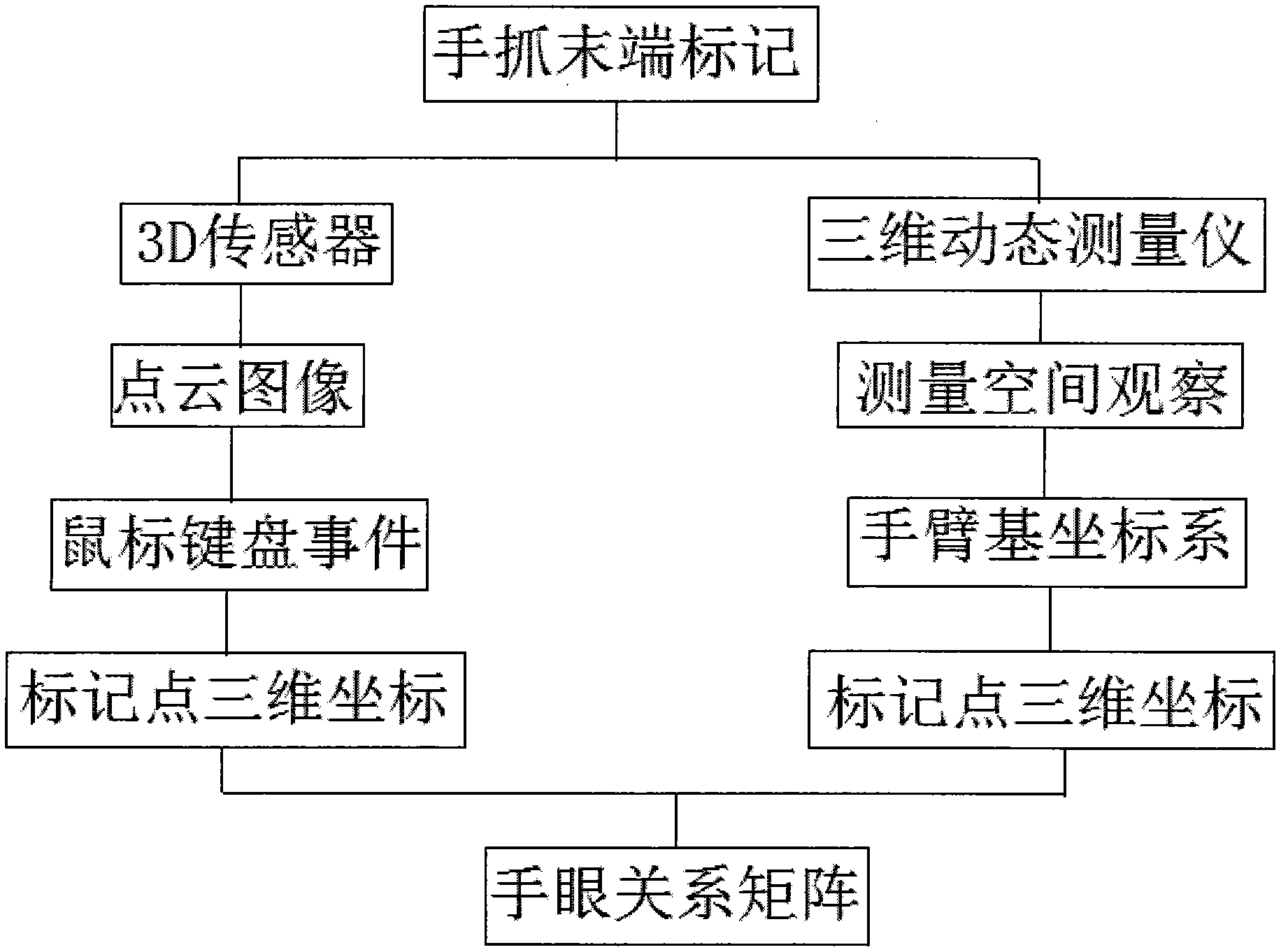

Embodiment Construction

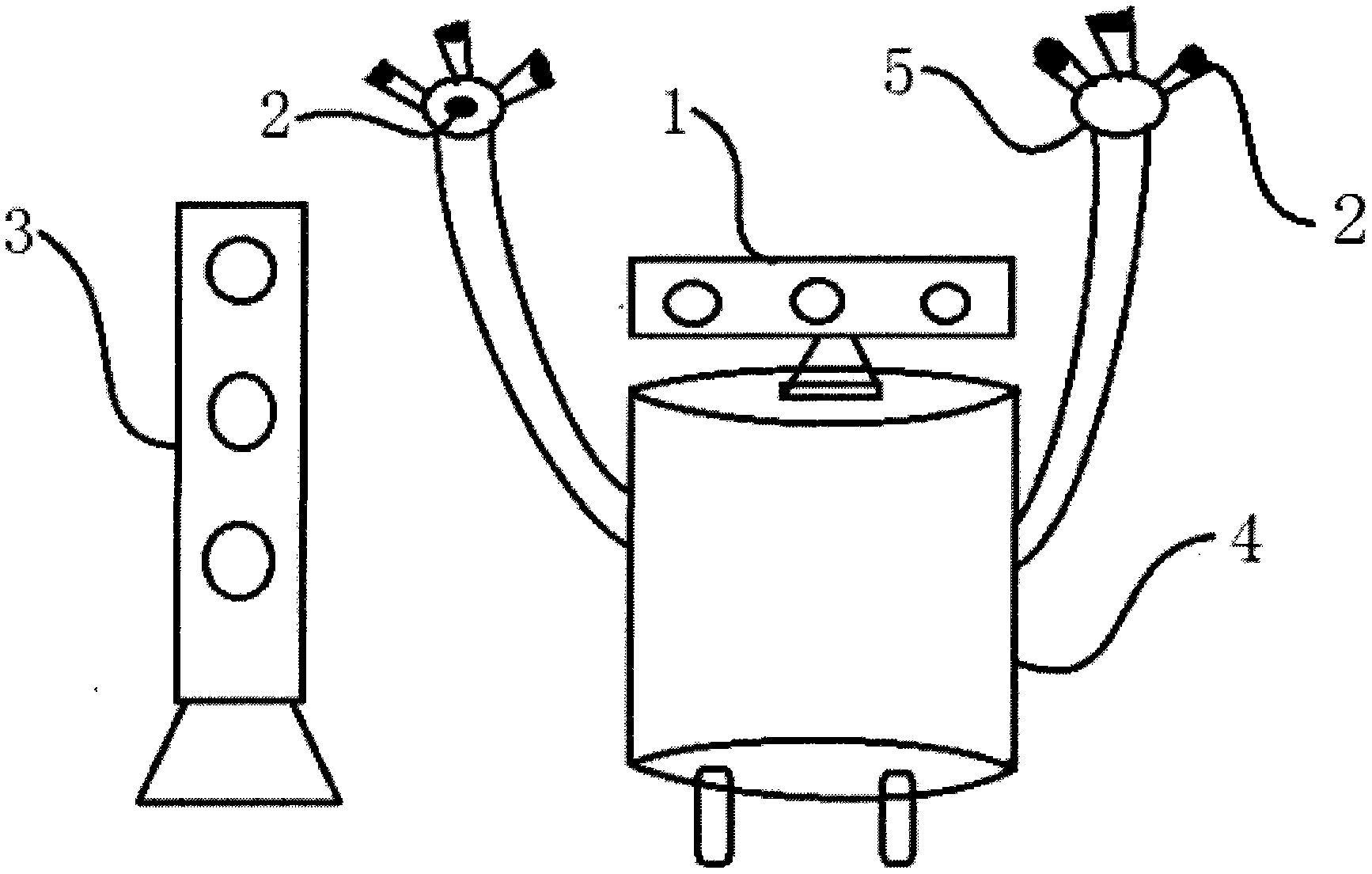

[0015] Please refer to Figure 1, which is a schematic diagram of the structure of the robot and the external three-dimensional measuring equipment in an embodiment of the present invention. The indoor mobile robot 4 is equipped with a Kinect 3D sensor. Four black dots 2 are marked as marker points on the end joint 5 of the robot's gripper hand, located at the tips of the three fingers of the end joint of the left and right gripper hands and in the palm. An NDI three-dimensional dynamic measuring instrument 3 is set up beside the indoor mobile robot. When measuring, enable the robot's arm joints and the hand end joint so that the end of the hand is at a suitable position in front of the robot and within the Kinect's field of view, and arrange the four marker points on the hand so that no three of them are collinear and the four are not coplanar. The robot termin...
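The marker placement condition in this paragraph (no three points collinear, the four points not coplanar) can be checked numerically before taking measurements. The following is a minimal sketch, not part of the patent: the function name `markers_are_valid`, the tolerance `tol`, and the sample coordinates are hypothetical, and the absolute tolerance assumes coordinates in consistent units.

```python
from itertools import combinations


def _sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def markers_are_valid(points, tol=1e-9):
    """Return True if no three of the four points are collinear
    and the four points are not coplanar.

    `tol` is a hypothetical absolute threshold; tune it to the
    units and noise level of the actual measurements.
    """
    if len(points) != 4 or any(len(p) != 3 for p in points):
        raise ValueError("expected four 3D points")

    # No three collinear: each triple must span a triangle whose
    # squared doubled area (|n|^2) is above the tolerance.
    for a, b, c in combinations(points, 3):
        n = _cross(_sub(b, a), _sub(c, a))
        if _dot(n, n) <= tol:
            return False

    # Not coplanar: the scalar triple product (six times the
    # tetrahedron volume) must be above the tolerance. This alone
    # implies the collinearity check; both are kept to mirror the text.
    p0, p1, p2, p3 = points
    volume6 = abs(_dot(_sub(p1, p0), _cross(_sub(p2, p0), _sub(p3, p0))))
    return volume6 > tol


# Hypothetical marker coordinates (three fingertips and the palm).
fingertips_and_palm = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                       (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
print(markers_are_valid(fingertips_and_palm))
```

Four points that pass this check span three-dimensional space, which is presumably why the paragraph requires the arrangement: a flat or degenerate marker layout would leave part of the pose between the two measurement frames undetermined.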
