CPU (central processing unit) and GPU (graphics processing unit) on-chip cache sharing method and device

An on-chip cache sharing technology, applied in the field of on-chip caches shared by a CPU and a GPU. It addresses problems such as degraded CPU and GPU performance, latency sensitivity, and the GPU being unable to process images in real time, with the effect of improving program performance and achieving high performance.


Embodiment Construction

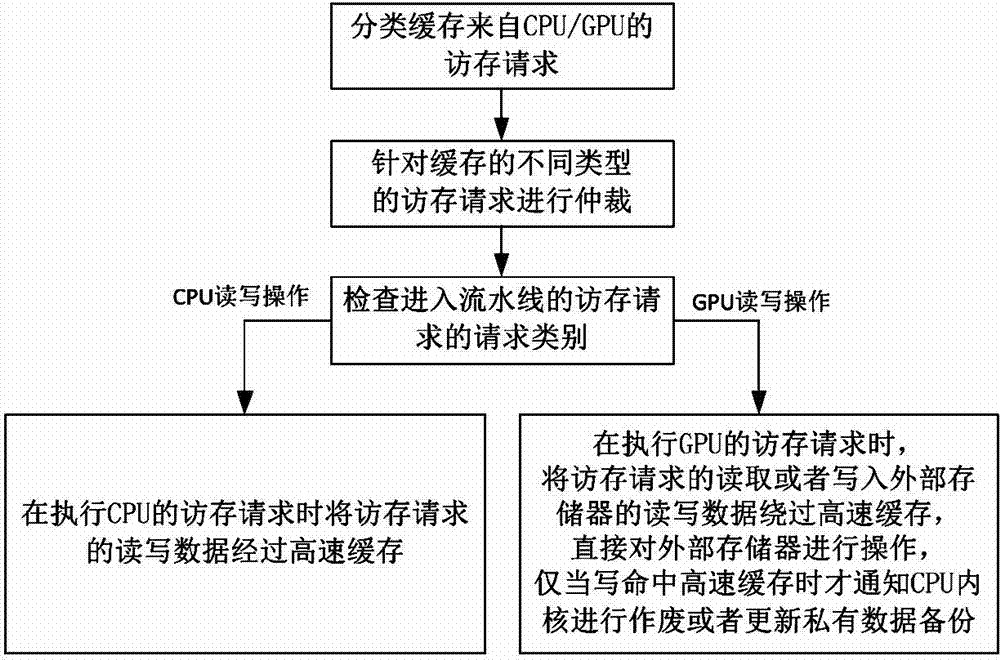

[0057] As shown in Figure 1, the method for sharing the on-chip cache between the CPU and the GPU in this embodiment is implemented in the following steps:

[0058] 1) Classify and cache memory access requests from the CPU and memory access requests from the GPU;

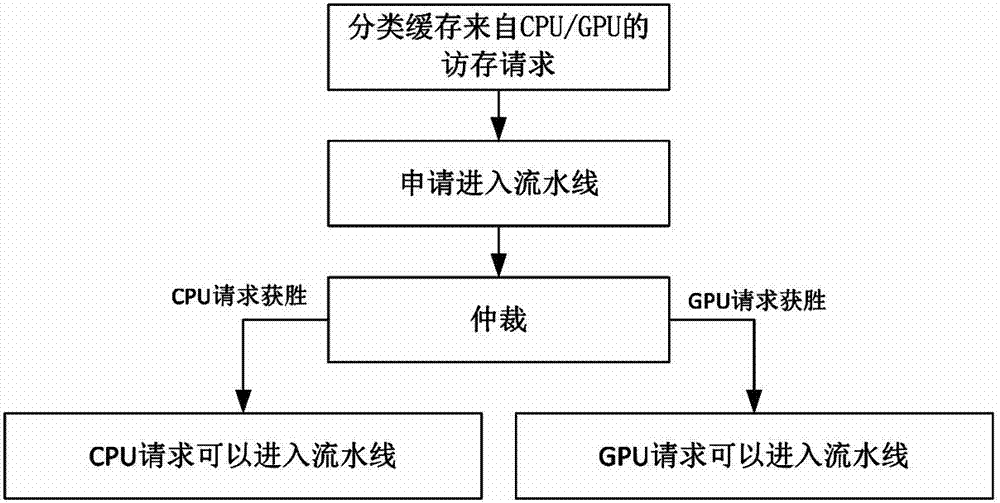

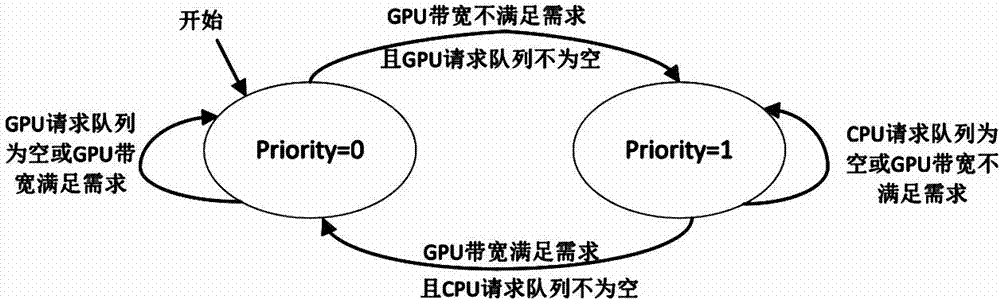

[0059] 2) Arbitrate among the different types of cached memory access requests; the memory access requests that win arbitration enter the pipeline;

[0060] 3) Check the request type of each memory access request entering the pipeline. If the request is a memory access request from the CPU, the data it reads or writes is cached when the request is executed. If the request is a memory access request from the GPU, the data it reads from or writes to the external memory bypasses the cache when the request is executed, and the request operates directly on the external memory. Only w...
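Steps 1) to 3) can be illustrated with a small software model. This is a minimal sketch under stated assumptions, not the patented implementation: the names `Source`, `Request`, and `SharedCacheModel` are hypothetical, the classified requests of step 1 are modeled as one queue per source, the arbiter uses round-robin because the text above does not name an arbitration policy, and CPU writes are treated as write-through. The condition that begins with "Only w..." is truncated in the source and is not modeled, so coherence between GPU writes and lines already cached for the CPU is out of scope here.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    CPU = "cpu"
    GPU = "gpu"


@dataclass
class Request:
    source: Source
    address: int
    is_write: bool = False
    data: int = 0


class SharedCacheModel:
    """Toy model of steps 1) to 3); all names and policies are assumptions."""

    def __init__(self):
        # Step 1: one queue per request type (assumed structure).
        self.queues = {Source.CPU: deque(), Source.GPU: deque()}
        self.cache = {}    # on-chip cache: address -> data
        self.memory = {}   # external memory: address -> data
        self._last_winner = Source.GPU  # so the CPU queue is tried first

    def submit(self, req):
        """Step 1: classify the request by source and queue it."""
        self.queues[req.source].append(req)

    def arbitrate(self):
        """Step 2: pick the next request (round-robin is an assumption)."""
        order = [Source.CPU, Source.GPU]
        if self._last_winner is Source.CPU:
            order.reverse()
        for src in order:
            if self.queues[src]:
                self._last_winner = src
                return self.queues[src].popleft()
        return None  # both queues are empty

    def execute(self, req):
        """Step 3: CPU data goes through the cache, GPU data bypasses it."""
        if req.source is Source.CPU:
            if req.is_write:
                self.cache[req.address] = req.data
                self.memory[req.address] = req.data  # write-through (assumed)
                return req.data
            if req.address not in self.cache:
                # Read miss: fill the cache from external memory.
                self.cache[req.address] = self.memory.get(req.address, 0)
            return self.cache[req.address]
        # GPU request: operate directly on external memory.
        if req.is_write:
            self.memory[req.address] = req.data
            return req.data
        return self.memory.get(req.address, 0)

    def run(self):
        """Drain both queues through the arbiter and the pipeline."""
        results = []
        req = self.arbitrate()
        while req is not None:
            results.append(self.execute(req))
            req = self.arbitrate()
        return results


model = SharedCacheModel()
model.submit(Request(Source.CPU, 0x10, is_write=True, data=7))
model.submit(Request(Source.GPU, 0x20, is_write=True, data=9))
model.submit(Request(Source.GPU, 0x10))
results = model.run()
```

After `run()`, address `0x10` written by the CPU is present in `model.cache`, while address `0x20` written by the GPU appears only in `model.memory`, which is the bypass behavior described in step 3).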
