Data center task scheduling method based on dynamic temperature prediction model

A prediction model and task scheduling technology, applied in the fields of resource allocation, multiprogramming arrangements, and energy-efficient computing, that addresses the degraded prediction accuracy of the temperature model and improves cooling efficiency.


Embodiment Construction

[0017] Key concepts, definitions and symbols of the present invention:

[0018] 1. Steady state: In compute-intensive cluster applications, calculations take a long time. After a sufficiently long period, the ambient temperature and airflow of the cluster tend to stabilize; this state is called the steady state.

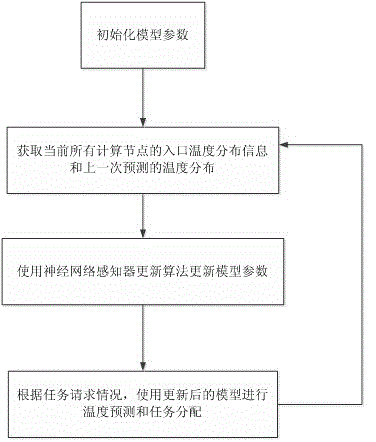

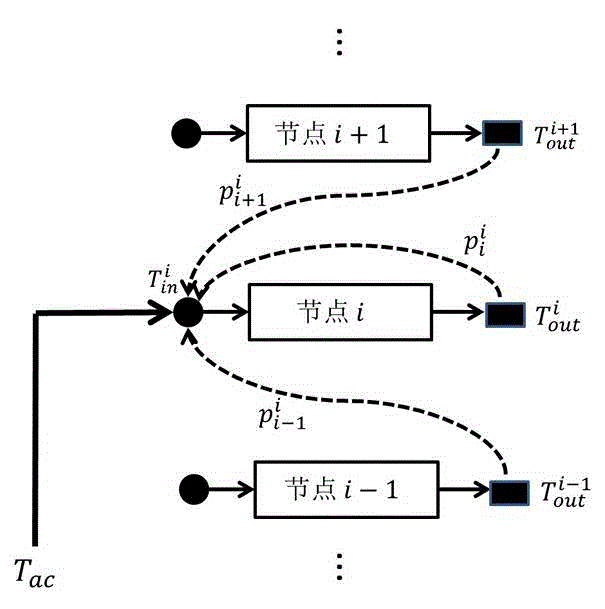

[0019] 2. Temperature prediction model: a two-dimensional matrix A which, given the current CPU usage distribution c of all computing nodes, predicts the inlet temperature distribution of all computing nodes in the steady state by calculating Ac+b, where b is a constant parameter.
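The prediction Ac+b can be illustrated with a minimal sketch. It assumes that c is a vector with one CPU usage value per computing node, that A is a square matrix with one row per node, and that b is a vector with one constant offset per node (a single scalar constant would correspond to equal entries). The function name `predict_inlet_temperatures` and all numeric values are hypothetical and chosen only for illustration; they are not taken from the patent.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def predict_inlet_temperatures(A: Matrix, c: Vector, b: Vector) -> Vector:
    """Return the predicted steady-state inlet temperatures A c + b.

    A[i][j] is the assumed contribution of node j's CPU usage to the
    inlet temperature of node i; b[i] is node i's constant offset.
    """
    if len(A) != len(b):
        raise ValueError("A must have one row per entry of b")
    if any(len(row) != len(c) for row in A):
        raise ValueError("each row of A must have one column per node in c")
    # Row-by-row dot product with c, plus the constant term.
    return [
        sum(a_ij * c_j for a_ij, c_j in zip(row, c)) + b_i
        for row, b_i in zip(A, b)
    ]


# Hypothetical 3-node example; the coefficients are illustrative, not measured.
A_example = [
    [4.0, 1.0, 0.0],
    [1.0, 4.0, 1.0],
    [0.0, 1.0, 4.0],
]
b_example = [20.0, 20.0, 20.0]   # assumed baseline inlet temperature per node
c_example = [1.0, 0.5, 0.0]      # CPU usage as a fraction of full load

predicted = predict_inlet_temperatures(A_example, c_example, b_example)
print(predicted)
```

In this sketch the fully loaded first node raises its own predicted inlet temperature the most and its neighbour's slightly, which is the kind of coupling between nodes that a matrix model of this form can express.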

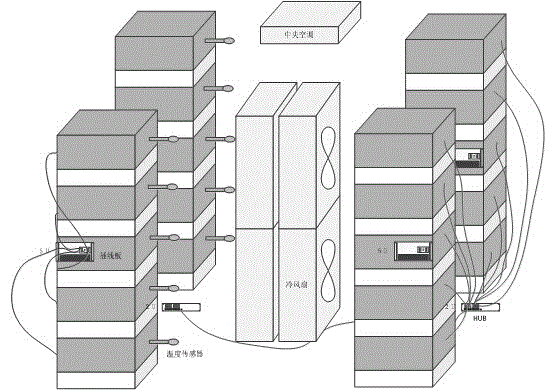

[0020] Take the system environment shown in Figure 1 as an example: 4 cabinets are used, each cabinet has 5 layers of racks, and each cabinet is powered by a power strip; the horizontal airflow of the cooling fan represents the cold-aisle blowing mechanism; a temperature sensor is configured at the ventilation inlet of each cabinet to detect the tem...

