Method for mining human-computer interaction theme actions for video analysis

A human-computer interaction and video analysis technique, applied in the field of image processing, that addresses problems such as the difficulty of finding topics.


Embodiment Construction

[0074] The human-computer interaction theme action mining method for video analysis proposed by the present invention comprises the following steps:

[0075] (1) Extract the feature matrix V of the video sequence to be analyzed. The specific process is as follows:

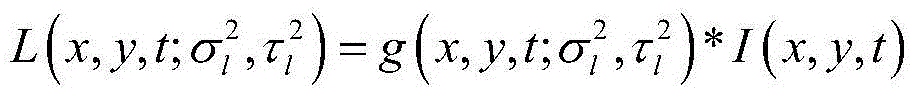

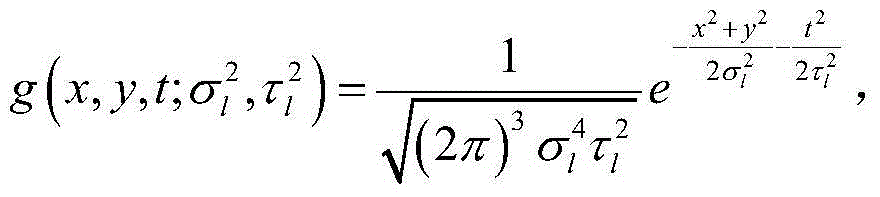

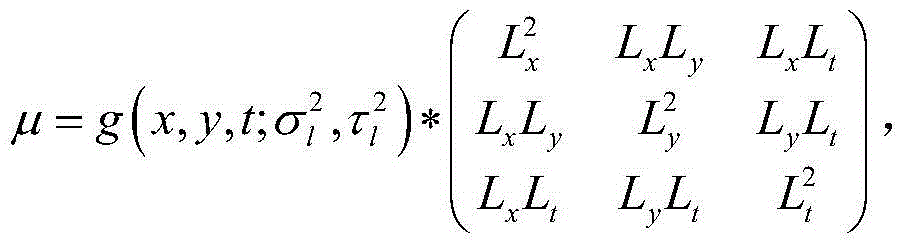

[0076] (1-1) Let the video sequence to be analyzed be I(x, y, t), where x and y are the coordinates of a pixel within the t-th frame image. Gaussian convolution is applied to the video sequence I to obtain the smoothed video image sequence L:

[0077] $L(x, y, t; \sigma_l^2, \tau_l^2) = g(x, y, t; \ldots$
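The Gaussian convolution in step (1-1) can be illustrated with a minimal sketch. The equation above is cut off in this text, so the sketch assumes a separable space-time Gaussian kernel g whose spatial variance is σ_l² and whose temporal variance is τ_l²; that kernel form, the (t, y, x) array layout, the edge-replicating boundary handling, and all function names are assumptions made for illustration and are not taken from the patent.

```python
import numpy as np


def gaussian_kernel_1d(variance, truncate=4.0):
    """Return a normalized 1-D Gaussian kernel for the given variance (assumed form)."""
    sigma = float(np.sqrt(variance))
    radius = max(1, int(truncate * sigma + 0.5))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets ** 2) / (2.0 * variance))
    return kernel / kernel.sum()


def smooth_along_axis(volume, kernel, axis):
    """Convolve `volume` with `kernel` along one axis, replicating edge values."""
    radius = len(kernel) // 2
    pad = [(0, 0)] * volume.ndim
    pad[axis] = (radius, radius)
    padded = np.pad(volume, pad, mode="edge")
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), axis, padded
    )


def gaussian_smooth_video(video, spatial_var, temporal_var):
    """Smooth a video volume indexed as (t, y, x).

    spatial_var plays the role of sigma_l^2 and temporal_var the role of
    tau_l^2; the separable kernel is an assumption, not the patent's text.
    """
    video = np.asarray(video, dtype=np.float64)
    k_space = gaussian_kernel_1d(spatial_var)
    k_time = gaussian_kernel_1d(temporal_var)
    out = smooth_along_axis(video, k_space, axis=2)  # x direction
    out = smooth_along_axis(out, k_space, axis=1)    # y direction
    out = smooth_along_axis(out, k_time, axis=0)     # t direction
    return out
```

Calling `gaussian_smooth_video(I, spatial_var, temporal_var)` on an array `I` of shape (frames, height, width) returns an array L of the same shape. Because the assumed kernel is separable, three 1-D passes give the same result as one 3-D convolution at lower cost.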
