Free-scene egocentric-vision finger key point detection method based on a deep convolutional neural network

A deep convolutional neural network technique, applied in the research fields of computer vision and machine learning, with the stated effects of greater training variability and richer image features

Embodiment

[0046] As shown in Figure 4, the method for detecting finger key points from the first-person (egocentric) perspective in free scenes, based on a deep convolutional neural network, includes the following steps:

[0047] S1. Obtain the training data. Assuming that the area containing the hand (the foreground area) has already been obtained through a suitable localization technique, manually annotate the coordinates of the finger key points, including fingertips and finger joints;

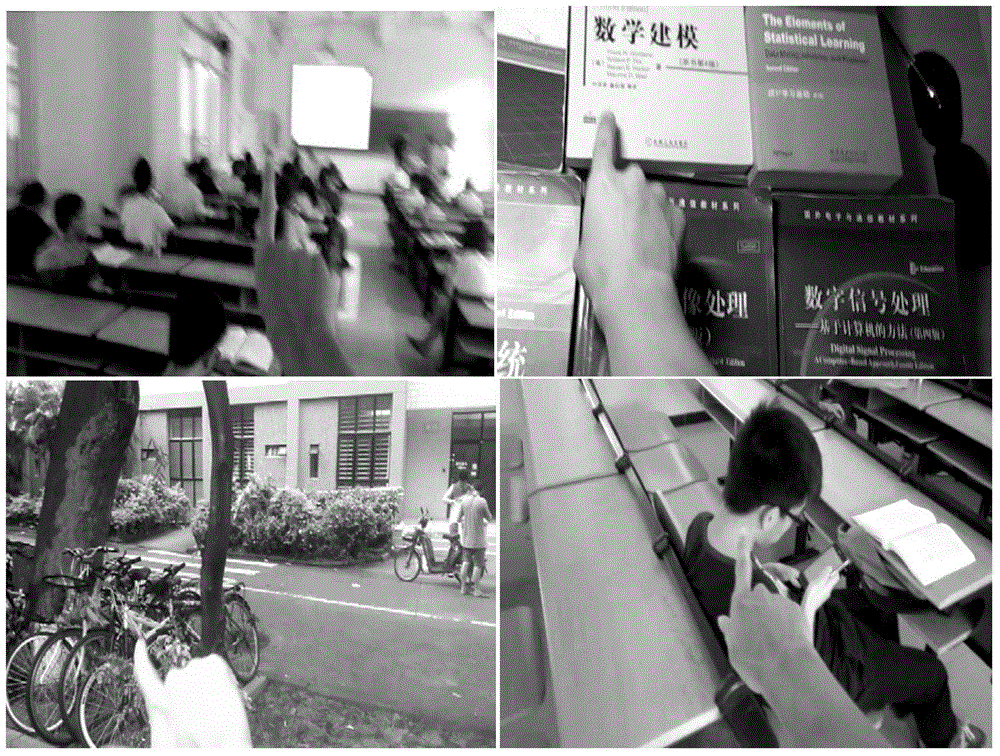

[0048] S1.1. Collect a large number of real-scene samples, using a camera mounted on glasses to simulate the first-person perspective (as shown in Figures 1(a)-1(b)). Record a large amount of video such that every frame contains a hand gesture; the data samples need to cover different scenes, lighting conditions, and postures. Then crop out a rectangular foreground image containing the hand region;

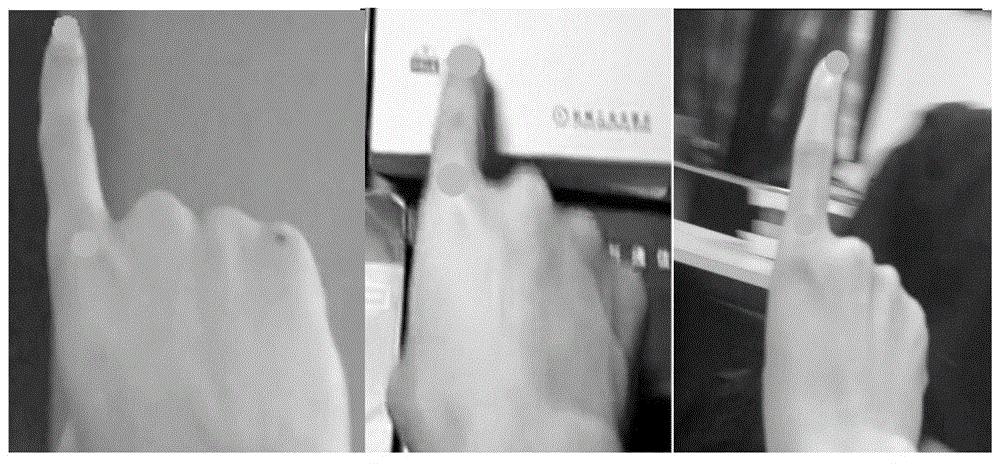
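The cropping in step S1.1 and the manually annotated key points from step S1 have to stay consistent: once the hand region is cut out, the key point coordinates need to be expressed relative to the crop. The following is a minimal sketch of that bookkeeping, not the patent's own implementation. The function name `crop_hand_region`, the `(x_min, y_min, x_max, y_max)` box format, and the normalization of coordinates to the range [0, 1] are all assumptions made for illustration.

```python
import numpy as np


def crop_hand_region(frame, box, keypoints):
    """Crop a rectangular hand region and re-express key points in crop coordinates.

    frame:     H x W x 3 image array.
    box:       (x_min, y_min, x_max, y_max) in pixels, max edges exclusive
               (assumed format; the source does not specify one).
    keypoints: K x 2 sequence of (x, y) pixel coordinates in the full frame,
               e.g. a fingertip and a finger joint.
    """
    height, width = frame.shape[:2]
    x0, y0, x1, y1 = box

    # Clamp the box to the frame so slicing never goes out of bounds.
    x0, y0 = max(0, x0), max(0, y0)
    x1, y1 = min(width, x1), min(height, y1)
    if x1 <= x0 or y1 <= y0:
        raise ValueError("box does not overlap the frame")

    crop = frame[y0:y1, x0:x1]

    # Shift key points so the crop's top-left corner becomes the origin.
    origin = np.array([x0, y0], dtype=np.float32)
    kp_crop = np.asarray(keypoints, dtype=np.float32) - origin

    # Assumed convention: divide by crop width and height to get [0, 1] targets.
    size = np.array([x1 - x0, y1 - y0], dtype=np.float32)
    kp_norm = kp_crop / size
    return crop, kp_crop, kp_norm


# Example with a blank 640x480 frame and two hypothetical annotations.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
box = (200, 100, 360, 300)
keypoints = [(280, 120), (280, 200)]  # fingertip, finger joint
crop, kp_crop, kp_norm = crop_hand_region(frame, box, keypoints)
```

Normalizing by the crop size is one common way to make regression targets independent of the crop's resolution; whether the method described here does so is not stated in the available text.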

[0049] In step S1.1, the gesture is a single-finger gesture, the coordinates are manually annotated, and the finger...
