Horizontal visual angle estimation and calibration method based on depth camera

A depth-camera-based technology for horizontal viewing-angle estimation, applied in the field of eye tracking, which addresses the problems that existing methods cannot be widely deployed, are inflexible to operate, and impose strong restrictions on head movement.

Embodiment Construction

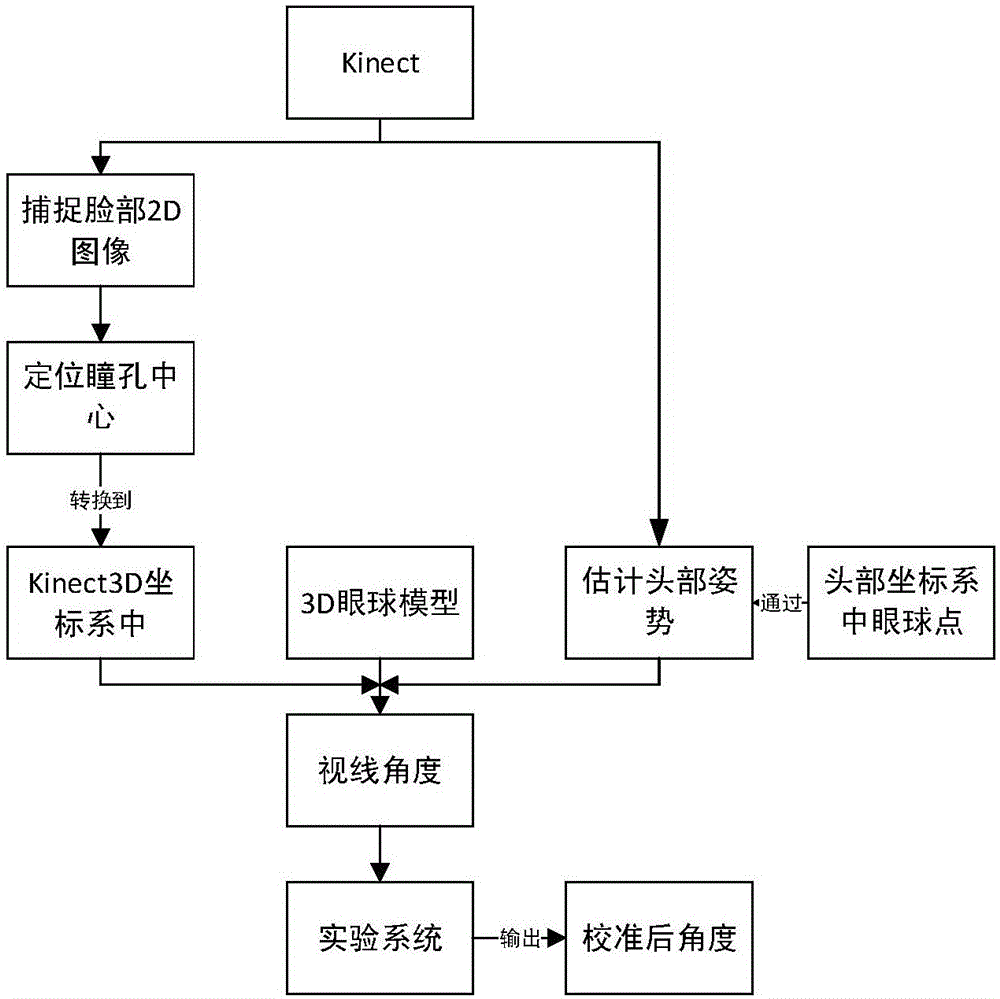

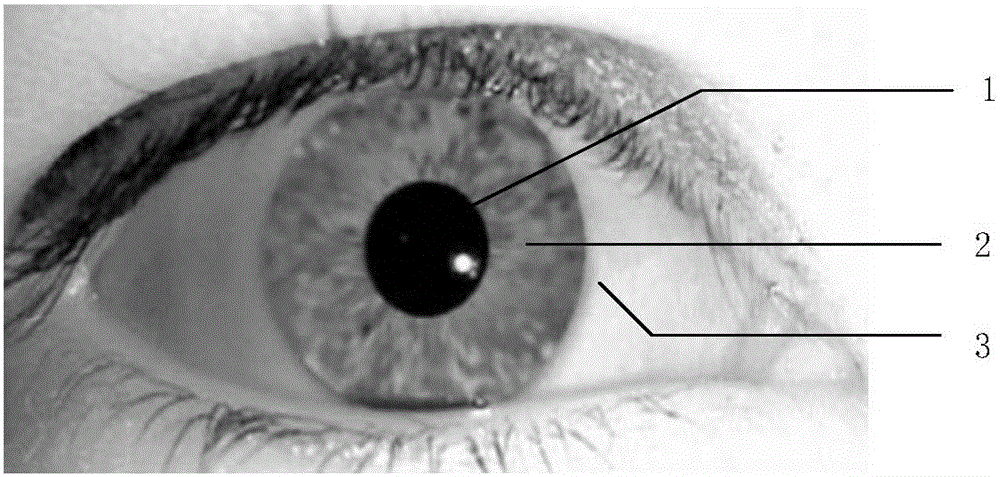

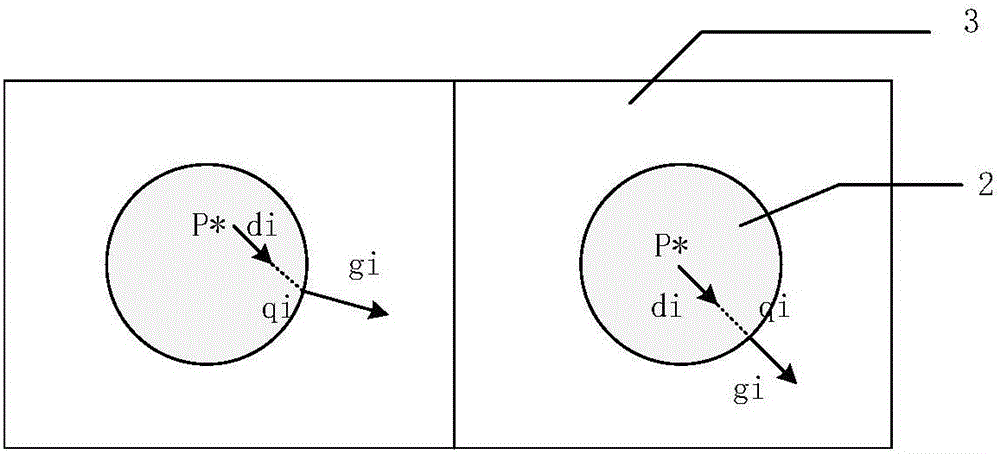

[0049] The present invention provides a horizontal viewing-angle estimation and calibration method based on a depth camera 5. The method uses a Kinect depth camera 5 to obtain the three-dimensional coordinates of facial feature points, and combines a pupil 1 localization algorithm, a 3D eyeball model and a preset eyeball center point to obtain the horizontal line-of-sight angle. The resulting line-of-sight angles are then calibrated using an experimental system.
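The geometry in [0049] can be illustrated with a minimal Python sketch. It rests on assumptions that the source does not state: the preset eyeball center is given in a head-fixed frame and mapped into camera coordinates with a rigid head pose (R, t); the pupil center's 3D position is already available in camera coordinates; the camera's z axis points away from the camera toward the user and x is horizontal; and the eyeball radius is a typical value of about 12 mm. The calibration step is shown as an ordinary least-squares linear fit against known target angles, which is only one possible model; the actual calibration procedure of the experimental system is not specified. All function names and numeric values are hypothetical.

```python
import numpy as np

# Assumed typical human eyeball radius in millimetres (not from the source).
EYEBALL_RADIUS_MM = 12.0


def eyeball_center_in_camera(center_head, R, t):
    """Map a preset eyeball center from head coordinates to camera coordinates.

    Assumes the head pose is the rigid transform p_cam = R @ p_head + t.
    """
    return R @ np.asarray(center_head, dtype=float) + np.asarray(t, dtype=float)


def horizontal_gaze_angle(eye_center, pupil_center):
    """Horizontal line-of-sight angle in degrees.

    The gaze ray runs from the eyeball center through the pupil center.
    Only the horizontal (x) and depth (z) components are used, so vertical
    eye movement does not change the result. 0 degrees means looking straight
    back at the camera (along -z); positive angles point toward +x.
    """
    d = np.asarray(pupil_center, dtype=float) - np.asarray(eye_center, dtype=float)
    return float(np.degrees(np.arctan2(d[0], -d[2])))


def fit_linear_calibration(estimated, reference):
    """Least-squares fit of reference_angle ~= a * estimated_angle + b."""
    est = np.asarray(estimated, dtype=float)
    A = np.column_stack([est, np.ones_like(est)])
    (a, b), *_ = np.linalg.lstsq(A, np.asarray(reference, dtype=float), rcond=None)
    return float(a), float(b)


def apply_calibration(angle, a, b):
    """Correct a raw estimated angle with the fitted linear model."""
    return a * angle + b


if __name__ == "__main__":
    R = np.eye(3)                       # frontal head, no rotation
    t = np.array([0.0, 0.0, 600.0])     # head origin 600 mm in front of the camera
    center = eyeball_center_in_camera([30.0, 0.0, 0.0], R, t)  # hypothetical eye offset
    yaw = np.radians(10.0)
    # Place the pupil on the eyeball sphere, rotated 10 degrees toward +x.
    pupil = center + EYEBALL_RADIUS_MM * np.array([np.sin(yaw), 0.0, -np.cos(yaw)])
    print(horizontal_gaze_angle(center, pupil))  # approximately 10.0

    # Hypothetical calibration data: raw estimates vs. known target angles.
    a, b = fit_linear_calibration([-20, -10, 0, 10, 20], [-21, -10, 1, 12, 23])
    print(a, b)  # approximately 1.1 and 1.0
```

Only the direction of the eyeball-center-to-pupil vector matters for the angle, so the exact radius chosen for the pupil position does not affect the estimate; it only matters when the pupil position is reconstructed from the model.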

[0050] The present invention is further described below with reference to the accompanying drawings. The following embodiments serve only to illustrate the technical solution of the present invention more clearly and are not intended to limit its scope of protection.

[0051] As shown in Figure 1, which is the flowchart of the method of the invention, the method comprises the following steps:

[0052] Step 1: Use the Kinect depth camera 5 to capture a 2D image of the face and estimate the head pose.
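The description does not state how the head pose is computed in Step 1. One common approach, shown here purely as an assumption, is to rigidly align the 3D facial feature points measured by the depth camera with a frontal reference template using the Kabsch (SVD) algorithm; the resulting rotation R and translation t form the head pose, and the yaw can be read from R. The template coordinates, the choice of landmarks, the sign convention for yaw and the function names below are all hypothetical.

```python
import numpy as np


def rigid_align(reference, observed):
    """Kabsch algorithm: rotation R and translation t minimising
    sum_i ||R @ reference[i] + t - observed[i]||^2.

    reference, observed: (N, 3) arrays of corresponding 3D landmarks
    (N >= 3, not all collinear).
    """
    P = np.asarray(reference, dtype=float)
    Q = np.asarray(observed, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t


def yaw_degrees(R):
    """Head yaw: rotation about the vertical (y) axis relative to the template, in degrees.

    Computed from where R sends the template's z axis, projected onto the x-z plane.
    """
    return float(np.degrees(np.arctan2(R[0, 2], R[2, 2])))


if __name__ == "__main__":
    # Hypothetical frontal template (mm): outer eye corners, nose tip, chin.
    template = np.array([[-45.0, 0.0, 0.0], [45.0, 0.0, 0.0],
                         [0.0, 40.0, -30.0], [0.0, 110.0, 0.0]])
    theta = np.radians(25.0)
    Ry = np.array([[np.cos(theta), 0.0, np.sin(theta)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(theta), 0.0, np.cos(theta)]])
    # Synthetic "measurement": template rotated by 25 degrees and shifted.
    measured = template @ Ry.T + np.array([10.0, -5.0, 650.0])
    R, t = rigid_align(template, measured)
    print(yaw_degrees(R))  # approximately 25.0
```

Using several well-spread, non-coplanar landmarks generally makes this least-squares fit less sensitive to per-point depth noise than estimating yaw from a single pair of points such as the two eye corners.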

[0053] The present invention...
