Parallel and scalable data processing method for network I/O (input/output) virtualization

A parallel and scalable data processing technology, applied in the fields of electrical digital data processing, software emulation/interpretation/simulation, and program control design. It addresses computing resource bottlenecks, packet loss, and performance degradation, achieving dynamic and efficient scaling, parallelization, and scalability, and improving processing efficiency.


Detailed Description of the Embodiments

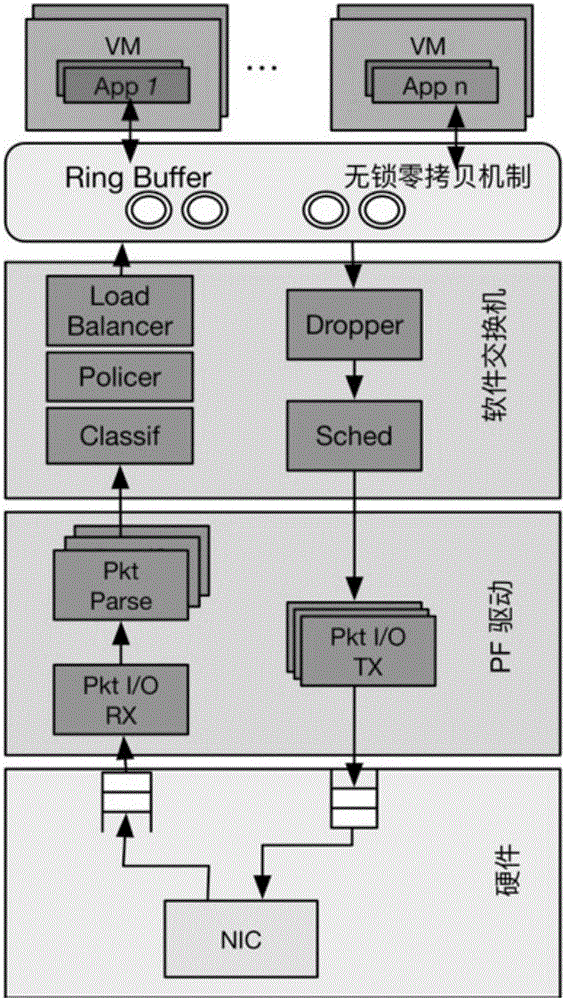

[0020] The embodiments of the present invention are described in detail below with reference to the accompanying drawings. This embodiment is implemented on the basis of the technical solution of the present invention and gives a detailed implementation and a specific operating procedure, but the scope of application is not limited to this embodiment.

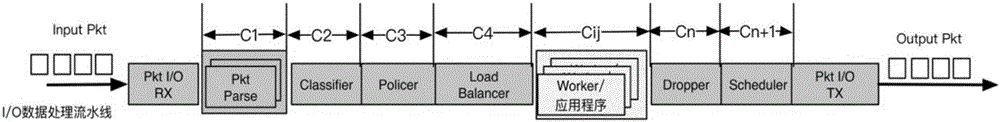

[0021] This embodiment takes I/O paravirtualization as an example: the packet protocol parsing performed by the network card driver is parallelized symmetrically, and the software switch in the VMM is parallelized asymmetrically as a pipeline.
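As an illustration of what "symmetric" parallelization of protocol parsing can look like, the following is a minimal sketch, not the patented implementation. It assumes that every worker runs the same parser and that packets are dispatched by a flow hash, so all packets of one flow reach the same worker and keep their relative order. The header layout (`HEADER`), `flow_hash`, and `symmetric_parse` are hypothetical names chosen for this example, and Python threads under the GIL show the structure rather than real CPU speedup.

```python
import queue
import struct
import threading
import zlib
from typing import Dict, List, Tuple

# Hypothetical minimal header (NOT a real wire format): src IP (4 bytes),
# dst IP (4 bytes), src port (2), dst port (2), protocol (1), then payload.
HEADER = struct.Struct("!4s4sHHB")

FiveTuple = Tuple[bytes, bytes, int, int, int]


def parse(pkt: bytes) -> Tuple[FiveTuple, int]:
    """Parse the hypothetical header; return (5-tuple, payload length)."""
    src, dst, sport, dport, proto = HEADER.unpack_from(pkt)
    return (src, dst, sport, dport, proto), len(pkt) - HEADER.size


def flow_hash(pkt: bytes) -> int:
    # Hash only the header (the 5-tuple) so a flow always maps to one worker.
    return zlib.crc32(pkt[:HEADER.size])


def symmetric_parse(packets: List[bytes], n_workers: int) -> Dict[int, List]:
    """Dispatch packets to n identical parser workers by flow hash."""
    queues = [queue.Queue() for _ in range(n_workers)]
    results: Dict[int, List] = {i: [] for i in range(n_workers)}

    def worker(idx: int) -> None:
        # Every worker runs the same parsing code: symmetric parallelism.
        while True:
            pkt = queues[idx].get()
            if pkt is None:  # sentinel: no more packets for this worker
                break
            results[idx].append(parse(pkt))  # each worker owns its own list

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    for pkt in packets:
        queues[flow_hash(pkt) % n_workers].put(pkt)
    for q in queues:
        q.put(None)
    for t in threads:
        t.join()
    return results
```

Hashing on the flow identity rather than round-robin dispatch is one common design choice here: it avoids reordering packets within a flow at the cost of possible load imbalance when a few flows dominate.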

[0022] The present invention proposes a parallel and scalable data processing method for network I/O virtualization, which includes the following steps:

[0023] Step 1: For I/O paravirtualization, as shown in Figure 1, the packet protocol parsing performed by the network card driver and the data processing flow of the software switch in the VMM are decomposed, includ...
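Once the software switch's processing flow has been decomposed into stages, one way to parallelize it asymmetrically is to run each stage on its own thread and link the stages with FIFO queues, so that different threads perform different work on successive packets. The sketch below is an assumption-laden illustration, not the method's actual stage split: the stages `validate`, `lookup`, and `emit`, the dictionary packet representation, and `MAC_TABLE` are all hypothetical.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional

Packet = Dict[str, object]
Stage = Callable[[Packet], Optional[Packet]]


def run_pipeline(stages: List[Stage], packets: List[Packet]) -> List[Packet]:
    """Run each stage on its own thread; adjacent stages share a FIFO queue."""
    qs: List[queue.Queue] = [queue.Queue() for _ in range(len(stages) + 1)]
    stop = object()  # shutdown sentinel passed down the pipeline

    def runner(i: int) -> None:
        while True:
            item = qs[i].get()
            if item is stop:
                qs[i + 1].put(stop)  # propagate shutdown downstream
                return
            out = stages[i](item)
            if out is not None:  # a stage drops a packet by returning None
                qs[i + 1].put(out)

    threads = [threading.Thread(target=runner, args=(i,)) for i in range(len(stages))]
    for t in threads:
        t.start()
    for p in packets:
        qs[0].put(p)
    qs[0].put(stop)

    results: List[Packet] = []
    while True:
        item = qs[-1].get()
        if item is stop:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results


# Hypothetical software-switch stages; names and table are illustrative only.
MAC_TABLE = {"aa:aa": 1, "bb:bb": 2}  # destination MAC -> virtual port


def validate(p: Packet) -> Optional[Packet]:
    return p if "dst" in p else None  # drop malformed packets


def lookup(p: Packet) -> Optional[Packet]:
    port = MAC_TABLE.get(p["dst"])
    return None if port is None else {**p, "port": port}  # drop unknown MACs


def emit(p: Packet) -> Optional[Packet]:
    return {**p, "tx": True}  # stand-in for delivery to the guest's virtual port
```

Because each stage here has exactly one thread and reads a single FIFO queue, packet order is preserved end to end; a heavier stage could be given more threads, which would make the pipeline more asymmetric but would then need extra care to keep per-flow ordering.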
