Interface operating method and system

An interface operation technology, applied in character and pattern recognition, input/output processing for data processing, and input/output for user-computer interaction. It addresses recognition accuracy and related issues, with the aim of eliminating accidental operations and improving recognition accuracy.

Examples

Embodiment 1

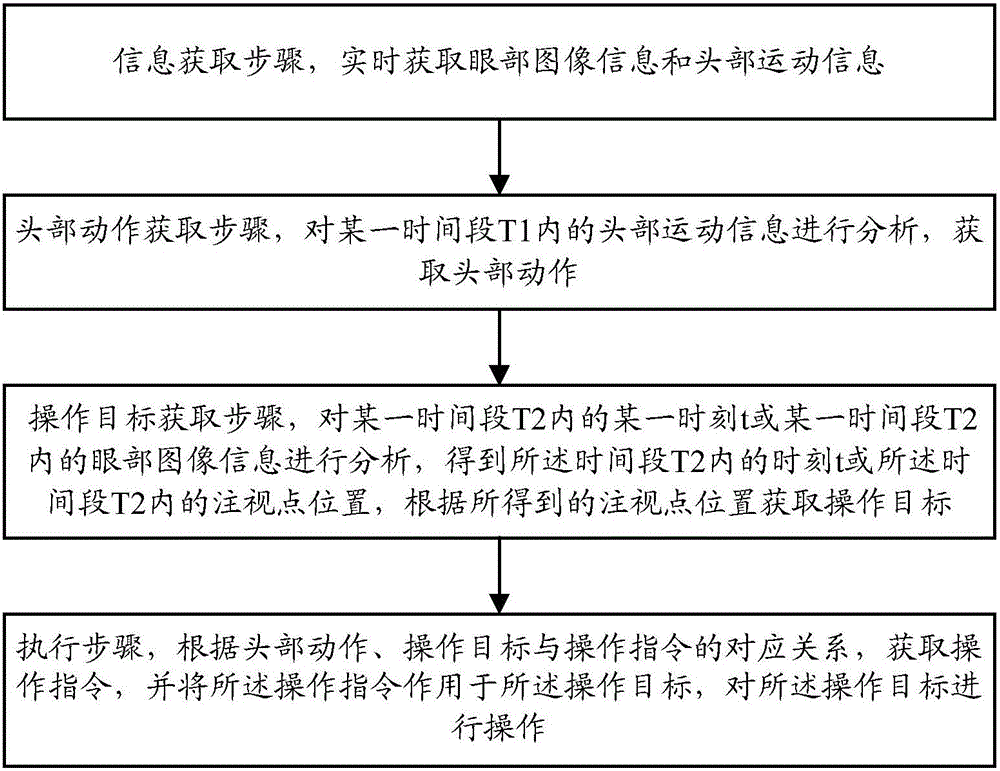

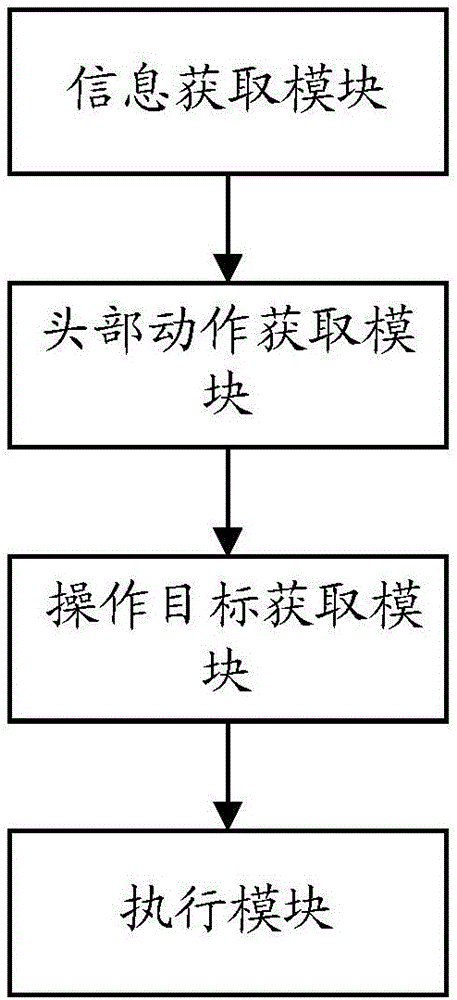

[0106] With reference to Figure 1, the present invention provides a method for interface operation, comprising the following steps:

[0107] (1) Information acquisition step: acquire eye image information and head motion information in real time;

[0108] According to the different sources of eye image information and head motion information, this step specifically distinguishes the following two situations:

[0109] Case 1: No head motion sensor, only image acquisition device

[0110] In this case, both the eye image information and the head motion information are obtained through the image acquisition device. The image acquisition device can be an infrared camera equipped with an infrared lamp and a related control circuit, used to collect the user's eye images and head movement information on a millisecond timescale.

[0111] Wherein, the eye image information is obtained by the following method: obtaining the eye image information through real-t...

Embodiment 2

[0151] This embodiment provides a method for performing interface operations by combining gaze points and head motions, applied to a head-mounted display device. Combining the gaze point with a nodding motion operates the gaze target; shaking the head once cancels (and returns).
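The interaction rule in this paragraph can be sketched as a small dispatch function. This is only an illustrative sketch, not the patent's implementation: the names `HeadMotion`, `Action`, and `dispatch` are hypothetical, and it assumes that a head shake cancels regardless of where the user is looking and that a nod with no gaze target does nothing.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class HeadMotion(Enum):
    """Hypothetical labels for the recognized head motions."""
    NONE = auto()
    NOD = auto()
    SHAKE = auto()


@dataclass
class Action:
    kind: str               # "activate", "cancel", or "none"
    target: Optional[str]   # identifier of the gazed-at element, if any


def dispatch(gaze_target: Optional[str], motion: HeadMotion) -> Action:
    """Map a (gaze target, head motion) pair to an interface action."""
    if motion is HeadMotion.SHAKE:
        # Assumption: a single head shake cancels (and returns),
        # independent of the current gaze target.
        return Action("cancel", None)
    if motion is HeadMotion.NOD and gaze_target is not None:
        # A nod operates the element the user is currently looking at.
        return Action("activate", gaze_target)
    # No recognized motion, or a nod without a gaze target: do nothing.
    return Action("none", None)
```

For example, `dispatch("ok_button", HeadMotion.NOD)` yields an `activate` action on `ok_button`, while any `HeadMotion.SHAKE` yields `cancel`. Requiring both a gaze target and a nod before activating is what keeps a stray glance or an unrelated head movement from triggering an operation.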

[0152] The specific method is as follows:

[0153] (1) Hardware modification on head-mounted display devices

[0154] Mainstream head-mounted display devices are equipped with head motion sensors, so head motion can be recognized directly by the device's original equipment, that is, its head motion sensor. The head motion sensor may be a gyroscope attached to the head, a head somatosensory patch, an infrared lidar base station, an ultrasonic locator, a laser locator, an electromagnetic tracker, or the like, used alone or in combination. In head-mounted display devices, implementing gaze recognit...

Embodiment 3

[0175] This embodiment operates the interface on a PC by combining the gaze point and head movement.

[0176] (1) Additional hardware required for a PC to use this system

[0177] Eye tracking equipment is required. It consists mainly of a camera, a control circuit, and/or an infrared light source, and can be connected to the computer through USB, a wireless link, or similar means.

[0178] (2) Real-time calculation of user viewpoints

[0179] This is basically the same as step (2) of the second embodiment.

[0180] (3) Monitor the user's head posture in real time, and determine whether head movement occurs

[0181] Monitor the user's head posture in real time, analyze the head feature information within the T1 time period, and obtain head posture change information; determine whether the head posture change information constitutes a head movement, where head movements include nodding and shaking the head left or right. The judgment met...
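The patent's own judgment method is cut off in this excerpt, so the following is not that method. It is a minimal, hypothetical sketch of one way to classify head posture changes within a window such as T1, assuming the head posture is available as (pitch, yaw) angles in degrees and that a fixed angular threshold separates deliberate movement from jitter. The function name `classify_head_motion` and the `threshold_deg` default are assumptions for illustration only.

```python
from typing import List, Tuple


def classify_head_motion(
    samples: List[Tuple[float, float]],
    threshold_deg: float = 10.0,  # assumed threshold, not from the patent
) -> str:
    """Classify (pitch, yaw) samples from one time window.

    Returns "nod", "shake", or "none".
    """
    if len(samples) < 2:
        return "none"

    pitches = [pitch for pitch, _ in samples]
    yaws = [yaw for _, yaw in samples]

    # Total angular excursion on each axis within the window.
    pitch_range = max(pitches) - min(pitches)
    yaw_range = max(yaws) - min(yaws)

    # Small excursions on both axes are treated as jitter, not a gesture.
    if pitch_range < threshold_deg and yaw_range < threshold_deg:
        return "none"

    # The dominant axis decides: pitch for a nod, yaw for a shake.
    return "nod" if pitch_range >= yaw_range else "shake"
```

A window whose pitch swings by 15 degrees while yaw stays nearly flat would be labeled `nod`; a window dominated by left-right yaw would be labeled `shake`. A real system would likely also check the direction and timing of the movement, which this sketch omits.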
