A Simultaneous Visual Servo and Adaptive Depth Recognition Method for Mobile Robots

The method applies visual servo technology to mobile robots and belongs to the field of two-dimensional position/course control. It addresses the increased system complexity and cost of existing approaches, resolves the problem of global stability of the system, and achieves good perception.



Embodiment 1

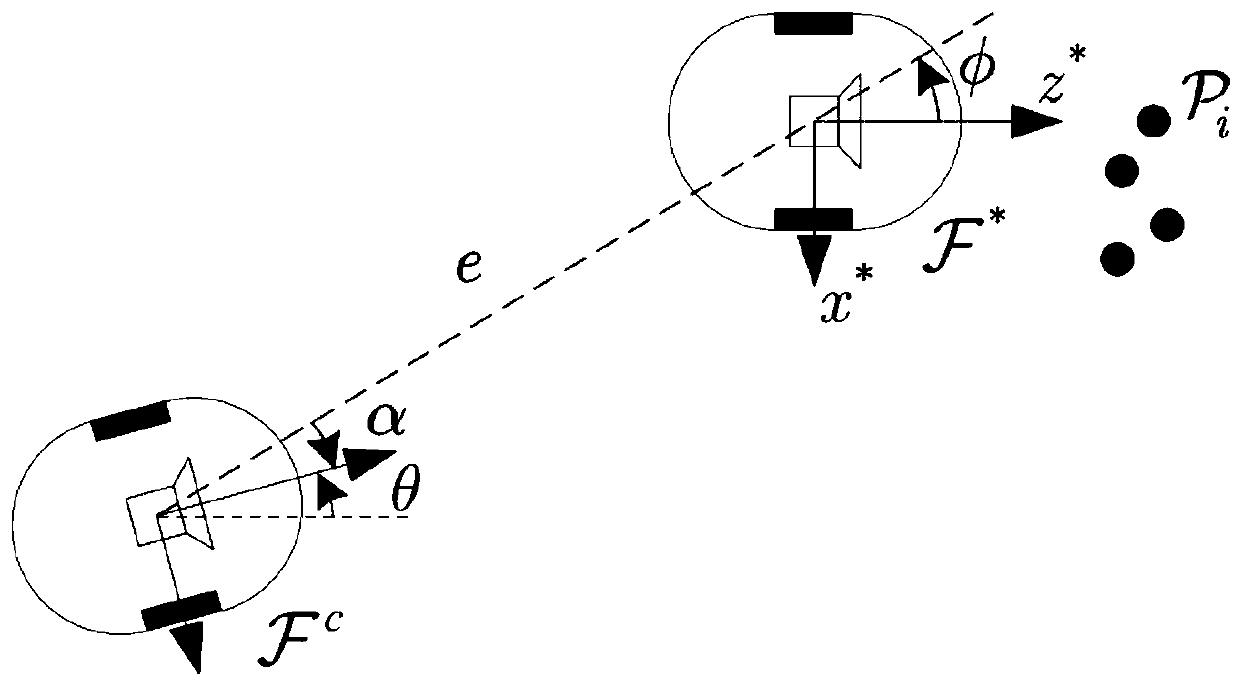

[0073] First, define the system coordinate system

[0074] Section 1.1, System Coordinate System Description

[0075] The coordinate system of the on-board camera is defined to coincide with that of the mobile robot. One Cartesian coordinate system represents the desired pose of the robot/camera. Its origin is at the center of the wheel axis, which is also the optical center of the camera. The z* axis coincides with the optical axis of the camera lens and with the forward direction of the robot; the x* axis is parallel to the wheel axis of the robot; the y* axis is perpendicular to the x*z* plane (the motion plane of the mobile robot). A second coordinate system represents the current pose of the camera/robot.
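The relationship between the two coordinate systems can be illustrated with a minimal sketch. It assumes that the current pose is described by a planar triple `(tx, tz, theta)`: the position of the current origin in the desired coordinate system and a rotation about the y axis. The function name, the pose representation, and the sign convention of the rotation are illustrative assumptions and are not taken from the patent text.

```python
import math


def current_to_desired(point_xz, pose):
    """Map a point (x, z) given in the current frame into the desired frame.

    pose = (tx, tz, theta) is an assumed representation: the position of the
    current-frame origin in the desired frame and the rotation about the
    y axis in radians. The sign convention is an assumption.
    """
    x, z = point_xz
    tx, tz, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    # Rotation about y restricted to the x-z motion plane, then translation.
    return (c * x + s * z + tx, -s * x + c * z + tz)
```

With `theta = 0` and zero translation the two coordinate systems coincide and the function returns the point unchanged, which corresponds to the robot having reached the desired pose.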

[0076] Let e(t) denote the distance between the desired position and the current position; θ(t) denotes the rotation angle of the robot relative to the desired coordinate system; α(t) represents the current pose of the robot and the ...

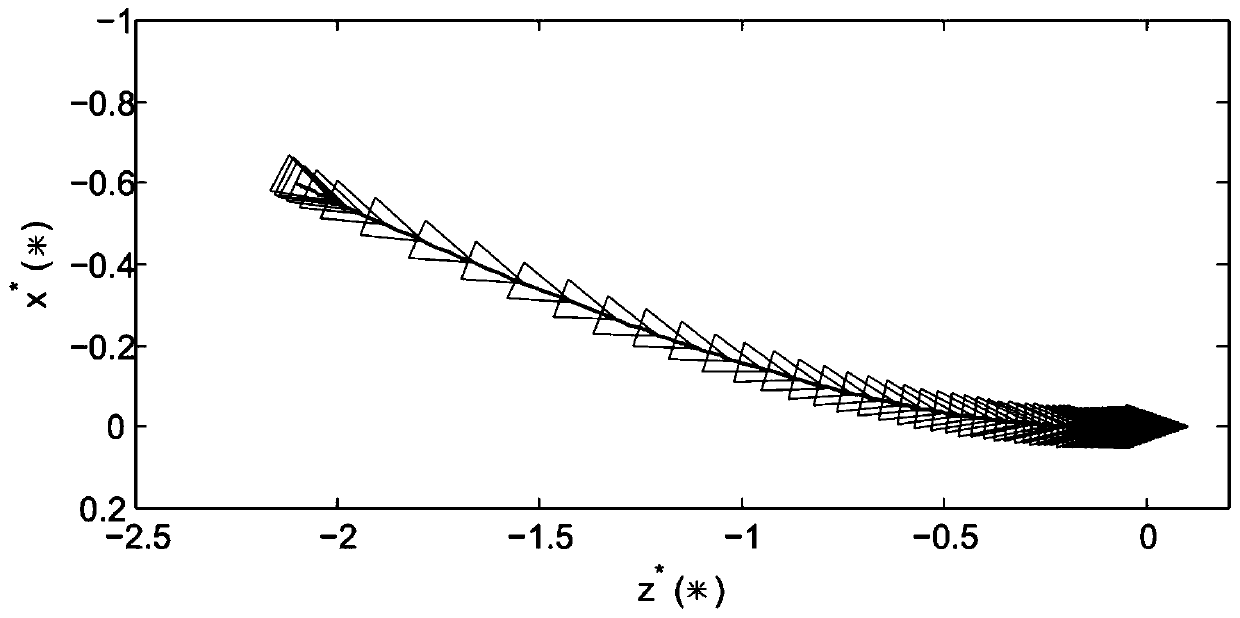

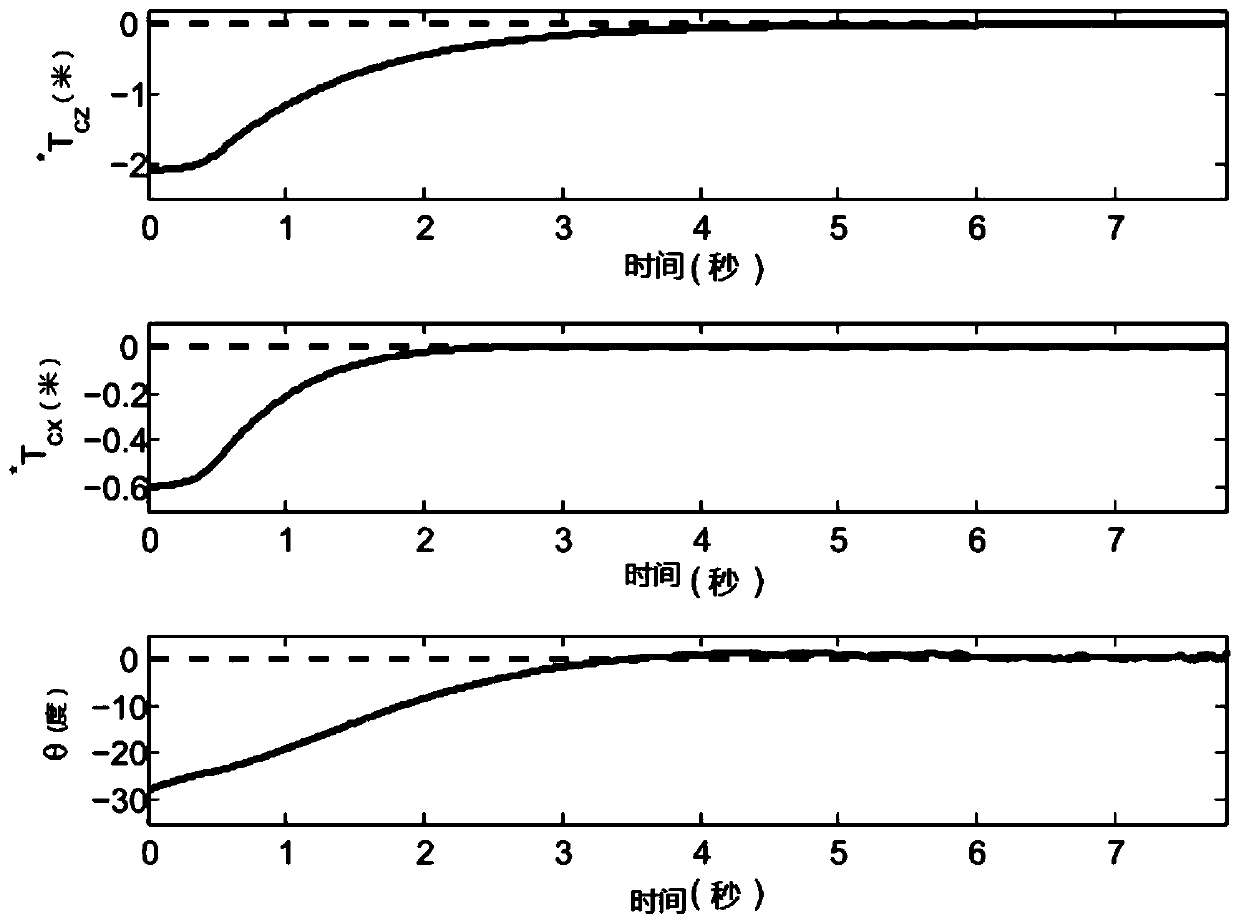
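As a rough illustration of the quantities e(t) and θ(t) defined above, the following sketch computes them from a planar pose. The inputs `tx`, `tz` (position of the current origin in the desired coordinate system) and `theta` (rotation about the y axis, in radians) are assumed names, and wrapping the angle to (-π, π] is a common convention rather than something the text states. α(t) is left out because its definition is cut off in the text.

```python
import math


def pose_error(tx, tz, theta):
    """Return (e, theta_wrapped) for an assumed planar pose (tx, tz, theta)."""
    # e: Euclidean distance between the desired and current positions
    # in the x-z motion plane.
    e = math.hypot(tx, tz)
    # Wrap the rotation angle to (-pi, pi] so that equivalent headings
    # give the same error value.
    theta_wrapped = math.atan2(math.sin(theta), math.cos(theta))
    return e, theta_wrapped
```

Both values are zero exactly when the current coordinate system coincides with the desired one, which is the condition a servo controller of this kind aims to reach.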

[0077] arrive T...
