A Prediction Method of Target Capture Point Based on Online Confidence Discrimination

A prediction method based on confidence discrimination, applied in the fields of image processing and computer vision. It addresses problems such as the inability to effectively improve or guarantee the capture success rate and the increased difficulty of capture, and achieves real-time capture, ease of implementation, and favorable capture conditions.


Embodiment Construction

[0036] The present invention is further described below with reference to an embodiment and the accompanying drawings:

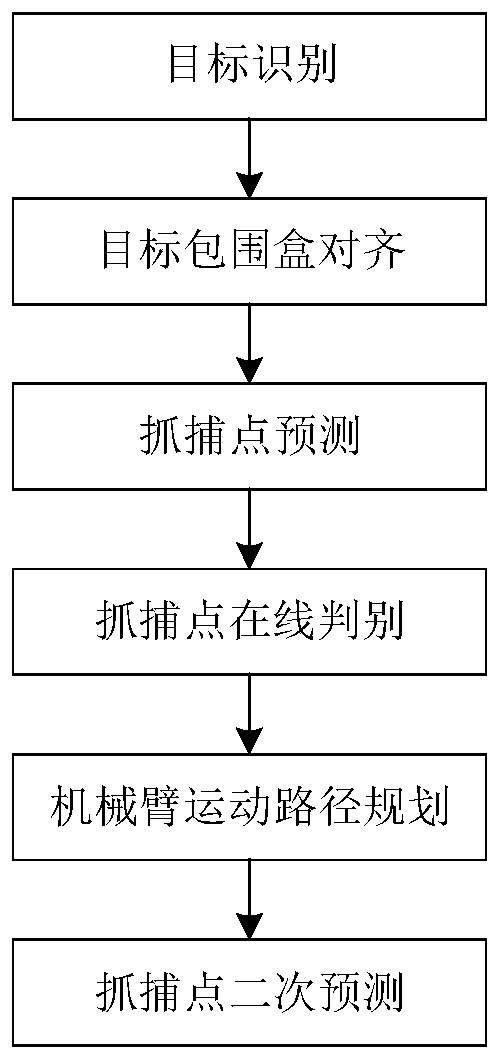

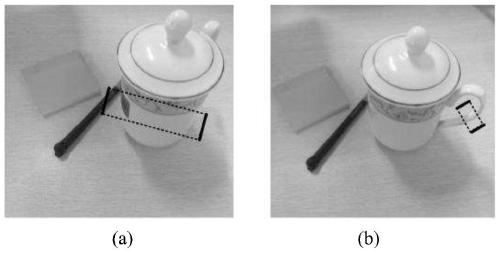

[0037] The present invention is a target capture point prediction method based on online discrimination. It consists of six parts: target recognition, target bounding box alignment, capture point prediction, online capture point discrimination, robotic arm motion path planning, and secondary capture point prediction.

[0038] The method specifically includes the following steps:

[0039] 1. Target recognition

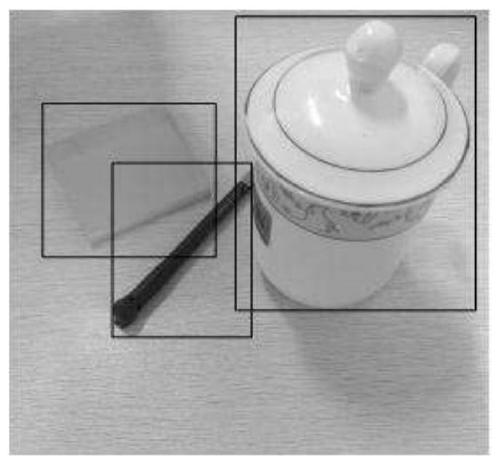

[0040] To avoid the low time efficiency of traditional algorithms based on sliding-window search, a target recognition method based on object-like (objectness) sampling is adopted. First, the real-time image from the hand-eye camera is acquired. For the current image, the EdgeBoxes method [1] is used to predict all regions that may contain objects.
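As a hedged illustration of what ranking object proposals involves, the sketch below scores candidate boxes in the spirit of EdgeBoxes [1]: a box scores highly when many edges lie inside it, after subtracting edges in its central region and normalising by its perimeter raised to an exponent (1.5 in [1]). It is a simplification, not the patent's or [1]'s implementation. The edge-magnitude map is assumed to be given (e.g. from a structured edge detector), edge-group affinities are omitted so each pixel counts independently, boxes are scored by brute force rather than with integral images, and the names `edgebox_score` and `top_proposals` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical types for this sketch: an edge-magnitude map indexed [row][col]
# and a candidate box given as (x, y, width, height) in pixels.
EdgeMap = List[List[float]]
Box = Tuple[int, int, int, int]

KAPPA = 1.5  # perimeter exponent from [1]; offsets the bias toward large boxes


def box_sum(mag: EdgeMap, x: int, y: int, w: int, h: int) -> float:
    """Sum of edge magnitudes over columns [x, x+w) and rows [y, y+h)."""
    return sum(mag[r][c] for r in range(y, y + h) for c in range(x, x + w))


def edgebox_score(mag: EdgeMap, box: Box) -> float:
    """Simplified EdgeBoxes-style objectness score for one box.

    Edges inside the box count toward the score, edges in the central
    half-size sub-box are subtracted, and the result is normalised by the
    box perimeter raised to KAPPA. Unlike [1], each pixel is treated
    independently: edge groups that straddle the box boundary are NOT removed.
    """
    x, y, w, h = box
    if x < 0 or y < 0 or w <= 0 or h <= 0 or y + h > len(mag) or x + w > len(mag[0]):
        raise ValueError(f"box {box} lies outside the edge map")
    inner_w, inner_h = w // 2, h // 2
    inner_x, inner_y = x + (w - inner_w) // 2, y + (h - inner_h) // 2
    inside = box_sum(mag, x, y, w, h)
    center = box_sum(mag, inner_x, inner_y, inner_w, inner_h)
    return (inside - center) / (2 * (w + h)) ** KAPPA


def top_proposals(mag: EdgeMap, candidates: List[Box], k: int) -> List[Box]:
    """Return the k highest-scoring candidate boxes, best first."""
    return sorted(candidates, key=lambda b: edgebox_score(mag, b), reverse=True)[:k]


if __name__ == "__main__":
    # Toy 10x10 edge map: a square outline spanning rows/columns 2..7.
    mag = [[0.0] * 10 for _ in range(10)]
    for i in range(2, 8):
        mag[2][i] = mag[7][i] = mag[i][2] = mag[i][7] = 1.0
    boxes = [(0, 0, 4, 4), (2, 2, 6, 6), (0, 0, 10, 10), (3, 3, 4, 4)]
    print(top_proposals(mag, boxes, 2))  # prints [(2, 2, 6, 6), (0, 0, 10, 10)]
```

In the toy example the box that tightly encloses the square outline ranks first. The full-image box also contains every edge, but it is penalised both by its longer perimeter and by the edges that fall inside its central region.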

[0041] [1] C. L. Zitnick and P. Dollár, "Edge boxes: Locating ...
