Face feature point coding and decoding method, device and system

An encoding and decoding technology for face feature points, applied in the field of face feature point encoding and decoding methods, devices and systems. It addresses problems such as the slow change of the model parameter vector in the time domain, and it reduces the data volume.


Examples

Embodiment 1

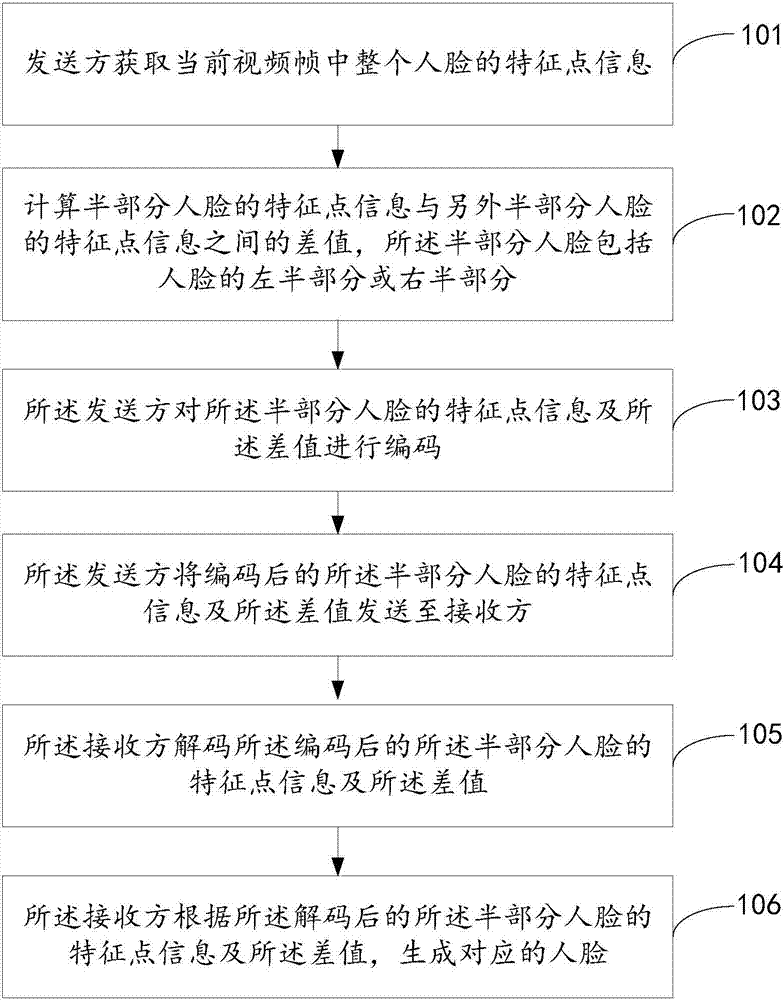

[0097] This embodiment of the present invention provides a method for encoding and decoding human face feature points. As shown in Figure 1, the method includes:

[0098] 101. The sender acquires feature point information of the entire face in the current video frame.

[0099] The feature point information describes at least one of the outline, eyebrows, eyes, nose and mouth of the human face.

[0100] 102. Calculate the difference between the feature point information of one half of the face and the feature point information of the other half, where the half of the face is either the left half or the right half.

[0101] Specifically, for each item of feature point information in one half of the face, the difference from the corresponding feature point information in the other half is calculated.

[0102] 103. The sender encodes the feature point information of that half of the face together with the differences.

[0103] 104. The ...
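Steps 102 and 103 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: feature points are taken to be (x, y) pixel coordinates, `pairs` is a hypothetical mapping from each point of the encoded half to its counterpart on the other half, and each difference is computed on raw coordinates as "other minus half" (the source does not state the sign convention or whether coordinates are mirrored before subtraction). Because step 104 is truncated in this excerpt, `rebuild_other_half` is only an illustrative inverse showing that the half-face points plus the differences are enough to recover the other half; it is not the patent's receiver step. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def split_half_and_differences(
    points: Sequence[Point],
    pairs: Sequence[Tuple[int, int]],
) -> Tuple[List[Point], List[Point]]:
    """Return (half_points, differences) for the chosen half of the face.

    `pairs` is a hypothetical list of (half_index, other_half_index) tuples
    mapping each feature point of the chosen half (e.g. the left half) to its
    counterpart in the other half. Each difference is other - half, taken
    coordinate by coordinate on the raw coordinates (assumed convention).
    """
    half_points: List[Point] = []
    differences: List[Point] = []
    for half_idx, other_idx in pairs:
        hx, hy = points[half_idx]
        ox, oy = points[other_idx]
        half_points.append((hx, hy))
        differences.append((ox - hx, oy - hy))
    return half_points, differences


def rebuild_other_half(
    half_points: Sequence[Point],
    differences: Sequence[Point],
) -> List[Point]:
    """Illustrative inverse: other-half point = half point + difference."""
    if len(half_points) != len(differences):
        raise ValueError("each half-face point needs exactly one difference")
    return [(hx + dx, hy + dy) for (hx, hy), (dx, dy) in zip(half_points, differences)]


if __name__ == "__main__":
    # Hypothetical left/right eye-corner pair in pixel coordinates.
    pts = [(30.0, 40.0), (70.0, 41.0)]
    half, diffs = split_half_and_differences(pts, pairs=[(0, 1)])
    print(half, diffs)                      # [(30.0, 40.0)] [(40.0, 1.0)]
    print(rebuild_other_half(half, diffs))  # [(70.0, 41.0)]
```

Feature points lying on the facial midline, which have no counterpart in the other half, are not handled by this sketch.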

Embodiment 2

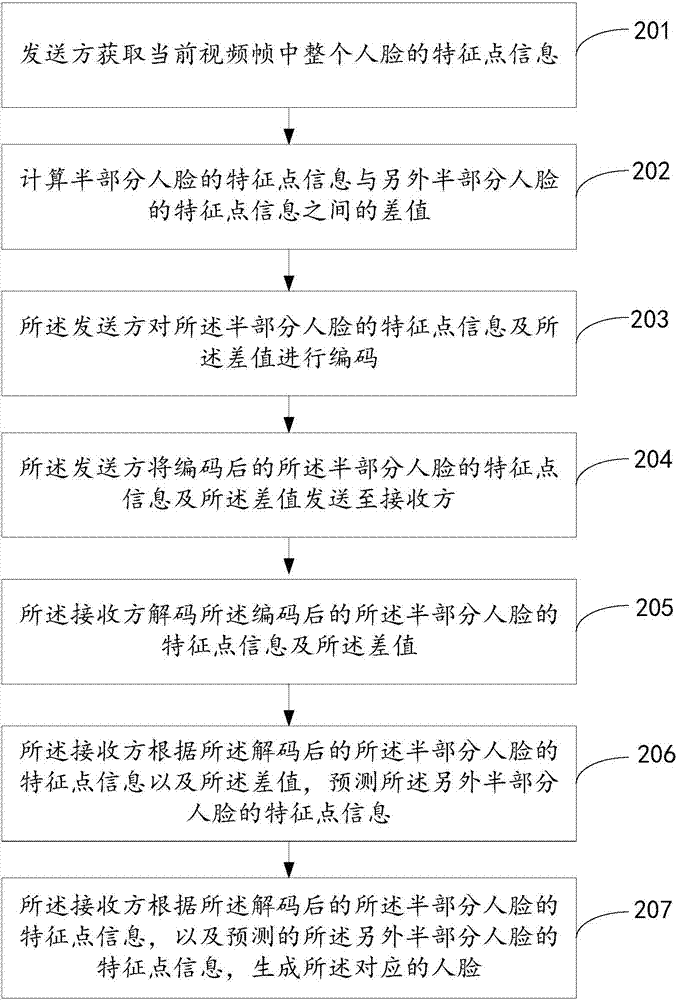

[0110] This embodiment of the present invention provides a method for encoding and decoding human face feature points. As shown in Figure 2, the method includes:

[0111] 201. The sender acquires feature point information of the entire face in the current video frame.

[0112] The feature point information describes at least one of the outline, eyebrows, eyes, nose and mouth of the human face.

[0113] This method is executed by the sender's electronic device.

[0114] The entire human face may include the outline, eyebrows, eyes, nose and mouth of the entire face; or the outline of the entire face, the eyes and the mouth; or the outline of the entire face, the eyes, the nose and the mouth. It may also consist of only the eyes and mouth, or of the eyes, nose and mouth. The entire face may also take other forms, and the embodiment of the present invention does not limit the specific face. The acquired real face of the user may also be a face in wh...
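The region combinations listed in paragraph [0114] suggest a simple per-frame container for feature point information. The sketch below is a hypothetical illustration, not a structure defined by the source: region names are taken from the description, points are assumed to be (x, y) pixel coordinates, and `FaceFeaturePoints`, `KNOWN_REGIONS` and `EXAMPLE_REGION_SETS` are invented names. Because the source says other combinations are also possible, the listed combinations are kept for reference only and are not enforced.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Tuple

Point = Tuple[float, float]

# Region names from the description; the identifiers themselves are hypothetical.
KNOWN_REGIONS = ("outline", "eyebrows", "eyes", "nose", "mouth")

# Combinations that paragraph [0114] lists as possible "entire face" contents.
# Other combinations are allowed, so this tuple is informational only.
EXAMPLE_REGION_SETS: Tuple[FrozenSet[str], ...] = (
    frozenset({"outline", "eyebrows", "eyes", "nose", "mouth"}),
    frozenset({"outline", "eyes", "mouth"}),
    frozenset({"outline", "eyes", "nose", "mouth"}),
    frozenset({"eyes", "mouth"}),
    frozenset({"eyes", "nose", "mouth"}),
)


@dataclass
class FaceFeaturePoints:
    """Feature point information for the entire face in one video frame (illustrative)."""

    frame_index: int
    regions: Dict[str, List[Point]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        unknown = set(self.regions) - set(KNOWN_REGIONS)
        if unknown:
            raise ValueError(f"unknown regions: {sorted(unknown)}")
        # The feature point information must describe at least one region.
        if not any(self.regions.values()):
            raise ValueError("at least one region must contain feature points")

    def region_set(self) -> FrozenSet[str]:
        """Names of the regions that actually carry feature points."""
        return frozenset(name for name, pts in self.regions.items() if pts)

    def all_points(self) -> List[Point]:
        """Flatten regions in a fixed order so both ends index points identically."""
        return [p for name in KNOWN_REGIONS for p in self.regions.get(name, [])]
```

Flattening in a fixed region order is one way, assumed here, to let the sender and receiver agree on point indices without transmitting region labels.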

Embodiment 3

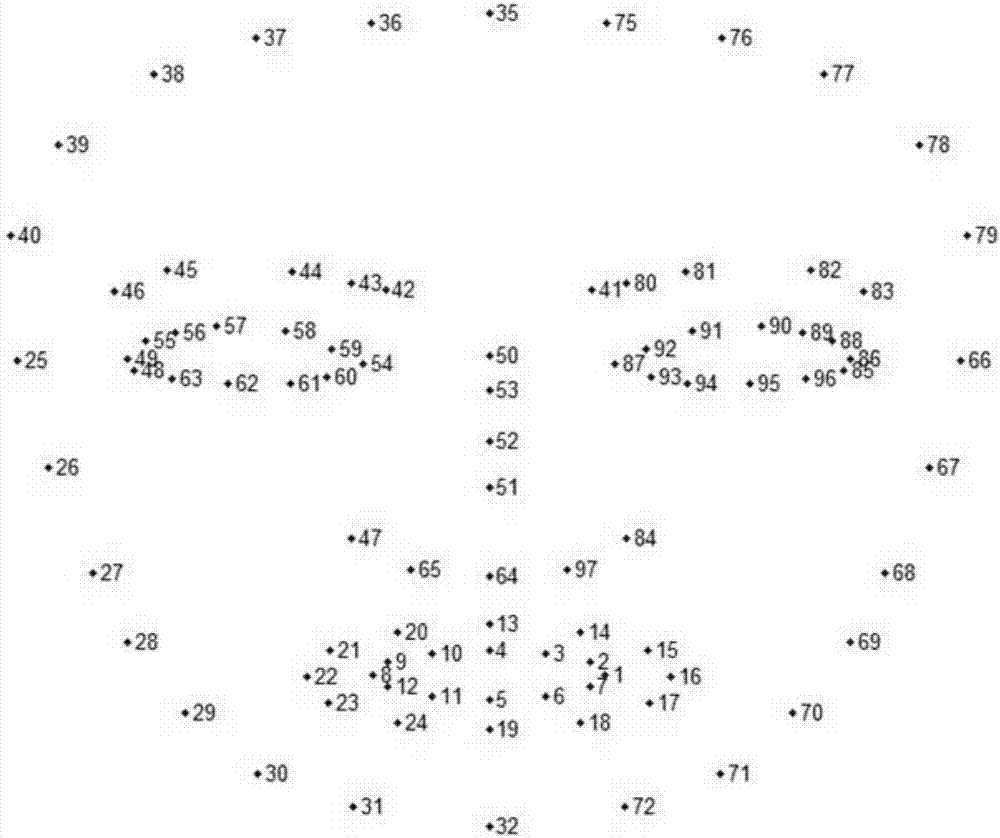

[0144] This embodiment of the present invention provides a method for encoding human face feature points. As shown in Figure 3, the method includes:

[0145] 301. Acquire feature point information of the entire human face in the current video frame.

[0146] The feature point information describes at least one of the outline, eyebrows, eyes, nose and mouth of the human face.

[0147] Specifically, this step is similar to step 201 in Embodiment 2 and is not repeated here. The feature point information of the entire face in the current video frame may be acquired by the sender's electronic device, by the receiver's electronic device, or by another electronic device; this embodiment of the present invention does not limit this.

[0148] 302. Calculate the difference between the feature point information of one half of the face and the feature point information of the other half of the face.

...
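The encoding that follows step 302 is elided in this excerpt, so the byte layout below is entirely an assumption made for illustration: coordinates are quantized with a hypothetical step of 0.5 pixels and packed as little-endian 16-bit integers after a point count. `encode_half_face` and `STEP` are hypothetical names. A fixed-width layout like this carries as many numbers as encoding every point directly, so it does not by itself reduce the data volume; any saving would depend on how the differences are further compressed, which this excerpt does not describe.

```python
import struct
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical quantization step in pixels; the source does not specify one.
STEP = 0.5


def encode_half_face(half_points: Sequence[Point], differences: Sequence[Point]) -> bytes:
    """Pack half-face points and their differences into a hypothetical byte layout.

    Assumed layout, little-endian:
      uint16 n, then 2*n int16 values for the half-face (x, y) pairs,
      then 2*n int16 values for the difference (dx, dy) pairs.
    Each coordinate is stored as round(value / STEP).
    """
    if len(half_points) != len(differences):
        raise ValueError("each half-face point needs exactly one difference")
    n = len(half_points)
    values = [round(c / STEP) for pt in (*half_points, *differences) for c in pt]
    # struct.error is raised if n exceeds uint16 or a value falls outside int16.
    return struct.pack(f"<H{4 * n}h", n, *values)


if __name__ == "__main__":
    payload = encode_half_face([(30.0, 40.0)], [(40.0, 1.0)])
    print(len(payload))                       # 10
    print(struct.unpack("<H4h", payload))     # (1, 60, 80, 80, 2)
```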
