Specific modal semantic space modeling-based cross-modal similarity learning method

Embodiment Construction

[0021] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

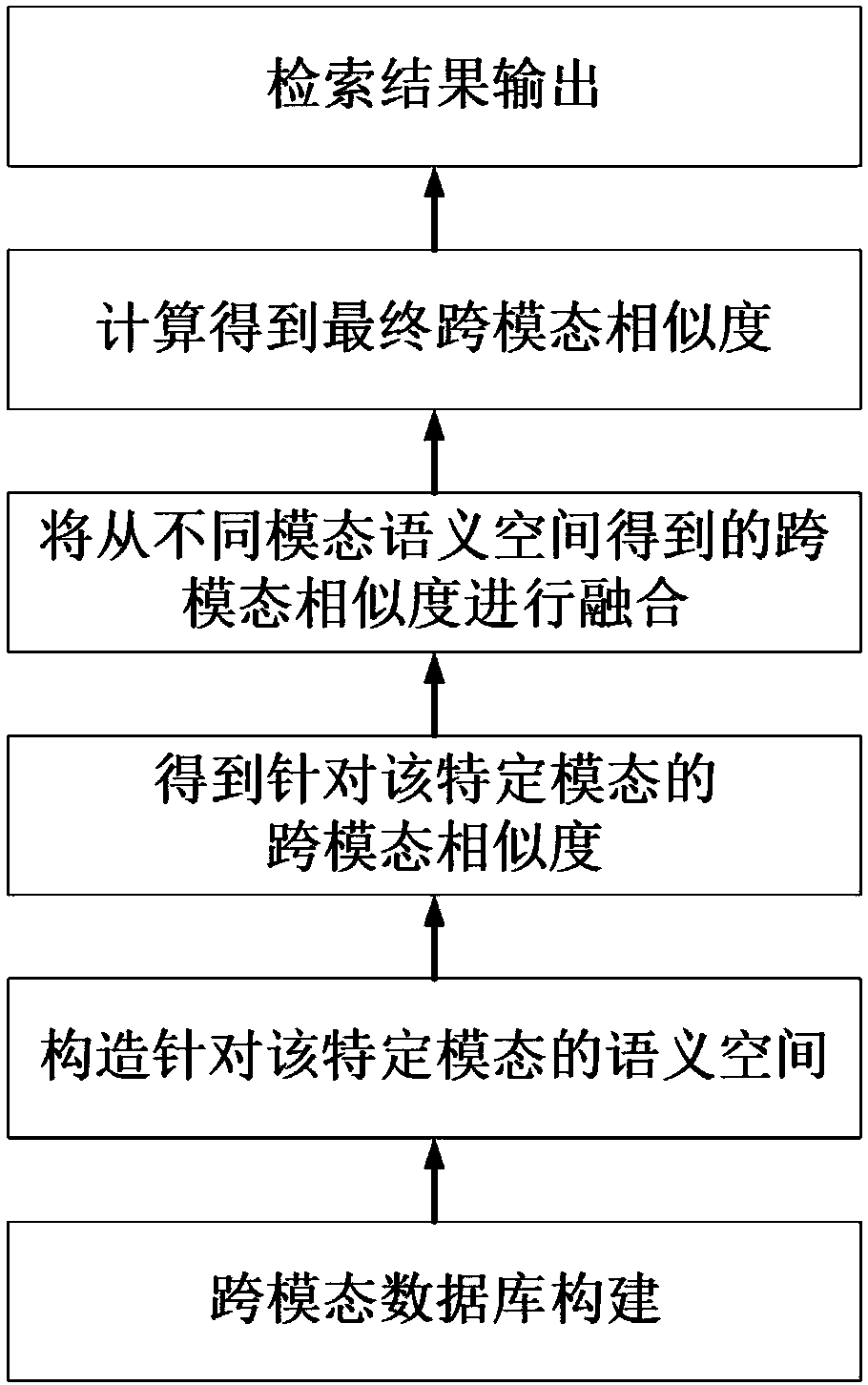

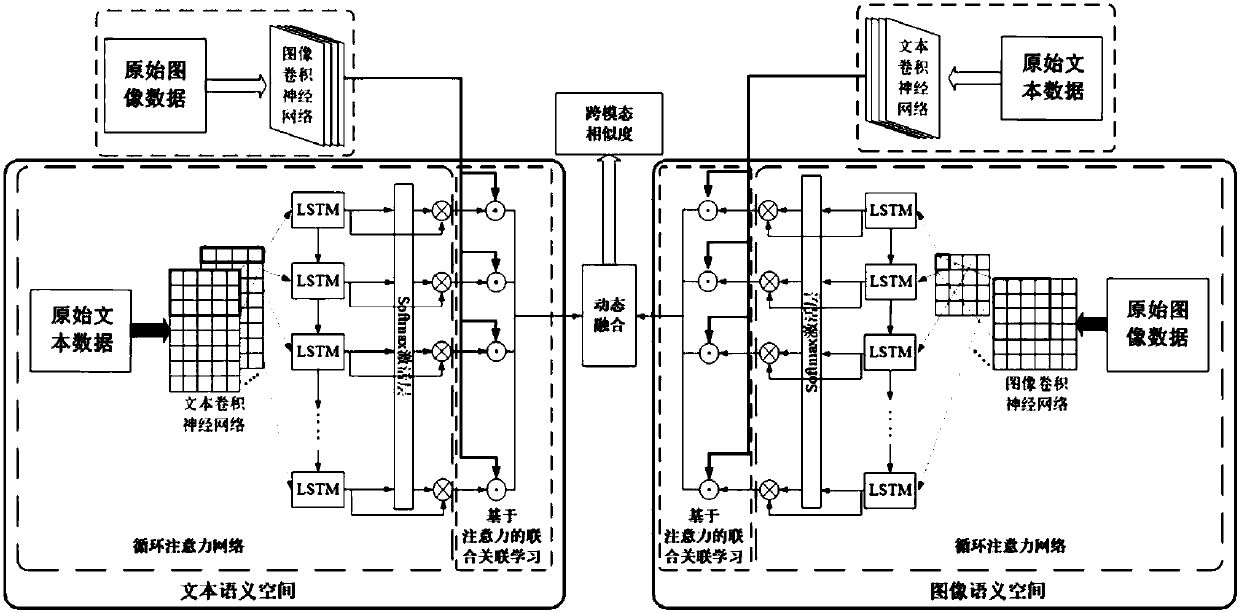

[0022] The cross-modal similarity learning method based on specific-modality semantic space modeling of the present invention follows the process shown in Figure 1 and includes the following steps:

[0023] (1) Establish a cross-modal database containing data of multiple modality types, and divide the data in the database into a training set, a test set, and a validation set.
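Step (1) can be sketched in Python as follows. This is a minimal illustration, not the patented procedure: it assumes the database is held as a dict keyed by modality, with index-aligned items (item k of every modality describes the same sample). The 80/10/10 ratios, the fixed seed, and the names `split_indices` and `split_database` are hypothetical; the source does not state how the split is made.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple


def split_indices(
    n: int,
    ratios: Tuple[float, float, float] = (0.8, 0.1, 0.1),  # hypothetical train/test/validation ratios
    seed: int = 0,
) -> Dict[str, List[int]]:
    """Shuffle the indices 0..n-1 and cut them into training, test and validation parts."""
    if not math.isclose(sum(ratios), 1.0):
        raise ValueError("ratios must sum to 1")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seeded so the split is reproducible
    n_train = int(n * ratios[0])
    n_test = int(n * ratios[1])
    return {
        "train": idx[:n_train],
        "test": idx[n_train:n_train + n_test],
        "validation": idx[n_train + n_test:],  # the remainder goes to validation
    }


def split_database(
    db: Dict[str, Sequence],
    ratios: Tuple[float, float, float] = (0.8, 0.1, 0.1),
    seed: int = 0,
) -> Dict[str, Dict[str, list]]:
    """Apply one index split to every modality so aligned items land in the same subset.

    Assumes item k of each modality refers to the same sample (an assumption,
    not stated in the source).
    """
    lengths = {len(items) for items in db.values()}
    if len(lengths) != 1:
        raise ValueError("all modalities must hold the same number of aligned items")
    parts = split_indices(lengths.pop(), ratios, seed)
    return {
        subset: {r: [items[k] for k in ids] for r, items in db.items()}
        for subset, ids in parts.items()
    }
```

Reusing one shuffled index list for all modalities keeps an image and its paired text in the same subset; if the modalities in a real database are not paired, each one could instead be split independently with `split_indices`.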

[0024] In this embodiment, the cross-modal database may contain multiple modality types, including images and text.

[0025] Let $D$ denote the cross-modal dataset, $D=\{D^{(i)}, D^{(t)}\}$.

[0026] For media type $r$, where $r \in \{i, t\}$ ($i$ denotes image and $t$ denotes text), let $n^{(r)}$ denote the number of data items. Each data item in the training set belongs to one and only one semantic category.
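The notation above can be mirrored in a small container, offered only as a sketch. `MediaData`, `CrossModalDataset`, `item_counts`, and the use of plain Python lists for feature vectors are hypothetical choices; the source does not describe a data layout. Storing a single integer per item is one way to encode the rule that each training item has exactly one semantic category.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MediaData:
    """Hypothetical container for D^(r), the data of one media type r."""

    features: List[List[float]]  # one feature vector per data item
    labels: List[int]  # one semantic category per data item

    def __post_init__(self) -> None:
        # "One and only one semantic category": exactly one integer label per item.
        if len(self.features) != len(self.labels):
            raise ValueError("each data item needs exactly one category label")
        if not all(isinstance(c, int) and not isinstance(c, bool) for c in self.labels):
            raise TypeError("each category label must be a single integer")

    @property
    def n(self) -> int:
        """n^(r): the number of data items of this media type."""
        return len(self.features)


# D = {D^(i), D^(t)}, keyed by media type r ("i" for image, "t" for text).
CrossModalDataset = Dict[str, MediaData]


def item_counts(dataset: CrossModalDataset) -> Dict[str, int]:
    """Return n^(r) for every media type r in the dataset."""
    return {r: data.n for r, data in dataset.items()}
```

For example, a dataset whose image part holds three items and whose text part holds two gives `item_counts(D) == {"i": 3, "t": 2}`; the two media types need not have the same number of items.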

[0027] Define the feature vector of the $p$-th data item of media type $r$; its repres...
