Neural network training method and three-dimensional gesture posture estimation method

A neural network training and gesture estimation technique, applied in the fields of computer vision and deep learning, that addresses problems such as large detection errors and the limitations of existing gesture detection. It achieves good training results and reduces the influence of illumination changes and object occlusion.


Embodiment Construction

[0037] The present invention will be further described below with reference to the accompanying drawings and in combination with preferred embodiments.

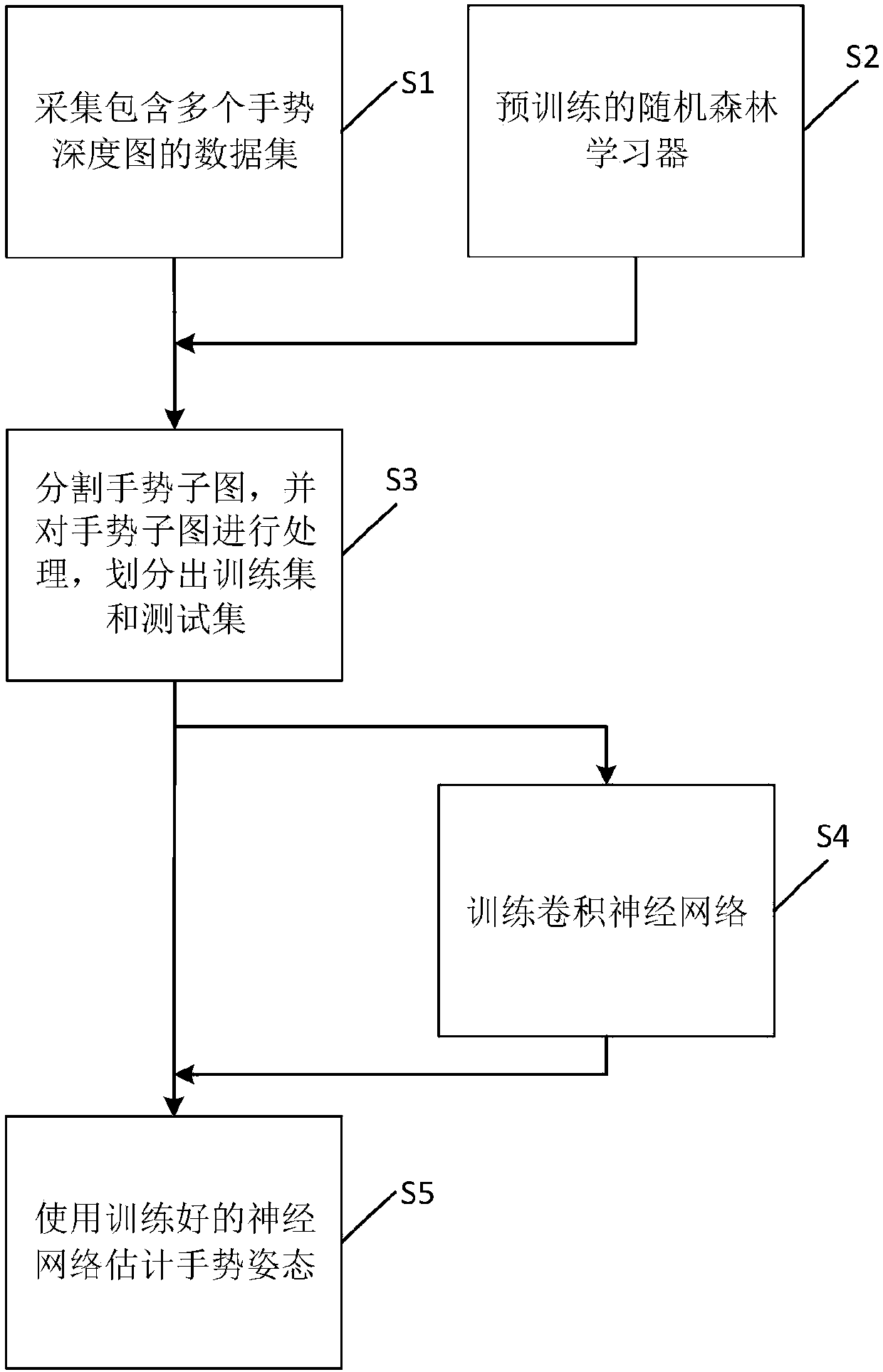

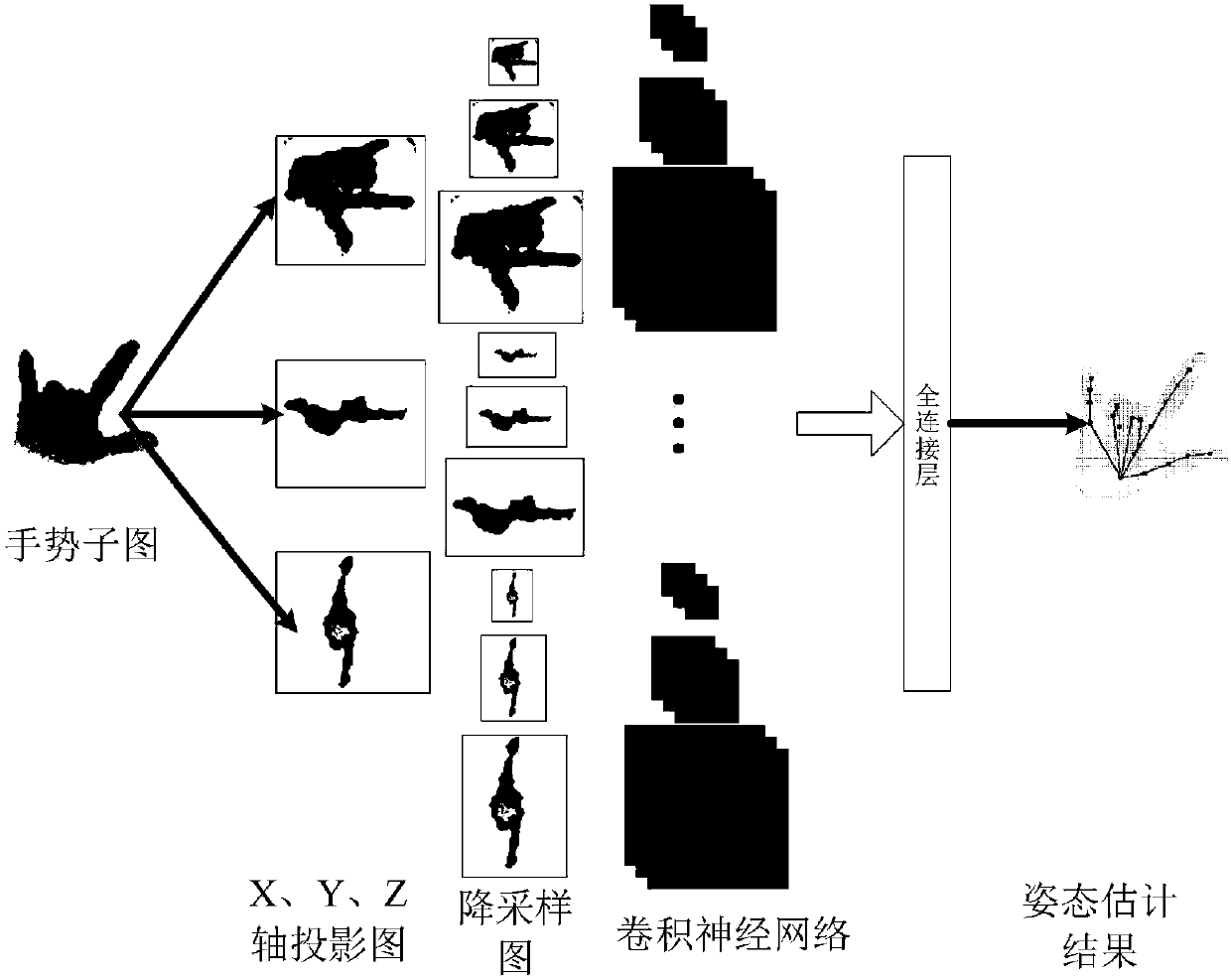

[0038] As shown in Figure 1, the three-dimensional gesture estimation method of the preferred embodiment of the present invention includes the following steps:

[0039] S1: Collect a data set of gesture depth maps, which specifically includes the following steps:

[0040] S11: Use multiple depth cameras to collect gesture depth pictures of different people. For each gesture of each person, collect multiple pictures covering many different angles and variations of the gesture, and organize the collected pictures into a picture library;

[0041] S12: Label each picture in the picture library. The human hand skeleton contains multiple joint points, and each joint point has a certain degree of freedom. In order to accurately locate the position and posture of the gesture joint points, in this embodiment, mark the ...
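Steps S11 and S12 can be illustrated with a minimal Python sketch of a picture library. It is not the implementation described in the patent. The names `DepthPicture`, `PictureLibrary`, `camera_id`, and `joints` are hypothetical. Because the source text is truncated before it states what is marked on each picture, the label layout used here (a list of `(x, y, z)` joint positions) is purely an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Assumed label layout: one (x, y, z) tuple per joint point (hypothetical).
Joint = Tuple[float, float, float]


@dataclass
class DepthPicture:
    """One gesture depth picture captured in S11 (field names are hypothetical)."""
    person_id: str
    gesture: str
    camera_id: str                        # which depth camera (viewing angle) took it
    path: str                             # location of the depth image file
    joints: Optional[List[Joint]] = None  # stays None until labeled in S12


class PictureLibrary:
    """Groups the collected pictures by (person, gesture)."""

    def __init__(self) -> None:
        self._by_key: Dict[Tuple[str, str], List[DepthPicture]] = defaultdict(list)

    def add(self, picture: DepthPicture) -> None:
        """S11: file a collected picture under its person and gesture."""
        self._by_key[(picture.person_id, picture.gesture)].append(picture)

    def pictures(self, person_id: str, gesture: str) -> List[DepthPicture]:
        """Return all pictures of one gesture by one person (all cameras)."""
        return list(self._by_key.get((person_id, gesture), []))

    def unlabeled(self) -> List[DepthPicture]:
        """Return the pictures that still need labeling in S12."""
        return [p for pics in self._by_key.values() for p in pics if p.joints is None]

    def label(self, picture: DepthPicture, joints: List[Joint]) -> None:
        """S12: attach joint annotations to a picture (assumed format)."""
        picture.joints = list(joints)
```

Keying the library by person and gesture keeps all camera angles of the same gesture together, so the labeling pass in S12 can work through `unlabeled()` until it returns an empty list.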
