Real person verification method via videos

A video-based real-person verification technology, applied in the field of real-person verification, addresses problems such as long verification time, the application limitations of face identity authentication systems, and forged faces, and improves the authenticity of verification.

Examples

Embodiment 1

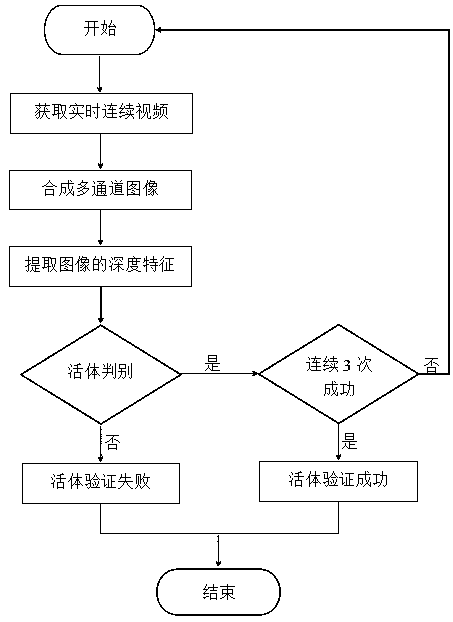

[0032] A method of real-person verification via video, as shown in Figure 1, mainly includes the following steps:

[0033] Step A1: Collect continuous video, convert the continuous video frames in the video stream into multiple single-channel images, and then combine the multiple single-channel images into one multi-channel image;

[0034] Step A2: Input the three-channel image synthesized in Step A1 into the deep learning training model and extract deep features;

[0035] Step A3: Use the living body judgment method to judge whether the person in the current image is alive, and output the result.

[0036] In the present invention, the living body verification succeeds if detection succeeds 3 consecutive times; otherwise, the living body verification fails.
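The decision rule in [0036] can be sketched as below. This is a minimal illustration under one reading of the text: detection is run up to three times and any single failure fails the verification. The names `verify_liveness` and `detect_once` are hypothetical and do not appear in the patent; `detect_once` stands in for steps A1 to A3 applied to one captured clip.

```python
from typing import Callable

# From the text: 3 consecutive successful detections are required.
REQUIRED_CONSECUTIVE = 3


def verify_liveness(detect_once: Callable[[], bool],
                    required: int = REQUIRED_CONSECUTIVE) -> bool:
    """Return True only if `detect_once` succeeds `required` times in a row.

    Assumption: a single failed detection ends the attempt and the
    verification fails; the patent text does not say whether retries
    are allowed after a failure.
    """
    for _ in range(required):
        if not detect_once():
            return False  # one failure breaks the consecutive run
    return True
```

A caller would pass a function that captures a clip and returns the per-clip liveness judgment, for example `verify_liveness(lambda: classify(capture_clip()))`, where both helper names are placeholders.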

[0037] The present invention uses a camera to capture continuous video of the person to be verified, converts the continuous video frames in the video stream into multiple single-channel ...

Embodiment 2

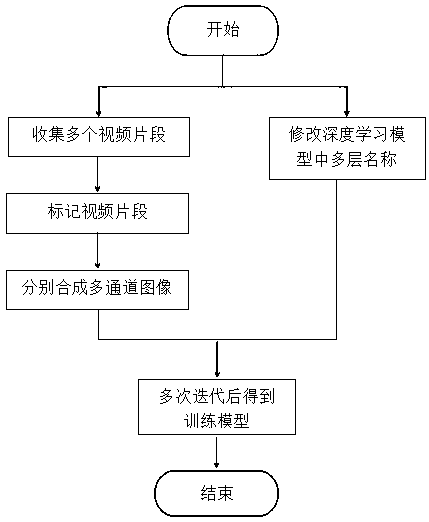

[0040] This embodiment is further optimized on the basis of Embodiment 1. As shown in Figure 2, generating the training model mainly includes the following steps:

[0041] Step A31: Collect multiple real video clips known to show a living body, together with non-living-body video clips; mark the living-body and non-living-body video clips; and combine each video clip into a multi-channel image [...] channel image with 0 as label;

[0042] Step A32: input the marked multi-channel image information in step A31 into the modified VGG Face model to obtain a fine-tuned face recognition model;

[0043] Step A33: After several iterations, a living body recognition model is obtained, that is, a trained VGG Face deep learning model.

[0044] The deep learning model in step A2 is a VGG Face deep learning model; the output parameter num_output of fc8 in the VGG Face model is 2, and the name parameter of fc8 is fc8_living; in the environment of Caffe, use the mark The actual...

Embodiment 3

[0047] This embodiment is further optimized on the basis of Embodiment 1 or 2. The video stream is processed into a multi-channel image as follows: first, continuous video of the person to be verified is collected through the camera; then multiple frames of color images are extracted from the video stream, and each color image is converted into a single-channel grayscale image; finally, three single-channel grayscale images are combined into one three-channel color image.

[0048] The method for synthesizing multi-channel images in the present invention is to first extract multiple frames of color images from the video stream and convert the color images into single-channel grayscale images; three single-channel grayscale images are then combined to form a three-channel color image, wherein the first image is used as the B channel of the color image, the second image is used as the G channel of the color image, and the third image is used as the R channel of ...
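The channel synthesis described in [0047] and [0048] can be sketched in plain Python as below. This is an illustration, not the patent's implementation: the function names `to_gray` and `merge_frames` are hypothetical, frames are modeled as nested lists of (B, G, R) tuples, and the grayscale conversion uses the common BT.601 luma weights, which is an assumption because the source does not state how the color images are converted. A practical pipeline would more likely use an image library and arrays.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]      # (B, G, R), each 0-255
ColorFrame = List[List[Pixel]]    # rows of pixels
GrayFrame = List[List[int]]       # rows of intensities


def to_gray(frame: ColorFrame) -> GrayFrame:
    """Convert a color frame to a single-channel grayscale frame.

    Assumption: BT.601 luma weights; the patent does not name a formula.
    """
    return [[round(0.114 * b + 0.587 * g + 0.299 * r) for (b, g, r) in row]
            for row in frame]


def merge_frames(first: ColorFrame, second: ColorFrame,
                 third: ColorFrame) -> ColorFrame:
    """Combine three frames into one three-channel image.

    Per the text: first frame -> B channel, second -> G, third -> R.
    """
    grays = [to_gray(f) for f in (first, second, third)]
    shapes = {(len(g), len(g[0]) if g else 0) for g in grays}
    if len(shapes) != 1:
        raise ValueError("all three frames must have the same size")
    g1, g2, g3 = grays
    return [[(b, g, r) for b, g, r in zip(row1, row2, row3)]
            for row1, row2, row3 in zip(g1, g2, g3)]
```

Because each channel holds a different moment in time, a static subject yields three nearly identical channels, while motion between frames shows up as differences across the B, G, and R channels of the merged image.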
