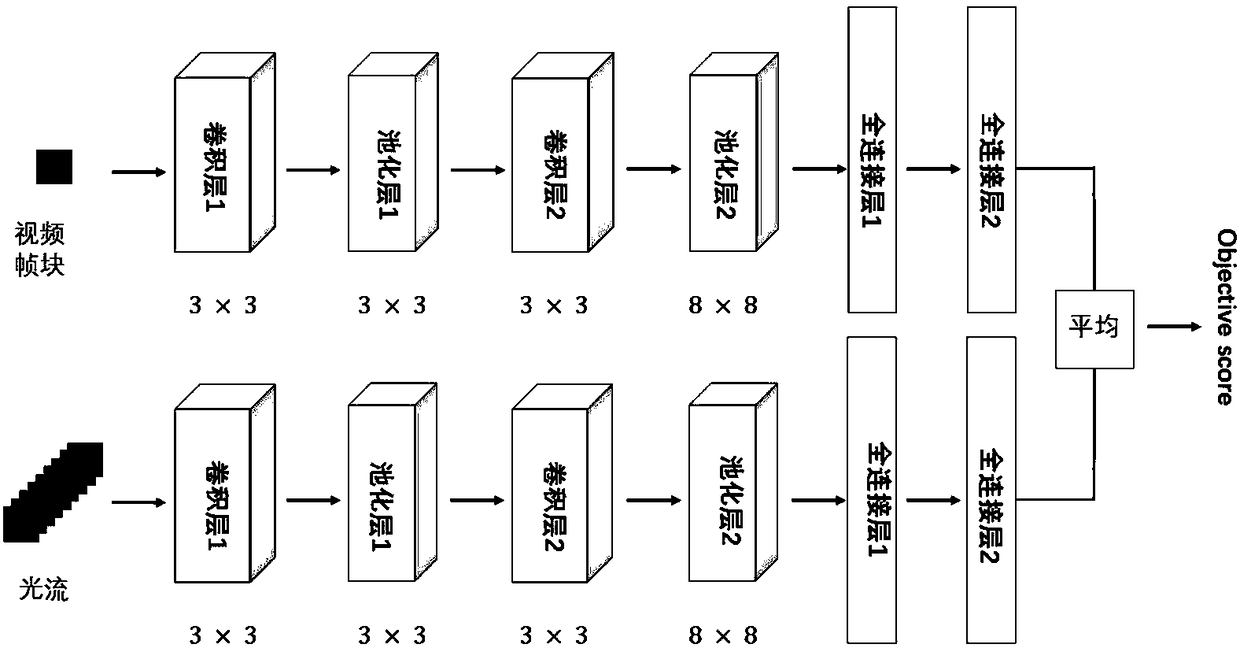

Virtual reality video quality evaluation method based on a two-stream convolutional neural network

The invention applies convolutional neural networks and virtual reality technology to the field of virtual reality video quality evaluation. It addresses the lack of normative standards and of an objective evaluation system for VR video. The method simplifies feature extraction, uses a simple preprocessing step, and takes little time.


Embodiment Construction

[0016] A virtual reality video quality assessment method based on a two-stream convolutional neural network. Each distorted VR video pair consists of a left video $V_l$ and a right video $V_r$. The evaluation method includes the following steps:

[0017] Step 1: Construct the difference video $V_d$ according to the principle of stereo perception. First, convert each frame of the original VR video and the distorted VR video to grayscale, then obtain the required difference video from the left video $V_l$ and the right video $V_r$. The value of the difference video $V_d$ at video position $(x, y, z)$ is given by formula (1):

[0018] $$V_d(x,y,z) = \left| V_l(x,y,z) - V_r(x,y,z) \right| \tag{1}$$
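Formula (1) can be illustrated with a minimal NumPy sketch. It is not the patent's implementation. The array layout (frames, height, width, channels), the function names `to_gray` and `difference_video`, and the BT.601 luma weights used for the grayscale conversion are all assumptions, since the text does not specify them.

```python
import numpy as np

# Assumption: ITU-R BT.601 luma weights. The source only says "grayscale"
# and does not name a conversion.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_gray(video_rgb):
    """Convert an RGB video of shape (frames, height, width, 3) to grayscale."""
    return video_rgb.astype(np.float64) @ LUMA_WEIGHTS


def difference_video(v_l, v_r):
    """Formula (1): V_d = |V_l - V_r|, element-wise over two grayscale videos."""
    if v_l.shape != v_r.shape:
        raise ValueError("left and right videos must have the same shape")
    # Cast to float first so unsigned 8-bit inputs cannot wrap around
    # when the right view is brighter than the left view.
    return np.abs(v_l.astype(np.float64) - v_r.astype(np.float64))


# Toy data: two frames of 2x2 RGB pixels for each view.
left = np.zeros((2, 2, 2, 3), dtype=np.uint8)
right = np.zeros((2, 2, 2, 3), dtype=np.uint8)
left[0, 0, 0] = (255, 255, 255)   # white pixel only in the left view
right[1, 1, 1] = (255, 255, 255)  # white pixel only in the right view

v_d = difference_video(to_gray(left), to_gray(right))
```

The cast to floating point matters in practice: subtracting two `uint8` arrays directly wraps modulo 256, so a pixel pair such as 10 and 20 would give 246 instead of 10.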

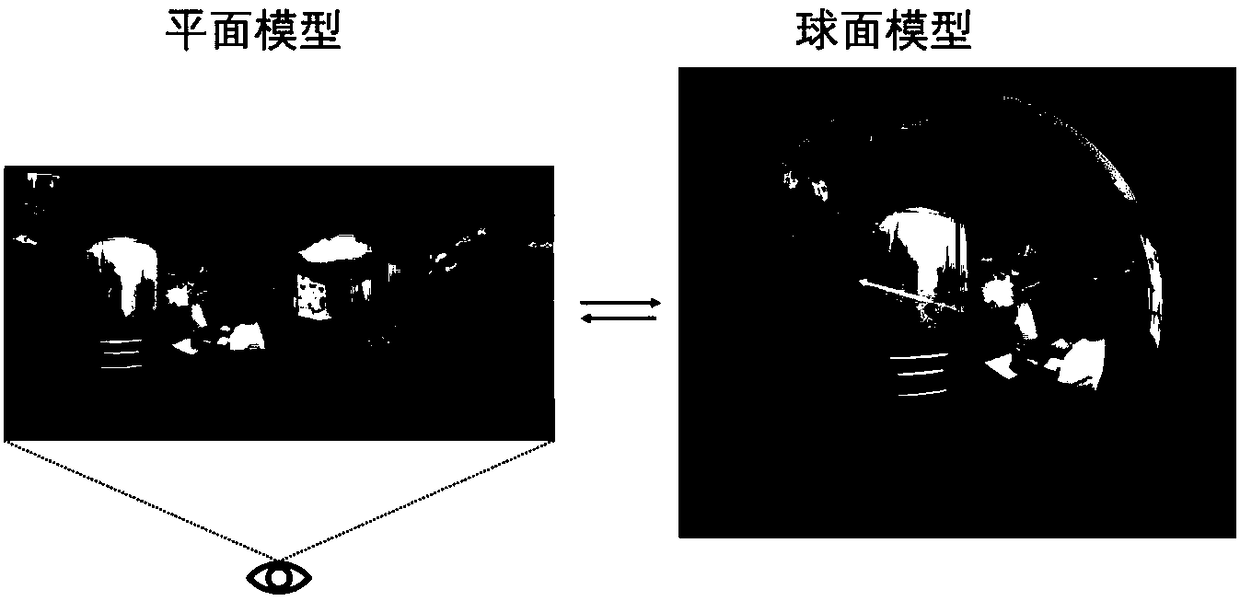

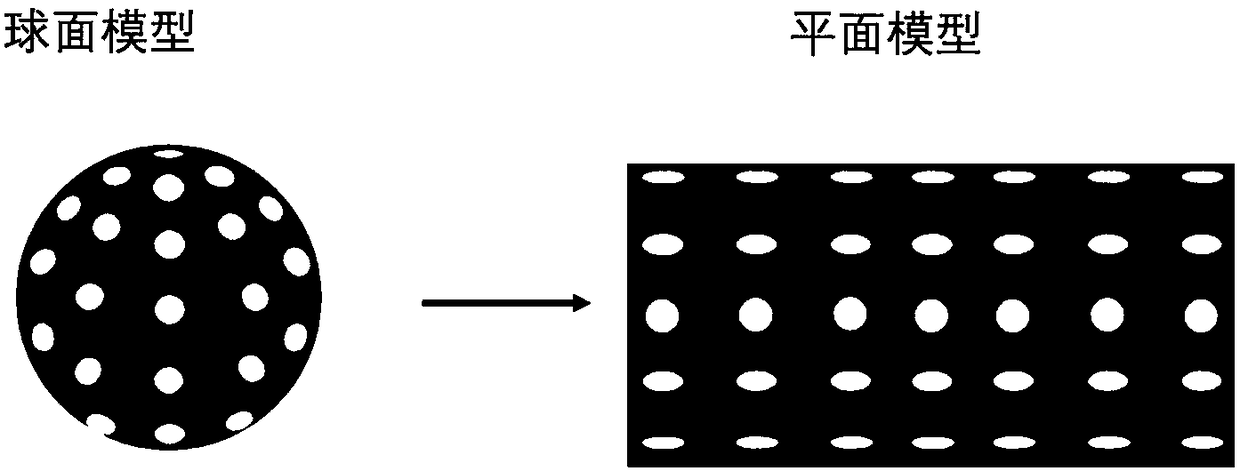

[0019] Step 2: According to the characteristics of virtual reality video projection and back-projection, spatially compress (down-sample) video frames at different positions. Down-sampling a video frame with a resolution of w×h by a factor of s yields a video frame with a resolution of (w...
