3D Pose Estimation Method of Space Object Based on Image Sequence

A three-dimensional pose estimation technique for space objects, applied in image analysis, image enhancement, image data processing, and related fields. It reduces the difficulty of pose estimation and achieves good results.

Specific Embodiment 1

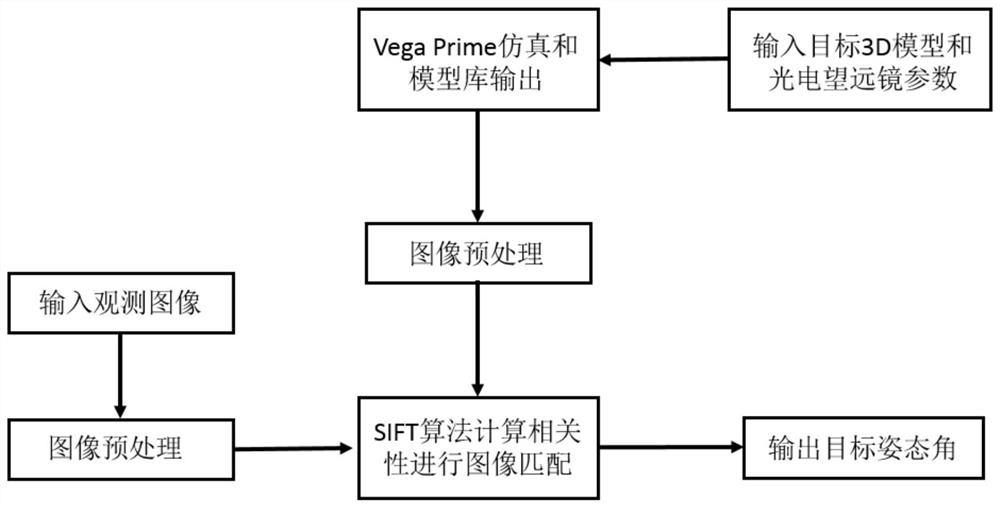

[0034] Specific Embodiment 1. This embodiment is described with reference to Figure 2. The method for estimating the three-dimensional pose of a space object based on an image sequence proceeds as follows:

[0035] Step 1: preprocess the observed image;

[0036] Step 2: acquire images of the space target in its various three-dimensional attitudes to obtain a target matching image library;

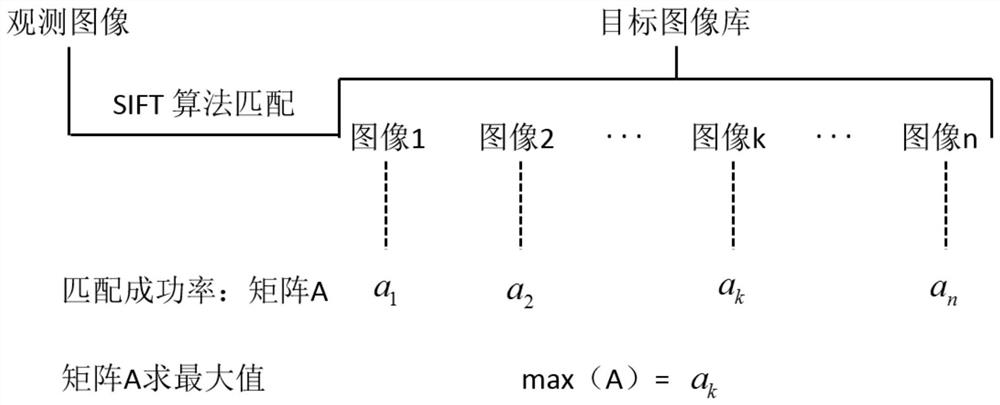

[0037] Step 3: use the scale-invariant feature transform (SIFT) algorithm to match the observed image preprocessed in Step 1 against the images in the target matching image library from Step 2, and select the most similar image;

[0038] Step 4: from the most similar image, back-calculate the three-dimensional attitude parameter values of the space object and output its attitude angles.
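Steps 3 and 4 can be illustrated with a minimal Python sketch. It assumes that feature descriptors have already been extracted from the observed image and from every library image (for example by a SIFT implementation), and that each library entry stores the attitude it was rendered at. The function names, the use of Lowe's ratio test, and the 0.75 threshold are assumptions made for illustration; the source does not specify how similarity is scored.

```python
import math


def count_ratio_test_matches(query_desc, library_desc, ratio=0.75):
    """Count query descriptors whose nearest library descriptor is clearly
    closer than the second nearest (ratio test; threshold is an assumption)."""
    matches = 0
    for q in query_desc:
        dists = sorted(math.dist(q, d) for d in library_desc)
        if len(dists) >= 2 and dists[0] < ratio * dists[1]:
            matches += 1
    return matches


def estimate_attitude(query_desc, library):
    """Return (attitude, score) of the library image most similar to the query.

    `library` is a list of (attitude, descriptors) pairs, where `attitude`
    is the three-angle tuple the library image was generated with.
    """
    best_attitude, best_score = None, -1
    for attitude, desc in library:
        score = count_ratio_test_matches(query_desc, desc)
        if score > best_score:
            best_attitude, best_score = attitude, score
    return best_attitude, best_score


# Toy 2-D "descriptors" stand in for real 128-dimensional SIFT descriptors.
library = [
    ((0, 0, 0), [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]),
    ((30, 0, 0), [[5.0, 5.0], [50.0, 50.0], [100.0, 0.0]]),
]
observed = [[5.1, 5.0], [50.0, 49.9], [100.0, 0.2]]
attitude, score = estimate_attitude(observed, library)
```

Because every library image carries its own attitude parameters, the "reverse calculation" in this sketch reduces to reading the attitude stored with the best-matching entry.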

[0039] The scale-invariant feature algorithm used in this embodiment follows Professor David G. Lowe of the University of British Columbia, who proposed a feature detection method based on invariant technol...

Specific Embodiment 2

[0040] Specific Embodiment 2. This embodiment further describes Specific Embodiment 1: the preprocessing of the observed image in Step 1 consists of noise reduction or enhancement of the observed image.

[0041] In this embodiment, the purpose of preprocessing is to improve feature point extraction when the SIFT algorithm is applied.

Specific Embodiment 3

[0042] Specific Embodiment 3. This embodiment further describes Specific Embodiment 1. The target matching image library in Step 2 is obtained as follows:

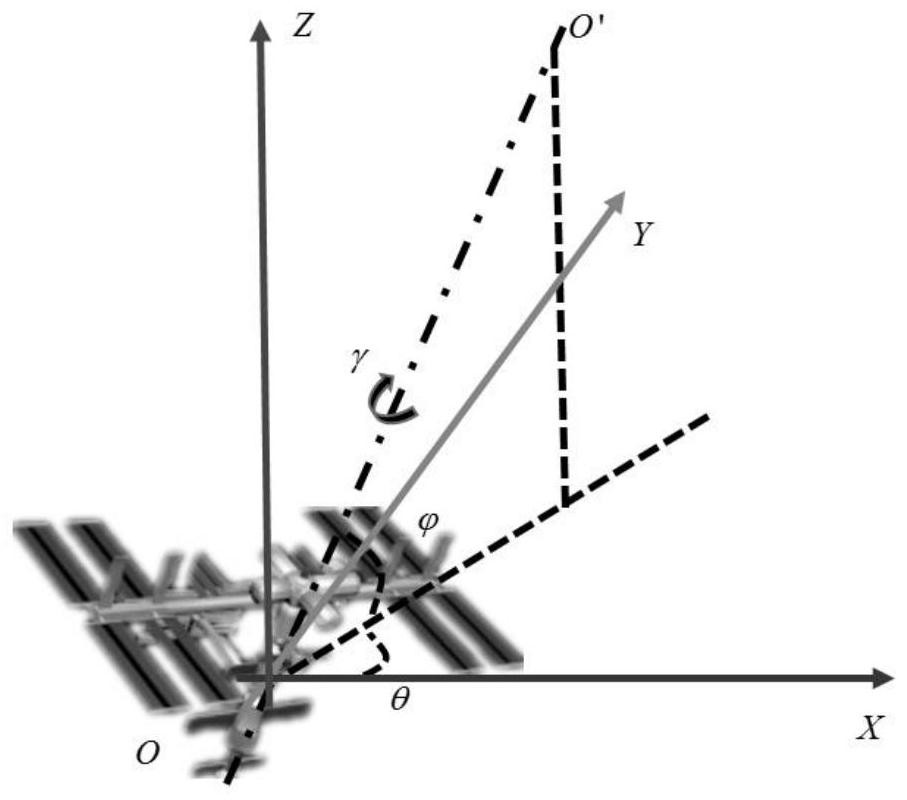

[0043] Obtain a 3D model of the space target and assign it the initial 3D attitude parameter value (0,0,0). Vary the attitude parameters from this initial value to construct a model library, from which the target matching image library is obtained.

[0044] In this embodiment, the images in the target matching image library carry the three-dimensional attitude parameter information of the space object, so each image by itself determines the three-dimensional attitude parameters of the space object in the corresponding state.
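The library construction can be sketched as follows, assuming the attitude is sampled on a uniform grid of three angles in degrees starting from (0,0,0). The grid step, the 0 to 360 degree range for every angle, and the `render` callback (a stand-in for whatever renders the 3D model at a given attitude) are hypothetical; the source does not describe the sampling scheme.

```python
from itertools import product


def attitude_grid(step_deg):
    """Enumerate attitude triples on a uniform grid, starting at (0, 0, 0)."""
    angles = range(0, 360, step_deg)
    return list(product(angles, repeat=3))


def build_library(step_deg, render):
    """Pair every sampled attitude with the image rendered at that attitude.

    `render` is a hypothetical callback that takes an attitude triple and
    returns the corresponding image of the 3D model.
    """
    return [(attitude, render(attitude)) for attitude in attitude_grid(step_deg)]


# A placeholder renderer that returns a label instead of a real image.
library = build_library(180, lambda a: "img_%d_%d_%d" % a)
```

Storing the attitude alongside each rendered image is what lets a matched library image yield the attitude parameters directly. A finer step gives better angular resolution at the cost of a library that grows with the cube of the number of samples per axis.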
