Deep-learning-based gesture recognition control method for a human-machine cooperation system

A gesture recognition and human-machine collaboration technology, applied in neural learning methods, character and pattern recognition, computer parts, and related fields. It addresses problems such as low recognition accuracy, hidden safety hazards, and machine-caused injury, achieving high accuracy and resolving both the collaborative safety problem and the high-precision work problem.

Embodiment Construction

[0018] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

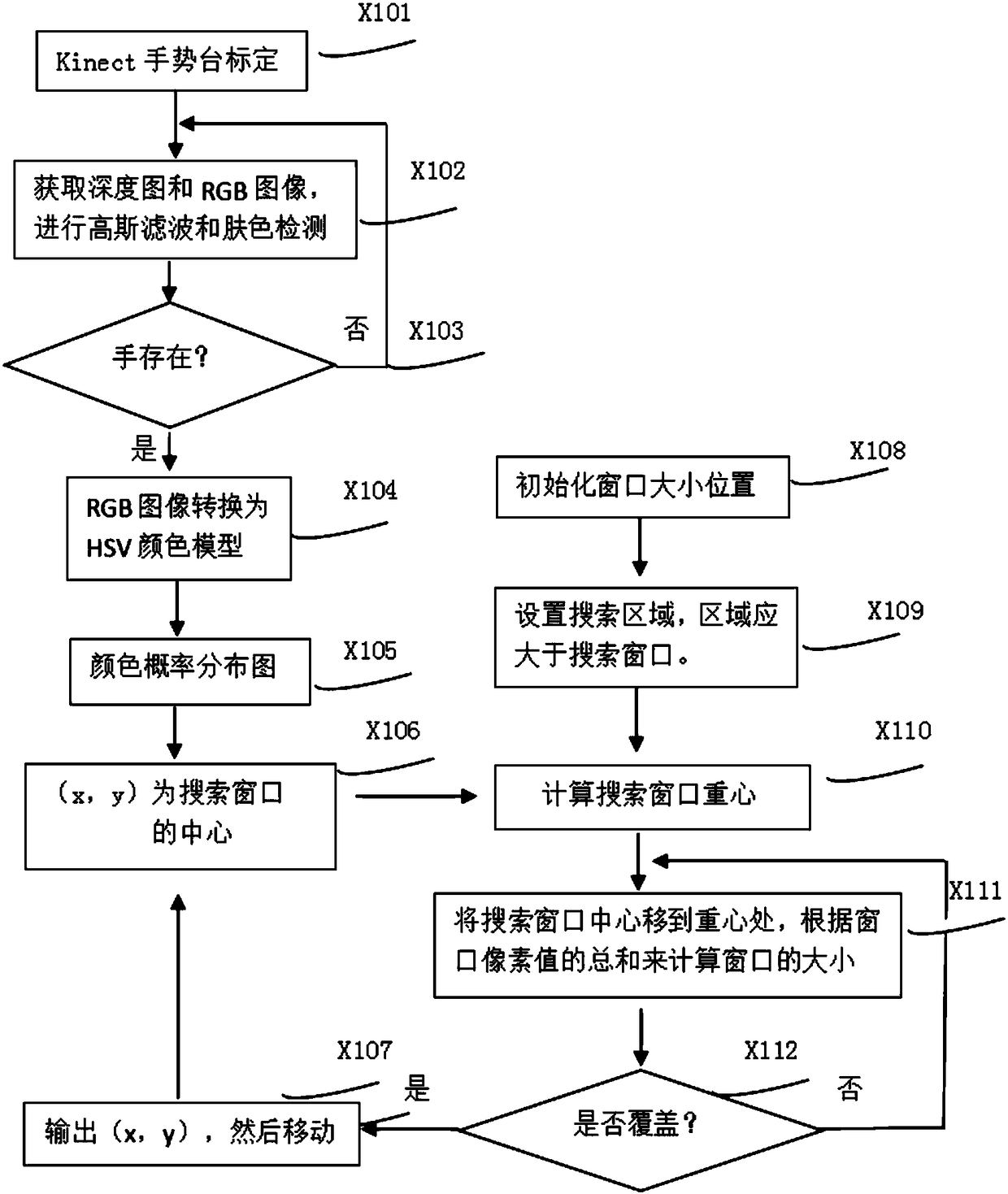

[0019] The present invention is a deep-learning-based gesture recognition control method for a human-machine cooperation system, comprising the following steps:

[0020] Step 1. Track the gesture in real time and obtain the gesture image of the operator;

[0021] In step 1, tracking the gesture in real time and obtaining the operator's gesture image specifically includes: using a Kinect somatosensory camera to collect the operator's gesture image; using the CamShift tracking algorithm to confirm that the gesture is within the collection range, and performing real-time tracking and sampling; and transmitting the operator's RGB and depth gesture images to a PC over USB for processing.
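The tracking step in [0021] can be illustrated with a minimal sketch. This is not the patent's implementation and not OpenCV's `cv2.CamShift`; it is a simplified pure-Python stand-in that keeps only the core CamShift idea: shift a search window to the centroid of a probability map until it stops moving, then resize the window from the zeroth moment. It assumes a hand-probability map with values in [0, 1] has already been computed from the Kinect RGB frame. The names `window_moments`, `camshift_track`, `in_collection_range`, and the `margin` parameter are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Window = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def window_moments(prob: List[List[float]], win: Window):
    """Zeroth moment and centroid (pixel-index coordinates) of prob inside win."""
    x0, y0, w, h = win
    m00 = m10 = m01 = 0.0
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            p = prob[y][x]
            m00 += p
            m10 += x * p
            m01 += y * p
    if m00 == 0:
        return 0.0, None
    return m00, (m10 / m00, m01 / m00)


def centered_window(cx: float, cy: float, w: int, h: int,
                    width: int, height: int) -> Window:
    """Window of size w x h centered on (cx, cy), clamped to the frame."""
    w = max(1, min(w, width))
    h = max(1, min(h, height))
    x = int(round(cx - (w - 1) / 2))
    y = int(round(cy - (h - 1) / 2))
    x = max(0, min(x, width - w))
    y = max(0, min(y, height - h))
    return (x, y, w, h)


def camshift_track(prob: List[List[float]], win: Window,
                   max_iter: int = 10) -> Optional[Window]:
    """Simplified CamShift: mean-shift to convergence, then adapt window size.

    Returns None when no probability mass lies inside the window (target lost).
    """
    height, width = len(prob), len(prob[0])
    x, y, w, h = win
    for _ in range(max_iter):
        m00, c = window_moments(prob, (x, y, w, h))
        if c is None:
            return None
        nx, ny, _, _ = centered_window(c[0], c[1], w, h, width, height)
        if (nx, ny) == (x, y):  # window stopped moving: converged
            break
        x, y = nx, ny
    m00, c = window_moments(prob, (x, y, w, h))
    if c is None:
        return None
    # Window side derived from the zeroth moment, for probabilities in [0, 1].
    side = max(2, int(round(2 * math.sqrt(m00))))
    return centered_window(c[0], c[1], side, side, width, height)


def in_collection_range(win: Window, width: int, height: int,
                        margin: int = 2) -> bool:
    """Hypothetical range check: the window stays `margin` pixels off the border."""
    x, y, w, h = win
    return (x >= margin and y >= margin
            and x + w <= width - margin and y + h <= height - margin)


if __name__ == "__main__":
    # Synthetic 20x20 frame with a 4x4 "hand" blob at columns 10..13, rows 8..11.
    frame = [[0.0] * 20 for _ in range(20)]
    for row in range(8, 12):
        for col in range(10, 14):
            frame[row][col] = 1.0
    tracked = camshift_track(frame, (4, 4, 8, 8))
    print(tracked, in_collection_range(tracked, 20, 20))
```

In a real pipeline the probability map would typically come from a hue-histogram back-projection of each RGB frame, and the window returned for one frame would seed the search in the next; a `None` result or a failed range check would signal that the gesture has left the collection range.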

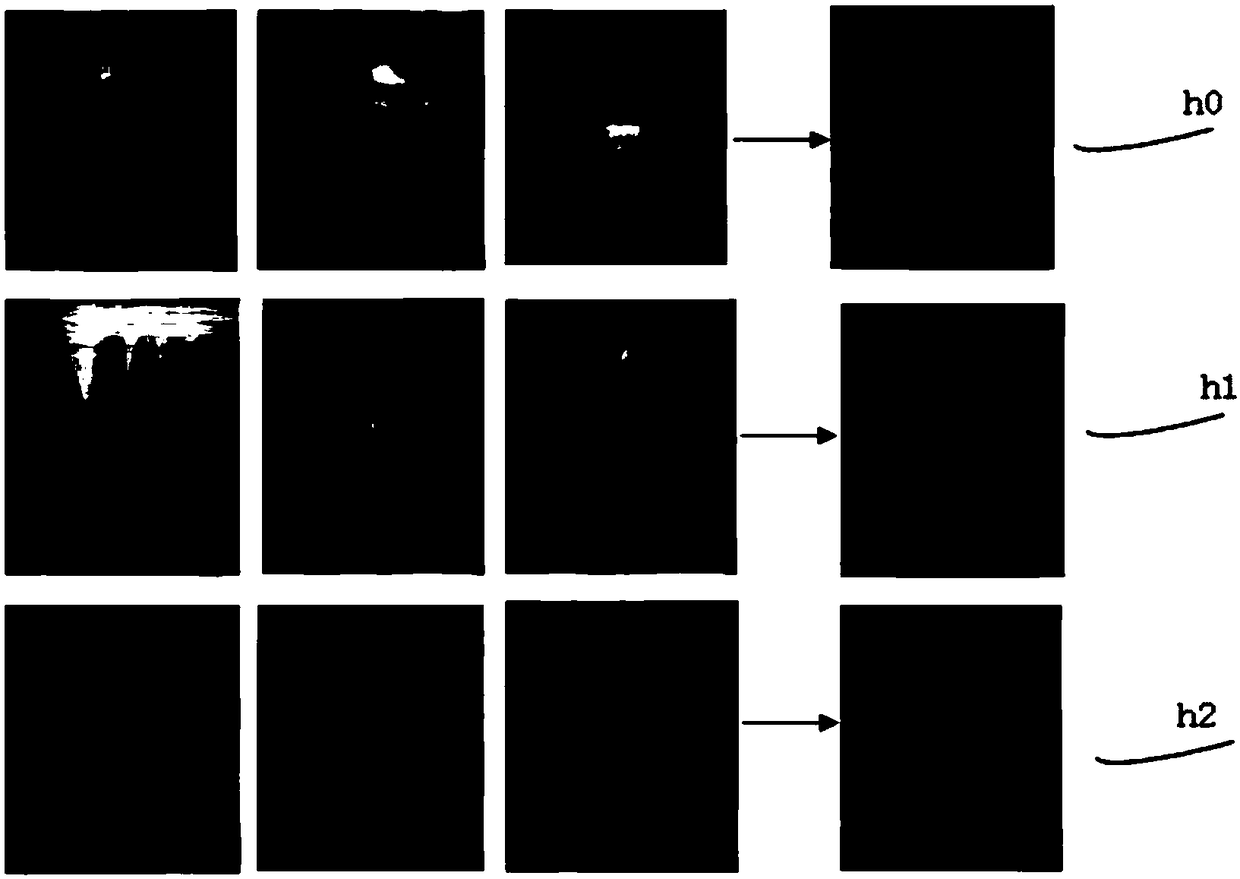

[0022] Use the CamShift tracking algorithm to confirm that the gesture image is within the collection range, and perform real-time ...
