Urban land utilization information analysis method based on deep neural network

The method applies a deep neural network to urban land use analysis in the field of remote sensing image processing. It addresses the limited accuracy of urban land use extraction and aims to improve classification performance and accuracy.

Embodiment 1

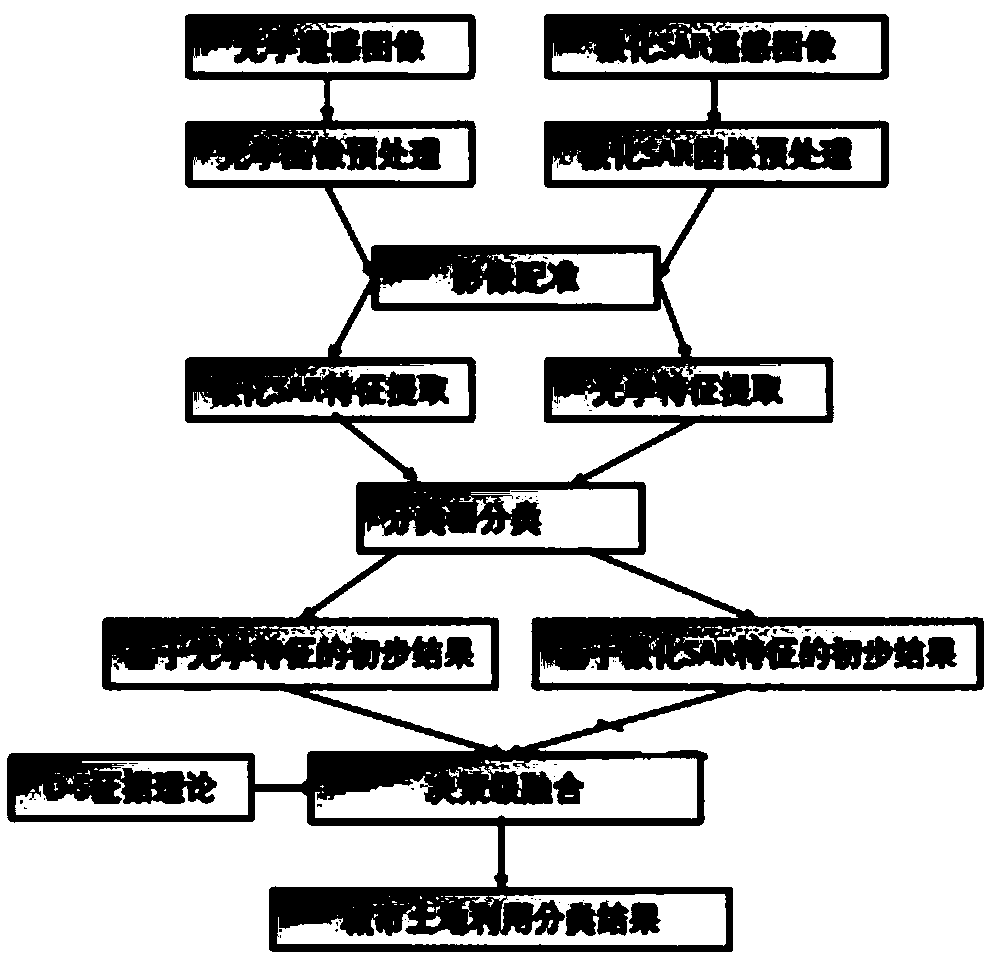

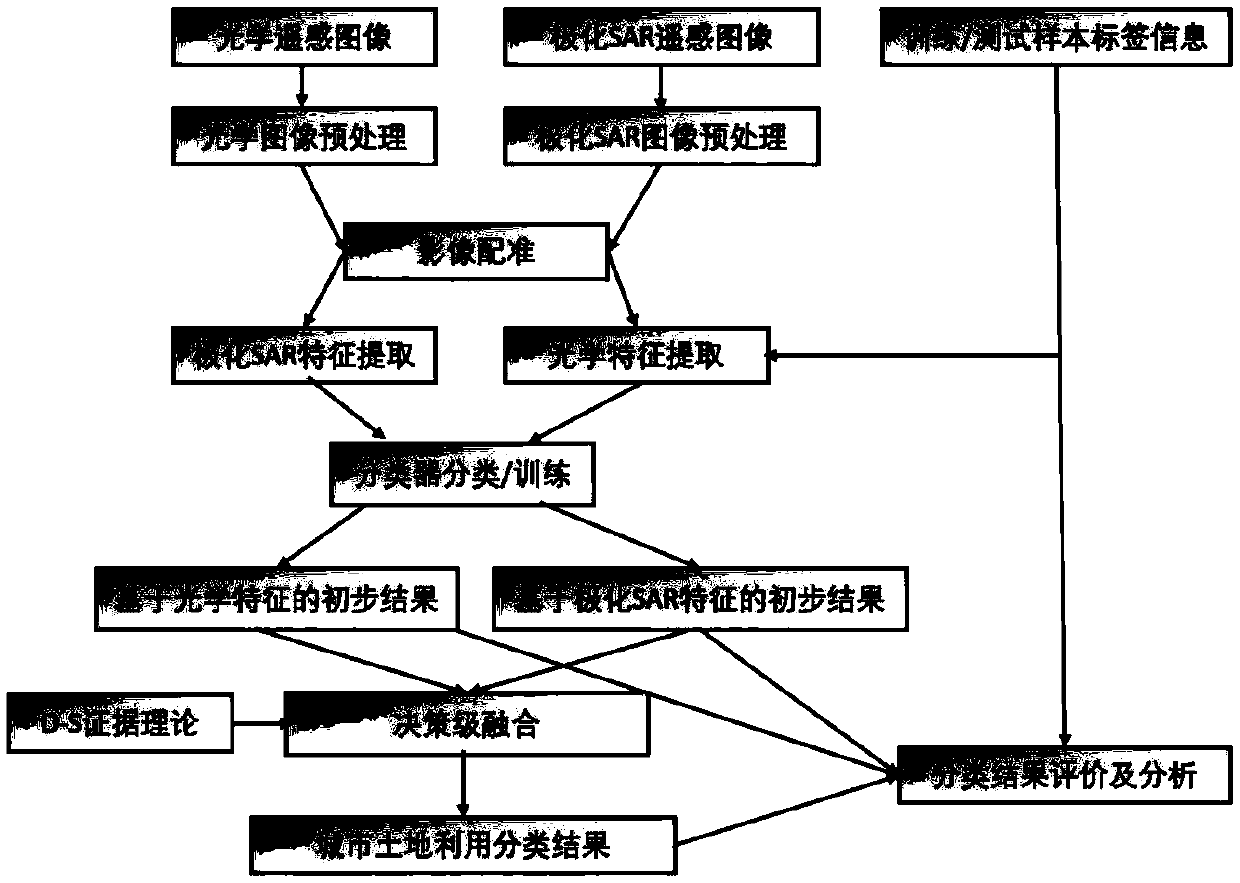

[0067] Figure 1A shows a schematic flow diagram of the method for analyzing urban land use information based on a deep neural network in this embodiment. The method includes the following steps:

[0068] S1. Obtain a set of fully polarimetric SAR data from microwave remote sensing satellites and a set of multispectral images from optical remote sensing satellites. Preprocess the fully polarimetric SAR data to obtain preprocessed fully polarimetric SAR data, and preprocess the optical image to obtain a preprocessed optical image.

[0069] In this embodiment, the fully polarimetric SAR data and the optical image are different images of the same urban area.

[0070] Note that the multispectral optical image to be processed (that is, the optical image) is obtained in advance and then subjected to preprocessing such as atmospheric correction, geometric correction, radiometric correction...

Embodiment 2

[0106] Building on the first embodiment above, each of its steps is described in detail below.

[0107] (1) Optical feature extraction

[0108] 1. Multi-spectral band information extraction

[0109] The radiation intensity information of each band of the optical image is extracted: band1, band2, ..., bandn.

[0110] 2. Gray level co-occurrence matrix (GLCM) information extraction

[0111] The intensities of the individual channels of the optical image are combined by weighted summation to obtain the radiation intensity information:

[0112] $A = c_1 \cdot \mathrm{band1} + c_2 \cdot \mathrm{band2} + \cdots + c_n \cdot \mathrm{band}n$

[0113] where the coefficients $c_i$ are selected empirically;
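The weighted summation above can be sketched in a few lines of Python. This is a minimal illustration only: the function name `weighted_intensity`, the tiny 2x2 test image, and the weight values are all hypothetical, since the source states only that the coefficients are chosen by experience. Plain nested lists are used to keep the sketch free of dependencies; a real pipeline would more likely use an array library.

```python
def weighted_intensity(bands, coeffs):
    """Return A = c1*band1 + ... + cn*bandn, computed pixel by pixel.

    bands  -- list of n equally sized 2-D lists (one per spectral band)
    coeffs -- list of n weights (user-chosen; not specified by the source)
    """
    if len(bands) != len(coeffs):
        raise ValueError("need exactly one coefficient per band")
    rows, cols = len(bands[0]), len(bands[0][0])
    return [
        [sum(c * band[r][k] for c, band in zip(coeffs, bands)) for k in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 2x2 image with three bands and illustrative weights.
band1 = [[10, 20], [30, 40]]
band2 = [[1, 2], [3, 4]]
band3 = [[0, 0], [8, 8]]
A = weighted_intensity([band1, band2, band3], [0.5, 2.0, 0.25])
```

The result `A` is a single-channel intensity image with the same height and width as the input bands.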

[0114] 3. Use the gray level co-occurrence matrix to extract texture information from the optical image: mean (Mean), correlation coefficient (Correlation), variance (Variance), homogeneity (Homogeneity), contrast (Contrast), dissimilarity (Dissimilarity), entropy (Entropy), and so on.
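As a rough illustration of how such texture statistics can be derived, the sketch below builds a symmetric, normalised GLCM for one pixel offset and computes the listed features from it. Several things here are assumptions rather than details from the source: the intensity image is taken to be already quantised to a small number of grey levels, a single horizontal offset is used, the feature formulas are the commonly used textbook definitions, and the names `glcm` and `texture_features` are hypothetical.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised GLCM of a 2-D list of ints in [0, levels)."""
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for this offset")
    return [[n / total for n in row] for row in counts]


def texture_features(p):
    """Texture statistics of a normalised, symmetric GLCM p."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mean = sum(i * v for i, j, v in cells)
    variance = sum(v * (i - mean) ** 2 for i, j, v in cells)
    feats = {
        "mean": mean,
        "variance": variance,
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for i, j, v in cells if v > 0),
    }
    # Correlation is undefined for a constant image (zero variance).
    feats["correlation"] = (
        sum(v * (i - mean) * (j - mean) for i, j, v in cells) / variance
        if variance > 0
        else float("nan")
    )
    return feats


# Hypothetical 4x4 image already quantised to 4 grey levels.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(image, levels=4)
features = texture_features(p)
```

In practice the features are usually computed in a sliding window around each pixel and often averaged over several offsets; the sketch computes them once for the whole image to keep the arithmetic easy to follow.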

[0115] ...
