Training method, identification method, device and processing device for recurrent neural network

A recurrent neural network technology, applied in the field of action recognition, that addresses problems such as the inability to recognize and predict actions, the inability to learn temporal transition relationships between actions, and the lack of an effective existing solution.


Examples

Embodiment 1

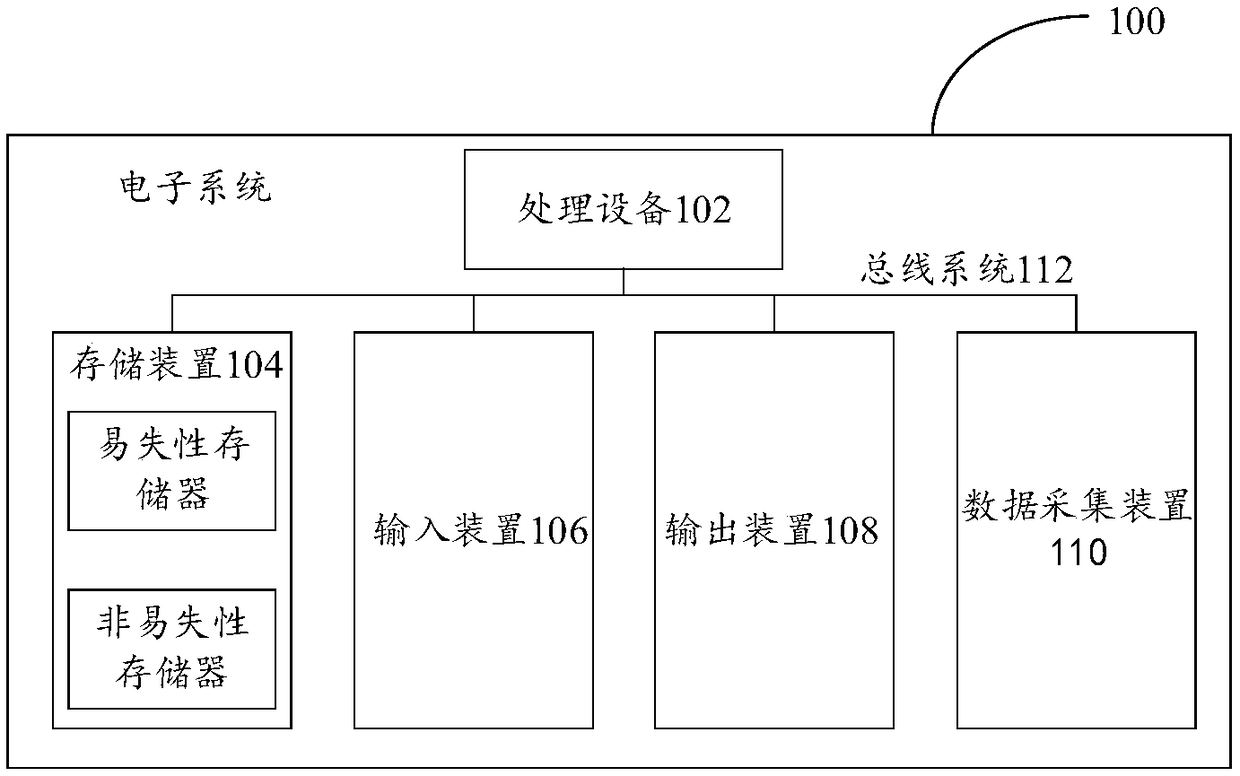

[0033] First, an example electronic system 100 for implementing the action recognition method, apparatus, processing device, and storage medium according to the embodiments of the present invention is described with reference to Figure 1.

[0034] As shown in the schematic structural diagram of Figure 1, the electronic system 100 includes one or more processing devices 102 and one or more storage devices 104. Optionally, the electronic system 100 illustrated in Figure 1 may also include an input device 106, an output device 108, and a data acquisition device 110, interconnected by a bus system 112 and/or another form of connection mechanism (not shown). It should be noted that the components and structure of the electronic system 100 illustrated in Figure 1 are exemplary rather than limiting; the electronic system may have other components and structures as needed.

[0035] The processing device 102 may be a gateway, a smart terminal, or a device including a ...

Embodiment 2

[0043] According to an embodiment of the present invention, an embodiment of an action recognition method is provided. It should be noted that the steps shown in the flowcharts of the accompanying drawings may be executed in a computer system, for example as a set of computer-executable instructions. Although a logical order is shown in the flowcharts, in some cases the steps shown or described may be performed in a different order.

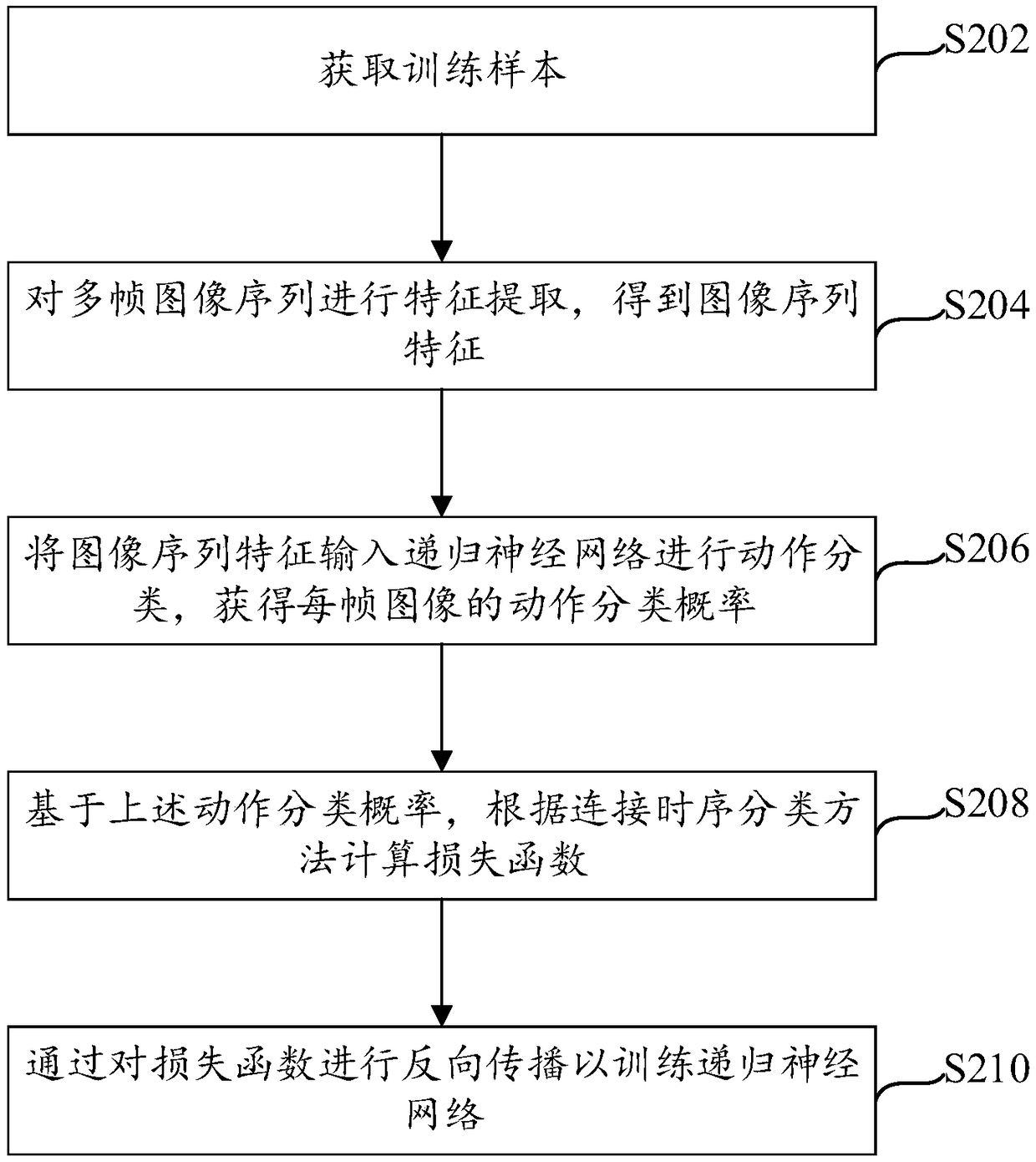

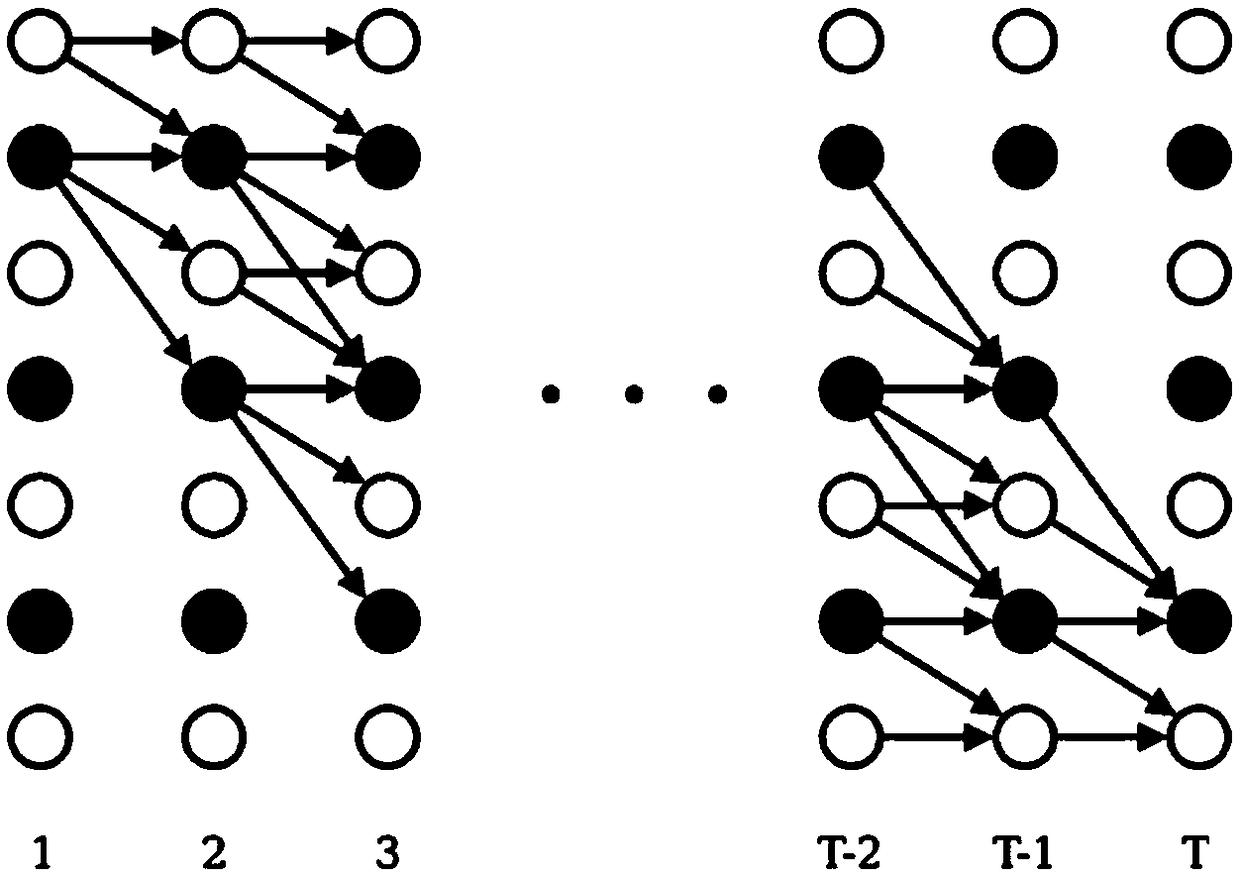

[0044] The recurrent neural network used in this embodiment of the present invention is trained by an action recognition network training method based on the CTC (Connectionist Temporal Classification) loss function, which is computed by dynamic programming. Referring to the flowchart of the recurrent neural network training method shown in Figure 2, the method includes the following steps:

[0045] Step S202, acquiring training samples. The training samples include multi-frame image sequences and acti...
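The CTC loss named in [0044] can be illustrated with the standard CTC forward (alpha) recursion. The sketch below is a minimal pure-Python version, assuming the recurrent network outputs per-frame log-probabilities over the action classes plus a blank class at index 0; the function names, the blank index, and the log-space formulation are assumptions for illustration, since this excerpt does not show the patent's exact loss computation.

```python
import math
from typing import Sequence

NEG_INF = float("-inf")


def log_add(a: float, b: float) -> float:
    """Numerically stable log(exp(a) + exp(b))."""
    if a == NEG_INF:
        return b
    if b == NEG_INF:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def ctc_loss(log_probs: Sequence[Sequence[float]],
             labels: Sequence[int],
             blank: int = 0) -> float:
    """Return -log p(labels | frames) via the CTC forward recursion.

    log_probs[t][k]: log-probability of class k at frame t (assumes T >= 1).
    labels: target action label sequence, without blanks (hypothetical encoding).
    Returns inf when no alignment of length T can produce the labels.
    """
    T = len(log_probs)
    # Extended sequence with blanks around every label: _, l1, _, l2, ..., lU, _
    ext = [blank]
    for lab in labels:
        ext += [lab, blank]
    S = len(ext)

    # Initialisation: a path may start with a blank or with the first label.
    alpha = [NEG_INF] * S
    alpha[0] = log_probs[0][blank]
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]

    for t in range(1, T):
        new = [NEG_INF] * S
        for s in range(S):
            acc = alpha[s]                                # stay on the same symbol
            if s >= 1:
                acc = log_add(acc, alpha[s - 1])          # advance by one position
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                acc = log_add(acc, alpha[s - 2])          # skip a blank between distinct labels
            new[s] = acc + log_probs[t][ext[s]]
        alpha = new

    # Valid paths end on the last label or on the trailing blank.
    total = alpha[S - 1]
    if S > 1:
        total = log_add(total, alpha[S - 2])
    return -total
```

As a check, with two frames and uniform probabilities over {blank, action 1}, the three alignments (1, 1), (blank, 1), and (1, blank) all collapse to the label [1], so `ctc_loss` returns -log 0.75. A repeated label such as [1, 1] needs a blank between the two 1s, so two frames are too few and the loss is infinite.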

Embodiment 3

[0069] Corresponding to the recurrent neural network training method provided in Embodiment 2, this embodiment of the present invention provides a training device for the recurrent neural network. Referring to the structural block diagram of the training device shown in Figure 5, the device includes:

[0070] A sample acquisition module 502, configured to acquire training samples, where the training samples include a multi-frame image sequence of the video and an action identifier corresponding to the video;

[0071] A feature extraction module 504, configured to perform feature extraction on the multi-frame image sequence to obtain image sequence features, where the image sequence features include the features of each image frame;

[0072] An action classification module 506, configured to input the image sequence features into the recurrent neural network for action classification and obtain the action classification probability of each image frame...
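The per-frame classification performed by module 506 can be sketched as a recurrent layer that consumes each frame's feature vector in order and emits a softmax distribution over action classes (plus a CTC blank) for every frame. This is a minimal pure-Python sketch under stated assumptions: the excerpt does not specify the recurrent cell, so a plain Elman (tanh) RNN stands in for it, and the weight names, the blank index 0, and the greedy best-path decoder are illustrative additions rather than the patent's method. The input `features` stands for whatever per-frame features module 504 produces, which this excerpt does not describe.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Dense matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(z: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def rnn_frame_probs(features: Sequence[Sequence[float]],
                    W_xh: Matrix, W_hh: Matrix, b_h: Sequence[float],
                    W_hy: Matrix, b_y: Sequence[float]) -> List[Vector]:
    """Assumed Elman RNN, one class distribution per frame:
    h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h),  p_t = softmax(W_hy h_t + b_y).
    """
    h = [0.0] * len(b_h)  # initial hidden state
    probs = []
    for x in features:
        pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(v) for v in pre]
        logits = [a + b for a, b in zip(matvec(W_hy, h), b_y)]
        probs.append(softmax(logits))
    return probs


def greedy_ctc_decode(probs: Sequence[Sequence[float]], blank: int = 0) -> List[int]:
    """Best-path decoding: take the argmax per frame, merge repeats, drop blanks."""
    out: List[int] = []
    prev = None
    for p in probs:
        k = max(range(len(p)), key=p.__getitem__)
        if k != prev and k != blank:
            out.append(k)
        prev = k
    return out
```

Greedy decoding merges consecutive repeats before removing blanks, so frames whose most probable classes are 1, 1, blank, 2, 1 decode to the action sequence [1, 2, 1]. During training, the per-frame distributions returned by `rnn_frame_probs` are what a CTC loss would consume; in practice the cell could equally be an LSTM or GRU.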

