Sparse reconstruction-based high-accuracy estimation method for the KA-STAP (Knowledge-Aided Space-Time Adaptive Processing) clutter-plus-noise covariance matrix

A sparse reconstruction-based KA-STAP covariance matrix estimation technique, applied in radio wave measurement systems and instruments, addresses problems such as STAP performance degradation. It improves clutter suppression and target detection performance with a small computational load and is easy to implement in engineering practice.

Detailed Description of Embodiments

[0058] The technical solution of the present invention is further described below with reference to the accompanying drawings:

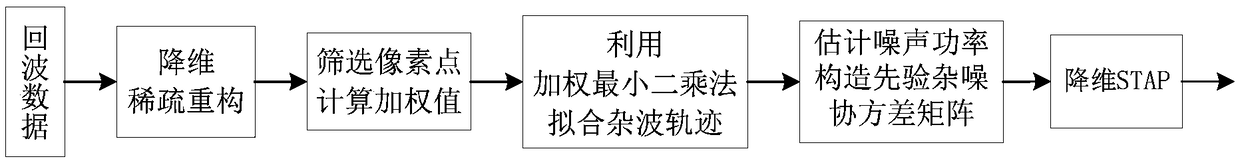

[0059] As shown in Figure 1, the sparse reconstruction-based method for high-precision estimation of the KA-STAP clutter-plus-noise covariance matrix specifically includes the following steps:

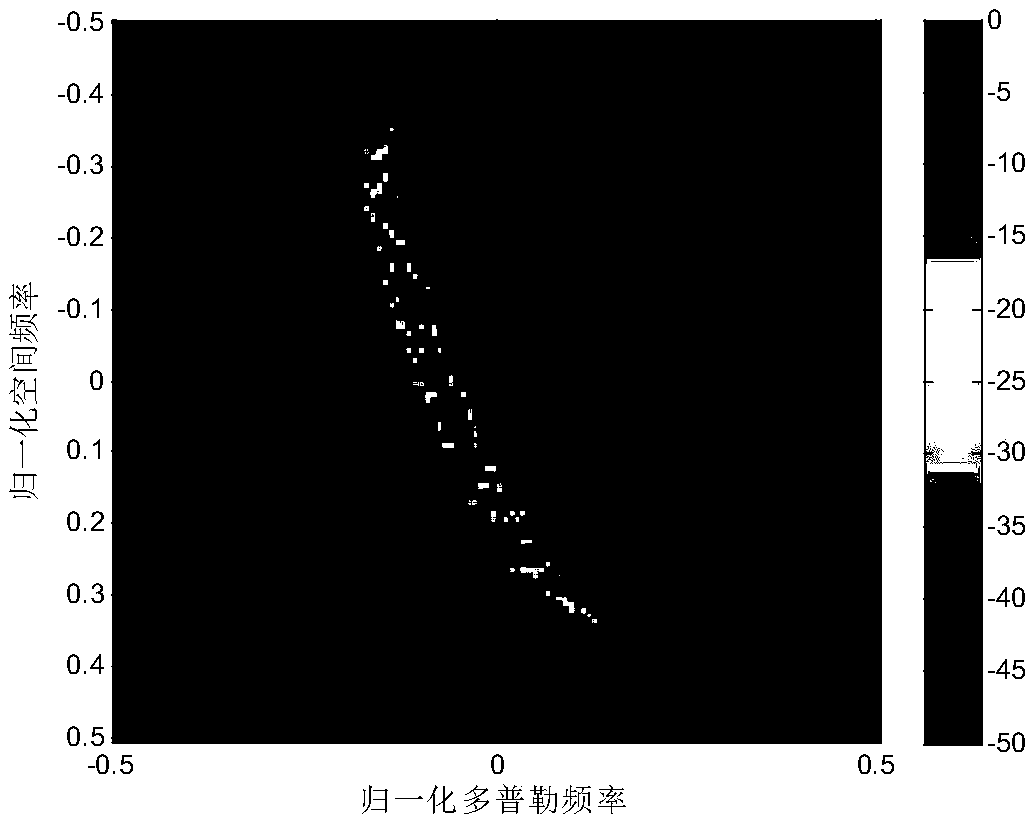

[0060] S1: analyze the airborne radar echo data and use sparse reconstruction to obtain a high-resolution two-dimensional space-time spectrum;

[0061] S2: screen the pixels of the two-dimensional space-time spectrum and calculate the weight corresponding to each selected pixel;

[0062] S3: fit the clutter trajectory (the clutter ridge) using the weighted least squares method;

[0063] S4: estimate the noise power from the sparsely reconstructed space-time spectrum and construct the prior clutter-plus-noise covariance matrix;

[0064] S5: use the prior clutter-plus-noise covariance matrix and reduced-dimension STAP to perform adaptive filtering and target detection on the cell under test. ...
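Step S1 does not name a specific sparse recovery algorithm. As a minimal sketch, assuming a side-looking uniform linear array with N elements, M pulses per coherent processing interval, normalized spatial and Doppler frequencies, and a dictionary of space-time steering vectors on a uniform grid, the spectrum can be recovered snapshot by snapshot with orthogonal matching pursuit (OMP). OMP is a swapped-in choice, not necessarily the patent's method; the functions `steering`, `build_dictionary`, `omp`, `space_time_spectrum` and the sparsity level `K` are hypothetical.

```python
import numpy as np

def steering(fs, fd, N, M):
    """Space-time steering vector kron(temporal, spatial) for normalized
    spatial frequency fs and normalized Doppler fd (ULA, constant PRF)."""
    a = np.exp(2j * np.pi * fs * np.arange(N))   # spatial part, N elements
    b = np.exp(2j * np.pi * fd * np.arange(M))   # temporal part, M pulses
    return np.kron(b, a)

def build_dictionary(N, M, Ns, Nd):
    """Dictionary of steering vectors on an Nd x Ns (Doppler x spatial) grid."""
    fs_grid = np.linspace(-0.5, 0.5, Ns, endpoint=False)
    fd_grid = np.linspace(-0.5, 0.5, Nd, endpoint=False)
    atoms = [steering(fs, fd, N, M) for fd in fd_grid for fs in fs_grid]
    return np.stack(atoms, axis=1), fs_grid, fd_grid

def omp(D, x, K):
    """Orthogonal matching pursuit: K-sparse coefficients with x ≈ D @ alpha."""
    residual = x.copy()
    support = []
    coef = np.zeros(0, dtype=complex)
    for _ in range(K):
        corr = np.abs(D.conj().T @ residual)
        corr[support] = 0.0                      # never reselect an atom
        support.append(int(np.argmax(corr)))
        Ds = D[:, support]
        coef, *_ = np.linalg.lstsq(Ds, x, rcond=None)
        residual = x - Ds @ coef
    alpha = np.zeros(D.shape[1], dtype=complex)
    alpha[support] = coef
    return alpha

def space_time_spectrum(X, D, K, Ns, Nd):
    """Average |alpha|^2 over snapshots (columns of X); rows = Doppler, cols = spatial."""
    P = np.zeros(D.shape[1])
    for l in range(X.shape[1]):
        P += np.abs(omp(D, X[:, l], K)) ** 2
    return (P / X.shape[1]).reshape(Nd, Ns)

# Usage: one noise-free, on-grid scatterer with amplitude 2.
N, M, Ns, Nd = 4, 4, 16, 16
D, fs_grid, fd_grid = build_dictionary(N, M, Ns, Nd)
x = 2.0 * steering(fs_grid[3], fd_grid[5], N, M)
S = space_time_spectrum(x[:, None], D, K=2, Ns=Ns, Nd=Nd)
peak = np.unravel_index(np.argmax(S), S.shape)   # (Doppler index, spatial index)
```

The grid is deliberately finer than the Fourier resolution (1/N, 1/M); this is what lets a sparse method produce a sharper spectrum than a beamformer, at the price of a highly coherent dictionary.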
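Steps S2 and S3 leave the screening rule, the weights and the ridge model unspecified. The sketch below assumes a power threshold relative to the spectrum peak for screening, uses the peak-normalized pixel power as the weight, and fits a straight clutter ridge fd ≈ beta·fs + c by weighted least squares, which suits a side-looking array without Doppler ambiguity. `screen_pixels`, `wls_fit_ridge` and `thr_db` are hypothetical names.

```python
import numpy as np

def screen_pixels(S, fs_grid, fd_grid, thr_db=20.0):
    """Hypothetical screening rule: keep pixels within thr_db of the spectrum
    peak and use their peak-normalized power as the WLS weight."""
    thr = S.max() * 10 ** (-thr_db / 10)
    idx_d, idx_s = np.nonzero(S >= thr)          # rows = Doppler, cols = spatial
    w = S[idx_d, idx_s] / S.max()
    return fs_grid[idx_s], fd_grid[idx_d], w

def wls_fit_ridge(fs, fd, w):
    """Weighted least squares fit of the straight ridge fd ≈ beta*fs + c,
    i.e. minimize sum_i w_i * (fd_i - beta*fs_i - c)**2."""
    A = np.column_stack([fs, np.ones_like(fs)])
    sw = np.sqrt(w)
    (beta, c), *_ = np.linalg.lstsq(A * sw[:, None], fd * sw, rcond=None)
    return beta, c

# Usage: synthetic spectrum with a ridge fd = fs of varying power,
# one weak off-ridge pixel and a low floor.
grid = np.linspace(-0.5, 0.5, 32, endpoint=False)
S = np.full((32, 32), 1e-6)
for i in range(32):
    S[i, i] = 1.0 + np.cos(np.pi * grid[i]) ** 2
S[3, 20] = 1e-3                                  # about 33 dB below the peak: screened out
fs, fd, w = screen_pixels(S, grid, grid, thr_db=20.0)
beta, c = wls_fit_ridge(fs, fd, w)
```

Weighting by power lets strong, well-resolved clutter pixels dominate the fit, so weak spurious pixels that survive screening bias the ridge estimate less than in an unweighted fit.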
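For step S4, the source gives neither the noise power estimator nor the exact covariance construction. One hedged possibility, assuming the spectrum `S` holds the average squared sparse coefficient per grid point (rows Doppler, columns spatial frequency) and that the atoms are nearly orthogonal, takes the noise power as the per-element snapshot power left unexplained by `S`. It then places the power of each spatial-frequency column on the fitted ridge, giving R = Σ_j P_j v_j v_j^H + σ² I with v_j = v(fs_j, beta·fs_j + c). `estimate_noise_power` and `prior_covariance` are hypothetical, and beta, c below are illustrative values rather than a real fit.

```python
import numpy as np

def steering(fs, fd, N, M):
    """Space-time steering vector kron(temporal, spatial), normalized frequencies."""
    a = np.exp(2j * np.pi * fs * np.arange(N))
    b = np.exp(2j * np.pi * fd * np.arange(M))
    return np.kron(b, a)

def estimate_noise_power(X, S, floor=1e-12):
    """Hypothetical estimator: average per-element snapshot power minus the
    clutter power captured by the sparse spectrum S (near-orthogonal atoms assumed)."""
    NM = X.shape[0]
    total = np.mean(np.sum(np.abs(X) ** 2, axis=0)) / NM
    return max(total - S.sum(), floor)

def prior_covariance(S, fs_grid, beta, c, sigma2, N, M):
    """R = sum_j P_j v_j v_j^H + sigma2 * I, where P_j is the total power in
    spatial column j of S and v_j sits on the fitted ridge fd = beta*fs_j + c."""
    R = sigma2 * np.eye(N * M, dtype=complex)
    for fs, P in zip(fs_grid, S.sum(axis=0)):
        if P > 0:
            v = steering(fs, beta * fs + c, N, M)
            R += P * np.outer(v, v.conj())
    return R

# Usage: 4 elements x 4 pulses, 8 spatial columns of power 0.5 on a ridge with beta = 0.5.
N, M = 4, 4
fs_grid = np.linspace(-0.5, 0.5, 8, endpoint=False)
S = np.zeros((8, 8))
S[2, :] = 0.5
X = np.full((N * M, 1), np.sqrt(4.5))            # per-element power 4.5
sigma2 = estimate_noise_power(X, S)              # 4.5 - 8 * 0.5
R = prior_covariance(S, fs_grid, beta=0.5, c=0.0, sigma2=sigma2, N=N, M=M)
```

Because the clutter term has rank at most 8 in this 16-dimensional example, the smallest eigenvalue of R equals the estimated noise power, which is a quick sanity check on any prior covariance built this way.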
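Step S5 does not say which reduced-dimension STAP structure is used. As one illustrative choice, the sketch applies an adjacent-bin post-Doppler transform T = F ⊗ I_N, where F holds temporal steering vectors at the target Doppler and its two DFT-spaced neighbours, and computes MVDR weights in the reduced space from the prior covariance. `adjacent_bin_transform`, `reduced_dim_weights` and `filter_cell` are hypothetical; the detection threshold (for example a CFAR test on |y|²) is not shown.

```python
import numpy as np

def steering(fs, fd, N, M):
    """Space-time steering vector kron(temporal, spatial), normalized frequencies."""
    a = np.exp(2j * np.pi * fs * np.arange(N))
    b = np.exp(2j * np.pi * fd * np.arange(M))
    return np.kron(b, a)

def adjacent_bin_transform(fd_t, N, M, n_bins=3):
    """Reduced-dimension transform T = kron(F, I_N): F holds temporal steering
    vectors at the target Doppler and its nearest DFT-spaced neighbours."""
    offsets = (np.arange(n_bins) - n_bins // 2) / M
    m = np.arange(M)[:, None]
    F = np.exp(2j * np.pi * (fd_t + offsets)[None, :] * m)
    return np.kron(F, np.eye(N))

def reduced_dim_weights(R, s, T):
    """MVDR weights in the reduced space: w = R_r^{-1} s_r / (s_r^H R_r^{-1} s_r)."""
    R_r = T.conj().T @ R @ T
    s_r = T.conj().T @ s
    Ri_s = np.linalg.solve(R_r, s_r)
    return Ri_s / (s_r.conj() @ Ri_s)

def filter_cell(x, w, T):
    """Filter output for the cell under test: y = w^H T^H x."""
    return w.conj() @ (T.conj().T @ x)

# Usage: one strong clutter component (CNR 40 dB) and a target at fs = 0, fd = 0.25.
N, M = 4, 8
v_c = steering(0.1, 0.1, N, M)
R = np.eye(N * M) + 1e4 * np.outer(v_c, v_c.conj())
s = steering(0.0, 0.25, N, M)
T = adjacent_bin_transform(0.25, N, M)
w = reduced_dim_weights(R, s, T)
y_target = filter_cell(s, w, T)      # unit gain on the target steering vector
y_clutter = filter_cell(v_c, w, T)   # strongly attenuated clutter response
```

Only 3N = 12 adaptive degrees of freedom remain instead of NM = 32, which shrinks the matrix to invert; the distortionless constraint keeps the target gain at exactly one while the clutter component is nulled.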
