A named data network adaptive caching strategy for the Internet of Vehicles

A technology in the field of named data networking and caching strategies, applied in electrical components, transmission systems, etc. It addresses problems such as low utilization of cache resources, reduced cache diversity, and low cache efficiency, and achieves the effects of avoiding broadcast storms and releasing cache space.


Detailed Description of the Embodiments

[0026] The present invention is described in further detail below in conjunction with the accompanying drawings:

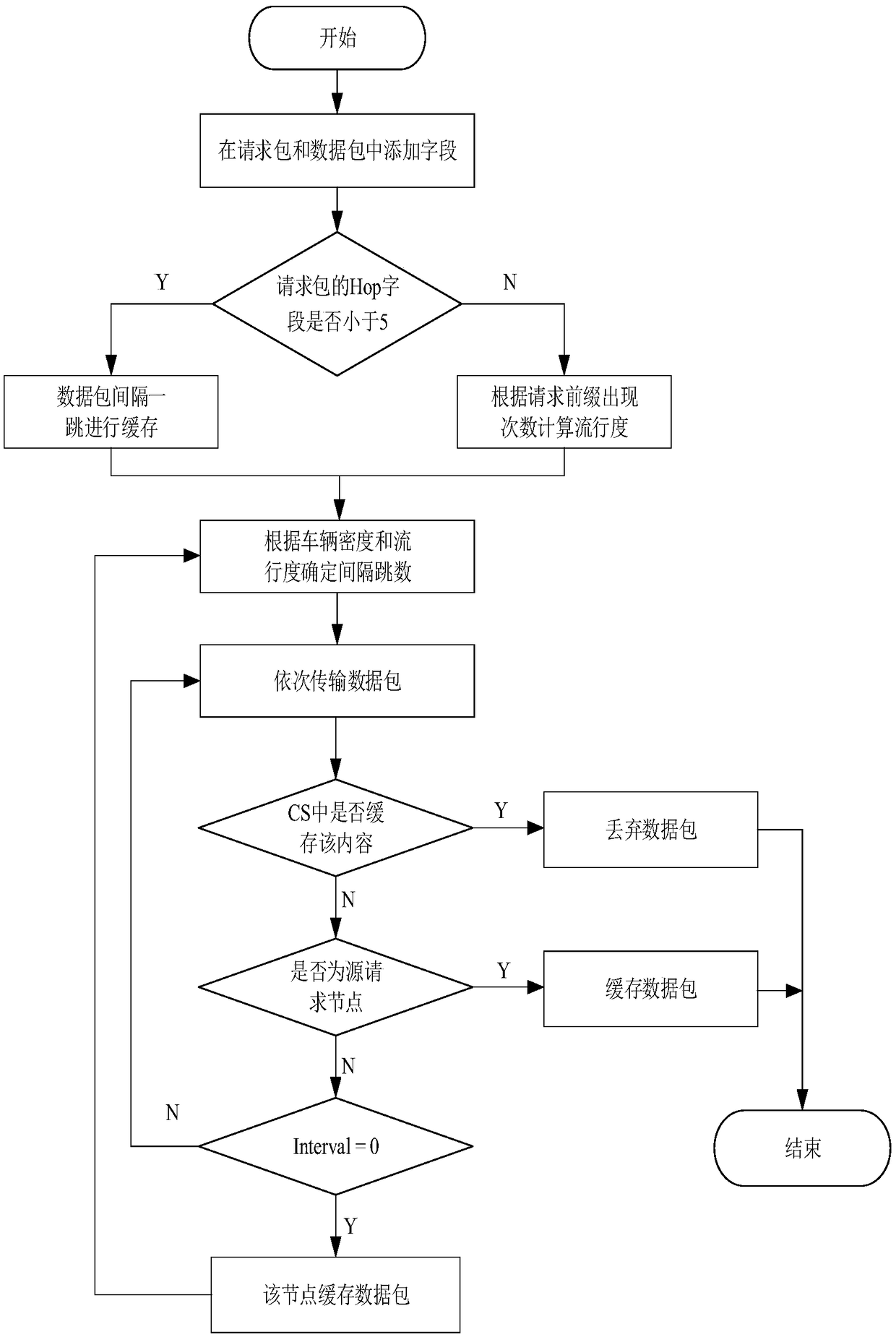

[0027] As shown in Figure 1, the present invention is a named data network adaptive caching strategy oriented to the Internet of Vehicles.

[0028] Let the source request node be $V_i$ and the data source node be $V_{j1}$. The set of vehicle nodes on the Data return path is $V_j = \{V_{j1}, V_{j2}, V_{j3}, \ldots, V_{jn}\}$, where $V_{j2}$ is the next node after the data source node $V_{j1}$ on the return path, and $V_{j3}$ through $V_{jn}$ are the successive next nodes along the return path. The last node of the return path, $V_{jn}$, is $V_i$.
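The node notation above can be made concrete with a small data structure. The following Python sketch is illustrative only: `ReturnPath` and `return_path_from_interest` are hypothetical names, and the sketch assumes, as in standard NDN forwarding, that the Data packet retraces the Interest's path in reverse by following PIT entries.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReturnPath:
    """Hypothetical model of the Data return path V_j = [V_j1, V_j2, ..., V_jn]."""
    nodes: List[str]

    @property
    def data_source(self) -> str:
        # V_j1: the node that holds the requested content
        return self.nodes[0]

    @property
    def requester(self) -> str:
        # V_jn: the last node on the return path, which is the requester V_i
        return self.nodes[-1]

    def next_hop(self, node: str) -> str:
        # The node that follows `node` on the return path (e.g. V_j2 follows V_j1)
        idx = self.nodes.index(node)
        if idx == len(self.nodes) - 1:
            raise ValueError(f"{node} is the requester; there is no next hop")
        return self.nodes[idx + 1]


def return_path_from_interest(interest_path: List[str]) -> ReturnPath:
    """Build V_j from the Interest path [V_i, ..., V_j1].

    Assumption: the Data packet follows the Interest path in reverse,
    as in standard NDN forwarding via PIT entries.
    """
    if len(interest_path) < 2:
        raise ValueError("need at least a requester and a data source")
    return ReturnPath(nodes=list(reversed(interest_path)))
```

For an Interest that travels `["V_i", "A", "B", "V_j1"]`, the return path is `["V_j1", "B", "A", "V_i"]`, so in the patent's notation `B` plays the role of $V_{j2}$, `A` of $V_{j3}$, and $V_{jn} = V_i$.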

[0029] The strategy specifically includes the following steps:

[0030] Step 1): Add fields to the Interest packet and the Data packet.

[0031] Add a hop-count field, Hop, to the packet format of the Named Data Network Interest packet to record the number of nodes the Interest packet passes through; the Hop field is incremented by 1 each time the packet passes a node. Add the number of occurrences of the request pr...
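The Hop-field behaviour described in [0031] can be sketched as follows. This is a minimal illustration, not the patent's implementation: the `Interest` class, the function names, and the content name `/traffic/road1` are hypothetical, only the Hop field is modelled (the truncated request-occurrence field is not), and incrementing on receipt at each node is an assumed convention.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Interest:
    # Hypothetical minimal Interest: the content name plus the added Hop field
    name: str
    hop: int = 0  # number of nodes the Interest has passed through so far


def on_interest_received(interest: Interest) -> Interest:
    """Per-node handling of the Hop field: add 1 each time a node is passed.

    Returns a new Interest instead of mutating in place; incrementing on
    receipt is an assumption of this sketch.
    """
    return replace(interest, hop=interest.hop + 1)


def forward_along(interest: Interest, nodes: List[str]) -> Interest:
    # Simulate the Interest passing through each node in order
    for _node in nodes:
        interest = on_interest_received(interest)
    return interest
```

For example, `forward_along(Interest("/traffic/road1"), ["A", "B", "V_j1"])` yields an Interest whose `hop` is 3, so the data source can read from the Hop field how many nodes the request traversed.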
