An image fusion method based on a convolutional neural network

The method combines a convolutional neural network with image fusion technology. It applies to instruments, character and pattern recognition, computer components, and related fields, and addresses problems such as missing relevant information.

Examples

Embodiment 1

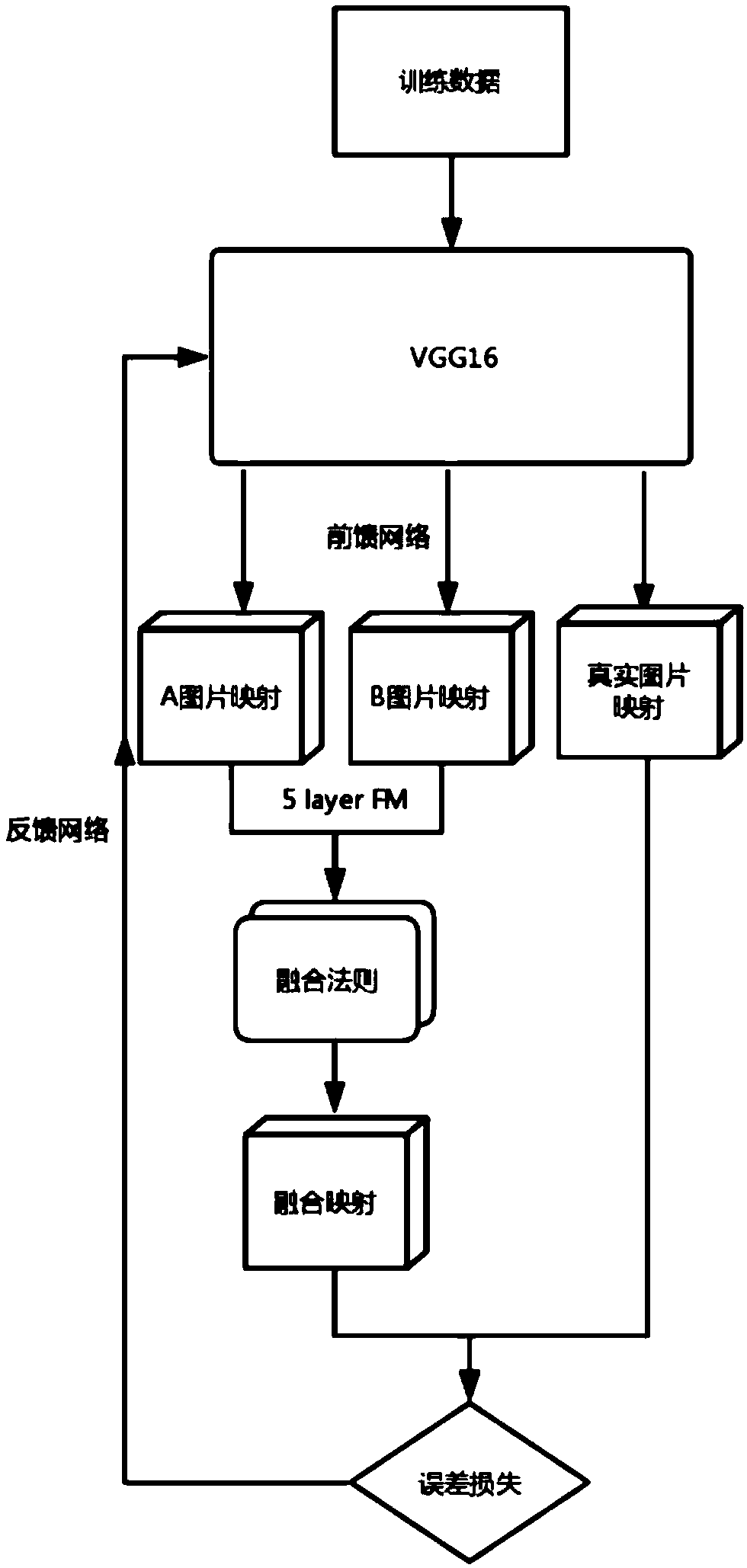

[0042] Embodiment 1: The method described in the summary of the invention is used to fuse the two multi-focus images shown in Figure 4(a) and (b). The 7 high-definition pictures shown in Figure 5 are cropped and blurred with MATLAB to obtain a set of 70 training pictures.
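The crop-and-blur step can be illustrated with a minimal Python sketch. The source uses MATLAB and does not state the blur type, the crop size, or how the 70 pictures are split; the Gaussian blur, the 8x8 crop, the sigma values, the synthetic checkerboard inputs, and the choice of ten blur levels per clear picture (7 x 10 = 70) are all assumptions made here for illustration.

```python
import math

def gaussian_kernel(sigma, radius):
    """Return a normalized 1-D Gaussian kernel of length 2*radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, w in enumerate(kernel):
                xx = min(max(x + k - radius, 0), width - 1)
                acc += w * row[xx]
            new_row.append(acc)
        out.append(new_row)
    return out

def transpose(image):
    """Swap rows and columns of a nested-list image."""
    return [list(col) for col in zip(*image)]

def gaussian_blur(image, sigma):
    """Separable Gaussian blur: filter the rows, then the columns."""
    radius = max(1, int(math.ceil(3 * sigma)))
    kernel = gaussian_kernel(sigma, radius)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))

def crop(image, top, left, size):
    """Cut a size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]

def make_blurred_set(clear_images, sigmas, top=0, left=0, size=8):
    """Crop each clear image and blur the crop once per sigma (assumed scheme)."""
    dataset = []
    for img in clear_images:
        patch = crop(img, top, left, size)
        for s in sigmas:
            dataset.append((gaussian_blur(patch, s), patch))
    return dataset

# Seven synthetic checkerboards stand in for the seven high-definition pictures.
clear_images = [[[float((x // (i + 1) + y // (i + 1)) % 2) for x in range(16)]
                 for y in range(16)] for i in range(7)]
sigmas = [0.5 + 0.25 * k for k in range(10)]  # ten assumed blur levels
training_set = make_blurred_set(clear_images, sigmas)
print(len(training_set))  # 70
```

Each entry pairs a blurred patch with the clear patch it came from, so the clear patch is available later as the reference picture.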

[0043] The 70 training pictures are converted into .h5 files and fed to the training model. The training set contains two sets of data: one consists of real clear pictures, and the other consists of ten blurred pictures derived from the clear pictures. The real clear picture serves as the label and is used to calculate the error. After several iterations, two sets of model weights are obtained and saved.
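How the labeled clear picture enters the error calculation can be sketched as follows. The source does not name the loss function, so mean squared error is an assumption here, and `identity` is a hypothetical stand-in for the network, not the patent's model.

```python
def mean_squared_error(prediction, label):
    """Average squared per-pixel difference between two equally sized images."""
    if len(prediction) != len(label) or any(
            len(p) != len(l) for p, l in zip(prediction, label)):
        raise ValueError("prediction and label must have the same shape")
    total = 0.0
    count = 0
    for pred_row, label_row in zip(prediction, label):
        for p, l in zip(pred_row, label_row):
            total += (p - l) ** 2
            count += 1
    return total / count

def epoch_error(pairs, model):
    """Mean error of `model` over (blurred, clear) pairs; clear is the label."""
    errors = [mean_squared_error(model(blurred), clear)
              for blurred, clear in pairs]
    return sum(errors) / len(errors)

# A tiny 2x2 example: the clear picture is the label for its blurred version.
clear = [[0.0, 1.0], [1.0, 0.0]]
blurred = [[0.25, 0.75], [0.75, 0.25]]
pairs = [(blurred, clear)]

def identity(image):
    """Hypothetical stand-in for the network: returns its input unchanged."""
    return image

print(epoch_error(pairs, identity))  # 0.0625
```

In a real training loop this error would drive the weight updates over several iterations; the sketch only shows where the label is used.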

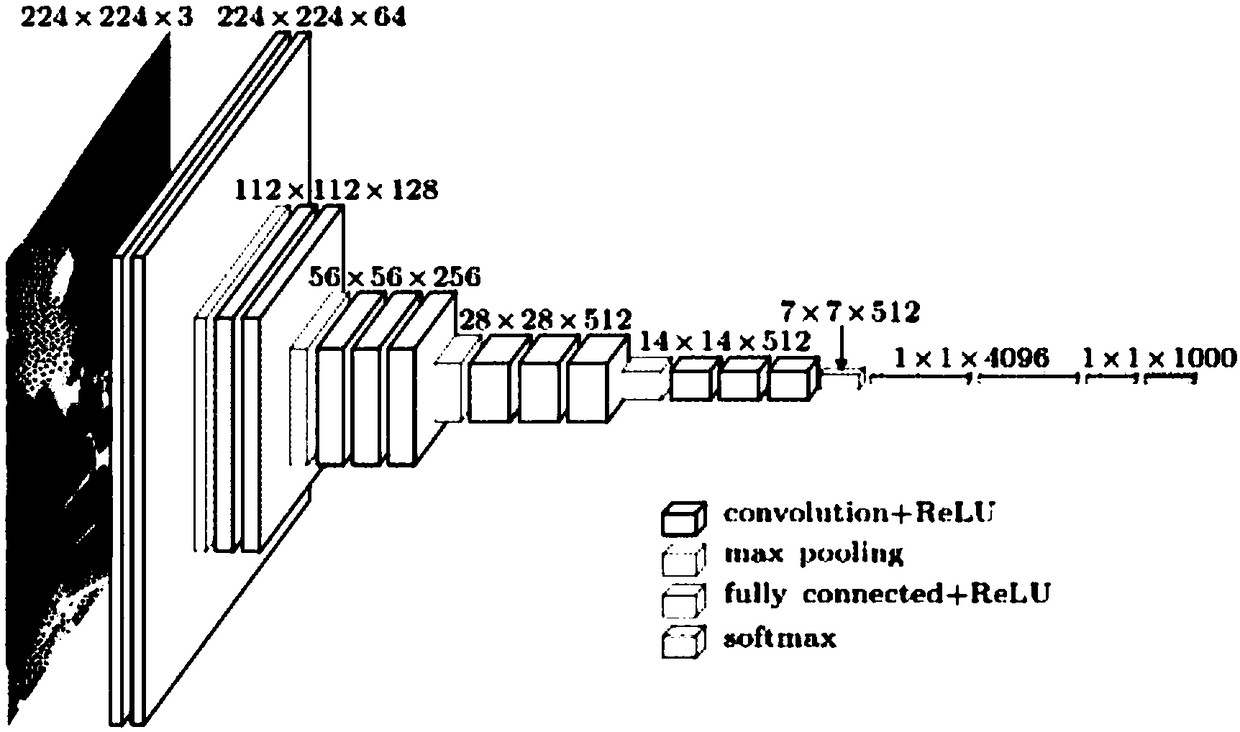

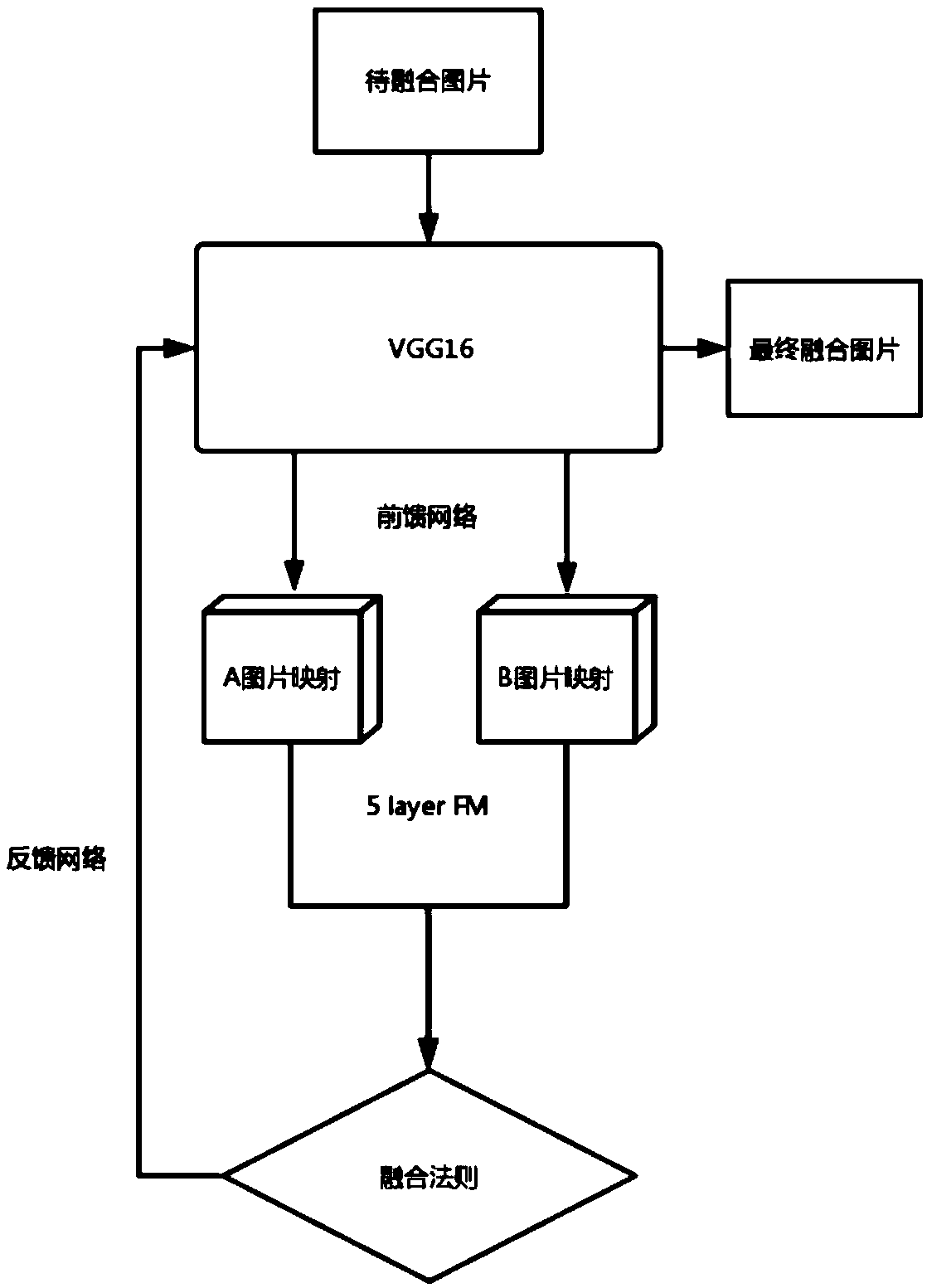

[0044] The weights of the VGG16 model are initialized by reading the two sets of weights generated during training. After the fused image passes through the network, five feature maps (corresponding to the five layers of VGG16) are obtained. Only the fusion rule is...

Embodiment 2

[0049] Embodiment 2: The method in the summary of the invention, with the specific values from Embodiment 1, is used to fuse the two medical images shown in Figure 6(a) and (b); one is a CT image and the other is an MRI image. The training data set is shown in Figure 7. The final fused image is shown in Figure 6(c), and its comparison with the prior art is shown in Table 2.

[0050] Table 2 Comparison between this method and the prior art on the fusion results of medical pictures

[0051] method

[0052] Table 2 shows that this method has clear advantages over conventional methods in fusing medical pictures, especially on the Qcv index, which indicates that analyzing pictures with neural networks is more in line with human understanding and cognition.
