A cross-camera pedestrian positioning method fused with a spatio-temporal model

A spatio-temporal model and cross-camera technology, applied in the field of pedestrian positioning, address the problems of inaccurate modeling results and poor real-time performance in pedestrian re-identification, yielding more complete and reliable models, higher fault tolerance, and higher accuracy.


Examples

Embodiment 1

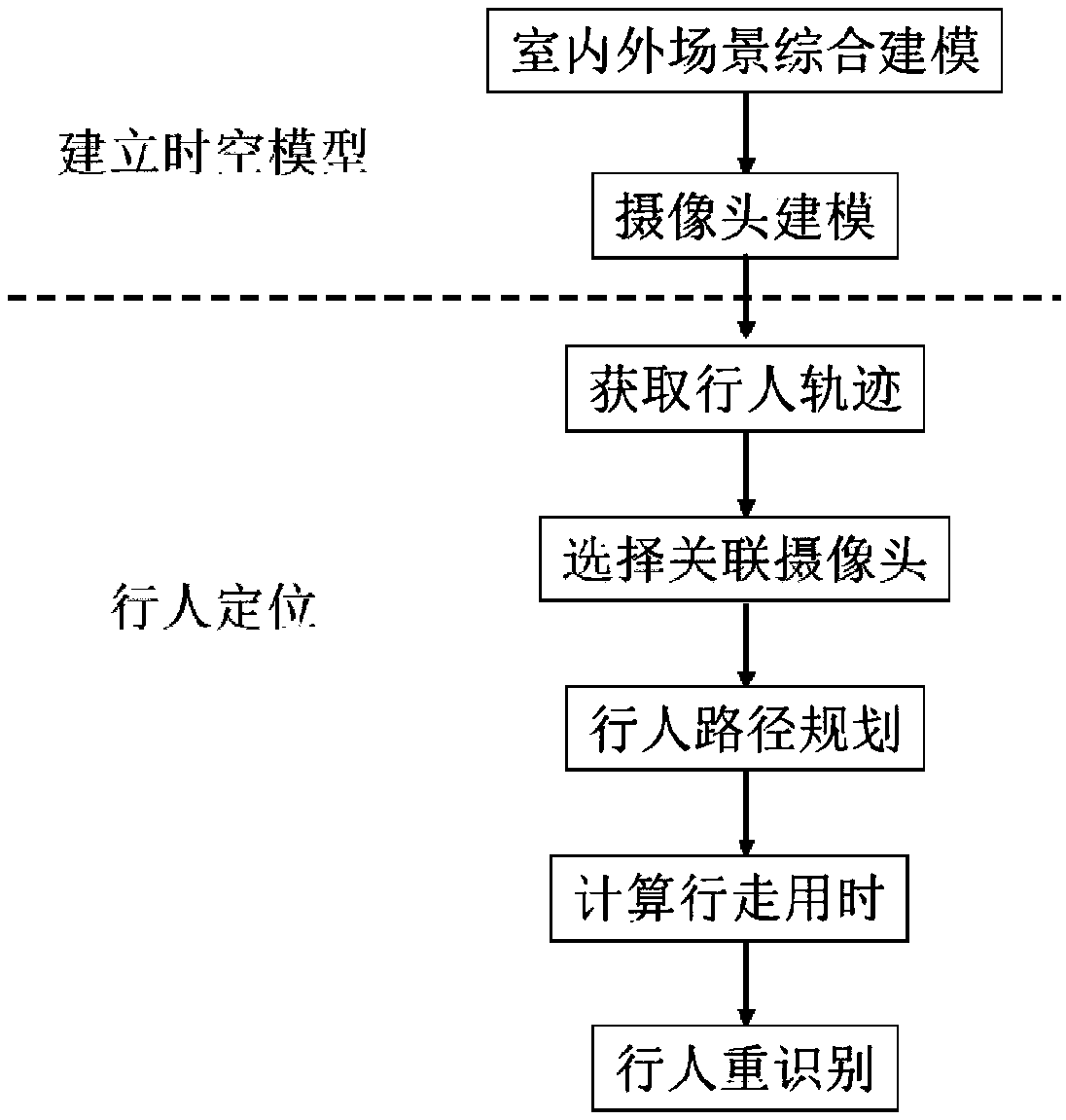

[0067] As shown in Figure 1, this embodiment provides a cross-camera pedestrian positioning method fused with a spatio-temporal model, including the following steps:

[0068] S1. Establish a spatio-temporal model: perform comprehensive indoor and outdoor scene modeling and in-scene camera modeling for the camera deployment area in the positioning space, so that the system has complete pedestrian perception and path planning capabilities;

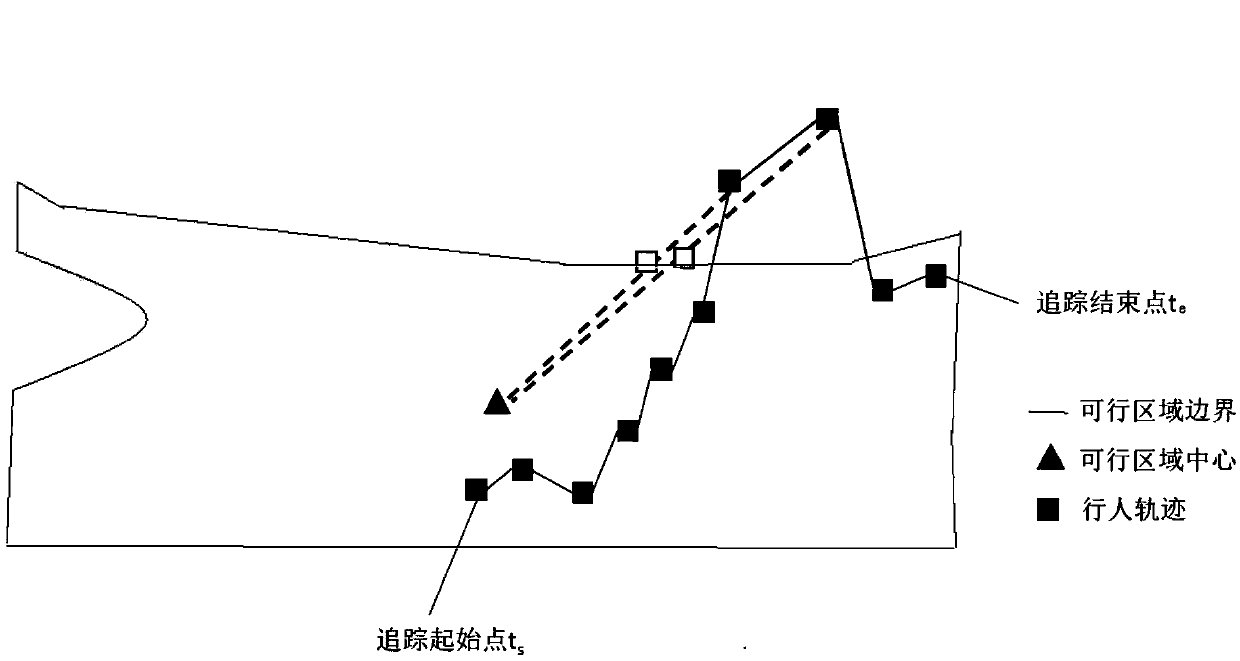

[0069] S2. Acquire the pedestrian trajectory: given that a pedestrian has been designated in the initial camera image, acquire the walking trajectory of the designated pedestrian in that image;

[0070] S3. Select the associated camera: after walking out of the initial camera image, the designated pedestrian appears in the image of a next camera, which is set as the associated camera; the associated camera is selected using different strategies for the different travel routes of the designated pedestrian; ...
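The selection strategies for S3 are elided in the text above, so the following is only a minimal sketch of one plausible approach, not the method claimed here. It assumes a hypothetical camera adjacency graph (walking distances in metres between camera views, derived from the spatio-temporal model of S1) and a hypothetical maximum walking speed, and it shortlists cameras the designated pedestrian could have reached in the elapsed time. The names `walking_distances`, `candidate_associated_cameras`, and `max_speed_mps` are illustrative and do not come from the source.

```python
import heapq
from typing import Dict, List

# Hypothetical graph: camera id -> {neighbouring camera id: walking distance in metres}
Graph = Dict[str, Dict[str, float]]


def walking_distances(graph: Graph, start: str) -> Dict[str, float]:
    """Shortest walking distance from `start` to every reachable camera (Dijkstra)."""
    dist = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, weight in graph.get(node, {}).items():
            nd = d + weight
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                heapq.heappush(heap, (nd, nxt))
    return dist


def candidate_associated_cameras(
    graph: Graph,
    initial: str,
    elapsed_s: float,
    max_speed_mps: float = 2.0,  # assumed upper bound on walking speed
) -> List[str]:
    """Cameras reachable from `initial` within the elapsed time, nearest first."""
    reach = elapsed_s * max_speed_mps
    dist = walking_distances(graph, initial)
    reachable = [(d, cam) for cam, d in dist.items() if cam != initial and d <= reach]
    return [cam for _, cam in sorted(reachable)]


# Toy layout with three cameras; distances are made up for illustration.
demo_graph: Graph = {
    "cam_A": {"cam_B": 10.0, "cam_C": 50.0},
    "cam_B": {"cam_A": 10.0, "cam_C": 15.0},
    "cam_C": {"cam_A": 50.0, "cam_B": 15.0},
}
shortlist = candidate_associated_cameras(demo_graph, "cam_A", elapsed_s=15.0)
```

Restricting re-identification to such a shortlist is one way a spatio-temporal model could reduce the number of camera feeds that must be searched; the actual route-dependent strategies may differ.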

Embodiment 2

[0075] This embodiment is a further optimization of Embodiment 1. Specifically, the comprehensive indoor and outdoor scene modeling in S1 includes outdoor scene modeling and indoor scene modeling, which together form a complete model of the building's interior and exterior. This model provides the basic information for subsequent accurate pedestrian tracking, and it contains complete space-time information to provide path planning capabilities. In particular:

[0076] Outdoor scene modeling: use a general map service to extract the outdoor map model of the positioning space, including the GPS coordinates of each point in the map and the block types and parameters;

[0077] Indoor scene modeling: use BIM modeling tools to build a BIM model of the building interior. The modeled objects include beams, columns, panels, walls, stairs, elevators, doors, and so on;
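As a rough illustration of how the outdoor and indoor parts of the scene model might be held together in one structure, the sketch below uses plain Python dataclasses. All class and field names (`OutdoorPoint`, `IndoorElement`, `SceneModel`, `floor`, `passages`) are hypothetical; the source specifies only that outdoor points carry GPS coordinates, a block type, and parameters, and that indoor BIM objects include beams, columns, panels, walls, stairs, elevators, and doors. Treating doors, stairs, and elevators as the elements relevant to path planning is likewise an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class OutdoorPoint:
    """One point of the outdoor map model extracted from a map service."""
    lat: float                      # GPS latitude in degrees
    lon: float                      # GPS longitude in degrees
    block_type: str                 # e.g. "road", "plaza" (illustrative values)
    params: Dict[str, float] = field(default_factory=dict)


@dataclass
class IndoorElement:
    """One object of the indoor BIM model."""
    element_id: str
    kind: str                       # e.g. "beam", "column", "panel", "wall", "stairs", "elevator", "door"
    floor: int                      # hypothetical field: storey the element belongs to


@dataclass
class SceneModel:
    """Combined indoor and outdoor scene model (sketch only)."""
    outdoor: List[OutdoorPoint] = field(default_factory=list)
    indoor: List[IndoorElement] = field(default_factory=list)

    def passages(self) -> List[IndoorElement]:
        """Indoor elements a pedestrian can move through (assumed set)."""
        return [e for e in self.indoor if e.kind in {"door", "stairs", "elevator"}]


# Toy model with made-up coordinates and identifiers.
scene = SceneModel()
scene.outdoor.append(OutdoorPoint(lat=30.0, lon=120.0, block_type="road"))
scene.indoor.append(IndoorElement("w-1", "wall", floor=1))
scene.indoor.append(IndoorElement("d-1", "door", floor=1))
scene.indoor.append(IndoorElement("s-1", "stairs", floor=1))
passage_ids = [e.element_id for e in scene.passages()]
```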

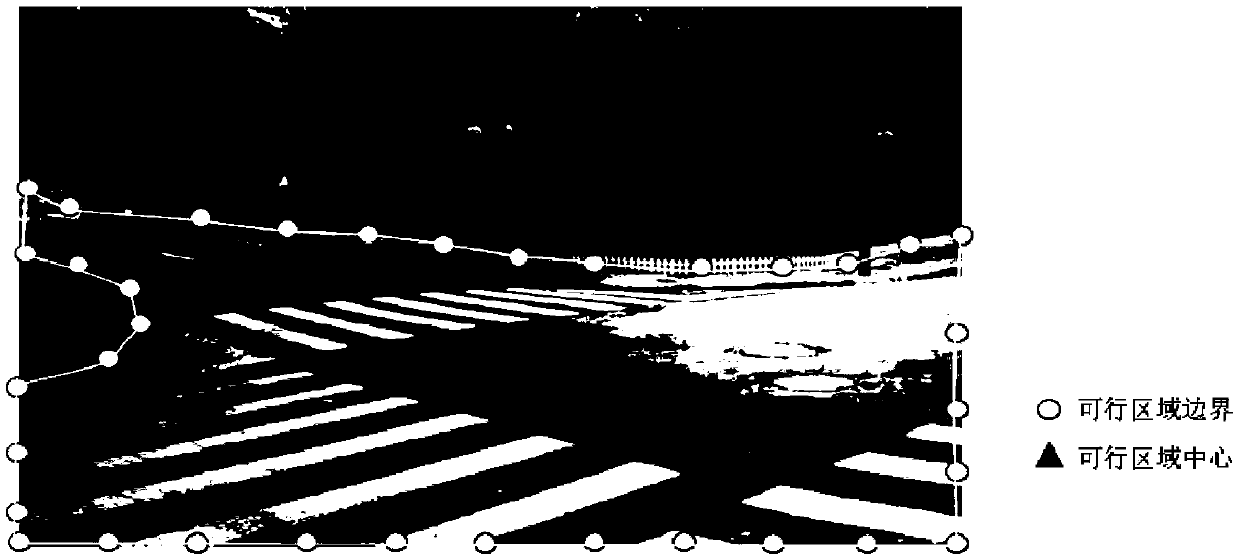

[0078] As shown in Figure 2, the in-scene camera modeling in S1 includes describing camera attributes and visual area mapping, where...
