An unmanned driving path selection method based on multi-sensor cooperation

The invention relates to unmanned driving and multi-sensor technology, in the field of neural learning methods, instruments, and biological neural network models. It addresses the problems of low information recognition accuracy and difficult resource allocation, and is intended to improve recognition accuracy, reduce excessive computation, and achieve high-precision path planning.



Examples

Embodiment 1

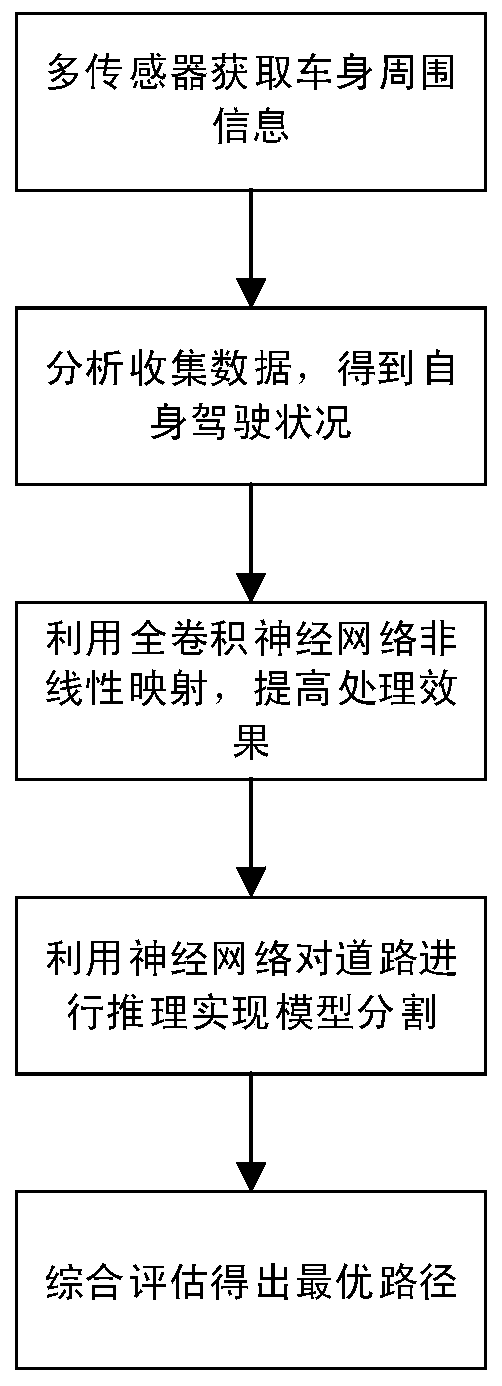

[0036] A method for unmanned driving path selection based on multi-sensor cooperation, see Figure 1 and Figure 2. The method includes the following steps:

[0037] 101: Arrange multiple sensors around the vehicle body to achieve full coverage of information around the vehicle body and obtain all basic information around the vehicle body;

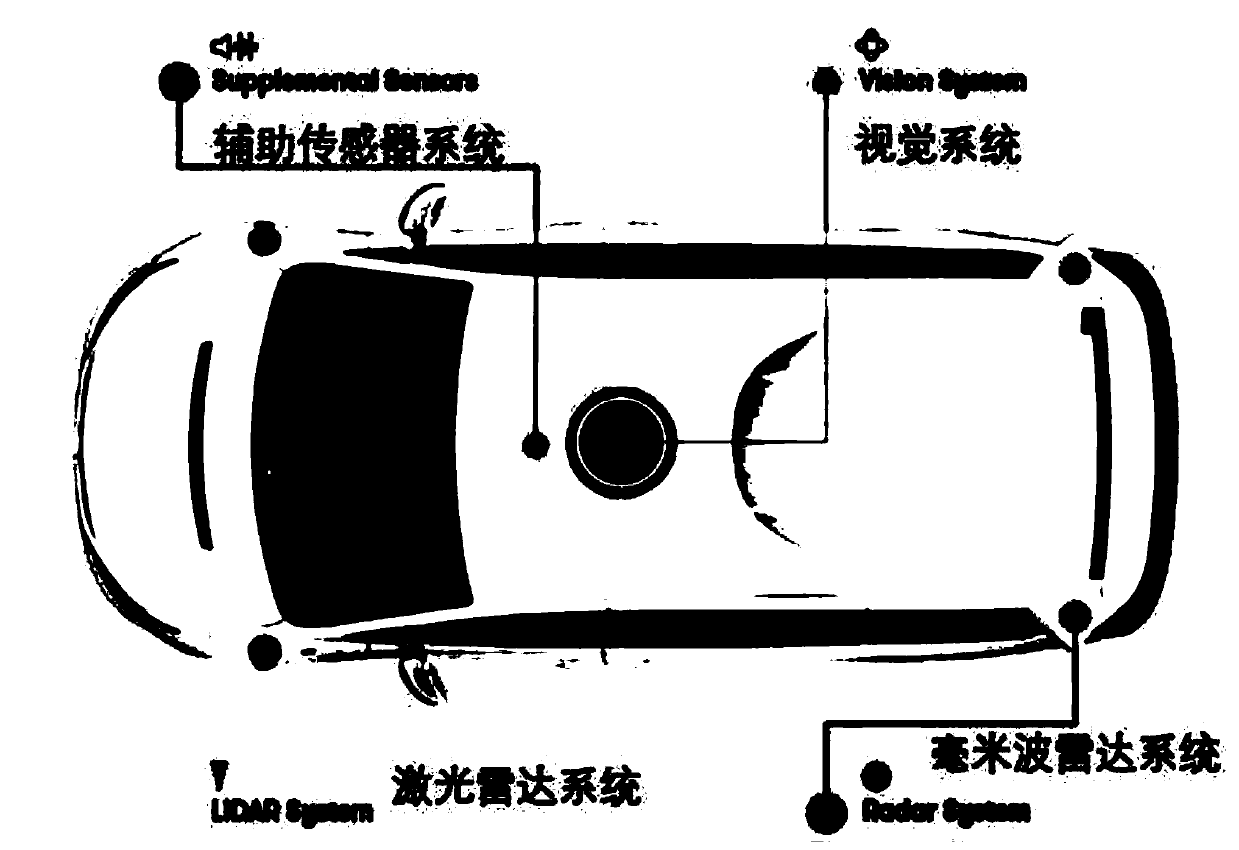

[0038] Existing unmanned driving systems generally use perception sensors and high-sensitivity cameras. This embodiment of the invention uses multiple cooperating sensors and high-sensitivity cameras, and adjusts or replaces the sensor distribution according to how important each position on the vehicle body is for driving recognition. The sensor layout is as follows: the sensors must ensure complete 360-degree coverage, and the front, rear, left, and right detection distances are set according to the importance of each part. Usually, the detection distance of the front is g...
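The 360-degree coverage requirement can be checked mechanically once a layout is written down. The following is a minimal sketch, not the patent's procedure: the `Sensor` fields, the helper names, and every bearing, field-of-view, and range value in `LAYOUT` are hypothetical placeholders chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    # All fields are illustrative assumptions, not values from the patent.
    name: str
    kind: str           # e.g. "lidar", "radar", "camera"
    bearing_deg: float  # centre of the field of view, 0 = straight ahead
    fov_deg: float      # total angular width of the field of view
    range_m: float      # detection distance


def covers(sensor, angle_deg):
    """True if the sensor's field of view contains the given bearing."""
    # Signed angular difference wrapped into [-180, 180).
    diff = (angle_deg - sensor.bearing_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= sensor.fov_deg / 2.0


def uncovered_angles(sensors, step_deg=1):
    """Bearings (sampled every step_deg degrees) that no sensor covers."""
    return [a for a in range(0, 360, step_deg)
            if not any(covers(s, a) for s in sensors)]


def max_range_at(sensors, angle_deg):
    """Longest detection distance available at a bearing (0.0 if none)."""
    return max((s.range_m for s in sensors if covers(s, angle_deg)),
               default=0.0)


# Hypothetical layout: placeholder numbers only.
LAYOUT = [
    Sensor("front_lidar", "lidar", 0.0, 120.0, 150.0),
    Sensor("rear_lidar", "lidar", 180.0, 120.0, 80.0),
    Sensor("left_radar", "radar", 90.0, 90.0, 40.0),
    Sensor("right_radar", "radar", 270.0, 90.0, 40.0),
]

if __name__ == "__main__":
    print("uncovered bearings:", uncovered_angles(LAYOUT))
    print("range straight ahead:", max_range_at(LAYOUT, 0))
```

Sampling bearings at a fixed step is a simplification; a gap narrower than `step_deg` would be missed, so a real check would use a finer step or interval arithmetic.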

Embodiment 2

[0050] The scheme of Embodiment 1 is further described below with reference to Figures 1 to 5 and a specific example:

[0051] 201: Specific arrangement of sensors;

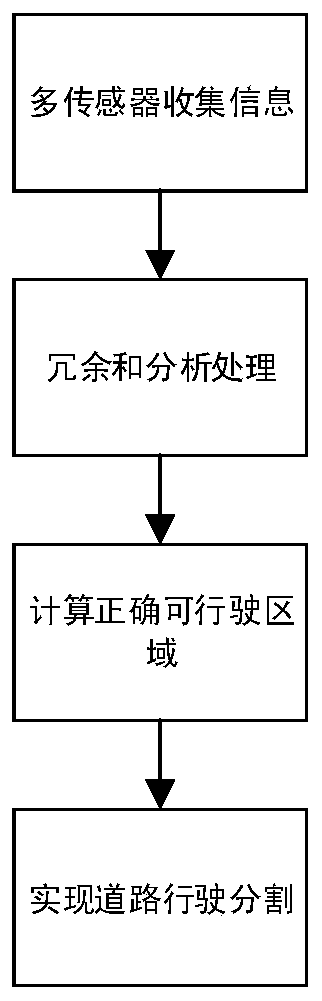

[0052] In practice, the layout usually has to balance coverage and redundancy. As shown in Figure 3, laser radars (lidars) are arranged at the front, the rear, the roof, and beside both headlights of the car; millimeter-wave radar systems are arranged near the front and rear headlights and the fuel tank cap; and a high-sensitivity, high-speed camera that captures 360-degree visual information in real time is installed on the roof together with sound collection aids. Because a single sensor can be inaccurate, each part of the vehicle needs to be covered by at least two kinds of sensors.
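The redundancy rule (each part covered by more than one kind of sensor) is easy to express as a check over a layout table. This is a rough sketch under stated assumptions: the zone names, the zone-to-sensor mapping, and the function `under_covered` are all hypothetical, and the threshold of two kinds is a parameter because the translated wording of the requirement is ambiguous.

```python
REQUIRED_KINDS = 2  # assumed threshold; adjust if the requirement is stricter


def under_covered(zone_sensors, required=REQUIRED_KINDS):
    """Return zones that have fewer than `required` distinct sensor kinds.

    zone_sensors maps a zone name to the list of sensor kinds covering it.
    Duplicate entries of the same kind count once.
    """
    return {
        zone: sorted(set(kinds))
        for zone, kinds in zone_sensors.items()
        if len(set(kinds)) < required
    }


# Hypothetical mapping, loosely following the arrangement described above.
layout = {
    "front": ["lidar", "millimeter_wave_radar", "camera"],
    "rear": ["lidar", "millimeter_wave_radar", "camera"],
    "left": ["lidar", "camera"],
    "right": ["lidar", "camera"],
}

if __name__ == "__main__":
    print("zones lacking redundancy:", under_covered(layout))
```

Counting distinct kinds rather than sensor instances reflects the stated motivation: two sensors of the same kind share the same failure modes, so they do not compensate for each other's inaccuracy.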

[0053] 202: Classify and divide the information obtained by the sensors: the road information around the car body, the distance to targets around the car body, and the driving of the car itself...

Embodiment 3

[0068] The feasibility of the schemes in Embodiments 1 and 2 is verified below with specific examples:

[0069] This experiment uses the FCN (Fully Convolutional Network) model and compares it with the CNN (Convolutional Neural Network) model in four scenarios: urban interior, urban roads, field roads, and rural roads. The experimental results show that the method has certain advantages: recognition accuracy is improved, and processing time is also improved to some extent.

[0070] The method also uses the Adam algorithm to optimize the learning rate: the entire network is updated simultaneously while the learning rate is increased by a certain amount during training, the curve of loss against learning rate is plotted, and the optimal learning rate is obtained from it. In the backpropagation parameter-update phase, the Adam algorithm is used with β₁ and β₂ set to 0.9 and 0.999, respectively. Under this algo...
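To make the optimizer settings concrete, here is a minimal sketch of the standard Adam update with β₁ = 0.9 and β₂ = 0.999, followed by a small learning-rate sweep that compares final loss. It is not the patent's training code: the scalar quadratic loss, the candidate learning rates, the step count, and `eps = 1e-8` are assumptions made for illustration.

```python
import math


def adam_minimize(grad, x0, lr, steps=200, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimise a scalar function with the standard Adam update rule."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1.0 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1.0 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1.0 - beta1 ** t)           # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy stand-in for a network loss: a quadratic with its minimum at x = 3.
def loss(x):
    return (x - 3.0) ** 2


def grad(x):
    return 2.0 * (x - 3.0)


def sweep(rates, x0=0.0, steps=200):
    """Final loss reached for each candidate learning rate."""
    return {lr: loss(adam_minimize(grad, x0, lr, steps)) for lr in rates}


results = sweep([0.001, 0.01, 0.1])  # hypothetical candidate rates
best_lr = min(results, key=results.get)

if __name__ == "__main__":
    for lr, final_loss in results.items():
        print(f"lr={lr}: final loss {final_loss:.4f}")
    print("best learning rate in this sweep:", best_lr)
```

Plotting `results` against the learning rate gives a toy version of the loss curve the paragraph describes; on a real network each point would come from a training run rather than a closed-form gradient.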
